Using GlusterFS as a StorageClass in Kubernetes, then creating a PVC and an application

1. Create the StorageClass

    vim two-replica-glusterfs-sc.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: two-replica-glusterfs-sc
    provisioner: kubernetes.io/glusterfs
    reclaimPolicy: Retain
    parameters:
      gidMax: "50000"
      gidMin: "40000"
      resturl: http://10.142.21.23:30088
      volumetype: replicate:2
      restauthenabled: "true"
      restuser: "admin"
      restuserkey: "123456"
      # secretNamespace: "default"
      # secretName: "heketi-secret"

    kubectl create -f two-replica-glusterfs-sc.yaml
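The commented-out secretNamespace and secretName parameters point at an alternative to putting the Heketi admin key in the StorageClass in plain text: storing it in a Secret. The following is a minimal sketch of how such a Secret manifest could be generated. It assumes the name heketi-secret and the namespace default (both taken from the commented lines), and relies on the in-tree glusterfs provisioner expecting a Secret of type kubernetes.io/glusterfs with the password stored under the data key named key. The helper name heketi_secret_manifest is hypothetical.

```python
import base64
import json


def heketi_secret_manifest(name: str, namespace: str, admin_key: str) -> dict:
    """Build a Secret manifest that holds the Heketi admin key.

    Values under `data` in a Secret must be base64-encoded.
    """
    encoded = base64.b64encode(admin_key.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        # Secret type expected by the kubernetes.io/glusterfs provisioner.
        "type": "kubernetes.io/glusterfs",
        "data": {"key": encoded},
    }


if __name__ == "__main__":
    # Name and namespace come from the commented-out StorageClass parameters;
    # the key is the example password used throughout this post.
    manifest = heketi_secret_manifest("heketi-secret", "default", "123456")
    print(json.dumps(manifest, indent=2))
```

kubectl accepts JSON manifests, so the printed output can be saved to a file and created with kubectl create -f. With the Secret in place, the two commented parameters would be enabled and restuserkey removed from the StorageClass.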

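Notes:

- The StorageClass defined above provisions GlusterFS volumes with two replicas.

- The replica count in volumetype (replicate:N) must be greater than 1; otherwise creating the PVC fails with: [heketi] ERROR 2017/11/14 21:35:20 /src/github.com/heketi/heketi/apps/glusterfs/app_volume.go:154: Failed to create volume: replica count should be greater than 1

- This StorageClass explicitly sets reclaimPolicy to Retain (the default is Delete), so after the PVC is deleted, the PV and the backend volume and bricks (LVM) are not deleted.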
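The replica-count rule in the notes above can be checked before the StorageClass is applied, rather than discovered from the Heketi log when a PVC fails. This is a minimal sketch of such a pre-flight check; the function name check_replicate_volumetype is hypothetical, and it only handles the replicate:N form used in this post (other volumetype forms are rejected rather than validated).

```python
def check_replicate_volumetype(volumetype: str) -> int:
    """Return the replica count of a 'replicate:N' volumetype.

    Raises ValueError if the string is not of that form or if N <= 1,
    mirroring the Heketi error quoted in the notes.
    """
    kind, sep, count = volumetype.partition(":")
    if kind != "replicate" or not sep:
        raise ValueError(f"this sketch only handles replicate:N, got {volumetype!r}")
    replicas = int(count)  # raises ValueError for a non-numeric count
    if replicas <= 1:
        raise ValueError("replica count should be greater than 1")
    return replicas


if __name__ == "__main__":
    print(check_replicate_volumetype("replicate:2"))
```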

2. Create the PVC

    vim kafka-pvc.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: kafka-pvc
      namespace: kube-system
    spec:
      storageClassName: two-replica-glusterfs-sc
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 20Gi

    kubectl create -f kafka-pvc.yaml
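Dynamic provisioning happens asynchronously, so a claim may briefly report Pending before it becomes Bound. The sketch below shows one way to read the STATUS column from the text output of kubectl get pvc; the function name pvc_status is hypothetical, and the sample table is the output reported in this post. Because STATUS is the second column, splitting on whitespace still works for a Pending claim whose VOLUME and CAPACITY columns are empty.

```python
from typing import Optional

# Sample output of `kubectl get pvc -n kube-system`, as reported in this post.
SAMPLE = """\
NAME        STATUS    VOLUME                                     CAPACITY   ACCESSMODES   STORAGECLASS               AGE
kafka-pvc   Bound     pvc-9749a71a-c943-11e7-8ccf-00505694b7e8   20Gi       RWX           two-replica-glusterfs-sc   3m
"""


def pvc_status(table: str, claim: str) -> Optional[str]:
    """Return the STATUS of the named claim, or None if it is not listed."""
    lines = table.strip().splitlines()
    status_col = lines[0].split().index("STATUS")
    for line in lines[1:]:
        fields = line.split()
        if fields and fields[0] == claim:
            return fields[status_col]
    return None


if __name__ == "__main__":
    print(pvc_status(SAMPLE, "kafka-pvc"))
```

In a script, the table would come from running the kubectl command (for example through subprocess) and the check would be repeated until the status is Bound.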

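Results in detail

Once the claim has been created, it is listed as Bound:

    kubectl get pvc -n kube-system
    NAME        STATUS    VOLUME                                     CAPACITY   ACCESSMODES   STORAGECLASS               AGE
    kafka-pvc   Bound     pvc-9749a71a-c943-11e7-8ccf-00505694b7e8   20Gi       RWX           two-replica-glusterfs-sc   3m

A PV has also been created automatically:

    kubectl get pv
    NAME                                       CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS    CLAIM                  STORAGECLASS   REASON    AGE
    pvc-1c45d9a2-c76e-11e7-892e-00505694eb6a   1Gi        RWX           Delete          Bound     default/gluster-pvc1   gfs                      17m

(Note that this sample output shows a different claim, default/gluster-pvc1 in the gfs StorageClass, rather than kafka-pvc.)

Run the following command to view the Heketi topology: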

docker exec k8s_heketi_heketi-1521708765-zkq1t_kube-system_89fc7fec-c938-11e7-ac9a-005056949f63_0 heketi-cli --server http://10.142.21.23:30088 --user admin --secret 123456 topology info

或:

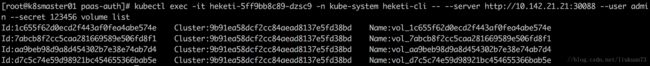

kubectl exec -it heketi-5ff9bb8c89-dzsc9 -n kube-system heketi-cli -- --server http://10.142.21.21:30088 --user admin --secret 123456 topology info可以看到在两个gluster节点的vgroup上各创建了一块lvm卷,它俩构成了逻辑卷vol_11ba5a6b998d8d9542ab8efab251e499:

10.142.21.24:

/var/lib/heketi/mounts/vg_d5adba4823cb6d0a1f34edb220c003b1/brick_811947c009213fbea13f95318c594f27/brick

10.142.21.27:

/var/lib/heketi/mounts/vg_570840088df1618dbdde4a30bc7c7e82/brick_6377153ec8bc5363a62a7d28daf8ed0e/brick3.创建应用

    vim nginx-use-glusterfs.yaml

    # Note: extensions/v1beta1 Deployments were removed in Kubernetes 1.16;
    # on newer clusters use apps/v1 and add a matching spec.selector.
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: nginx-use-pvc
      namespace: kube-system
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - image: nginx:1.11.4-alpine
              imagePullPolicy: IfNotPresent
              name: nginx-use-pvc
              volumeMounts:
                - mountPath: /test
                  name: my-pvc
          volumes:
            - name: my-pvc
              persistentVolumeClaim:
                claimName: kafka-pvc
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-use-pvc
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - name: nginx-use-pvc
          port: 80
          targetPort: 80
          nodePort: 30080
      selector:
        app: nginx

    kubectl create -f nginx-use-glusterfs.yaml

Results in detail:

On the host node where the pod is running, df -h shows the volume mounted like this:

    10.142.21.24:vol_11ba5a6b998d8d9542ab8efab251e499 20957184 33920 20923264 1% /var/lib/kubelet/pods/842d09c1-c947-11e7-ac9a-005056949f63/volumes/kubernetes.io~glusterfs/pvc-9749a71a-c943-11e7-8ccf-00505694b7e8

Inside the pod's container, the mount looks like this:

    10.142.21.24:vol_11ba5a6b998d8d9542ab8efab251e499
    20957184 33920 20923264 0% /test

4. Delete

4.1 When reclaimPolicy is Delete

When the StorageClass's reclaimPolicy is Delete, deleting the application and then the PVC also deletes the corresponding PV, volume, and LVM bricks.

4.2 When reclaimPolicy is Retain

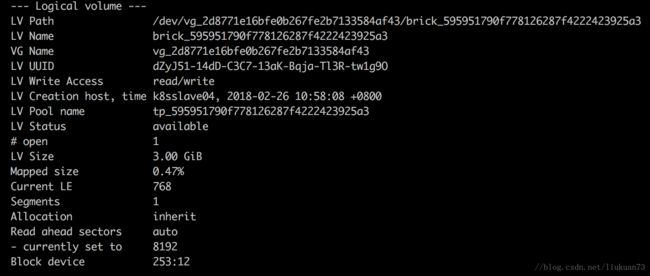

When the StorageClass's reclaimPolicy is Retain, deleting the application and then the PVC does not delete the corresponding PV, volume, or LVM bricks.

kubectl get pv shows that the PV still exists, with its status changed to Released.

heketi-cli topology info shows that the volume and its bricks still exist.

heketi-cli volume list also shows that the volume still exists:

    kubectl exec -it heketi-5ff9bb8c89-dzsc9 -n kube-system -- heketi-cli --server http://10.142.21.21:30088 --user admin --secret 123456 volume list

Running lvdisplay on each machine shows that the LVM volumes still exist as well.

To remove everything completely, first delete the PV with kubectl delete pv, and then delete the volume manually with heketi-cli volume delete.

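The manual cleanup for the Retain case can be sketched as a small helper that prints the two commands in order. This is an illustration under stated assumptions, not a definitive procedure: it assumes Heketi's default naming, in which the GlusterFS volume name is vol_ followed by the Heketi volume ID (confirm the ID with heketi-cli volume list before deleting anything), and it reuses the PV name, pod name, server URL, and credentials that appear earlier in this post. The function names are hypothetical.

```python
import shlex
from typing import List


def heketi_volume_id(volume_name: str) -> str:
    """Derive the Heketi volume ID from a name of the form vol_<id>."""
    prefix = "vol_"
    if not volume_name.startswith(prefix):
        raise ValueError(f"unexpected volume name: {volume_name!r}")
    return volume_name[len(prefix):]


def cleanup_commands(pv_name: str, volume_name: str, heketi_pod: str,
                     server: str, user: str, secret: str) -> List[str]:
    """Return the commands that remove a retained PV and its backend volume."""
    heketi = ["kubectl", "exec", heketi_pod, "-n", "kube-system", "--",
              "heketi-cli", "--server", server, "--user", user, "--secret", secret]
    return [
        # Step 1: remove the Released PV object from Kubernetes.
        shlex.join(["kubectl", "delete", "pv", pv_name]),
        # Step 2: ask Heketi to delete the volume and its bricks.
        shlex.join(heketi + ["volume", "delete", heketi_volume_id(volume_name)]),
    ]


if __name__ == "__main__":
    for cmd in cleanup_commands(
        pv_name="pvc-9749a71a-c943-11e7-8ccf-00505694b7e8",
        volume_name="vol_11ba5a6b998d8d9542ab8efab251e499",
        heketi_pod="heketi-5ff9bb8c89-dzsc9",
        server="http://10.142.21.21:30088",
        user="admin",
        secret="123456",
    ):
        print(cmd)
```

The helper only prints the commands, so they can be reviewed before being run by hand; deleting the Heketi volume destroys the data on its bricks.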