PyTorch Study Notes (2): Linear Regression, Logistic Regression, Softmax Classifier

Original article: http://bit.ly/PyTorchZeroAll

I. Linear Regression (in the PyTorch way)

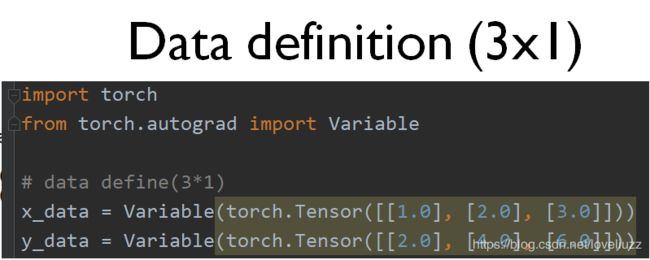

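```python
import torch
from torch.autograd import Variable  # since PyTorch 0.4, Variable(t) simply returns a tensor

# training data: 3 samples with 1 feature each (3x1)
x_data = Variable(torch.Tensor([[1.0], [2.0], [3.0]]))
y_data = Variable(torch.Tensor([[2.0], [4.0], [6.0]]))
```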

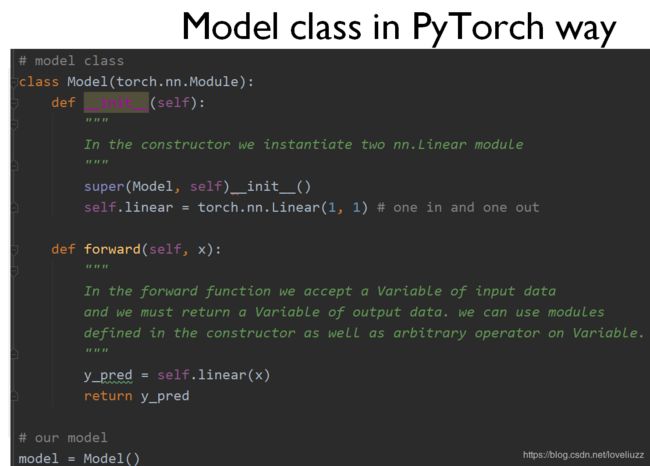

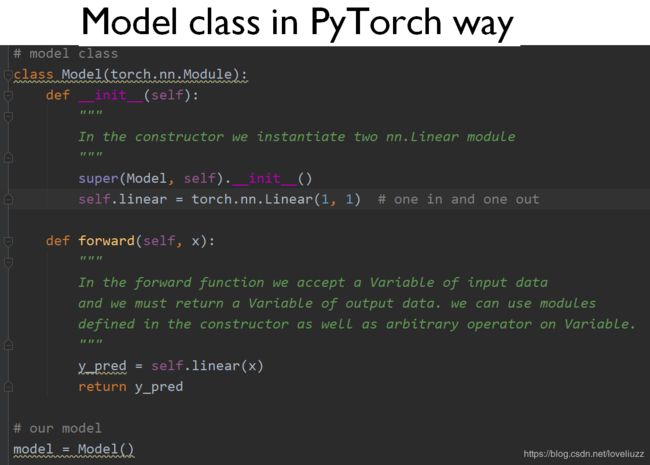

```python
# model class
class Model(torch.nn.Module):

    def __init__(self):
        """
        In the constructor we instantiate one nn.Linear module.
        """
        super(Model, self).__init__()
        self.linear = torch.nn.Linear(1, 1)  # one input feature, one output

    def forward(self, x):
        """
        In the forward function we accept a Variable of input data and we must
        return a Variable of output data. We can use modules defined in the
        constructor as well as arbitrary operators on Variables.
        """
        y_pred = self.linear(x)
        return y_pred


# our model
model = Model()
```

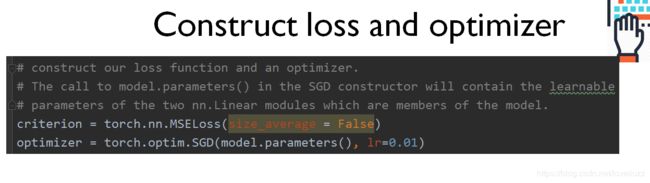

```python
# Construct our loss function and an optimizer.
# The call to model.parameters() in the SGD constructor collects the learnable
# parameters (weight and bias) of the nn.Linear module that is a member of the model.
# reduction="sum" replaces the deprecated size_average=False.
criterion = torch.nn.MSELoss(reduction="sum")
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
```
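The `reduction` argument decides whether `MSELoss` adds up or averages the per-sample squared errors. Below is a minimal plain-Python sketch of the two modes on the three training targets; the `mse` helper is hypothetical and exists only for illustration, no PyTorch is needed to run it.

```python
def mse(preds, targets, reduction="mean"):
    """Squared-error loss with a PyTorch-style reduction argument (illustrative only)."""
    squared = [(p - t) ** 2 for p, t in zip(preds, targets)]
    if reduction == "sum":
        return sum(squared)
    if reduction == "mean":
        return sum(squared) / len(squared)
    raise ValueError(f"unsupported reduction: {reduction}")


preds = [1.0, 2.0, 3.0]    # hypothetical model outputs
targets = [2.0, 4.0, 6.0]  # the y_data values used above
print(mse(preds, targets, reduction="sum"))   # 1 + 4 + 9 = 14.0
print(mse(preds, targets, reduction="mean"))  # 14 / 3
```

Because the summed loss is N times the mean loss, its gradients are N times larger as well, so plain SGD with `lr=0.01` and `reduction="sum"` takes exactly the same steps as `lr=0.03` with `reduction="mean"` on this 3-sample batch.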

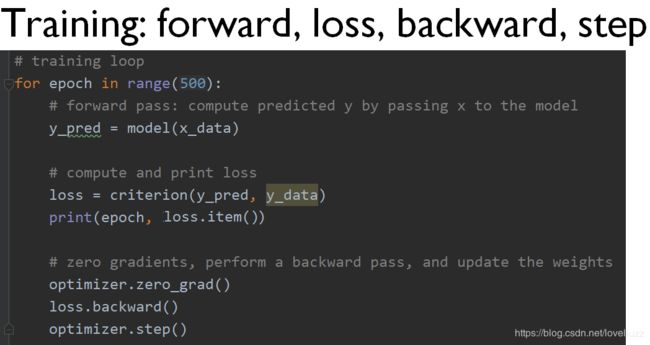

```python
# training loop
for epoch in range(500):
    # forward pass: compute predicted y by passing x to the model
    y_pred = model(x_data)

    # compute and print loss
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    # zero gradients, perform a backward pass, and update the weights
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# after training: test
hour_val = Variable(torch.Tensor([[4.0]]))
print("predict (after training)", 4, model(hour_val).item())
```
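To see what `nn.Linear(1, 1)`, `MSELoss(reduction="sum")` and `SGD(lr=0.01)` do together, here is a hedged plain-Python sketch of the same loop for y = w * x + b. Assumptions: w and b start at 0 (PyTorch actually initializes `nn.Linear` randomly), and the gradients are derived by hand instead of coming from `loss.backward()`.

```python
# Plain-Python version of nn.Linear(1, 1) + MSELoss(reduction="sum") + SGD(lr=0.01).
# Assumption: w and b start at 0 (PyTorch initializes them randomly).
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]


def train(epochs=500, lr=0.01):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # forward pass: residuals of y_pred = w * x + b
        residuals = [w * x + b - y for x, y in zip(x_data, y_data)]
        # hand-derived gradients of sum(residual ** 2), i.e. what loss.backward() computes
        grad_w = sum(2.0 * r * x for r, x in zip(residuals, x_data))
        grad_b = sum(2.0 * r for r in residuals)
        # optimizer.step() for plain SGD
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train()
print("w =", w, "b =", b)
print("predict (after training)", 4, w * 4.0 + b)
```

Since the data follow y = 2x exactly, w should approach 2 and b should approach 0, so the prediction for x = 4 lands close to 8.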

```python
import torch
import torch.nn as nn
from torch import optim
from torch.autograd import Variable
import torch.nn.functional as F
import torchvision
import torchvision.transforms as transforms

#############################################################################
# 0. data loader
#############################################################################
transform = transforms.Compose(
    [transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]
)

train_set = torchvision.datasets.CIFAR10("./root", train=True, download=True, transform=transform)
train_loader = torch.utils.data.DataLoader(train_set, batch_size=4, shuffle=True, num_workers=0)

test_set = torchvision.datasets.CIFAR10("./root", train=False, download=True, transform=transform)
test_loader = torch.utils.data.DataLoader(test_set, batch_size=4, shuffle=False, num_workers=0)

classes = ("plane", "car", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck")
```
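`transforms.Normalize(mean, std)` applies (x - mean) / std to every value of a channel. A small plain-Python sketch on one channel (the `normalize` helper is hypothetical) shows why mean = std = 0.5 maps the [0, 1] output of `ToTensor()` onto [-1, 1]:

```python
def normalize(values, mean, std):
    # what transforms.Normalize does to every value of one channel
    return [(v - mean) / std for v in values]


# ToTensor() yields pixel values in [0, 1]; mean = std = 0.5 stretches them to [-1, 1]
print(normalize([0.0, 0.5, 1.0], mean=0.5, std=0.5))  # [-1.0, 0.0, 1.0]
```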

```python
#############################################################################
# 1. define a neural network
# copy the neural network from the Neural Network section before and modify
# it to take 3-channel images (instead of 1-channel images as it was defined)
#############################################################################
class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=5)
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5)
        self.fc1 = nn.Linear(in_features=16*5*5, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=84)
        self.fc3 = nn.Linear(in_features=84, out_features=10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16*5*5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x


net = Net()
```
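The `16*5*5` input size of `fc1` comes from tracing a 32x32 CIFAR-10 image through the two conv/pool stages. A sketch of that arithmetic, assuming no padding and dilation 1 (the defaults used in `Net`); `conv_out` is a hypothetical helper implementing the standard output-size formula shared by `Conv2d` and `MaxPool2d`:

```python
def conv_out(size, kernel, stride=1, padding=0):
    # output size of Conv2d / MaxPool2d along one spatial dimension (dilation = 1)
    return (size + 2 * padding - kernel) // stride + 1


size = 32                                  # CIFAR-10 images are 32x32
size = conv_out(size, kernel=5)            # conv1: 32 -> 28
size = conv_out(size, kernel=2, stride=2)  # pool:  28 -> 14
size = conv_out(size, kernel=5)            # conv2: 14 -> 10
size = conv_out(size, kernel=2, stride=2)  # pool:  10 -> 5
print(size, 16 * size * size)              # 5 400
```

The 16 is the number of output channels of `conv2`, so each image reaches `fc1` as a vector of 16 x 5 x 5 = 400 values.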

```python
#############################################################################
# 2. define a loss function and optimizer
# use a classification cross-entropy loss and SGD with momentum
#############################################################################
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
```
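`nn.CrossEntropyLoss` combines `LogSoftmax` and `NLLLoss`, which is why `Net.forward` can return raw class scores. A plain-Python sketch of that computation with a numerically stable log-softmax; the helper names are illustrative, and the batch averaging mirrors the default `reduction="mean"`:

```python
import math


def log_softmax(logits):
    # log(softmax(z))_i = z_i - log(sum_j exp(z_j)); subtracting max(z) avoids overflow
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]


def cross_entropy(batch_logits, targets):
    # per-sample loss is the negative log-probability of the true class (NLL),
    # averaged over the batch as with reduction="mean"
    losses = [-log_softmax(row)[t] for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


print(cross_entropy([[0.0, 0.0, 0.0]], [0]))  # uniform scores: log(3)
print(cross_entropy([[4.0, 0.0, 0.0]], [0]))  # true class favored: small loss
print(cross_entropy([[0.0, 4.0, 0.0]], [0]))  # wrong class favored: large loss
```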

```python
#############################################################################
# 3. train the network
# we simply have to loop over our data iterator,
# and feed the inputs to the network and optimize
#############################################################################
for epoch in range(2):  # loop over the dataset twice
    running_loss = 0.0
    for i, data in enumerate(train_loader, 0):
        # get the inputs
        inputs, labels = data
        # wrap them in Variable
        inputs, labels = Variable(inputs), Variable(labels)

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # print statistics: average loss over the last 2000 mini-batches
        running_loss += loss.item()
        if i % 2000 == 1999:
            print("[%d, %5d] loss: %.3f" % (epoch + 1, i + 1, running_loss / 2000))
            running_loss = 0.0

print("Finished Training")
```

Output:

Files already downloaded and verified

Files already downloaded and verified

[1, 2000] loss: 2.229

[1, 4000] loss: 1.888

[1, 6000] loss: 1.665

[1, 8000] loss: 1.563

[1, 10000] loss: 1.507

[1, 12000] loss: 1.474

[2, 2000] loss: 1.413

[2, 4000] loss: 1.391

[2, 6000] loss: 1.345

[2, 8000] loss: 1.315

[2, 10000] loss: 1.299

[2, 12000] loss: 1.278

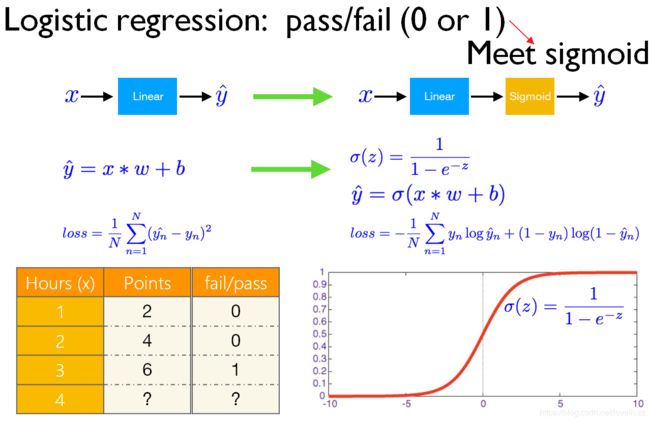

Finished Training二、Logistic Regression

```python
import torch
import torch.nn as nn
from torch.autograd import Variable

# training data: 4 samples with 1 feature each (4x1) and binary labels
x_data = Variable(torch.Tensor([[1.0], [2.0], [3.0], [4.0]]))
y_data = Variable(torch.Tensor([[0.], [0.], [1.], [1.]]))
```

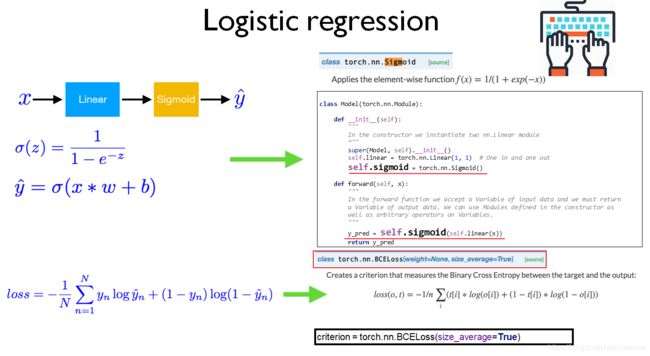

```python
# model class
class Model(torch.nn.Module):

    def __init__(self):
        super(Model, self).__init__()
        self.linear = nn.Linear(1, 1)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # squash the linear output into a probability in (0, 1)
        y_pred = self.sigmoid(self.linear(x))
        return y_pred


model = Model()

# loss function and optimizer
# nn.BCELoss() averages over the batch by default (replaces the deprecated size_average=True)
criterion = nn.BCELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# training loop
for epoch in range(500):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
```

# test

hour_val = Variable(torch.Tensor([[0.5]]))

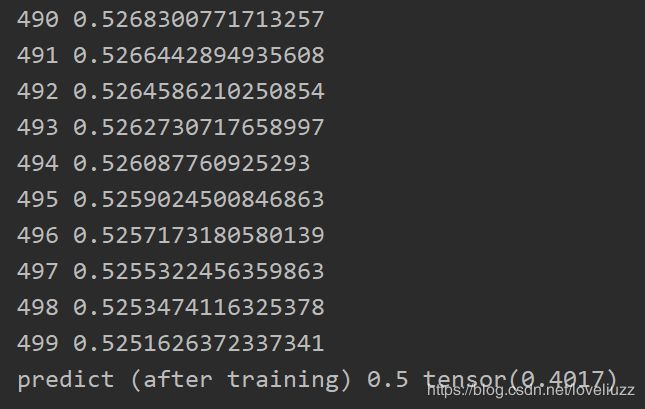

print("predict (after training)", 0.5, model.forward(hour_val).data[0][0])运行结果:

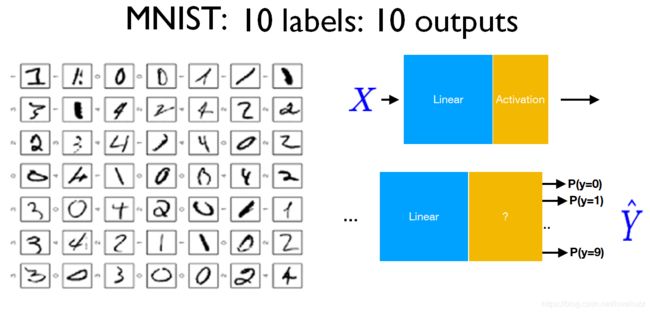

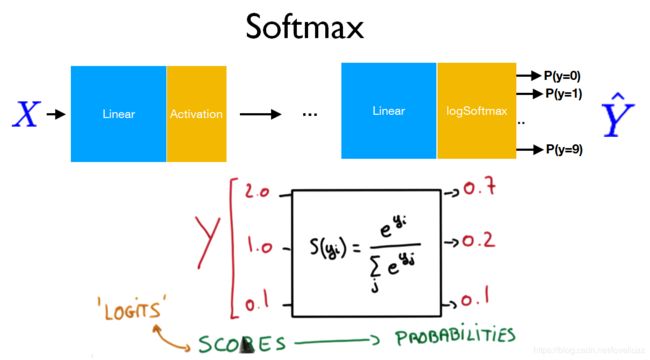
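A plain-Python sketch of what the logistic model above optimizes: a sigmoid on top of w * x + b, trained with the mean binary cross-entropy that `nn.BCELoss()` uses by default. The hyperparameters deliberately differ from the PyTorch code (lr = 0.4, 5000 epochs, starting from w = b = 0) so that this toy run separates the two classes; treat them as assumptions, not the author's settings.

```python
import math

x_data = [1.0, 2.0, 3.0, 4.0]
y_data = [0.0, 0.0, 1.0, 1.0]


def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bce(preds, targets, eps=1e-12):
    # mean binary cross-entropy; probabilities are clamped to avoid log(0)
    total = 0.0
    for p, t in zip(preds, targets):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(preds)


def train(epochs=5000, lr=0.4):
    w, b = 0.0, 0.0
    n = len(x_data)
    for _ in range(epochs):
        preds = [sigmoid(w * x + b) for x in x_data]
        # for sigmoid + mean BCE, dLoss/dz_i simplifies to (p_i - y_i) / n
        grad_w = sum((p - y) * x for p, y, x in zip(preds, y_data, x_data)) / n
        grad_b = sum(p - y for p, y in zip(preds, y_data)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train()
print("final loss:", bce([sigmoid(w * x + b) for x in x_data], y_data))
print("predict (after training)", 0.5, sigmoid(w * 0.5 + b))
print("predict (after training)", 4.0, sigmoid(w * 4.0 + b))
```

With these settings the decision boundary -b / w ends up between x = 2 and x = 3, so the probability for x = 0.5 is below 0.5 and the one for x = 4 is above it.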

III. Softmax Classifier

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim
from torch.autograd import Variable
from torch.utils.data import Dataset, DataLoader
import torchvision
import torchvision.transforms as transforms

batch_size = 64

#############################################################################
# 0. data loader
#############################################################################
# normalize with the commonly used MNIST mean (0.1307) and standard deviation (0.3081)
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,)),
])

train_set = torchvision.datasets.MNIST("../data", train=True, download=True, transform=transform)
train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)

test_set = torchvision.datasets.MNIST("../data", train=False, download=True, transform=transform)
test_loader = DataLoader(test_set, batch_size=batch_size, shuffle=False)
```

#############################################################################

# 1. define a neural network

#############################################################################

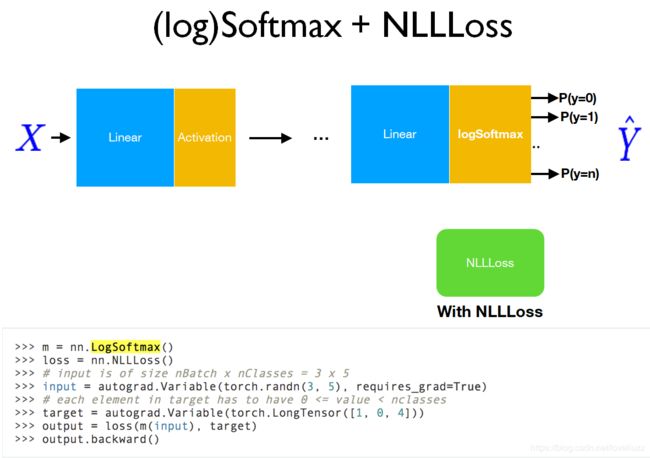

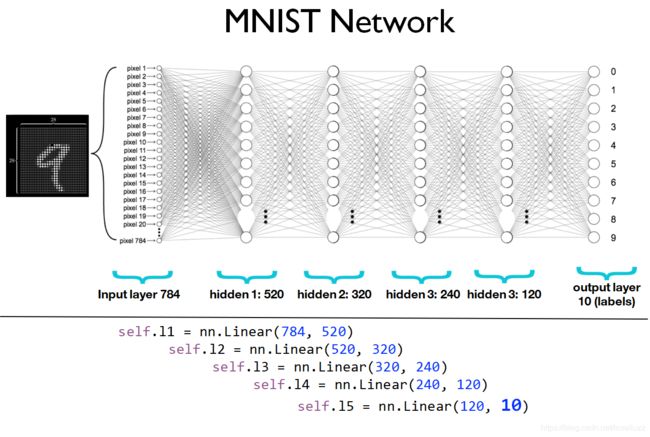

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.l1 = nn.Linear(784, 520)

self.l2 = nn.Linear(520, 320)

self.l3 = nn.Linear(320, 240)

self.l4 = nn.Linear(240, 120)

self.l5 = nn.Linear(120, 10)

def forward(self, x):

x = x.view(-1, 784) # flatten the data (n, 1, 28, 28)--> (n, 784)

x = F.relu(self.l1(x))

x = F.relu(self.l2(x))

x = F.relu(self.l3(x))

x = F.relu(self.l4(x))

x = F.relu(self.l5(x))

return F.log_softmax(x)

model = Net()

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)
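`optim.SGD(..., momentum=0.5)` keeps a running velocity of past gradients. The form given in the PyTorch documentation (with the default `dampening=0` and `nesterov=False`) is v = momentum * v + grad followed by p = p - lr * v. A one-parameter plain-Python sketch on the hypothetical objective f(p) = (p - 3) ** 2 compares it with plain SGD:

```python
def sgd_momentum(grad_fn, p0, lr, momentum, steps):
    # PyTorch-style SGD update (dampening=0, nesterov=False) for one scalar parameter
    p, v = p0, 0.0
    for _ in range(steps):
        g = grad_fn(p)
        v = momentum * v + g  # velocity: decayed sum of past gradients
        p = p - lr * v        # step along the velocity
    return p


def grad(p):
    # gradient of the hypothetical objective f(p) = (p - 3) ** 2
    return 2.0 * (p - 3.0)


plain = sgd_momentum(grad, p0=0.0, lr=0.01, momentum=0.0, steps=100)
heavy = sgd_momentum(grad, p0=0.0, lr=0.01, momentum=0.5, steps=100)
print("plain SGD:", plain)
print("momentum 0.5:", heavy)
```

With the same learning rate, momentum 0.5 ends much closer to the minimum at p = 3 after 100 steps, because on a smooth, consistently signed gradient the velocity roughly doubles the effective step size (lr / (1 - momentum)).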

```python
def train(epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = Variable(data), Variable(target)
        optimizer.zero_grad()
        output = model(data)
        loss = F.nll_loss(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % 10 == 0:
            print("Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss {:.6f}".format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
```

```python
def test():
    model.eval()
    test_loss = 0
    correct = 0
    # no gradients are needed for evaluation (replaces the removed volatile=True flag)
    with torch.no_grad():
        for data, target in test_loader:
            output = model(data)
            # sum up batch loss
            test_loss += F.nll_loss(output, target, reduction="sum").item()
            # get the index of the max log-probability
            pred = output.max(1, keepdim=True)[1]
            correct += pred.eq(target.view_as(pred)).sum().item()

    test_loss /= len(test_loader.dataset)
    print("\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n".format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))


for epoch in range(1, 10):
    train(epoch)
    test()
```
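The accuracy bookkeeping in `test()` takes the arg-max of each row of log-probabilities (`output.max(1, keepdim=True)[1]`) and counts matches with the labels. The same logic in plain Python on a hypothetical batch of three samples; `count_correct` is an illustrative helper, not part of PyTorch:

```python
def count_correct(log_probs, targets):
    correct = 0
    for row, target in zip(log_probs, targets):
        # index of the largest log-probability, like output.max(1)[1]
        pred = max(range(len(row)), key=lambda j: row[j])
        correct += int(pred == target)
    return correct


# hypothetical log-probabilities for 3 samples over 3 classes
log_probs = [
    [-0.1, -2.5, -3.0],  # predicts class 0
    [-2.0, -0.2, -2.2],  # predicts class 1
    [-1.5, -1.2, -0.7],  # predicts class 2
]
labels = [0, 2, 2]
correct = count_correct(log_probs, labels)
print("Accuracy: {}/{} ({:.0f}%)".format(correct, len(labels), 100.0 * correct / len(labels)))
```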