A detailed record of deploying Kubernetes (k8s)

System environment: CentOS 7

master, node1: 192.168.156.75

node2: 192.168.156.76

node3: 192.168.156.77

Component versions

Kubernetes 1.10.4

Docker 18.03.1-ce

Etcd 3.3.10

Flanneld 0.10.0

I. Preparation (unless noted otherwise, run every step on each machine)

Host names

[root@v75 ~]# cat /etc/hosts

127.0.0.1 localhost v75 localhost4 localhost4.localdomain4

::1 localhost v75 localhost6 localhost6.localdomain6

192.168.156.75 v75

192.168.156.76 v76

192.168.156.77 v77

On the master machine, set up passwordless SSH login to every node, including the master itself:

ssh-keygen -t rsa

ssh-copy-id root@v75

ssh-copy-id root@v76

ssh-copy-id root@v77

Install dependencies

Install the dependency packages on every machine:

CentOS:

``` bash

yum install -y epel-release

yum install -y conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

```

Disable the firewall, SELinux, and swap

[root@v75 75shell]# cat stopfirewall

#!/bin/bash

systemctl stop firewalld

chkconfig firewalld off

setenforce 0

sed -i s#SELINUX=enforcing#SELINUX=disabled# /etc/selinux/config

[root@v75 75shell]# cat iptables4.sh

#!/bin/bash

iptables -F

iptables -X

iptables -F -t nat

iptables -X -t nat

iptables -P FORWARD ACCEPT

[root@v75 75shell]# cat swap5.sh

#!/bin/bash

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Add the binary directory /opt/k8s/bin to the PATH variable

Add the environment variable on every machine:

[root@v75 75shell]# cat path2.sh

#!/bin/bash

# Single quotes keep $PATH, $HOME and $JAVA_HOME unexpanded until the shell starts.

echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>/root/.bashrc

Set kernel parameters

[root@v75 75shell]# cat mod6.sh

modprobe br_netfilter

modprobe ip_vs

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

mount -t cgroup -o cpu,cpuacct none /sys/fs/cgroup/cpu,cpuacct

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

ntpdate cn.pool.ntp.org

Create users, directories, and so on

[root@v75 75shell]# cat addk8suser

#!/bin/bash

useradd -m k8s

sh -c 'echo 123456 | passwd k8s --stdin'

gpasswd -a k8s wheel

[root@v75 75shell]# cat adddockeruser1

#!/bin/bash

useradd -m docker

gpasswd -a k8s docker

mkdir -p /etc/docker/

cat > /etc/docker/daemon.json <

eof

[root@v75 75shell]# cat mk7.sh

#!/bin/bash

mkdir -p /opt/k8s/bin

chown -R k8s /opt/k8s

mkdir -p /etc/kubernetes/cert

chown -R k8s /etc/kubernetes

mkdir -p /etc/etcd/cert

chown -R k8s /etc/etcd/cert

mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd

The script that defines the environment variables:

[root@v75 75shell]# cat /opt/k8s/bin/environment.sh

#!/usr/bin/bash

# Encryption key required by EncryptionConfig

export ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# Prefer currently unused ranges for the Service and Pod networks

# Service network: unroutable before deployment, routable inside the cluster afterwards (handled by kube-proxy and ipvs)

export SERVICE_CIDR="10.254.0.0/16"

# Pod network: a /16 is recommended; unroutable before deployment, routable inside the cluster afterwards (handled by flanneld)

export CLUSTER_CIDR="172.30.0.0/16"

# Service port range (NodePort range)

export NODE_PORT_RANGE="8400-9000"

# Array of the cluster machine IPs

export NODE_IPS=(192.168.156.75 192.168.156.76 192.168.156.77)

# Array of the host names matching the IPs above

export NODE_NAMES=(v75 v76 v77)

# VIP of kube-apiserver (the IP published by the HA component keepalived)

export MASTER_VIP=192.168.156.70

# kube-apiserver VIP address (the HA component haproxy listens on port 8443)

export KUBE_APISERVER="https://${MASTER_VIP}:8443"

# Name of the network interface that carries the VIP on the HA nodes

export VIP_IF="eno16777736"

# etcd client endpoint list

export ETCD_ENDPOINTS="https://192.168.156.75:2379,https://192.168.156.76:2379,https://192.168.156.77:2379"

# IPs and ports used for etcd peer communication

export ETCD_NODES="v75=https://192.168.156.75:2380,v76=https://192.168.156.76:2380,v77=https://192.168.156.77:2380"

# etcd key prefix for the flanneld network configuration

export FLANNEL_ETCD_PREFIX="/kubernetes/network"

# kubernetes Service IP (usually the first IP in SERVICE_CIDR)

export CLUSTER_KUBERNETES_SVC_IP="10.254.0.1"

# Cluster DNS Service IP (pre-allocated from SERVICE_CIDR)

export CLUSTER_DNS_SVC_IP="10.254.0.2"

# Cluster DNS domain

export CLUSTER_DNS_DOMAIN="cluster.local."

# Add the binary directory /opt/k8s/bin to PATH

export PATH=/opt/k8s/bin:$PATH

Copy it to every machine:

source environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp environment.sh k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done
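environment.sh repeats the node IPs by hand in `ETCD_ENDPOINTS` and `ETCD_NODES`. As a minimal sketch (not part of the original script), the two lists could be derived from the node lists instead, which avoids typos when a node changes. The lowercase variable names are hypothetical, and plain space-separated strings are used instead of bash arrays so the snippet also runs under a POSIX shell.

```shell
# Hypothetical inputs mirroring NODE_IPS and NODE_NAMES from environment.sh.
node_ips="192.168.156.75 192.168.156.76 192.168.156.77"
node_names="v75 v76 v77"

endpoints=""
peers=""

# Walk both lists in step: names via the positional parameters, IPs via the loop.
set -- ${node_names}
for ip in ${node_ips}; do
  name=$1
  shift
  # ${var:+...} adds the comma separator only when the list is already non-empty.
  endpoints="${endpoints:+${endpoints},}https://${ip}:2379"
  peers="${peers:+${peers},}${name}=https://${ip}:2380"
done

echo "ETCD_ENDPOINTS=${endpoints}"
echo "ETCD_NODES=${peers}"
```

The output should match the hand-written values in environment.sh, so the snippet can also serve as a consistency check.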

Create the CA certificate and key

[root@v75 cert]# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

Generate the CA certificate and private key:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca
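The later `cfssl gencert` calls pass `-config=/etc/kubernetes/cert/ca-config.json -profile=kubernetes`, and the copy script distributes `ca-config.json`, but this record never shows that file. The following is a sketch of what such a file commonly looks like, not the author's actual file: the profile name `kubernetes` comes from the commands in this document, while the `87600h` expiry and the exact usage list are assumptions.

```shell
# Assumed contents: only the profile name "kubernetes" is taken from this document.
cat > ca-config.json <<'EOF'
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

# Confirm the profile referenced by -profile=kubernetes is present.
grep -c '"kubernetes"' ca-config.json
```

Listing both `server auth` and `client auth` lets one profile sign server certificates (etcd, kube-apiserver) as well as client certificates (admin, flanneld).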

Copy them to every node:

[root@v75 cert]# cat cppem.sh

#!/bin/bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert && chown -R k8s /etc/kubernetes"

scp ca*.pem ca-config.json k8s@${node_ip}:/etc/kubernetes/cert

done

II. Deploy the kubectl command-line tool

wget https://dl.k8s.io/v1.10.4/kubernetes-client-linux-amd64.tar.gz

tar -xzvf kubernetes-client-linux-amd64.tar.gz

[root@v75 75shell]# cat cpkubernetes-client9.sh

#!/bin/bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubernetes/client/bin/kubectl k8s@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chown -R k8s /opt/k8s"

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

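## Create the admin certificate and private key

kubectl talks to the apiserver over its secure HTTPS port; the apiserver authenticates and authorizes the certificate that kubectl presents.

As the cluster management tool, kubectl needs the highest privileges, so an admin certificate with **full privileges** is created here.

Create the certificate signing request: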

``` bash

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "4Paradigm"

}

]

}

EOF

```

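+ O is `system:masters`; when kube-apiserver receives this certificate it sets the Group of the request to system:masters;

+ The predefined ClusterRoleBinding `cluster-admin` binds Group `system:masters` to the Role `cluster-admin`, which grants access to **all APIs**;

+ The certificate is only used by kubectl as a client certificate, so the hosts field is empty;

Generate the certificate and private key: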

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

ls admin*

```

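## Create the kubeconfig file

kubeconfig is the configuration file of kubectl. It holds everything needed to reach the apiserver, such as the apiserver address, the CA certificate, and the client's own certificate;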

``` bash

source /opt/k8s/bin/environment.sh

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubectl.kubeconfig

# Set the client credentials

kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=kubectl.kubeconfig

# Set the context

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin \

--kubeconfig=kubectl.kubeconfig

# Set the default context

kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig

```

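+ `--certificate-authority`: the root certificate used to verify the kube-apiserver certificate;

+ `--client-certificate`, `--client-key`: the `admin` certificate and private key just generated, used when connecting to kube-apiserver;

+ `--embed-certs=true`: embeds the contents of ca.pem and admin.pem in the generated kubectl.kubeconfig file (without it, only the certificate file paths are written);

## Distribute the kubeconfig file

Distribute it to every node that runs the `kubectl` command: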

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig k8s@${node_ip}:~/.kube/config

ssh root@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig root@${node_ip}:~/.kube/config

done

```

+ The file is saved as `~/.kube/config` for each user;

III. Deploy the etcd cluster

wget https://github.com/coreos/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

tar -xvf etcd-v3.3.10-linux-amd64.tar.gz

[root@v75 75shell]# cat cpetcd12.sh

#!/bin/bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-v3.3.10-linux-amd64/etcd* k8s@${node_ip}:/opt/k8s/bin

ssh ${node_ip} "chown -R k8s /opt/k8s"

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

## Create the etcd certificate and private key

Create the certificate signing request:

[root@v75 etcdcert]# cat etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.156.75",

"192.168.156.76",

"192.168.156.77"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

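+ The hosts field lists the etcd node IPs or domain names authorized to use this certificate; here all three etcd node IPs are listed;

Generate the certificate and private key: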

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

ls etcd*

```

Distribute the generated certificate and private key to every etcd node:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/etcd/cert && chown -R k8s /etc/etcd/cert"

scp etcd*.pem k8s@${node_ip}:/etc/etcd/cert/

done

```

## Create the etcd systemd unit template

``` bash

source /opt/k8s/bin/environment.sh

cat > etcd.service.template <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

User=k8s

Type=notify

WorkingDirectory=/var/lib/etcd/

ExecStart=/opt/k8s/bin/etcd \\

--data-dir=/var/lib/etcd \\

--name=##NODE_NAME## \\

--cert-file=/etc/etcd/cert/etcd.pem \\

--key-file=/etc/etcd/cert/etcd-key.pem \\

--trusted-ca-file=/etc/kubernetes/cert/ca.pem \\

--peer-cert-file=/etc/etcd/cert/etcd.pem \\

--peer-key-file=/etc/etcd/cert/etcd-key.pem \\

--peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--listen-peer-urls=https://##NODE_IP##:2380 \\

--initial-advertise-peer-urls=https://##NODE_IP##:2380 \\

--listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379 \\

--advertise-client-urls=https://##NODE_IP##:2379 \\

--initial-cluster-token=etcd-cluster-0 \\

--initial-cluster=${ETCD_NODES} \\

--initial-cluster-state=new

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

```

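+ `User`: run the service as the k8s account;

+ `WorkingDirectory`, `--data-dir`: set the working directory and data directory to `/var/lib/etcd`; the directory must exist before the service starts;

+ `--name`: the node name; when `--initial-cluster-state` is `new`, the value of `--name` must appear in the `--initial-cluster` list;

+ `--cert-file`, `--key-file`: the certificate and private key the etcd server uses for client traffic;

+ `--trusted-ca-file`: the CA certificate that signed the client certificates, used to verify them;

+ `--peer-cert-file`, `--peer-key-file`: the certificate and private key etcd uses for peer traffic;

+ `--peer-trusted-ca-file`: the CA certificate that signed the peer certificates, used to verify them;

## Create and distribute the etcd systemd unit file for each node

Substitute the variables in the template to create a systemd unit file for each node: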

``` bash

source /opt/k8s/bin/environment.sh

for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" etcd.service.template > etcd-${NODE_IPS[i]}.service

done

ls *.service

```

+ NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the matching IPs;
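The placeholder substitution can be tried in isolation. This is a minimal sketch on a three-line stand-in template (the file names `demo.service.template` and `demo-<ip>.service` are hypothetical), written with space-separated strings so it runs under any POSIX shell. It also adds the `g` flag, which the original `sed` call does not use, so that a line containing a placeholder twice would still be fully replaced.

```shell
# Work in a scratch directory so nothing in the current directory is touched.
workdir=$(mktemp -d)

# Stand-in template with the same ##NODE_NAME## / ##NODE_IP## placeholders.
cat > "${workdir}/demo.service.template" <<'EOF'
--name=##NODE_NAME##
--listen-peer-urls=https://##NODE_IP##:2380
--listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379
EOF

node_names="v75 v76 v77"
node_ips="192.168.156.75 192.168.156.76 192.168.156.77"

# Pair each IP with its name: names come from the positional parameters.
set -- ${node_names}
for ip in ${node_ips}; do
  name=$1
  shift
  sed -e "s/##NODE_NAME##/${name}/g" -e "s/##NODE_IP##/${ip}/g" \
    "${workdir}/demo.service.template" > "${workdir}/demo-${ip}.service"
done

# Any remaining "##" means a placeholder was missed.
leftover=$(cat "${workdir}"/demo-*.service | grep -c '##')
echo "lines with unreplaced placeholders: ${leftover}"
head -n 1 "${workdir}/demo-192.168.156.76.service"
```

Checking for leftover `##` markers before distributing the unit files is a cheap guard against a mismatched name or IP list.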

Distribute the generated systemd unit files:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd"

scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service

done

```

+ The etcd data directory and working directory must be created first;

+ The file is renamed to etcd.service;

Full unit file: [etcd.service](https://github.com/opsnull/follow-me-install-kubernetes-cluster/blob/master/systemd/etcd.service)

## Start the etcd service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd &"

done

```

+ On first start the etcd process waits for the other etcd nodes to join the cluster, so it is normal for `systemctl start etcd` to hang for a while.

## Check the startup result

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status etcd|grep Active"

done

```

Make sure the state is `active (running)`; otherwise check the logs to find the cause:

``` bash

$ journalctl -u etcd

```

## Verify the service status

Once the etcd cluster is deployed, run the following on any etcd node:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ETCDCTL_API=3 /opt/k8s/bin/etcdctl \

--endpoints=https://${node_ip}:2379 \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem endpoint health

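done

```

IV. Deploy the flannel network

Kubernetes requires every node in the cluster (including the master nodes) to reach every other node over the Pod network. flannel uses vxlan to build such a Pod network across the nodes; it uses UDP port 8472.

On first start, flannel reads the Pod network configuration from etcd, allocates an unused `/24` subnet to the local node, and then creates the `flannel.1` interface (the name may differ, e.g. flannel1).

flannel writes the allocated Pod subnet information to the `/run/flannel/docker` file; docker later uses the environment variables in that file to configure the `docker0` bridge.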
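The relationship between the cluster-wide /16 and the per-node /24 allocations is simple prefix arithmetic. A small sketch, using the prefix lengths from this document (16 for `CLUSTER_CIDR`, 24 for `SubnetLen`); the variable names are illustrative only:

```shell
cluster_prefix=16   # prefix length of CLUSTER_CIDR (172.30.0.0/16)
subnet_len=24       # prefix length flannel hands out to each node

# Each extra prefix bit doubles the number of subnets that fit in the range.
node_subnets=$(( 1 << (subnet_len - cluster_prefix) ))

# Addresses in one node subnet, counting the network and broadcast addresses.
addrs_per_subnet=$(( 1 << (32 - subnet_len) ))

echo "node subnets available: ${node_subnets}"
echo "addresses per node subnet: ${addrs_per_subnet}"
```

With these values the /16 holds 256 node subnets of 256 addresses each, which bounds both the node count and the Pods per node for this layout.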

## Download and distribute the flanneld binaries

Download the latest release package from the [https://github.com/coreos/flannel/releases](https://github.com/coreos/flannel/releases) page:

``` bash

mkdir flannel

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

tar -xzvf flannel-v0.10.0-linux-amd64.tar.gz -C flannel

```

Distribute the flanneld binaries to every node in the cluster:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flannel/{flanneld,mk-docker-opts.sh} k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

```

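## Create the flannel certificate and private key

flannel reads and writes subnet allocation data in the etcd cluster, and the etcd cluster has mutual x509 certificate authentication enabled, so a certificate and private key must be generated for flanneld.

Create the certificate signing request: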

``` bash

cat > flanneld-csr.json <<EOF

{

"CN": "flanneld",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

EOF

```

+ The certificate is only used by flanneld as a client certificate, so the hosts field is empty;

Generate the certificate and private key:

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

ls flanneld*pem

```

Distribute the generated certificate and private key to **all nodes** (master and worker):

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/flanneld/cert && chown -R k8s /etc/flanneld"

scp flanneld*.pem k8s@${node_ip}:/etc/flanneld/cert

done

```

## Write the cluster Pod network configuration to etcd

``` bash

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

set ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'

```

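+ The **current flanneld version (v0.10.0) does not support etcd v3**, so the etcd v2 API is used to write the configuration key and subnet data;

+ The Pod network ${CLUSTER_CIDR} written here must be a /16 and must match the `--cluster-cidr` argument of kube-controller-manager;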
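The JSON value passed to `etcdctl set` is built by closing the single-quoted string, splicing in `${CLUSTER_CIDR}`, and reopening the quotes. The sketch below reproduces only that quoting step, without touching etcd, so the resulting string can be inspected first; the `config` variable is introduced here for illustration.

```shell
CLUSTER_CIDR="172.30.0.0/16"

# '...'${VAR}'...' : literal text, then the expanded variable, then literal text again.
config='{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'

echo "${config}"
# prints: {"Network":"172.30.0.0/16", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}
```

Printing the value before writing it makes quoting mistakes visible, since a malformed JSON document under `${FLANNEL_ETCD_PREFIX}/config` would keep flanneld from starting.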

## Create the flanneld systemd unit file

``` bash

source /opt/k8s/bin/environment.sh

export IFACE=eth0

cat > flanneld.service << EOF

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/opt/k8s/bin/flanneld \\

-etcd-cafile=/etc/kubernetes/cert/ca.pem \\

-etcd-certfile=/etc/flanneld/cert/flanneld.pem \\

-etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \\

-etcd-endpoints=${ETCD_ENDPOINTS} \\

-etcd-prefix=${FLANNEL_ETCD_PREFIX} \\

-iface=${IFACE}

ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

EOF

```

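+ The `mk-docker-opts.sh` script writes the Pod subnet allocated to flanneld into the `/run/flannel/docker` file; docker later uses the environment variables in that file to configure the docker0 bridge;

+ flanneld talks to the other nodes over the interface that holds the system default route; on nodes with several network interfaces (e.g. internal and public), use the `-iface` argument to pick the interface, such as eth0 above, and make sure the name matches the node's actual interface;

+ flanneld needs root privileges to run;

Full unit file: [flanneld.service](https://github.com/opsnull/follow-me-install-kubernetes-cluster/blob/master/systemd/flanneld.service)

## Distribute the flanneld systemd unit file to **all nodes**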

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flanneld.service root@${node_ip}:/etc/systemd/system/

done

```

## Start the flanneld service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld"

done

```

## Check the startup result

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status flanneld|grep Active"

done

```

Make sure the state is `active (running)`; otherwise check the logs to find the cause:

``` bash

$ journalctl -u flanneld

```

## Check the Pod subnets allocated to each flanneld

View the cluster Pod network (/16):

``` bash

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/config

```

Output:

`{"Network":"172.30.0.0/16", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}`

List the allocated Pod subnets (/24):

``` bash

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

ls ${FLANNEL_ETCD_PREFIX}/subnets

```

Output:

``` bash

/kubernetes/network/subnets/172.30.81.0-24

/kubernetes/network/subnets/172.30.29.0-24

/kubernetes/network/subnets/172.30.39.0-24

```

View the node IP and flannel interface address for a given Pod subnet:

``` bash

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/subnets/172.30.81.0-24

```

Output:

`{"PublicIP":"172.27.129.105","BackendType":"vxlan","BackendData":{"VtepMAC":"12:21:93:9e:b1:eb"}}`
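The subnet keys stored in etcd encode the prefix length after a dash (`172.30.81.0-24`) because `/` is the key path separator. A short sketch of converting such a key back to CIDR notation with shell parameter expansion; the `key`, `name` and `cidr` variables are illustrative:

```shell
key="/kubernetes/network/subnets/172.30.81.0-24"

name=${key##*/}                 # drop everything up to the last "/"  -> 172.30.81.0-24
cidr="${name%-*}/${name##*-}"   # part before the dash, "/", part after the dash

echo "${cidr}"
# prints: 172.30.81.0/24
```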

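## Verify that the nodes can reach each other over the Pod network

After flannel is deployed **on every node**, check that the flannel interface was created (it may be named flannel0, flannel.0, flannel.1, etc.):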

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show flannel.1|grep -w inet"

done

```

Output:

``` bash

inet 172.30.81.0/32 scope global flannel.1

inet 172.30.29.0/32 scope global flannel.1

inet 172.30.39.0/32 scope global flannel.1

```

On every node, ping all flannel interface IPs and make sure they are reachable:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "ping -c 1 172.30.81.0"

ssh ${node_ip} "ping -c 1 172.30.29.0"

ssh ${node_ip} "ping -c 1 172.30.39.0"

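done

```

V. Deploy the master nodes

The Kubernetes master nodes run the following components:

+ kube-apiserver

+ kube-scheduler

+ kube-controller-manager

kube-scheduler and kube-controller-manager can run as a cluster: leader election picks one working process and the other processes stay blocked.

kube-apiserver can run as multiple instances, but the other components need a single, highly available address to reach it. This document uses keepalived and haproxy to provide a highly available, load-balanced VIP for kube-apiserver.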

## Download the latest binaries

Download the server tarball from the [`CHANGELOG` page](https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG.md).

``` bash

wget https://dl.k8s.io/v1.10.4/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

tar -xzvf kubernetes-src.tar.gz

```

Copy the binaries to all master nodes:

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp server/bin/* k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

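V.1 Deploy the high-availability components

+ keepalived provides the VIP through which kube-apiserver is served;

+ haproxy listens on the VIP and connects to all kube-apiserver instances as backends, providing health checks and load balancing;

Nodes that run keepalived and haproxy are called LB nodes. Because keepalived runs in a one-master, multiple-backup mode, at least two LB nodes are needed.

This document reuses the three master machines as LB nodes, so the port haproxy listens on (8443) must differ from the kube-apiserver port 6443 to avoid a conflict.

While running, keepalived periodically checks the state of the local haproxy process. If it detects that haproxy is unhealthy, it triggers a new master election and the VIP floats to the newly elected master, which keeps the VIP highly available.

All components (kubectl, apiserver, controller-manager, scheduler, etc.) reach the kube-apiserver service through the VIP and port 8443 that haproxy listens on.

## Install the packages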

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "yum install -y keepalived haproxy"

done

```

Configure and distribute the haproxy configuration file

[root@v75 75shell]# cat /etc/haproxy/haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /var/run/haproxy-admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

nbproc 1

defaults

log global

timeout connect 5000

timeout client 10m

timeout server 10m

listen admin_stats

bind 0.0.0.0:10080

mode http

log 127.0.0.1 local0 err

stats refresh 30s

stats uri /status

stats realm welcome login\ Haproxy

stats auth admin:123456

stats hide-version

stats admin if TRUE

listen kube-master

bind 0.0.0.0:8443

mode tcp

option tcplog

balance source

server 192.168.156.75 192.168.156.75:6443 check inter 2000 fall 2 rise 2 weight 1

server 192.168.156.76 192.168.156.76:6443 check inter 2000 fall 2 rise 2 weight 1

server 192.168.156.77 192.168.156.77:6443 check inter 2000 fall 2 rise 2 weight 1

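+ haproxy serves its status information on port 10080;

+ haproxy listens on port 8443 on **all interfaces**; this port must match the port in the ${KUBE_APISERVER} environment variable;

+ The server lines list the IP and port of every kube-apiserver instance;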
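Because the haproxy `bind` port and the port in `${KUBE_APISERVER}` are maintained in two different files, a quick comparison before distributing the configuration can catch a mismatch. This is a sketch only: `haproxy_bind` is a hypothetical variable holding the `bind` value copied from haproxy.cfg.

```shell
KUBE_APISERVER="https://192.168.156.70:8443"   # value from environment.sh
haproxy_bind="0.0.0.0:8443"                    # hypothetical copy of the bind line value

# "##*:" removes the longest prefix ending in ":", leaving only the port.
api_port=${KUBE_APISERVER##*:}
bind_port=${haproxy_bind##*:}

if [ "${api_port}" = "${bind_port}" ]; then
  echo "ports match: ${api_port}"
else
  echo "port mismatch: KUBE_APISERVER uses ${api_port}, haproxy binds ${bind_port}"
fi
```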

Distribute haproxy.cfg to all master nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp haproxy.cfg root@${node_ip}:/etc/haproxy

done

# Start the haproxy service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl restart haproxy"

done

```

## Check the haproxy service status

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status haproxy|grep Active"

done

```

Make sure the state is `active (running)`; otherwise check the logs to find the cause:

``` bash

journalctl -u haproxy

```

Check that haproxy is listening on port 8443:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "netstat -lnpt|grep haproxy"

done

```

Make sure the output looks like this:

``` bash

tcp 0 0 0.0.0.0:8443 0.0.0.0:* LISTEN 120583/haproxy

```

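## Configure and distribute the keepalived configuration files

keepalived runs in a one-master, multiple-backup mode, so there are two kinds of configuration file. There is a single master configuration file, while the number of backup files depends on the node count. For this document the plan is:

+ master: 192.168.156.75

+ backup: 192.168.156.76, 192.168.156.77

Master configuration file: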

``` bash

source /opt/k8s/bin/environment.sh

cat > keepalived-master.conf <<EOF

global_defs {

router_id lb-master-105

}

vrrp_script check-haproxy {

script "killall -0 haproxy"

interval 5

weight -30

}

vrrp_instance VI-kube-master {

state MASTER

priority 120

dont_track_primary

interface ${VIP_IF}

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

${MASTER_VIP}

}

}

EOF

```

[root@v75 ha]# cat keepalived-master.conf

global_defs {

router_id lb-master-105

}

vrrp_script check-haproxy {

script "killall -0 haproxy"

interval 5

weight -30

}

vrrp_instance VI-kube-master {

state MASTER

priority 120

dont_track_primary

interface eth0

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

192.168.156.70

}

}

[root@v75 ha]# cat keepalived-backup.conf

global_defs {

router_id lb-backup-105

}

vrrp_script check-haproxy {

script "killall -0 haproxy"

interval 5

weight -30

}

vrrp_instance VI-kube-master {

state BACKUP

priority 110

dont_track_primary

interface eno16777736

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

192.168.156.70

}

}

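+ The interface that carries the VIP is set by `interface ${VIP_IF}`; use each node's actual interface name (`eth0` and `eno16777736` in the files shown above);

+ `killall -0 haproxy` checks whether the haproxy process on the node is alive. If it is not, the priority is lowered by the weight (-30), which triggers a new master election;

+ router_id and virtual_router_id identify the keepalived instances that belong to this HA group; if several keepalived HA groups exist, each must use different values;

Backup configuration file: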

``` bash

source /opt/k8s/bin/environment.sh

cat > keepalived-backup.conf <<EOF

global_defs {

router_id lb-backup-105

}

vrrp_script check-haproxy {

script "killall -0 haproxy"

interval 5

weight -30

}

vrrp_instance VI-kube-master {

state BACKUP

priority 110

dont_track_primary

interface ${VIP_IF}

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

${MASTER_VIP}

}

}

EOF

```

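+ The interface that carries the VIP is set by `interface ${VIP_IF}`; use each node's actual interface name;

+ `killall -0 haproxy` checks whether the haproxy process on the node is alive. If it is not, the priority is lowered by the weight (-30), which triggers a new master election;

+ router_id and virtual_router_id identify the keepalived instances that belong to this HA group; if several keepalived HA groups exist, each must use different values;

+ The priority value must be lower than the master's value;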
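The failover condition follows from the numbers in the two configuration files: a failed `check-haproxy` script lowers the master's priority by the weight, and the VIP moves only if the result drops below the backup priority. A small arithmetic sketch with the values used above (the variable names are illustrative):

```shell
master_priority=120
backup_priority=110
weight=-30            # applied while "killall -0 haproxy" fails

# Effective master priority while its haproxy process is down.
effective=$(( master_priority + weight ))
echo "master priority after a failed check: ${effective}"

if [ "${effective}" -lt "${backup_priority}" ]; then
  echo "a backup (priority ${backup_priority}) outranks the master and takes the VIP"
else
  echo "the weight is too small: the master would keep the VIP"
fi
```

The same check explains the constraint on the values: the gap between the master and backup priorities (10 here) has to stay smaller than the absolute weight (30), otherwise a dead haproxy would never trigger a failover.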

## Distribute the keepalived configuration files

Distribute the master configuration file:

``` bash

scp keepalived-master.conf root@192.168.156.75:/etc/keepalived/keepalived.conf

```

Distribute the backup configuration file:

``` bash

scp keepalived-backup.conf root@192.168.156.76:/etc/keepalived/keepalived.conf

scp keepalived-backup.conf root@192.168.156.77:/etc/keepalived/keepalived.conf

```

## Start the keepalived service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl restart keepalived"

done

```

## Check the keepalived service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status keepalived|grep Active"

done

```

Make sure the state is `active (running)`; otherwise check the logs to find the cause:

``` bash

journalctl -u keepalived

```

Find the node that holds the VIP and make sure the VIP can be pinged:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show ${VIP_IF}"

ssh ${node_ip} "ping -c 1 ${MASTER_VIP}"

done

```

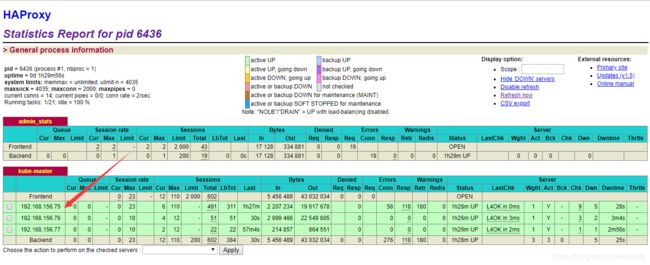

## View the haproxy status page

Open ${MASTER_VIP}:10080/status in a browser to view the haproxy status page.

VI. Deploy the kube-apiserver component

[root@v75 kubernetescert]# cat kubernetes-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.156.75",

"192.168.156.76",

"192.168.156.77",

"192.168.156.70",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

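+ The hosts field lists the **IPs and domain names** authorized to use this certificate; here it contains the VIP, the apiserver node IPs, and the kubernetes Service IP and domain names;

+ A domain name must not end with `.` (e.g. it must not be `kubernetes.default.svc.cluster.local.`), otherwise parsing fails with: `x509: cannot parse dnsName "kubernetes.default.svc.cluster.local."`;

+ If you use a domain other than `cluster.local`, such as `opsnull.com`, change the last two names in the list to `kubernetes.default.svc.opsnull` and `kubernetes.default.svc.opsnull.com`;

+ The kubernetes Service IP is created automatically by the apiserver and is normally the **first IP** of the range given by `--service-cluster-ip-range`; it can be looked up later with `kubectl get svc kubernetes`;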
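The "first IP of the Service range" that goes into the hosts list can be computed rather than typed by hand. A minimal sketch, assuming `SERVICE_CIDR` is written with its network address as the base (as `10.254.0.0/16` is); the helper variables are illustrative:

```shell
SERVICE_CIDR="10.254.0.0/16"
network=${SERVICE_CIDR%/*}      # strip the prefix length -> 10.254.0.0

# Split the dotted quad into its four octets (IFS applies to this read only).
IFS=. read -r a b c d <<EOF
${network}
EOF

# Convert to an integer, add one, and convert back to dotted notation.
n=$(( (a << 24) + (b << 16) + (c << 8) + d + 1 ))
first_ip="$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"

echo "${first_ip}"
# prints: 10.254.0.1
```

The result should equal `CLUSTER_KUBERNETES_SVC_IP` in environment.sh and the `10.254.0.1` entry in kubernetes-csr.json.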

Generate the certificate and private key:

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

ls kubernetes*pem

```

Copy the generated certificate and private key files to the master nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert/ && sudo chown -R k8s /etc/kubernetes/cert/"

scp kubernetes*.pem k8s@${node_ip}:/etc/kubernetes/cert/

done

```

+ The k8s account can read and write the /etc/kubernetes/cert/ directory;

## Create the encryption configuration file

``` bash

source /opt/k8s/bin/environment.sh

cat > encryption-config.yaml <<EOF

kind: EncryptionConfig

apiVersion: v1

resources:

  - resources:

      - secrets

    providers:

      - aescbc:

          keys:

            - name: key1

              secret: ${ENCRYPTION_KEY}

      - identity: {}

EOF

```
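The `aescbc` provider expects the `secret` to be a base64-encoded key, and `ENCRYPTION_KEY` is produced from 32 random bytes. The sketch below checks that a key generated the same way decodes back to 32 bytes; the `key` and `decoded_len` variables are illustrative. Note that environment.sh, as written, generates a fresh key every time it is sourced, so the value that ends up in encryption-config.yaml is the one to keep.

```shell
# Generate a key the same way environment.sh does.
key=$(head -c 32 /dev/urandom | base64)

# Decode it again and count the bytes; a valid key decodes to exactly 32.
decoded_len=$(printf '%s' "${key}" | base64 -d | wc -c)

echo "encoded length: ${#key} characters"
echo "decoded length: $(( decoded_len )) bytes"
```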

Copy the encryption configuration file to the `/etc/kubernetes` directory on the master nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp encryption-config.yaml root@${node_ip}:/etc/kubernetes/

done

```

## Create the kube-apiserver systemd unit template

``` bash

source /opt/k8s/bin/environment.sh

cat > kube-apiserver.service.template <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/opt/k8s/bin/kube-apiserver \\

--enable-admission-plugins=Initializers,NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--anonymous-auth=false \\

--experimental-encryption-provider-config=/etc/kubernetes/encryption-config.yaml \\

--advertise-address=##NODE_IP## \\

--bind-address=##NODE_IP## \\

--insecure-port=0 \\

--authorization-mode=Node,RBAC \\

--runtime-config=api/all \\

--enable-bootstrap-token-auth \\

--service-cluster-ip-range=${SERVICE_CIDR} \\

--service-node-port-range=${NODE_PORT_RANGE} \\

--tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \\

--tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \\

--client-ca-file=/etc/kubernetes/cert/ca.pem \\

--kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \\

--kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \\

--service-account-key-file=/etc/kubernetes/cert/ca-key.pem \\

--etcd-cafile=/etc/kubernetes/cert/ca.pem \\

--etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \\

--etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \\

--etcd-servers=${ETCD_ENDPOINTS} \\

--enable-swagger-ui=true \\

--allow-privileged=true \\

--apiserver-count=3 \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/kube-apiserver-audit.log \\

--event-ttl=1h \\

--alsologtostderr=true \\

--logtostderr=false \\

--log-dir=/var/log/kubernetes \\

--v=2

Restart=on-failure

RestartSec=5

Type=notify

User=k8s

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

```

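+ `--experimental-encryption-provider-config`: enables encryption of the configured resources in etcd;

+ `--authorization-mode=Node,RBAC`: enables the Node and RBAC authorization modes and rejects unauthorized requests;

+ `--enable-admission-plugins`: enables `ServiceAccount` and `NodeRestriction`, among the other plugins listed;

+ `--service-account-key-file`: the key file used to verify ServiceAccount tokens; it pairs with the private key file that kube-controller-manager receives through `--service-account-private-key-file`;

+ `--tls-*-file`: the certificate and private key used by the apiserver. `--client-ca-file` is used to verify the certificates presented by clients (kube-controller-manager, kube-scheduler, kubelet, kube-proxy, etc.);

+ `--kubelet-client-certificate`, `--kubelet-client-key`: when set, the apiserver calls the kubelet APIs over HTTPS; RBAC rules must be defined for the user of the certificate (the user of the kubernetes*.pem certificate above is kubernetes), otherwise calls to the kubelet API are rejected as unauthorized;

+ `--bind-address`: must not be `127.0.0.1`, otherwise the secure port 6443 cannot be reached from outside;

+ `--insecure-port=0`: disables the insecure port (8080);

+ `--service-cluster-ip-range`: the Service cluster IP range;

+ `--service-node-port-range`: the NodePort port range;

+ `--runtime-config=api/all=true`: enables all API versions, such as autoscaling/v2alpha1;

+ `--enable-bootstrap-token-auth`: enables token authentication for kubelet bootstrap;

+ `--apiserver-count=3`: the number of kube-apiserver instances running in the cluster. All instances serve requests at the same time (kube-apiserver does not use leader election); the count is used to maintain the endpoints of the `kubernetes` Service;

+ `User=k8s`: run the service as the k8s account;

## Create and distribute the kube-apiserver systemd unit file for each node

Substitute the variables in the template to create a systemd unit file for each node: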

``` bash

source /opt/k8s/bin/environment.sh

for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${NODE_IPS[i]}.service

done

ls kube-apiserver*.service

```

+ NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the matching IPs;

Distribute the generated systemd unit files:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

scp kube-apiserver-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-apiserver.service

done

```

+ The log directory must be created first;

+ The file is renamed to kube-apiserver.service;

Unit file after substitution: [kube-apiserver.service](https://github.com/opsnull/follow-me-install-kubernetes-cluster/blob/master/systemd/kube-apiserver.service)

## Start the kube-apiserver service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"

done

```

## Check the kube-apiserver status

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-apiserver |grep 'Active:'"

done

```

Make sure the state is `active (running)`; otherwise check the logs on the master node to find the cause:

``` bash

journalctl -u kube-apiserver

```

## Print the data kube-apiserver wrote to etcd

``` bash

source /opt/k8s/bin/environment.sh

ETCDCTL_API=3 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem \

get /registry/ --prefix --keys-only

```

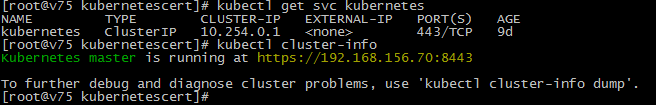
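The key listing can be long. A minimal sketch that condenses it into a per-resource count, assuming the keys follow the `/registry/<resource>/...` layout queried above; `summarize_registry_keys` is a hypothetical helper name:

```bash
# Count keys per resource type. Reads the output of
# `etcdctl get /registry/ --prefix --keys-only` on stdin.
# Lines that are not /registry/<resource>/... keys (e.g. blank lines) are skipped.
summarize_registry_keys() {
  awk -F/ '$2 == "registry" && $3 != "" { count[$3]++ }
           END { for (r in count) print count[r], r }' | sort -k2
}
```

It is meant to be used by appending `| summarize_registry_keys` to the etcdctl command above.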

## Check cluster information

``` bash

$ kubectl cluster-info

$ kubectl get all --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.254.0.1

$ kubectl get componentstatuses

```

7. Deploy a highly available kube-controller-manager cluster

[root@v75 con]# cat kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.156.75",

"192.168.156.76",

"192.168.156.77"

],

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "4Paradigm"

}

]

}

+ The hosts list contains the IPs of **all** kube-controller-manager nodes;

+ Both CN and O are system:kube-controller-manager; the built-in ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs.

Generate the certificate and private key:

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

```

Distribute the generated certificate and private key to all master nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager*.pem k8s@${node_ip}:/etc/kubernetes/cert/

done

```

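## Create and distribute the kubeconfig file

The kubeconfig file contains everything needed to access the apiserver: the apiserver address, the CA certificate, and the client's own certificate.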

``` bash

source /opt/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

```

Distribute the kubeconfig to all master nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.kubeconfig k8s@${node_ip}:/etc/kubernetes/

done

```

## Create and distribute the kube-controller-manager systemd unit file

``` bash

source /opt/k8s/bin/environment.sh

cat > kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/k8s/bin/kube-controller-manager \\

--port=0 \\

--secure-port=10252 \\

--bind-address=127.0.0.1 \\

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\

--service-cluster-ip-range=${SERVICE_CIDR} \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\

--experimental-cluster-signing-duration=8760h \\

--root-ca-file=/etc/kubernetes/cert/ca.pem \\

--service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \\

--leader-elect=true \\

--feature-gates=RotateKubeletServerCertificate=true \\

--controllers=*,bootstrapsigner,tokencleaner \\

--horizontal-pod-autoscaler-use-rest-clients=true \\

--horizontal-pod-autoscaler-sync-period=10s \\

--tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\

--tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\

--use-service-account-credentials=true \\

--alsologtostderr=true \\

--logtostderr=false \\

--log-dir=/var/log/kubernetes \\

--v=2

Restart=on-failure

RestartSec=5

User=k8s

[Install]

WantedBy=multi-user.target

EOF

```

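+ `--port=0`: disables the insecure http /metrics listener; `--address` then has no effect, while `--bind-address` still applies;

+ `--secure-port=10252`, `--bind-address=127.0.0.1`: serves https /metrics requests on port 10252 of the loopback interface only, as configured in the unit file above; set `--bind-address=0.0.0.0` to listen on all network interfaces instead;

+ `--kubeconfig`: path to the kubeconfig file that kube-controller-manager uses to connect to and authenticate against kube-apiserver;

+ `--cluster-signing-*-file`: the CA certificate and key used to sign certificates created through TLS Bootstrap;

+ `--experimental-cluster-signing-duration`: validity period of TLS Bootstrap certificates;

+ `--root-ca-file`: the CA certificate placed into each container's ServiceAccount, used to verify the kube-apiserver certificate;

+ `--service-account-private-key-file`: private key used to sign ServiceAccount tokens; it must pair with the public key given by kube-apiserver's `--service-account-key-file`;

+ `--service-cluster-ip-range`: the Service Cluster IP range; must match the flag of the same name on kube-apiserver;

+ `--leader-elect=true`: cluster mode with leader election enabled; the elected leader does the work while the other instances stand by;

+ `--feature-gates=RotateKubeletServerCertificate=true`: enables automatic rotation of kubelet server certificates;

+ `--controllers=*,bootstrapsigner,tokencleaner`: the list of enabled controllers; tokencleaner automatically removes expired Bootstrap tokens;

+ `--horizontal-pod-autoscaler-*`: custom metrics settings, supporting autoscaling/v2alpha1;

+ `--tls-cert-file`, `--tls-private-key-file`: the server certificate and key used when serving metrics over https;

+ `--use-service-account-credentials=true`: makes each controller access kube-apiserver with its own ServiceAccount credentials (see the permissions section below);

+ `User=k8s`: runs the service as the k8s account;

kube-controller-manager does not verify the client certificate of https metrics requests, so `--tls-ca-file` is not needed; that flag is also deprecated.

For the complete unit, see [kube-controller-manager.service](https://github.com/opsnull/follow-me-install-kubernetes-cluster/blob/master/systemd/kube-controller-manager.service)

Distribute the systemd unit file to all master nodes: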

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.service root@${node_ip}:/etc/systemd/system/

done

```

## kube-controller-manager permissions

The ClusterRole system:kube-controller-manager has **very limited permissions**: it can only create resources such as secrets and serviceaccounts. The permissions of the individual controllers are spread across the ClusterRoles system:controller:XXX.

The `--use-service-account-credentials=true` flag must be added to the kube-controller-manager startup parameters, so that the main controller creates a ServiceAccount XXX-controller for each controller.

The built-in ClusterRoleBinding system:controller:XXX then grants each XXX-controller ServiceAccount the permissions of the matching ClusterRole system:controller:XXX.

## Start the kube-controller-manager service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"

done

```

+ The log directory must be created first;

## Check the service status

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-controller-manager|grep Active"

done

```

Make sure the status is `active (running)`; otherwise, check the logs to find the cause:

``` bash

$ journalctl -u kube-controller-manager

```

View the current leader:

``` bash

$ kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

```

In this deployment, the current leader is node v76.

8. Deploy a highly available kube-scheduler cluster

[root@v75 sch]# cat kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.156.75",

"192.168.156.76",

"192.168.156.77"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-scheduler",

"OU": "4Paradigm"

}

]

}

+ The hosts list contains the IPs of **all** kube-scheduler nodes;

+ Both CN and O are system:kube-scheduler; the built-in ClusterRoleBinding system:kube-scheduler grants kube-scheduler the permissions it needs.

Generate the certificate and private key:

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

```

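## Create and distribute the kubeconfig file

The kubeconfig file contains everything needed to access the apiserver: the apiserver address, the CA certificate, and the client's own certificate.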

``` bash

source /opt/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

```

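+ The certificate and private key created in the previous step, together with the kube-apiserver address, are written into the kubeconfig file;

Distribute the kubeconfig to all master nodes: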

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.kubeconfig k8s@${node_ip}:/etc/kubernetes/

done

```

## Create and distribute the kube-scheduler systemd unit file

``` bash

cat > kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/k8s/bin/kube-scheduler \\

--address=127.0.0.1 \\

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--leader-elect=true \\

--alsologtostderr=true \\

--logtostderr=false \\

--log-dir=/var/log/kubernetes \\

--v=2

Restart=on-failure

RestartSec=5

User=k8s

[Install]

WantedBy=multi-user.target

EOF

```

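+ `--address`: accepts http /metrics requests on 127.0.0.1:10251; kube-scheduler does not yet support https requests;

+ `--kubeconfig`: path to the kubeconfig file that kube-scheduler uses to connect to and authenticate against kube-apiserver;

+ `--leader-elect=true`: cluster mode with leader election enabled; the elected leader does the work while the other instances stand by;

+ `User=k8s`: runs the service as the k8s account;

Distribute the systemd unit file to all master nodes: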

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.service root@${node_ip}:/etc/systemd/system/

done

```

## Start the kube-scheduler service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler"

done

```

+ The log directory must be created first;

## Check the service status

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-scheduler|grep Active"

done

```

Make sure the status is `active (running)`; otherwise, check the logs to find the cause:

``` bash

journalctl -u kube-scheduler

```

## View the exported metrics

Note: metrics must be queried on a kube-scheduler node itself.

kube-scheduler listens on 127.0.0.1:10251 and accepts http requests only.
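A minimal sketch of querying that endpoint, assuming curl is available on the node (it is among the dependencies installed earlier). `scheduler_metrics` and `filter_metrics` are hypothetical helper names, and no particular metric name is assumed:

```bash
# URL derived from the --address=127.0.0.1 flag and the 10251 port described above.
SCHEDULER_METRICS_URL=${SCHEDULER_METRICS_URL:-http://127.0.0.1:10251/metrics}

# Fetch the raw metrics text; must run on a kube-scheduler node.
scheduler_metrics() {
  curl -s "$SCHEDULER_METRICS_URL"
}

# Drop comment lines (# HELP / # TYPE) and keep samples whose name starts with PREFIX.
filter_metrics() {
  grep -v '^#' | grep "^$1"
}
```

On a scheduler node, `scheduler_metrics | head` prints the first lines, and `scheduler_metrics | filter_metrics <prefix>` narrows the output to one metric family.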

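## Test the high availability of the kube-scheduler cluster

Pick one or two master nodes, stop the kube-scheduler service on them, and check in the systemd logs whether another node acquires leadership.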
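Besides reading the logs, the holder of the lock can be read from the leader-election annotation on the kube-scheduler endpoints object. A minimal sketch, assuming the annotation value is a JSON string containing a `holderIdentity` field of the form `<hostname>_<id>`; `leader_identity` and `leader_host` are hypothetical helper names:

```bash
# Extract holderIdentity from `kubectl get endpoints ... -o yaml` output on stdin.
leader_identity() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p' | head -n1
}

# Reduce the identity to its hostname part (assumption: everything before the first "_").
leader_host() {
  local id
  id=$(leader_identity)
  printf '%s\n' "${id%%_*}"
}
```

Running `kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml | leader_host` before and after stopping the service on the current leader should show the hostname change.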

## View the current leader

``` bash

$ kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

```

In this deployment, the current leader is node v76.

9. Deploy the worker nodes

A Kubernetes worker node runs the following components:

+ docker

+ kubelet

+ kube-proxy

CentOS:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "yum install -y epel-release"

ssh root@${node_ip} "yum install -y conntrack ipvsadm ipset jq iptables curl sysstat libseccomp && /usr/sbin/modprobe ip_vs "

done

```

10. Deploy the docker component

wget https://download.docker.com/linux/static/stable/x86_64/docker-18.03.1-ce.tgz

tar -xvf docker-18.03.1-ce.tgz

Distribute the binaries to all worker nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker/docker* k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

```

## Create and distribute the systemd unit file

``` bash

cat > docker.service <<"EOF"

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/run/flannel/docker

ExecStart=/opt/k8s/bin/dockerd --log-level=error $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

```

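+ EOF is wrapped in double quotes so that bash does not expand variables in the here-document, such as $DOCKER_NETWORK_OPTIONS;

+ dockerd invokes other docker commands at runtime, such as docker-proxy, so the directory holding the docker binaries must be added to the PATH environment variable;

+ flanneld writes its network configuration to `/run/flannel/docker` at startup; before starting, dockerd reads the `DOCKER_NETWORK_OPTIONS` environment variable from that file and uses it to set the docker0 bridge subnet;

+ If several `EnvironmentFile` options are given, `/run/flannel/docker` must come last (so that docker0 uses the bip parameter generated by flanneld);

+ docker must run as the root user;

+ Starting with version 1.13, docker may set **the default policy of the iptables FORWARD chain to DROP**, which makes pinging Pod IPs on other Nodes fail. If that happens, set the policy to `ACCEPT` manually: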

``` bash

$ sudo iptables -P FORWARD ACCEPT

```

Also add the following command to `/etc/rc.local`, so that **the default policy of the iptables FORWARD chain does not revert to DROP** after a node reboot:

``` bash

/sbin/iptables -P FORWARD ACCEPT

```
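To see whether the workaround is needed on a given node, the current policy can be read from the first line of `iptables -S FORWARD`, which has the form `-P FORWARD <policy>`. A minimal sketch; `forward_policy` is a hypothetical helper name:

```bash
# Print the default policy of the FORWARD chain.
# Reads the output of `iptables -S FORWARD` on stdin.
forward_policy() {
  awk '$1 == "-P" && $2 == "FORWARD" { print $3; exit }'
}

# Intended use on a node (requires root):
#   [ "$(iptables -S FORWARD | forward_policy)" = "ACCEPT" ] || iptables -P FORWARD ACCEPT
```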

For the complete unit, see [docker.service](https://github.com/opsnull/follow-me-install-kubernetes-cluster/blob/master/systemd/docker.service)

Distribute the systemd unit file to all worker machines:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker.service root@${node_ip}:/etc/systemd/system/

done

```

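## Configure and distribute the docker configuration file

Use registry mirrors located in China to speed up image pulls, and raise the number of concurrent downloads (dockerd must be restarted for the change to take effect):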

``` bash

cat > docker-daemon.json <<EOF

{

"registry-mirrors": ["https://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"],

"max-concurrent-downloads": 20

}

EOF

```

Distribute the docker configuration file to all worker nodes:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/docker/"

scp docker-daemon.json root@${node_ip}:/etc/docker/daemon.json

done

```

## Start the docker service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl stop firewalld && systemctl disable firewalld"

ssh root@${node_ip} "/usr/sbin/iptables -F && /usr/sbin/iptables -X && /usr/sbin/iptables -F -t nat && /usr/sbin/iptables -X -t nat"

ssh root@${node_ip} "/usr/sbin/iptables -P FORWARD ACCEPT"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable docker && systemctl restart docker"

ssh root@${node_ip} 'for intf in /sys/devices/virtual/net/docker0/brif/*; do echo 1 > $intf/hairpin_mode; done'

ssh root@${node_ip} "sudo sysctl -p /etc/sysctl.d/kubernetes.conf"

done

```

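+ Stop firewalld (CentOS 7) or ufw (Ubuntu 16.04); otherwise duplicate iptables rules may be created;

+ Flush the old iptables rules and chains;

+ Enable hairpin mode on the virtual interfaces attached to the docker0 bridge;

## Check the service status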

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status docker|grep Active"

done

```

Make sure the status is `active (running)`; otherwise, check the logs to find the cause:

``` bash

$ journalctl -u docker

```

### Check the docker0 bridge

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "/usr/sbin/ip addr show flannel.1 && /usr/sbin/ip addr show docker0"

done

```
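The point of this check is that docker0 uses the address flanneld generated. A minimal sketch that reads the expected value from the options file, assuming the file contains a `--bip=<address>/<prefix>` argument inside `DOCKER_NETWORK_OPTIONS`; `flannel_bip` is a hypothetical helper name:

```bash
# Print the --bip value from the flanneld-generated options file
# (defaults to /run/flannel/docker, the file referenced by the docker unit).
flannel_bip() {
  sed -n 's/.*--bip=\([0-9./]*\).*/\1/p' "${1:-/run/flannel/docker}" | head -n1
}

# Intended comparison on a node:
#   flannel_bip
#   ip -o -4 addr show docker0 | awk '{ print $4 }'
# Both commands should print the same address/prefix.
```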

11. Deploy the kubelet component

## Create the kubelet bootstrap kubeconfig files

``` bash

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

# Create the token

export BOOTSTRAP_TOKEN=$(kubeadm token create \

--description kubelet-bootstrap-token \

--groups system:bootstrappers:${node_name} \

--kubeconfig ~/.kube/config)

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

done

```

+ The kubeconfig contains a token rather than a certificate; the certificate is issued later by kube-controller-manager.

List the tokens kubeadm created for each node:

``` bash

$ kubeadm token list --kubeconfig ~/.kube/config

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

k0s2bj.7nvw1zi1nalyz4gz 23h 2018-06-14T15:14:31+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:kube-node1

mkus5s.vilnjk3kutei600l 23h 2018-06-14T15:14:32+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:kube-node3

zkiem5.0m4xhw0jc8r466nk 23h 2018-06-14T15:14:32+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:kube-node2

```

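+ Tokens are valid for 1 day; once expired they can no longer be used and are removed by the tokencleaner of kube-controller-manager (if that controller is enabled);

+ After kube-apiserver accepts a kubelet's bootstrap token, it sets the request user to `system:bootstrap:<token-id>` and the group to `system:bootstrappers`;

The Secret associated with each token: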

``` bash

$ kubectl get secrets -n kube-system

NAME TYPE DATA AGE

bootstrap-token-k0s2bj bootstrap.kubernetes.io/token 7 1m

bootstrap-token-mkus5s bootstrap.kubernetes.io/token 7 1m

bootstrap-token-zkiem5 bootstrap.kubernetes.io/token 7 1m

default-token-99st7 kubernetes.io/service-account-token 3 2d

```
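The token listing and the Secret names above are linked by the token ID, the six characters before the dot. A minimal sketch of that mapping, assuming the standard bootstrap token format of six lowercase alphanumerics, a dot, then sixteen lowercase alphanumerics; all function names are hypothetical:

```bash
# Succeeds when the argument looks like a bootstrap token: <6 chars>.<16 chars>.
is_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# The token ID is the part before the dot.
token_id() {
  printf '%s\n' "${1%%.*}"
}

# Name of the Secret in kube-system that stores the token.
bootstrap_secret() {
  printf 'bootstrap-token-%s\n' "$(token_id "$1")"
}

# User name kube-apiserver assigns to requests authenticated with the token.
bootstrap_user() {
  printf 'system:bootstrap:%s\n' "$(token_id "$1")"
}
```

For the first token listed above, `bootstrap_secret k0s2bj.7nvw1zi1nalyz4gz` prints `bootstrap-token-k0s2bj`, which matches the first Secret in the listing.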

## Distribute the bootstrap kubeconfig files to all worker nodes

``` bash

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kubelet-bootstrap-${node_name}.kubeconfig k8s@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig

done

```

## Create and distribute the kubelet configuration file

Starting with v1.10, **some** kubelet parameters must be set in a configuration file; `kubelet --help` prints this hint:

DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag

Create the kubelet configuration template file:

``` bash

source /opt/k8s/bin/environment.sh

cat > kubelet.config.json.template <<EOF

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/cert/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "##NODE_IP##",

"port": 10250,

"readOnlyPort": 0,

"cgroupDriver": "cgroupfs",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletClientCertificate": true,

"RotateKubeletServerCertificate": true

},

"clusterDomain": "${CLUSTER_DNS_DOMAIN}",

"clusterDNS": ["${CLUSTER_DNS_SVC_IP}"]

}

EOF

```

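+ address: the API listen address; it must not be 127.0.0.1, otherwise kube-apiserver, heapster and others cannot call the kubelet API;

+ readOnlyPort=0: disables the read-only port (default 10255); equivalent to leaving it unspecified;

+ authentication.anonymous.enabled: set to false, so anonymous access to port 10250 is not allowed;

+ authentication.x509.clientCAFile: the CA certificate that signs client certificates; enables HTTPS client certificate authentication;

+ authentication.webhook.enabled=true: enables HTTPS bearer token authentication;

+ Requests (from kube-apiserver or other clients) that pass neither x509 certificate nor webhook authentication are rejected with Unauthorized;

+ authorization.mode=Webhook: kubelet uses the SubjectAccessReview API to ask kube-apiserver whether a given user or group is allowed to operate on a resource (RBAC);

+ featureGates.RotateKubeletClientCertificate, featureGates.RotateKubeletServerCertificate: rotate certificates automatically; the certificate validity period is determined by the --experimental-cluster-signing-duration flag of kube-controller-manager;

+ kubelet must run as the root account;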
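Because these settings decide who may reach port 10250, it can help to verify a rendered file before distributing it. A minimal grep-style sketch that checks three of the settings listed above; `kubelet_config_hardened` is a hypothetical helper, and it matches text patterns rather than parsing JSON properly:

```bash
# kubelet_config_hardened FILE
# Returns 0 when FILE disables anonymous access, disables the read-only port,
# and uses Webhook authorization; prints each failed check to stderr otherwise.
kubelet_config_hardened() {
  local flat rc=0
  # Strip whitespace so the patterns do not depend on formatting.
  flat=$(tr -d ' \t\n' < "$1")
  case $flat in
    *'"anonymous":{"enabled":false}'*) ;;
    *) echo "anonymous access is not disabled" >&2; rc=1 ;;
  esac
  case $flat in
    *'"readOnlyPort":0,'*|*'"readOnlyPort":0}'*) ;;
    *) echo "read-only port is not disabled" >&2; rc=1 ;;
  esac
  case $flat in
    *'"mode":"Webhook"'*) ;;
    *) echo "authorization mode is not Webhook" >&2; rc=1 ;;
  esac
  return $rc
}
```

Since jq is among the packages installed earlier, a stricter variant could query the fields with jq instead of pattern matching.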

Create and distribute the kubelet configuration file for each node:

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

sed -e "s/##NODE_IP##/${node_ip}/" kubelet.config.json.template > kubelet.config-${node_ip}.json

scp kubelet.config-${node_ip}.json root@${node_ip}:/etc/kubernetes/kubelet.config.json

done

```

Create the kubelet systemd unit file template:

``` bash

cat > kubelet.service.template <<EOF

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/opt/k8s/bin/kubelet \\

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \\

--cert-dir=/etc/kubernetes/cert \\

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\

--config=/etc/kubernetes/kubelet.config.json \\

--hostname-override=##NODE_NAME## \\

--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest \\

--allow-privileged=true \\

--alsologtostderr=true \\

--logtostderr=false \\

--log-dir=/var/log/kubernetes \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

```

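+ If `--hostname-override` is set, `kube-proxy` must be given the same option; otherwise the Node cannot be found;

+ `--bootstrap-kubeconfig`: points to the bootstrap kubeconfig file; kubelet uses the user name and token in it to send the TLS Bootstrapping request to kube-apiserver;

+ After Kubernetes approves the kubelet's CSR, the certificate and private key files are created in the `--cert-dir` directory and then referenced from the `--kubeconfig` file;

Create and distribute the kubelet systemd unit file for each node: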

``` bash

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service

scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service

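done

```

## Bootstrap Token Auth and granting permissions

At startup, kubelet checks whether the file given by `--kubeconfig` exists; if it does not, kubelet uses `--bootstrap-kubeconfig` to send a certificate signing request (CSR) to kube-apiserver.

After receiving the CSR, kube-apiserver authenticates the token in it (created earlier with kubeadm); on success it sets the request user to `system:bootstrap:<token-id>` and the group to `system:bootstrappers`.

By default, this user and group are not allowed to create CSRs, so kubelet fails to start with the following errors in the log: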

``` bash

$ sudo journalctl -u kubelet -a |grep -A 2 'certificatesigningrequests'

May 06 06:42:36 kube-node1 kubelet[26986]: F0506 06:42:36.314378 26986 server.go:233] failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "system:bootstrap:lemy40" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope

May 06 06:42:36 kube-node1 systemd[1]: kubelet.service: Main process exited, code=exited, status=255/n/a

May 06 06:42:36 kube-node1 systemd[1]: kubelet.service: Failed with result 'exit-code'.

```

The fix is to create a clusterrolebinding that binds the group system:bootstrappers to the clusterrole system:node-bootstrapper:

``` bash

$ kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

```

## Start the kubelet service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/kubelet"

ssh root@${node_ip} "/usr/sbin/swapoff -a"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"

done

```

+ Swap must be turned off, otherwise kubelet fails to start;

+ The working and log directories must be created first;
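Once kubelet is running, each node submits a CSR that has to be approved before its certificate is issued. A minimal sketch for the case where CSRs are not approved automatically, assuming `kubectl get csr` prints a header row and the condition in the last column; `pending_csrs` is a hypothetical helper name:

```bash
# Print the names of CSRs whose condition is Pending.
# Reads the output of `kubectl get csr` on stdin.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Intended use on a machine with admin kubectl access:
#   kubectl get csr | pending_csrs
#   kubectl certificate approve <csr-name>
```

Reviewing the requestor column before approving is advisable, since approval grants the node a client certificate.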

12. Deploy the kube-proxy component

[root@v75 kubeproxy]# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

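+ CN: sets the User of this certificate to `system:kube-proxy`;

+ The predefined ClusterRoleBinding `system:node-proxier` binds the User `system:kube-proxy` to the ClusterRole `system:node-proxier`, which grants permission to call the proxy-related APIs of `kube-apiserver`;

+ The certificate is only used by kube-proxy as a client certificate, so the hosts field is left empty;

Generate the certificate and private key: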

``` bash

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

```

## Create and distribute the kubeconfig file

``` bash

source /opt/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

```

+ `--embed-certs=true`: embeds the contents of ca.pem and kube-proxy.pem into the generated kube-proxy.kubeconfig file (without it, only the certificate file paths are written);

Distribute the kubeconfig file:

``` bash

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.kubeconfig k8s@${node_name}:/etc/kubernetes/

done

```

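## Create the kube-proxy configuration file

Starting with v1.10, **some** kube-proxy parameters can be set in a configuration file. The file can be generated with the `--write-config-to` option, or written by following the kubeproxyconfig type definitions: https://github.com/kubernetes/kubernetes/blob/master/pkg/proxy/apis/kubeproxyconfig/types.go

Create the kube-proxy config file template: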

``` bash

cat > kube-proxy.config.yaml.template <<EOF

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: ##NODE_IP##

clientConnection:

  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig

clusterCIDR: ${CLUSTER_CIDR}

healthzBindAddress: ##NODE_IP##:10256

hostnameOverride: ##NODE_NAME##

kind: KubeProxyConfiguration

metricsBindAddress: ##NODE_IP##:10249

mode: "ipvs"

EOF

```

+ `bindAddress`: the listen address;

+ `clientConnection.kubeconfig`: the kubeconfig file used to connect to the apiserver;

+ `clusterCIDR`: kube-proxy uses `--cluster-cidr` to tell cluster-internal traffic from external traffic; it performs SNAT on requests to Service IPs only when `--cluster-cidr` or `--masquerade-all` is specified;

+ `hostnameOverride`: must match the value used by kubelet; otherwise kube-proxy cannot find the Node after startup and creates no ipvs rules;

+ `mode`: uses ipvs mode;
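Since a mismatched `hostnameOverride` fails silently (no ipvs rules are created), it may be worth comparing the two values on a node. A minimal sketch, assuming the kubelet unit contains a `--hostname-override=<name>` argument and the kube-proxy config a top-level `hostnameOverride:` key, as in the templates in this document; the function names are hypothetical:

```bash
# Print the --hostname-override value from a kubelet unit file.
kubelet_hostname() {
  sed -n 's/.*--hostname-override=\([^ \\]*\).*/\1/p' "$1" | head -n1
}

# Print the hostnameOverride value from a kube-proxy config file.
proxy_hostname() {
  sed -n 's/^hostnameOverride:[[:space:]]*//p' "$1" | head -n1
}

# hostnames_match KUBELET_UNIT PROXY_CONFIG
# Succeeds only when both values are present and identical.
hostnames_match() {
  local k p
  k=$(kubelet_hostname "$1")
  p=$(proxy_hostname "$2")
  [ -n "$k" ] && [ "$k" = "$p" ]
}
```

On a node this would be called as `hostnames_match /etc/systemd/system/kubelet.service /etc/kubernetes/kube-proxy.config.yaml`.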

Create and distribute the kube-proxy configuration file for each node:

``` bash

source /opt/k8s/bin/environment.sh

for (( i=0; i < 3; i++ ))

do

echo ">>> ${NODE_NAMES[i]}"

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-proxy.config.yaml.template > kube-proxy-${NODE_NAMES[i]}.config.yaml

scp kube-proxy-${NODE_NAMES[i]}.config.yaml root@${NODE_NAMES[i]}:/etc/kubernetes/kube-proxy.config.yaml

done

```

Create and distribute the kube-proxy systemd unit file

[root@v75 ~]# cat /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/opt/k8s/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.config.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Call chain: when kube-proxy.service starts, /opt/k8s/bin/kube-proxy reads the configuration file /etc/kubernetes/kube-proxy.config.yaml, which in turn references /etc/kubernetes/kube-proxy.kubeconfig.

Distribute the kube-proxy systemd unit file:

``` bash

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.service root@${node_name}:/etc/systemd/system/

done

```

Distribute the kube-proxy binary:

``` bash

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy root@${node_name}:/opt/k8s/bin/

done

```

## Start the kube-proxy service

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/kube-proxy"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"

done

```

+ The working and log directories must be created first;

## Check the startup result

``` bash

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-proxy|grep Active"

done

```

Make sure the status is `active (running)`; otherwise, check the logs to find the cause:

``` bash

journalctl -u kube-proxy

```

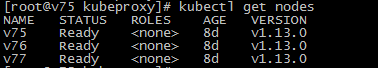

13. Verify cluster functionality

kubectl get nodes
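A minimal sketch for turning that listing into a pass/fail check, assuming the default column layout in which the second column is STATUS; `not_ready_nodes` is a hypothetical helper name:

```bash
# Print the names of nodes whose STATUS is anything other than exactly "Ready".
# Reads the output of `kubectl get nodes` on stdin.
# Note: a cordoned node ("Ready,SchedulingDisabled") is reported as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Intended use:
#   kubectl get nodes | not_ready_nodes
# Empty output means every node reports Ready.
```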