Setting Up a MongoDB Replica Set on CentOS 7

Test environment

Operating system: CentOS Linux release 7.3.1611 (Core)

Database: MongoDB 3.4.9-1.el7

mongo1 IP: 192.168.58.129

mongo2 IP: 192.168.58.130

mongo3 IP: 192.168.58.131

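How replica sets work

A replica set in MongoDB is a group of mongod processes that maintain the same data set. Replica sets provide redundancy and high availability, and are the basis for all production deployments.

Redundancy and data availability

Replication provides redundancy and increases data availability. With multiple copies of the data on different database servers, replication provides a level of fault tolerance against the loss of a single database server.

In some cases, replication can increase read capacity, because clients can send read operations to different servers. Maintaining copies of the data in different data centers can increase data locality and availability for distributed applications. You can also maintain additional copies for dedicated purposes, such as disaster recovery, reporting, or backup.

Replication in MongoDB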

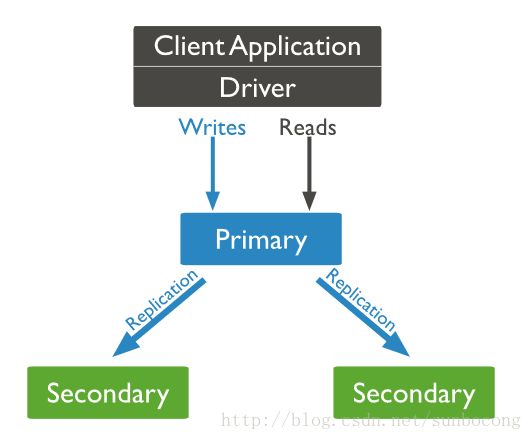

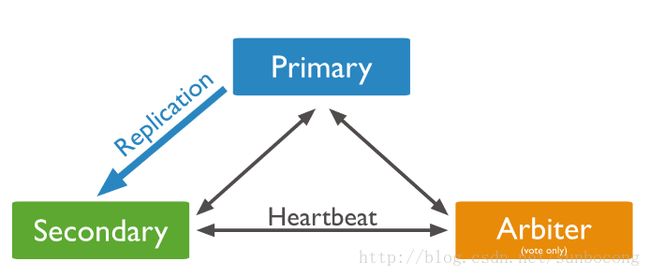

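A replica set is a group of mongod instances that maintain the same data set. It contains several data-bearing nodes and, optionally, one arbiter node. Of the data-bearing nodes, exactly one member is the primary; the others are secondaries.

In a replica set, the primary is the only node that accepts write requests. MongoDB applies writes on the primary and records them in the primary's oplog. The secondaries copy the oplog and apply the same operations to their own data sets.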
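The write path described above can be pictured with a toy model. This is only a sketch and not how MongoDB is implemented internally: the function names (`createPrimary`, `createSecondary`, `write`, `sync`) and the entry format are made up for illustration, and the code is meant to be run with Node.js.

```javascript
// Toy model of oplog-based replication (illustrative only, not MongoDB internals).
// The primary appends every write to an ordered log; each secondary remembers
// how many entries it has applied and replays the rest on its own copy.

function createPrimary() {
  return { data: {}, oplog: [] };
}

function createSecondary() {
  return { data: {}, applied: 0 };
}

// Apply a write on the primary and record it in the primary's oplog.
function write(primary, key, value) {
  primary.data[key] = value;
  primary.oplog.push({ op: "set", key: key, value: value });
}

// Copy the entries the secondary has not seen yet and apply them in order.
// Returns the number of entries that were applied.
function sync(secondary, primary) {
  var pending = primary.oplog.slice(secondary.applied);
  pending.forEach(function (entry) {
    secondary.data[entry.key] = entry.value;
  });
  secondary.applied += pending.length;
  return pending.length;
}

var primary = createPrimary();
var secondaries = [createSecondary(), createSecondary()];

write(primary, "name", "super sbc");
write(primary, "role", "test");

secondaries.forEach(function (secondary) {
  sync(secondary, primary);
});

console.log(JSON.stringify(secondaries[0].data));
```

Because each secondary only tracks its position in the log, a secondary that was offline for a while can catch up by replaying the entries it missed, as long as they are still in the primary's oplog.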

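In the three-member replica set shown below, the primary receives all writes, and the secondaries copy the oplog and apply its operations to their own data sets.

Any member of a replica set can serve read requests. By default, however, applications direct their reads to the primary.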

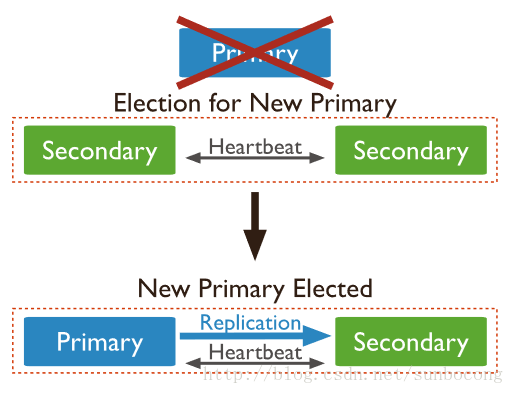
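Since reads go to the primary by default, a client has to opt in to reading from secondaries. One common way is the `readPreference` option in the connection string. The sketch below only builds such a string; `buildReplicaSetUri` is a hypothetical helper name, the hosts and the set name `sbc` are the ones used in this article, and `secondaryPreferred` is just one possible read preference. Reads from secondaries may return slightly stale data, so whether this is appropriate depends on the application.

```javascript
// Build a replica set connection string (runs under Node.js).
// replicaSet and readPreference are standard MongoDB connection string
// options; the helper itself is only an illustration.
function buildReplicaSetUri(hosts, dbName, setName, readPreference) {
  return "mongodb://" + hosts.join(",") + "/" + dbName +
    "?replicaSet=" + encodeURIComponent(setName) +
    "&readPreference=" + encodeURIComponent(readPreference);
}

var hosts = [
  "192.168.58.129:27017",
  "192.168.58.130:27017",
  "192.168.58.131:27017"
];

var uri = buildReplicaSetUri(hosts, "test", "sbc", "secondaryPreferred");
console.log(uri);
// prints mongodb://192.168.58.129:27017,192.168.58.130:27017,192.168.58.131:27017/test?replicaSet=sbc&readPreference=secondaryPreferred
```

Listing all three members lets a driver find the current primary even if one of the listed hosts is down.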

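A replica set can have at most one primary. Once the current primary becomes unavailable, the replica set elects a new one.

The secondaries replicate the primary's oplog and apply the operations to their own data sets, so that their data reflects the primary's. If the primary becomes unavailable, the remaining secondaries automatically elect a new primary.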
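An election only succeeds when a strict majority of the voting members can still reach each other, which is why three members are the usual minimum. The arithmetic can be sketched as follows; `majority` and `faultTolerance` are hypothetical helper names, and the code assumes every member has one vote.

```javascript
// Votes needed to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voting members that can be lost while an election can still succeed.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

[3, 4, 5].forEach(function (n) {
  console.log(n + " members: majority " + majority(n) +
    ", tolerates " + faultTolerance(n) + " failure(s)");
});
// prints:
// 3 members: majority 2, tolerates 1 failure(s)
// 4 members: majority 3, tolerates 1 failure(s)
// 5 members: majority 3, tolerates 2 failure(s)
```

For the three-node set built in this article, losing one node still leaves two votes, so a new primary can be elected. Adding a fourth member would not increase the number of failures the set can survive.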

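Preparing the base environment

In this experiment, mongo1 will be the primary and mongo2 and mongo3 will be the secondaries of the replica set. mongo1 is assumed to already hold data.

Creating test data on mongo1

Connect to the database on mongo1,

create a collection named test, and insert one document into it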

[root@localhost ~]# mongo

MongoDB shell version v3.4.10

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 3.4.10

Server has startup warnings:

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten]

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten]

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten]

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten]

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-11-16T23:21:29.868-0800 I CONTROL [initandlisten]

> use test

switched to db test

> db.test.insert({"name":"super sbc"})

WriteResult({ "nInserted" : 1 })

> show dbs

admin 0.000GB

local 0.000GB

test 0.000GB

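Deleting all databases on mongo2 and mongo3

Connect to mongo2 and mongo3 in turn.

Delete all databases (including admin and local), then shut down mongod so that it can be restarted as a replica set member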

[root@localhost ~]# mongo

MongoDB shell version v3.4.10

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 3.4.10

Server has startup warnings:

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten]

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten]

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten]

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten]

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-11-16T22:57:33.260-0800 I CONTROL [initandlisten]

> use admin

switched to db admin

> db.shutdownServer()

server should be down...

2017-11-16T23:50:42.453-0800 I NETWORK [thread1] trying reconnect to 127.0.0.1:27017 (127.0.0.1) failed

2017-11-16T23:50:42.453-0800 W NETWORK [thread1] Failed to connect to 127.0.0.1:27017, in(checking socket for error after poll), reason: Connection refused

2017-11-16T23:50:42.453-0800 I NETWORK [thread1] reconnect 127.0.0.1:27017 (127.0.0.1) failed failed

> exit

bye
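The transcript above shows only the shutdown, not the deletion itself. One possible way to empty a standalone node before shutting it down is sketched below. `dropAllDatabases` and `createStubConnection` are hypothetical helpers; the function expects an object that offers `getDBNames()` and `getDB(name)`, which the legacy mongo shell's connection object (`db.getMongo()`) provides. It is an assumption that the server allows dropping admin and local in standalone mode on the version in use. A commonly used alternative is to stop mongod and empty its data directory.

```javascript
// Drop every database reachable through a connection object.
// DESTRUCTIVE when used against a real server: only for nodes whose data
// is meant to be thrown away (here: mongo2 and mongo3).
function dropAllDatabases(conn) {
  var dropped = [];
  conn.getDBNames().forEach(function (name) {
    conn.getDB(name).dropDatabase();
    dropped.push(name);
  });
  return dropped;
}

// Intended use inside the mongo shell on mongo2 and mongo3:
//   dropAllDatabases(db.getMongo())

// Stub connection (hypothetical) so the sketch can be tried under Node.js
// without touching a server.
function createStubConnection(names) {
  var remaining = names.slice();
  return {
    getDBNames: function () {
      return remaining.slice();
    },
    getDB: function (name) {
      return {
        dropDatabase: function () {
          remaining = remaining.filter(function (n) { return n !== name; });
          return { dropped: name, ok: 1 };
        }
      };
    }
  };
}

var stub = createStubConnection(["admin", "local", "test"]);
var droppedNames = dropAllDatabases(stub);
console.log(droppedNames.join(", "));
```

After the databases are gone, `show dbs` on the node should no longer list any data, and mongod can be shut down as in the transcript.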

Creating the replica set

Start the mongod service on mongo1, mongo2, and mongo3, passing sbc as the replica set name

mongo1:

[root@localhost ~]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 73721

child process started successfully, parent exiting

mongo2:

[root@localhost ~]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 96907

child process started successfully, parent exiting

mongo3:

[root@localhost ~]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 96105

child process started successfully, parent exiting

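Configuring the replica set

The replica set is configured from mongo1.

Defining the config object

Open a mongo shell on mongo1 and define the configuration object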

[root@localhost ~]# mongo

MongoDB shell version v3.4.10

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 3.4.10

Server has startup warnings:

2017-11-19T23:36:34.302-0800 I CONTROL [initandlisten]

2017-11-19T23:36:34.302-0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2017-11-19T23:36:34.302-0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2017-11-19T23:36:34.302-0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2017-11-19T23:36:34.302-0800 I CONTROL [initandlisten]

2017-11-19T23:36:34.302-0800 I CONTROL [initandlisten]

2017-11-19T23:36:34.303-0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2017-11-19T23:36:34.303-0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-11-19T23:36:34.303-0800 I CONTROL [initandlisten]

2017-11-19T23:36:34.303-0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2017-11-19T23:36:34.303-0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-11-19T23:36:34.303-0800 I CONTROL [initandlisten]

> config = {

... "_id" : "sbc",

... "members" : [

... {"_id" : 0, "host" : "192.168.58.129:27017"},

... {"_id" : 1, "host" : "192.168.58.130:27017"},

... {"_id" : 2, "host" : "192.168.58.131:27017"}

... ]

... }

{

"_id" : "sbc",

"members" : [

{

"_id" : 0,

"host" : "192.168.58.129:27017"

},

{

"_id" : 1,

"host" : "192.168.58.130:27017"

},

{

"_id" : 2,

"host" : "192.168.58.131:27017"

}

]

}
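Typing the member list by hand is easy to get wrong, for example by repeating an `_id`. The same object can be generated from a list of hosts, as in the sketch below. `buildReplSetConfig` is a hypothetical helper name; the field names `_id`, `members`, and `host` are the ones used in the config object above.

```javascript
// Build a replica set configuration object from a set name and a host list.
// Each member gets its position in the list as its _id.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName,
    members: hosts.map(function (host, index) {
      return { _id: index, host: host };
    })
  };
}

var config = buildReplSetConfig("sbc", [
  "192.168.58.129:27017",
  "192.168.58.130:27017",
  "192.168.58.131:27017"
]);

console.log(JSON.stringify(config, null, 2));

// In the mongo shell, the resulting object could be passed to
// rs.initiate(config) in the same way as the hand-written one.
```

The function uses only plain JavaScript, so it can be tried under Node.js first and then pasted into the mongo shell.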

Initializing the replica set

Initialize the replica set from the mongo shell on mongo1

> rs.initiate(config)

{ "ok" : 1 }

Checking the replica set status

Check the replica set status from the mongo shell on mongo1

sbc:OTHER> rs.status()

{

"set" : "sbc",

"date" : ISODate("2017-11-20T07:47:25.901Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.58.129:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 653,

"optime" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-11-20T07:47:16Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1511163995, 1),

"electionDate" : ISODate("2017-11-20T07:46:35Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.58.130:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 60,

"optime" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-11-20T07:47:16Z"),

"optimeDurableDate" : ISODate("2017-11-20T07:47:16Z"),

"lastHeartbeat" : ISODate("2017-11-20T07:47:25.220Z"),

"lastHeartbeatRecv" : ISODate("2017-11-20T07:47:24.519Z"),

"pingMs" : NumberLong(1),

"syncingTo" : "192.168.58.129:27017",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.58.131:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 60,

"optime" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1511164036, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-11-20T07:47:16Z"),

"optimeDurableDate" : ISODate("2017-11-20T07:47:16Z"),

"lastHeartbeat" : ISODate("2017-11-20T07:47:25.207Z"),

"lastHeartbeatRecv" : ISODate("2017-11-20T07:47:24.448Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.58.129:27017",

"configVersion" : 1

}

],

"ok" : 1

}

The output shows that mongo1 is the primary and that mongo2 and mongo3 are secondaries. The replica set is healthy and the setup is complete.
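Reading the full status document by eye gets tedious, so the check above can be condensed into a small helper. This is a sketch only: `summarizeReplSetStatus` is a hypothetical name, it relies on the `members`, `name`, `health`, and `stateStr` fields visible in the output above, and the sample document is a trimmed copy of that output.

```javascript
// Condense an rs.status()-style document into primary / secondaries / unhealthy.
// Members that are healthy but in another state (for example still starting up)
// are not listed in any of the three groups.
function summarizeReplSetStatus(status) {
  var summary = { primary: null, secondaries: [], unhealthy: [] };
  status.members.forEach(function (member) {
    if (member.health !== 1) {
      summary.unhealthy.push(member.name);
    } else if (member.stateStr === "PRIMARY") {
      summary.primary = member.name;
    } else if (member.stateStr === "SECONDARY") {
      summary.secondaries.push(member.name);
    }
  });
  return summary;
}

// Trimmed copy of the status output shown above.
var sampleStatus = {
  set: "sbc",
  members: [
    { _id: 0, name: "192.168.58.129:27017", health: 1, stateStr: "PRIMARY" },
    { _id: 1, name: "192.168.58.130:27017", health: 1, stateStr: "SECONDARY" },
    { _id: 2, name: "192.168.58.131:27017", health: 1, stateStr: "SECONDARY" }
  ]
};

var summary = summarizeReplSetStatus(sampleStatus);
console.log(JSON.stringify(summary));

// In the mongo shell the helper could be applied to the live status:
//   summarizeReplSetStatus(rs.status())
```

A healthy three-node set should report one primary, two secondaries, and an empty `unhealthy` list.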

Problems encountered during setup

cannot initiate set

Initializing the replica set on mongo1 returned the error "'192.168.58.130:27017' has data already, cannot initiate set."

> rs.initiate(config)

{

"ok" : 0,

"errmsg" : "'192.168.58.130:27017' has data already, cannot initiate set.",

"code" : 110,

"codeName" : "CannotInitializeNodeWithData"

}

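The cause turned out to be existing data on mongo2 and mongo3. When building a replica set, the secondaries must be completely empty; even the admin and local databases have to be deleted.

exited with error number 100

Starting mongod failed with "child process failed, exited with error number 100"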

[root@localhost ~]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 15101

ERROR: child process failed, exited with error number 100

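The cause turned out to be that MongoDB had previously been shut down uncleanly and had left a stale mongod.lock file behind. Deleting the file fixed it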

[root@localhost ~]# rm /var/lib/mongo/mongod.lock

rm: remove regular file ‘/var/lib/mongo/mongod.lock’? y

[root@localhost mongo]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 15215

child process started successfully, parent exiting

exited with error number 48

Starting mongod failed with "child process failed, exited with error number 48"

[root@localhost ~]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 15129

ERROR: child process failed, exited with error number 48

Check the running processes with ps

[root@localhost mongo]# ps -ef|grep mongo

root 14905 1 0 23:22 ? 00:00:06 mongod -f /etc/mongod.conf --fork

root 15212 12169 0 23:42 pts/0 00:00:00 grep --color=auto mongo

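The cause is again that MongoDB had not been shut down properly: the earlier mongod process (PID 14905), started without the replica set option, is still running.

Stopping that process with kill fixed the problem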

[root@localhost mongo]# kill -2 14905

[root@localhost mongo]# mongod --replSet sbc -f /etc/mongod.conf --fork

about to fork child process, waiting until server is ready for connections.

forked process: 15215

child process started successfully, parent exiting
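The two startup failures above can be kept as a small lookup table for next time. The sketch below is only a memo: `EXIT_CODE_HINTS` and `describeExitCode` are hypothetical names, the general meanings are paraphrased from my understanding of MongoDB's documented exit codes, and the specific causes are the ones found in this article. Other causes are possible, so the mongod log remains the place to confirm.

```javascript
// Hints for the mongod exit codes met in this article (runs under Node.js).
var EXIT_CODE_HINTS = {
  48: "mongod could not start listening for incoming connections; " +
      "here an older mongod process was still holding the port",
  100: "mongod hit an uncaught exception during startup; " +
       "here a stale mongod.lock file was left from an unclean shutdown"
};

// Return the recorded hint, or a generic pointer to the log for unknown codes.
function describeExitCode(code) {
  if (Object.prototype.hasOwnProperty.call(EXIT_CODE_HINTS, code)) {
    return EXIT_CODE_HINTS[code];
  }
  return "no hint recorded; check the mongod log file";
}

[48, 100, 1].forEach(function (code) {
  console.log("exit " + code + ": " + describeExitCode(code));
});
```

In both cases the fix was the one shown in the transcripts: stop the old process or remove the stale lock file, then start mongod again with the replica set option.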

Reference: the official MongoDB documentation

Reference: the MongoDB Chinese community