Designing a Simple Player with FFmpeg + Qt

Contents

- Purpose

- Development Preparation

- Development Environment

- Development Language

- Framework

- Purpose of This Project

- Code

- Code Preview

- audio.c

- video.c

Purpose

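If you have questions, you are welcome to discuss them in the QQ group (Advanced Audio/Video Development Exchange Group 3, group number 782508536).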

This article aims to give readers a first working grasp of the following:

- The basic architecture of a media player

- The commonly used structures:

  - AVFormatContext

  - AVCodecContext

  - AVCodec

  - AVFrame

  - AVPacket

  - AVStream

- The basic principle of audio/video synchronization

Development Preparation

Development Environment

- Windows 7

- Qt 5.9.2 + Qt Creator 4.4.1

- Third-party libraries

  - FFmpeg: reads the stream and decodes it

  - SDL2: displays the video

  - PortAudio: plays the audio

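Development Language

- C/C++: the demo is written mainly in C style, but its threads are created with C++11 facilities; later development will be mainly in C++.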

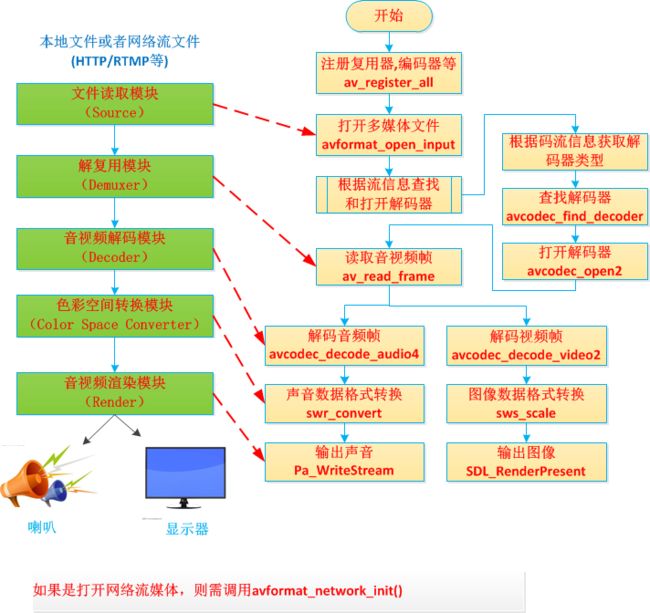

Framework

Thread layout

- The main loop reads the data

- The audio thread decodes and plays the sound

- The video thread decodes and displays the video
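A minimal sketch of this thread layout, assuming C++11 std::thread as in the demo. FakePacket and SimpleQueue are hypothetical stand-ins for AVPacket and the demo's PacketQueue, and "decoding" is reduced to counting bytes. The main loop dispatches each packet by stream index and, when the input ends, pushes a zero-length packet into each queue, which is the end-of-input convention this project uses.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for AVPacket: only the fields this sketch needs.
struct FakePacket {
    int streamIndex;
    int size;   // size == 0 marks end of stream, as in the demo
};

// Hypothetical minimal blocking queue (not the demo's PacketQueue).
class SimpleQueue {
public:
    void Put(const FakePacket &pkt) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            packets_.push_back(pkt);
        }
        cond_.notify_one();
    }
    FakePacket Take() {   // blocks until a packet is available
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return !packets_.empty(); });
        FakePacket pkt = packets_.front();
        packets_.pop_front();
        return pkt;
    }
private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::deque<FakePacket> packets_;
};

// Decode-thread body: consume packets until the zero-length EOF packet arrives.
static void DecodeLoop(SimpleQueue *queue, int *decodedBytes) {
    for (;;) {
        FakePacket pkt = queue->Take();
        if (pkt.size == 0)
            break;                     // end of stream
        *decodedBytes += pkt.size;     // a real thread would decode and render here
    }
}

// Main loop: start both decode threads, dispatch packets by stream index,
// then send one EOF packet per queue and wait for the threads to finish.
static void RunPlayer(const std::vector<FakePacket> &input, int audioIndex, int videoIndex,
                      int *audioBytes, int *videoBytes) {
    SimpleQueue audioQueue, videoQueue;
    *audioBytes = 0;
    *videoBytes = 0;
    std::thread audioThread(DecodeLoop, &audioQueue, audioBytes);
    std::thread videoThread(DecodeLoop, &videoQueue, videoBytes);

    for (const FakePacket &pkt : input) {
        assert(pkt.size > 0);          // size 0 is reserved for EOF
        if (pkt.streamIndex == audioIndex)
            audioQueue.Put(pkt);
        else if (pkt.streamIndex == videoIndex)
            videoQueue.Put(pkt);
        // packets of other streams (e.g. subtitles) are dropped
    }
    audioQueue.Put(FakePacket{audioIndex, 0});
    videoQueue.Put(FakePacket{videoIndex, 0});

    audioThread.join();
    videoThread.join();
}
```

In the demo each decode thread also stops on its own once it has taken the EOF packet and its queue is empty; joining here simply makes the sketch's shutdown deterministic.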

File layout

main.c: performs initialization, reads the stream and dispatches the packets

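audio.c: audio decoding and playback

- AudioInit: initializes audio

- AudioClose: releases resources

- AudioDecodeThread: audio decoding and playback thread

- AudioPlay: plays sound

- AudioPacketPush: queues an undecoded audio packet

- AudioPacketSize: total size of the audio data not yet decoded

- AudioSetTimeBase: sets the audio time base (it depends on the stream's clock, e.g. 1/90 kHz for TS; another common value is 1/1000)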
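The stored time base is a double in seconds per pts tick, and the decode threads turn a pts into milliseconds with baseTime * pts * 1000. A small sketch of that conversion with hypothetical helper names, in two forms: the double form the demo uses, rounded to the nearest millisecond instead of truncated, and an exact integer form for a rational time base num/den such as 1/90000 (TS) or 1/1000.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: baseTime * pts * 1000, rounded to the nearest millisecond
// so floating-point error cannot shave off a millisecond.
static int64_t PtsToMsDouble(int64_t pts, double timeBase) {
    return std::llround(static_cast<double>(pts) * timeBase * 1000.0);
}

// Hypothetical helper: exact integer conversion for a rational time base num/den.
// The result is truncated toward zero.
static int64_t PtsToMsRational(int64_t pts, int num, int den) {
    assert(den > 0);
    return pts * num * 1000 / den;
}
```

For example, a TS pts of 90000 at 1/90000 is 1000 ms, and one 29.97 fps frame step (3003 ticks) is about 33 ms. FFmpeg's av_rescale_q(pts, st->time_base, AVRational{1, 1000}) performs the rational conversion with round-to-nearest.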

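video.c: video decoding and display

- VideoInit: initializes video

- VideoClose: releases resources

- VideoDecodeThread: video decoding and display thread

- VideoDisplay: displays a frame

- VideoPacketPush: queues an undecoded video packet

- VideoPacketSize: total size of the video data not yet decoded

- VideoGetFirstFrame: whether the first frame has been decoded

- VideoSetTimeBase: sets the video time base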
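VideoDisplay converts each decoded frame with sws_scale into a YUV420P frame whose three planes share one contiguous buffer, then uploads it into an SDL_PIXELFORMAT_IYUV texture (also planar: Y, then U, then V). A sketch of that buffer layout for a row alignment of 1, with hypothetical names: both chroma planes are subsampled by 2 horizontally and vertically, so a picture with even dimensions needs width * height * 3 / 2 bytes.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of a contiguous YUV420P (I420/IYUV) buffer without row padding.
struct Yuv420pLayout {
    int yPitch, uvPitch;        // bytes per row of the Y plane and of each chroma plane
    size_t uOffset, vOffset;    // where the U and V planes start in the buffer
    size_t totalSize;           // bytes needed for the whole picture
};

static Yuv420pLayout ComputeYuv420pLayout(int width, int height) {
    assert(width > 0 && height > 0);
    Yuv420pLayout l;
    int chromaW = (width + 1) / 2;    // chroma is subsampled 2x horizontally...
    int chromaH = (height + 1) / 2;   // ...and 2x vertically, rounded up for odd sizes
    l.yPitch = width;
    l.uvPitch = chromaW;
    l.uOffset = (size_t)width * height;
    l.vOffset = l.uOffset + (size_t)chromaW * chromaH;
    l.totalSize = l.vOffset + (size_t)chromaW * chromaH;
    return l;
}
```

For 640x480 this gives 460800 bytes, the same value av_image_get_buffer_size(AV_PIX_FMT_YUV420P, 640, 480, 1) reports. Because the planes are contiguous with these pitches, uploading from data[0] with linesize[0] fills the whole IYUV texture; frames whose planes are separate allocations would need SDL_UpdateYUVTexture, which takes each plane and pitch explicitly.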

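avpacket_queue.c: audio and video queues that store the data before decoding

- PacketQueueInit: initializes a queue

- PacketQueueGetSize: total data length of all packets in the queue (in bytes)

- PacketQueuePut: inserts an element

- PacketQueueTake: takes an element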
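The source of avpacket_queue.c is not shown in this article. A minimal sketch of a queue with the same four operations, assuming a std::mutex/std::condition_variable implementation and a hypothetical QueuedPacket that only carries its payload bytes. It also assumes the third argument of PacketQueueTake is a block flag (the demo passes 1 in the audio thread and 0 in the video thread; the exact semantics are not shown).

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical simplified packet: just the compressed bytes.
struct QueuedPacket {
    std::vector<uint8_t> data;
};

class PacketQueueSketch {
public:
    // Counterpart of PacketQueuePut: append and wake one waiting reader.
    int Put(QueuedPacket pkt) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            bytes_ += pkt.data.size();
            packets_.push_back(std::move(pkt));
        }
        cond_.notify_one();
        return 0;
    }

    // Counterpart of PacketQueueTake: returns 0 and fills *out, or -1 when the
    // queue is empty and block is 0. With block != 0 it waits for a packet.
    int Take(QueuedPacket *out, int block) {
        std::unique_lock<std::mutex> lock(mutex_);
        if (packets_.empty()) {
            if (!block)
                return -1;
            cond_.wait(lock, [this] { return !packets_.empty(); });
        }
        *out = std::move(packets_.front());
        packets_.pop_front();
        bytes_ -= out->data.size();
        return 0;
    }

    // Counterpart of PacketQueueGetSize: total payload bytes currently queued.
    size_t GetSize() {
        std::lock_guard<std::mutex> lock(mutex_);
        return bytes_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::deque<QueuedPacket> packets_;
    size_t bytes_ = 0;
};
```

Tracking bytes on every Put/Take keeps GetSize O(1), which matters because the reading loop checks the queue sizes before every read. A production queue would also need a way to wake a blocked reader at shutdown.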

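log.c: logging

- LogInit: initializes the log module. At startup it creates a log.txt file in the working directory; every restart creates the file again, overwriting the previous one.

- LogDebug, LogInfo, LogError and LogNotice: print at different levels; for now the levels are controlled only by whether each macro is implemented

- FunEntry: used at function entry

- FunExit: used at function exit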
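The source of log.c is not shown in this article. A minimal sketch of the behaviour described above, assuming a FILE*-based logger: opening the file with mode "w" truncates it, which is what makes each run start a fresh log, and FunEntry/FunExit are macros that print the enclosing function name via __func__. The function names follow the list above; the bodies, the path parameter of LogInit, and the LogClose and LogWrite helpers are assumptions.

```cpp
#include <cassert>
#include <cstdarg>
#include <cstdio>

static FILE *sLogFile = NULL;

// Open the log file; mode "w" truncates it, so every run starts a fresh log.
// (The demo writes log.txt in the working directory; the path parameter is an assumption.)
static int LogInit(const char *path) {
    sLogFile = std::fopen(path, "w");
    return sLogFile ? 0 : -1;
}

static void LogClose() {   // hypothetical helper
    if (sLogFile) {
        std::fclose(sLogFile);
        sLogFile = NULL;
    }
}

// Hypothetical common writer behind the level macros.
static void LogWrite(const char *level, const char *fmt, ...) {
    if (!sLogFile)
        return;
    va_list args;
    va_start(args, fmt);
    std::fprintf(sLogFile, "[%s] ", level);
    std::vfprintf(sLogFile, fmt, args);
    std::fputc('\n', sLogFile);
    va_end(args);
    std::fflush(sLogFile);   // keep the log complete even if the player crashes
}

#define LogInfo(...)  LogWrite("INFO", __VA_ARGS__)
#define LogError(...) LogWrite("ERROR", __VA_ARGS__)
#define FunEntry()    LogWrite("DEBUG", "enter %s", __func__)
#define FunExit()     LogWrite("DEBUG", "exit %s", __func__)
```

Making FunEntry/FunExit macros rather than functions is what lets __func__ expand to the caller's name.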

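clock.c: clock used for synchronization

- AVClockResetTime: resets the clock

- AVClockGetCurTime: gets the current time

- AVClockSetTime: sets the time

- AVClockEnable: enables the clock

- AVClockDisable: disables the clock

- AVClockIsEnable: returns whether the clock is enabled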
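The source of clock.c is not shown in this article. A minimal sketch of such a shared clock, assuming it is built on std::chrono::steady_clock and counts milliseconds, the unit the decode threads use: after AVClockResetTime(t) the clock reads t and then advances in real time. The function names come from the list above; the signatures, treating AVClockSetTime as the same operation as a reset, and the atomic enable flag are assumptions.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstdint>
#include <mutex>

namespace {
std::mutex gClockMutex;
std::chrono::steady_clock::time_point gClockStart = std::chrono::steady_clock::now();
int64_t gClockBaseMs = 0;                // clock value at gClockStart
std::atomic<bool> gClockEnabled(false);
}

// Make the clock read timeMs right now; afterwards it advances in real time.
void AVClockResetTime(int64_t timeMs) {
    std::lock_guard<std::mutex> lock(gClockMutex);
    gClockStart = std::chrono::steady_clock::now();
    gClockBaseMs = timeMs;
}

// Assumption: setting the time behaves like a reset in this sketch.
void AVClockSetTime(int64_t timeMs) { AVClockResetTime(timeMs); }

// Current clock value in milliseconds.
int64_t AVClockGetCurTime() {
    std::lock_guard<std::mutex> lock(gClockMutex);
    auto elapsed = std::chrono::steady_clock::now() - gClockStart;
    return gClockBaseMs + std::chrono::duration_cast<std::chrono::milliseconds>(elapsed).count();
}

void AVClockEnable() { gClockEnabled = true; }
void AVClockDisable() { gClockEnabled = false; }
int AVClockIsEnable() { return gClockEnabled ? 1 : 0; }
```

In this project the audio thread resets the clock from the first audio pts minus the PortAudio output latency and then enables it, and the video thread compares each frame's pts with AVClockGetCurTime(). steady_clock is used so that wall-clock adjustments cannot make the clock jump.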

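Logic to work out before development

- How to implement multithreading (this project uses C++11 std::thread)

- How to handle the end of reading (the reader inserts a packet with zero-length data to notify the decoding threads)

- Maximum buffering of the audio and video queues (audio and video together are capped at 15 MB)

- How to synchronize (this project synchronizes against a shared clock)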
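Enforcing the buffer cap only requires the reading loop to compare the combined queue sizes (what AudioPacketSize() and VideoPacketSize() report) with the limit before it reads the next packet. A minimal sketch, assuming "15 MB" means 15 MiB and that the loop simply sleeps while the limit is exceeded; kMaxQueuedBytes and ReaderShouldWait are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: the 15 MB cap is taken as 15 MiB of undecoded audio + video packets.
constexpr size_t kMaxQueuedBytes = 15u * 1024u * 1024u;

// Called by the reading loop before it reads the next packet. When this returns
// true the loop sleeps for a few milliseconds instead of reading, so the decode
// threads can drain the queues and memory use stays bounded.
inline bool ReaderShouldWait(size_t audioQueuedBytes, size_t videoQueuedBytes) {
    return audioQueuedBytes + videoQueuedBytes > kMaxQueuedBytes;
}
```

Capping the sum rather than each queue separately lets a video-heavy stream use most of the budget without a fixed split.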

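Purpose of This Project

This project implements a simple player. Its main purpose is to provide an environment that is easy to debug in Qt: readers can run it in Debug mode in Qt and step through it to understand the important structures listed in the first section.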

Code

Download: http://download.csdn.net/download/muyuyuzhong/10272272

The files in the dll directory must be copied into the build directory, for example:

Code Preview

audio.c

```cpp
#include <thread>    // std::this_thread::sleep_for
#include <chrono>    // std::chrono::milliseconds
#include <cstdlib>   // malloc, free

extern "C" {
#include <libavcodec/avcodec.h>
#include <libavutil/channel_layout.h>
#include <libswresample/swresample.h>
}
#include <portaudio.h>

#include "audio.h"
#include "avpackets_queue.h"
#include "av_clock.h"
#include "video.h"
#include "log.h"

#define AVCODE_MAX_AUDIO_FRAME_SIZE 192000 /* 1 second of 48khz 32bit audio */

// Requested PortAudio output latency: about 0.3 s of audio is buffered before it is actually heard
const double kPortAudioLatency = 0.3;

#define ERR_STREAM stderr

typedef struct audio_param
{
    AVFrame wantFrame;                      // PCM output format required by PortAudio
    PaStreamParameters *outputParameters;
    PaStream *stream;
    int sampleRate;
    int format;                             // AVSampleFormat matching outputParameters->sampleFormat
    PacketQueue audioQueue;                 // queue of undecoded audio packets
    int packetEof;                          // the end-of-stream packet has been taken
    int quit;                               // 1 = stop the decode thread
    double audioBaseTime;                   // audio time base, seconds per pts tick
    SwrContext *swrCtx;                     // PCM format converter
} T_AudioParam;

static T_AudioParam sAudioParam;

static int _AudioDecodeFrame(AVCodecContext *pAudioCodecCtx, uint8_t *audioBuf, int bufSize, int *packetEof);

void AudioDecodeThread(void *userdata)
{
    FunEntry();
    AVCodecContext *pAudioCodecCtx = (AVCodecContext *) userdata;
    int audio_size = 0;
    uint8_t audioBuf[AVCODE_MAX_AUDIO_FRAME_SIZE];

    AVClockDisable();   // the clock stays off until the first decoded audio frame sets it

    while (sAudioParam.quit != 1)
    {
        if (sAudioParam.packetEof && sAudioParam.audioQueue.size == 0)
        {
            sAudioParam.quit = 1;
        }

        audio_size = 0;
        if (VideoGetFirstFrame())   // start the sound only after the first picture is out
        {
            audio_size = _AudioDecodeFrame(pAudioCodecCtx, audioBuf, sizeof(audioBuf), &sAudioParam.packetEof);
        }

        if (audio_size > 0)
        {
            AudioPlay(audioBuf, audio_size, 0);
        }
        else
        {
            std::this_thread::sleep_for(std::chrono::milliseconds(20));   // no data yet: sleep 20 ms
        }
    }

    FunExit();
}

// For audio, one packet may contain several frames
/**
 * @brief  Take one packet from the audio queue, decode every frame in it and
 *         convert the samples to the PortAudio output format.
 * @param  pAudioCodecCtx  opened audio decoder
 * @param  audioBuf        output buffer for interleaved PCM
 * @param  bufSize         size of audioBuf in bytes
 * @param  packetEof       set to 1 when the zero-length end-of-stream packet is taken
 * @return number of PCM bytes written to audioBuf, or -1 if no packet was available
 */
static int _AudioDecodeFrame(AVCodecContext *pAudioCodecCtx,
                             uint8_t *audioBuf, int bufSize, int *packetEof)
{
    AVPacket packet;
    AVPacket pkt;               // view of the part of packet not decoded yet
    AVFrame *pFrame = NULL;
    int gotFrame = 0;
    int decodeLen = 0;
    int outBytes = 0;           // PCM bytes written to audioBuf so far
    enum AVSampleFormat outFmt = (enum AVSampleFormat) sAudioParam.wantFrame.format;
    // Bytes per output sample frame (one sample for each channel)
    int outFrameBytes = sAudioParam.wantFrame.channels * av_get_bytes_per_sample(outFmt);

    if (outFrameBytes <= 0)
        return -1;

    if (PacketQueueTake(&sAudioParam.audioQueue, &packet, 1) < 0)
    {
        std::this_thread::sleep_for(std::chrono::milliseconds(30));   // no packet taken: sleep
        return -1;
    }

    pFrame = av_frame_alloc();
    *packetEof = packet.size ? 0 : 1;   // by convention, a zero-length packet marks the end of the stream
    pkt = packet;

    while (pFrame && pkt.size > 0)
    {
        /*
         * pAudioCodecCtx: decoder
         * pFrame:   output, receives the decoded samples
         * gotFrame: output, non-zero when a frame was produced; 0 only means no frame yet, not an error
         * pkt:      input, compressed data to decode
         * (avcodec_decode_audio4 is deprecated in newer FFmpeg releases)
         */
        decodeLen = avcodec_decode_audio4(pAudioCodecCtx, pFrame, &gotFrame, &pkt);
        if (decodeLen < 0)
        {
            LogError("avcodec_decode_audio4 failed");
            break;
        }

        if (gotFrame)
        {
            if (!AVClockIsEnable() && pFrame->pts != AV_NOPTS_VALUE)
            {
                int64_t pts = sAudioParam.audioBaseTime * pFrame->pts * 1000;   // milliseconds
                // Start the shared clock from this frame. PortAudio latency is kPortAudioLatency s,
                // so subtract it; otherwise video would run kPortAudioLatency s ahead of the sound.
                AVClockResetTime(pts - (int64_t)(kPortAudioLatency * 1000));    // milliseconds
                LogInfo("pts = %lld, clk = %lld", pts, AVClockGetCurTime());
                LogInfo("audio base = %lf", sAudioParam.audioBaseTime);
                AVClockEnable();
            }

            // Derive channel_layout or channels when the decoder left one of them unset
            if (pFrame->channels > 0 && pFrame->channel_layout == 0)
                pFrame->channel_layout = av_get_default_channel_layout(pFrame->channels);
            else if (pFrame->channels == 0 && pFrame->channel_layout > 0)
                pFrame->channels = av_get_channel_layout_nb_channels(pFrame->channel_layout);

            // Configure the converter: input = this frame's format, output = PortAudio's format
            sAudioParam.swrCtx = swr_alloc_set_opts(NULL,
                                                    sAudioParam.wantFrame.channel_layout,
                                                    outFmt,
                                                    sAudioParam.wantFrame.sample_rate,
                                                    pFrame->channel_layout,
                                                    (enum AVSampleFormat) pFrame->format,
                                                    pFrame->sample_rate,
                                                    0, NULL);
            if (sAudioParam.swrCtx == NULL || swr_init(sAudioParam.swrCtx) < 0)
            {
                LogError("swr_init error");
                swr_free(&sAudioParam.swrCtx);
                break;
            }

            // Convert to the format PortAudio needs, appending after the data already in audioBuf.
            // swr_convert measures the output space in samples per channel, not in bytes.
            uint8_t *out = audioBuf + outBytes;
            int convertLength = swr_convert(sAudioParam.swrCtx,
                                            &out,
                                            (bufSize - outBytes) / outFrameBytes,
                                            (const uint8_t **) pFrame->data,
                                            pFrame->nb_samples);
            if (convertLength > 0)
                outBytes += convertLength * outFrameBytes;   // converted bytes for this packet so far

            av_frame_unref(pFrame);
            swr_free(&sAudioParam.swrCtx);   // a context is created per frame, so free it here to avoid a leak
        }

        if (decodeLen == 0)
            break;                      // nothing consumed: stop instead of looping forever
        pkt.size -= decodeLen;          // bytes of this packet still to decode
        pkt.data += decodeLen;          // move to the undecoded data
        if (pkt.size > 0)
            LogInfo("packet.size = %d, orig size = %d", pkt.size, packet.size);
    }

    av_packet_unref(&packet);
    av_frame_free(&pFrame);
    return outBytes;
}

int AudioInit(AVCodecContext *pAudioCodecCtx)
{
    FunEntry();
    PaError ret;

    // 1. Initialize the audio output device through PortAudio
    if ((ret = Pa_Initialize()) != paNoError)
    {
        LogError("Pa_Initialize failed, ret = %d", ret);
        FunExit();
        return -1;
    }

    sAudioParam.outputParameters = (PaStreamParameters *) malloc(sizeof(PaStreamParameters));
    if (!sAudioParam.outputParameters)
    {
        LogError("malloc PaStreamParameters failed");
        FunExit();
        return -1;
    }
    sAudioParam.outputParameters->suggestedLatency = kPortAudioLatency;   // 0.3 s
    sAudioParam.outputParameters->sampleFormat = paFloat32;               // PCM sample format
    sAudioParam.outputParameters->hostApiSpecificStreamInfo = NULL;
    // Stream parameters used to open the audio device
    sAudioParam.outputParameters->channelCount = pAudioCodecCtx->channels;

    // Only paFloat32 and paInt16 are handled for now; anything else falls back to S16
    if (sAudioParam.outputParameters->sampleFormat == paFloat32)
        sAudioParam.format = AV_SAMPLE_FMT_FLT;
    else
        sAudioParam.format = AV_SAMPLE_FMT_S16;

    sAudioParam.sampleRate = pAudioCodecCtx->sample_rate;

    // Use the default audio output device
    sAudioParam.outputParameters->device = Pa_GetDefaultOutputDevice();
    if (sAudioParam.outputParameters->device == paNoDevice)
    {
        LogError("Pa_GetDefaultOutputDevice failed, index = %d", sAudioParam.outputParameters->device);
        FunExit();
        return -1;
    }

    // Open a blocking-mode output stream (no callback, so audio is written with Pa_WriteStream)
    if ((ret = Pa_OpenStream(&sAudioParam.stream, NULL, sAudioParam.outputParameters,
                             pAudioCodecCtx->sample_rate, paFramesPerBufferUnspecified,
                             paNoFlag, NULL, NULL)) != paNoError)
    {
        LogError("Pa_OpenStream open failed, ret = %d", ret);
        FunExit();
        return -1;
    }

    // Output format PortAudio needs; it is the target of swr_convert
    sAudioParam.wantFrame.format = sAudioParam.format;
    sAudioParam.wantFrame.sample_rate = sAudioParam.sampleRate;
    sAudioParam.wantFrame.channel_layout = av_get_default_channel_layout(sAudioParam.outputParameters->channelCount);
    sAudioParam.wantFrame.channels = sAudioParam.outputParameters->channelCount;

    // Audio packet queue
    PacketQueueInit(&sAudioParam.audioQueue);
    sAudioParam.packetEof = 0;

    // The PCM format converter is created per frame in _AudioDecodeFrame
    sAudioParam.swrCtx = NULL;

    FunExit();
    return 0;
}

void AudioPacketPush(AVPacket *packet)
{
    if (PacketQueuePut(&sAudioParam.audioQueue, packet) != 0)
    {
        LogError("PacketQueuePut failed");
    }
}

int AudioPacketSize()
{
    return PacketQueueGetSize(&sAudioParam.audioQueue);
}

void AudioPlay(const uint8_t *data, const uint32_t size, int stop)
{
    if (!sAudioParam.stream || !sAudioParam.outputParameters)
        return;

    // Bytes per sample frame (all channels) in the stream's sample format
    const uint32_t frameBytes = (uint32_t)(sAudioParam.outputParameters->channelCount *
                                av_get_bytes_per_sample((enum AVSampleFormat) sAudioParam.format));
    if (frameBytes == 0)
        return;
    const uint32_t chunk = (uint32_t)(sAudioParam.sampleRate * 0.02) * frameBytes;   // about 20 ms of audio
    uint32_t offset = 0;

    if (Pa_IsStreamActive(sAudioParam.stream) == 0)
        Pa_StartStream(sAudioParam.stream);

    while (!stop && size > offset)
    {
        uint32_t chunkSize = (chunk < size - offset) ? chunk : size - offset;
        // Pa_WriteStream takes a number of frames, not bytes
        PaError err = Pa_WriteStream(sAudioParam.stream, data + offset, chunkSize / frameBytes);
        if (err != paNoError && err != paOutputUnderflowed)
            break;
        offset += chunkSize;
    }
}

void AudioClose(void)
{
    FunEntry();
    if (sAudioParam.outputParameters)
    {
        if (sAudioParam.stream)
        {
            Pa_StopStream(sAudioParam.stream);
            Pa_CloseStream(sAudioParam.stream);
            sAudioParam.stream = NULL;
        }
        Pa_Terminate();
        free(sAudioParam.outputParameters);
        sAudioParam.outputParameters = NULL;
    }
    swr_free(&sAudioParam.swrCtx);   // safe when it is already NULL
    FunExit();
}

void AudioSetTimeBase(double timeBase)
{
    sAudioParam.audioBaseTime = timeBase;
}
```
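AudioPlay writes PCM to PortAudio in slices of about 20 ms, and Pa_WriteStream takes a count of frames (one sample per channel), not bytes. The arithmetic as a small sketch, with hypothetical helper names and interleaved samples assumed: at 48 kHz a 20 ms slice is 960 frames, which for stereo paFloat32 (4 bytes per sample) is 960 * 2 * 4 = 7680 bytes. The same frame size turns the per-channel sample count returned by swr_convert into the byte count that _AudioDecodeFrame returns.

```cpp
#include <cassert>
#include <cstdint>

// Bytes in one interleaved frame: one sample for every channel.
inline int FrameBytes(int channels, int bytesPerSample) {
    assert(channels > 0 && bytesPerSample > 0);
    return channels * bytesPerSample;
}

// Frames in a slice of the given duration (e.g. 20 ms).
inline int ChunkFrames(int sampleRate, int chunkMs) {
    return sampleRate * chunkMs / 1000;
}

// Bytes in the same slice; this is how much is cut out of the PCM buffer per write.
inline int ChunkBytes(int sampleRate, int chunkMs, int channels, int bytesPerSample) {
    return ChunkFrames(sampleRate, chunkMs) * FrameBytes(channels, bytesPerSample);
}

// Convert a byte count back into the frame count Pa_WriteStream expects.
inline int BytesToFrames(int bytes, int channels, int bytesPerSample) {
    return bytes / FrameBytes(channels, bytesPerSample);
}
```

Deriving both the slice size and the frame count from the same bytes-per-sample value keeps them consistent whichever PortAudio sample format is chosen.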

video.c

```cpp
#include <iostream>   // std::cout
#include <mutex>      // std::mutex
#include <thread>     // std::this_thread::sleep_for
#include <chrono>     // std::chrono::system_clock, std::chrono::milliseconds
#include <cstring>    // memset
#include <cstdlib>    // exit

extern "C" {
#include <libavcodec/avcodec.h>
#include <libavutil/imgutils.h>
#include <libswscale/swscale.h>
}
#include <SDL.h>

#include "video.h"
#include "av_clock.h"
#include "avpackets_queue.h"
#include "log.h"

typedef struct video_param
{
    /* Video output */
    SDL_Texture *pFrameTexture = NULL;
    SDL_Window *pWindow = NULL;
    SDL_Renderer *pRenderer = NULL;
    PacketQueue videoQueue;                 // queue of undecoded video packets
    SDL_Rect rect;
    struct SwsContext *pSwsCtx = NULL;
    AVFrame *pFrameYUV = NULL;              // frame converted to YUV420P for display
    enum AVPixelFormat sPixFmt = AV_PIX_FMT_YUV420P;
    int quit = 0;
    int getFirstFrame = 0;                  // 1 once the first frame is ready to show
    double videoBaseTime = 0.0;             // video time base, seconds per pts tick
    int packetEof = 0;
} T_VideoParam;

static T_VideoParam sVideoParam;

// Current time in milliseconds
static int64_t getNowTime()
{
    auto time_now = std::chrono::system_clock::now();
    auto duration_in_ms = std::chrono::duration_cast<std::chrono::milliseconds>(time_now.time_since_epoch());
    return duration_in_ms.count();
}

int VideoInit(int width, int height, enum AVPixelFormat pix_fmt)
{
    // SDL and swscale are set up later in the decode thread (_VideoSDL2Init)
    (void) width;
    (void) height;
    (void) pix_fmt;
    memset(&sVideoParam, 0, sizeof(T_VideoParam));
    PacketQueueInit(&sVideoParam.videoQueue);
    return 0;
}

int _VideoSDL2Init(int width, int height, enum AVPixelFormat pix_fmt)
{
    sVideoParam.pWindow = SDL_CreateWindow("Video Window",
                                           SDL_WINDOWPOS_UNDEFINED,
                                           SDL_WINDOWPOS_UNDEFINED,
                                           width, height,
                                           SDL_WINDOW_SHOWN | SDL_WINDOW_OPENGL);
    if (!sVideoParam.pWindow)
    {
        LogError("SDL: could not set video mode - exiting\n");
        return -1;
    }

    SDL_RendererInfo info;
    sVideoParam.pRenderer = SDL_CreateRenderer(sVideoParam.pWindow, -1, SDL_RENDERER_ACCELERATED | SDL_RENDERER_PRESENTVSYNC);
    if (!sVideoParam.pRenderer)
    {
        av_log(NULL, AV_LOG_WARNING, "Failed to initialize a hardware accelerated renderer: %s\n", SDL_GetError());
        sVideoParam.pRenderer = SDL_CreateRenderer(sVideoParam.pWindow, -1, 0);   // fall back to any renderer
    }
    if (!sVideoParam.pRenderer)
    {
        LogError("SDL: SDL_CreateRenderer failed\n");
        return -1;
    }
    if (!SDL_GetRendererInfo(sVideoParam.pRenderer, &info))
        av_log(NULL, AV_LOG_VERBOSE, "Initialized %s renderer.\n", info.name);

    // IYUV = planar Y, U, V: the same layout as AV_PIX_FMT_YUV420P
    sVideoParam.pFrameTexture = SDL_CreateTexture(sVideoParam.pRenderer, SDL_PIXELFORMAT_IYUV,
                                                  SDL_TEXTUREACCESS_STREAMING, width, height);
    if (!sVideoParam.pFrameTexture)
    {
        LogError("SDL: SDL_CreateTexture failed\n");
        return -1;
    }

    LogInfo("pix_fmt = %d, %d\n", pix_fmt, AV_PIX_FMT_YUV420P);

    // Convert whatever the decoder outputs to YUV420P for the texture
    sVideoParam.pSwsCtx = sws_getContext(width, height, pix_fmt,
                                         width, height, AV_PIX_FMT_YUV420P,
                                         SWS_BICUBIC, NULL, NULL, NULL);
    if (!sVideoParam.pSwsCtx)
    {
        LogError("SDL: sws_getContext failed\n");
        return -1;
    }

    sVideoParam.rect.x = 0;
    sVideoParam.rect.y = 0;
    sVideoParam.rect.w = width;
    sVideoParam.rect.h = height;

    // Frame that holds the converted picture
    sVideoParam.pFrameYUV = av_frame_alloc();
    if (!sVideoParam.pFrameYUV)
    {
        LogError("av_frame_alloc sVideoParam.pFrameYUV failed!\n");
        return -1;
    }

    // One buffer without row padding (align = 1): the Y, U and V planes follow each other
    int numBytes = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, width, height, 1);
    uint8_t *buffer = numBytes > 0 ? (uint8_t *) av_malloc(numBytes) : NULL;
    if (!buffer)
    {
        LogError("av_malloc YUV buffer failed!\n");
        return -1;
    }
    av_image_fill_arrays(sVideoParam.pFrameYUV->data, sVideoParam.pFrameYUV->linesize,
                         buffer, AV_PIX_FMT_YUV420P, width, height, 1);
    return 0;
}

int VideoDecodeThread(void *userdata)
{
    FunEntry();
    int64_t pts;
    AVCodecContext *pVideoCodecCtx = (AVCodecContext *) userdata;
    AVPacket packet;
    int frameFinished = 0;      // set by the decoder when a complete frame is available

    // SDL2 initialization and rendering must happen on the same thread
    if (_VideoSDL2Init(pVideoCodecCtx->width, pVideoCodecCtx->height, pVideoCodecCtx->pix_fmt) < 0)
    {
        LogError("_VideoSDL2Init failed\n");
        sVideoParam.quit = 1;
    }

    SDL_Event event;            // window events
    int64_t th = 0;             // frame duration / wait threshold, in ms

    AVFrame *pVideoFrame = av_frame_alloc();
    if (!pVideoFrame)
    {
        LogError("av_frame_alloc pVideoFrame failed!\n");
        sVideoParam.quit = 1;
    }

    static int64_t sPrePts = 0; // pts of the previously displayed frame, in ms
    sVideoParam.getFirstFrame = 0;
    sVideoParam.packetEof = 0;
    int first = 0;

    while (sVideoParam.quit != 1)
    {
        if (sVideoParam.packetEof && sVideoParam.videoQueue.size == 0)
            sVideoParam.quit = 1;

        int ret = PacketQueueTake(&sVideoParam.videoQueue, &packet, 0);
        if (ret == 0)
        {
            sVideoParam.packetEof = packet.size ? 0 : 1;   // zero-length packet = end of stream

            // Decode a video frame (avcodec_decode_video2 is deprecated in newer FFmpeg releases)
            frameFinished = 0;
            if (avcodec_decode_video2(pVideoCodecCtx, pVideoFrame, &frameFinished, &packet) < 0)
                LogError("avcodec_decode_video2 failed\n");

            // A complete video frame was decoded
            if (frameFinished)
            {
                // Frame duration in ms (0 when the demuxer did not provide one)
                th = pVideoFrame->pkt_duration * sVideoParam.videoBaseTime * 1000;
                if (pVideoFrame->pts == AV_NOPTS_VALUE)
                    pts = -1;
                else
                    pts = sVideoParam.videoBaseTime * pVideoFrame->pts * 1000;   // convert to ms
                if (pts < 0)
                    pts = th + sPrePts;   // no usable pts: extrapolate from the previous frame
                // Wait threshold: the frame duration, else the pts step from the previous frame, else 30 ms
                if (th <= 0)
                    th = (sPrePts != 0 && pts > sPrePts) ? pts - sPrePts : 30;

                while (!sVideoParam.quit)
                {
                    int64_t diff = pts - AVClockGetCurTime();
                    if (0 == first)
                    {
                        LogInfo("\n video pts = %lld, cur = %lld, diff = %lld", pts, AVClockGetCurTime(), diff);
                        LogInfo("video base = %lf", sVideoParam.videoBaseTime);
                    }

                    // More than th ms early: wait. A lead of 10 s or more is treated as a
                    // timestamp discontinuity and the frame is shown immediately.
                    if (diff > th && diff < 10000)
                        std::this_thread::sleep_for(std::chrono::milliseconds(2));
                    else
                        break;

                    // Clock not running yet (audio not started): show the first frame at once;
                    // after that, keep waiting in 2 ms steps
                    if (!AVClockIsEnable())
                    {
                        if (sVideoParam.getFirstFrame)
                            std::this_thread::sleep_for(std::chrono::milliseconds(2));
                        else
                            break;
                    }
                }

                sVideoParam.getFirstFrame = 1;

                int64_t curTime = getNowTime();
                static int64_t sPreTime = curTime;
                if (0 == first)
                    LogInfo("cur = %lld, dif pts = %lld, time = %lld ", curTime, pts - sPrePts, curTime - sPreTime);
                sPreTime = curTime;
                sPrePts = pts;

                VideoDisplay(pVideoFrame, pVideoCodecCtx->height);
                first = 1;

                if (pVideoCodecCtx->refcounted_frames)
                {
                    av_frame_unref(pVideoFrame);
                }
            }

            // Release the memory held by the packet
            av_packet_unref(&packet);
        }
        else
        {
            std::this_thread::sleep_for(std::chrono::milliseconds(10));   // queue empty: wait for data
        }

        // Handle every pending window event; closing the window ends the program
        while (SDL_PollEvent(&event))
        {
            if (event.type == SDL_QUIT)
            {
                SDL_Quit();
                exit(0);
            }
        }
    }

    /* Free the decoded frame */
    av_frame_free(&pVideoFrame);
    FunExit();
    return 0;
}

void VideoDisplay(AVFrame *pVideoFrame, int height)
{
    if (!pVideoFrame)
    {
        LogError("pVideoFrame is null\n");
        return;
    }
    if (!sVideoParam.pFrameYUV || !sVideoParam.pSwsCtx || !sVideoParam.pFrameTexture)
    {
        LogError("video output is not initialized\n");
        return;
    }

    // Convert to YUV420P. sws_scale only converts pixel data: pts and other
    // frame properties are not copied into pFrameYUV.
    sws_scale(sVideoParam.pSwsCtx,
              (uint8_t const * const *) pVideoFrame->data,
              pVideoFrame->linesize,
              0,
              height,
              sVideoParam.pFrameYUV->data,
              sVideoParam.pFrameYUV->linesize);

    AVFrame *pDisplayFrame = sVideoParam.pFrameYUV;

    /*
     * Display the frame. The Y, U and V planes of pFrameYUV sit in one contiguous
     * buffer, so uploading from data[0] with the Y pitch (linesize[0], the bytes
     * per row of Y) fills all three planes of the IYUV texture.
     */
    SDL_UpdateTexture(sVideoParam.pFrameTexture, &sVideoParam.rect, pDisplayFrame->data[0], pDisplayFrame->linesize[0]);
    SDL_RenderClear(sVideoParam.pRenderer);
    SDL_RenderCopy(sVideoParam.pRenderer, sVideoParam.pFrameTexture, &sVideoParam.rect, &sVideoParam.rect);
    SDL_RenderPresent(sVideoParam.pRenderer);
}

int VideoPacketPush(AVPacket *packet)
{
    return PacketQueuePut(&sVideoParam.videoQueue, packet);
}

int VideoPacketSize()
{
    return PacketQueueGetSize(&sVideoParam.videoQueue);
}

void VideoClose()
{
    FunEntry();
    if (sVideoParam.pSwsCtx)
    {
        sws_freeContext(sVideoParam.pSwsCtx);
        sVideoParam.pSwsCtx = NULL;
    }
    if (sVideoParam.pFrameTexture)
    {
        SDL_DestroyTexture(sVideoParam.pFrameTexture);
        sVideoParam.pFrameTexture = NULL;
    }
    if (sVideoParam.pRenderer)
    {
        SDL_DestroyRenderer(sVideoParam.pRenderer);
        sVideoParam.pRenderer = NULL;
    }
    if (sVideoParam.pWindow)
    {
        SDL_DestroyWindow(sVideoParam.pWindow);
        sVideoParam.pWindow = NULL;
    }
    if (sVideoParam.pFrameYUV)
    {
        av_freep(&sVideoParam.pFrameYUV->data[0]);   // picture buffer allocated in _VideoSDL2Init
        av_frame_free(&sVideoParam.pFrameYUV);
    }
    FunExit();
}

int VideoGetFirstFrame()
{
    return sVideoParam.getFirstFrame;
}

void VideoSetTimeBase(double timeBase)
{
    sVideoParam.videoBaseTime = timeBase;
}
```
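The waiting loop in VideoDecodeThread comes down to one decision per frame, given the frame's pts and the shared clock, both in milliseconds. A pure-function sketch of that rule, with hypothetical names: wait while the frame is more than the threshold early, treat a lead of 10 s or more as a timestamp discontinuity, and otherwise display. The threshold follows the fallback the th logic in the loop aims for: the frame duration, else the pts step from the previous frame, else 30 ms.

```cpp
#include <cassert>
#include <cstdint>

enum class FrameAction { Wait, Display };

// Threshold in ms: the frame duration if known, else the pts step from the
// previous frame, else 30 ms.
inline int64_t FrameThreshold(int64_t durationMs, int64_t ptsMs, int64_t prevPtsMs) {
    if (durationMs > 0)
        return durationMs;
    if (prevPtsMs != 0 && ptsMs > prevPtsMs)
        return ptsMs - prevPtsMs;
    return 30;
}

// Decide what to do with a frame whose pts is ptsMs when the shared clock reads clockMs.
inline FrameAction DecideFrame(int64_t ptsMs, int64_t clockMs, int64_t thresholdMs) {
    int64_t diff = ptsMs - clockMs;            // > 0: the frame is early
    if (diff > thresholdMs && diff < 10000)
        return FrameAction::Wait;              // check again in about 2 ms
    return FrameAction::Display;               // on time, late, or after a timestamp jump
}
```

Unlike ffplay, which can drop frames that are already too late, this rule always displays late frames, so video catches up only as fast as decoding and rendering allow.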