Batch-Scraping Novels with Python

Use BeautifulSoup to batch-download novels from 笔趣阁 (Biquge).
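The core of the crawl is a chain walk: start at the first chapter, save it, then follow the page's "next" link until that link points back to the book index (`./`). Here is a rough, network-free sketch of that idea. The `FAKE_PAGES` dictionary and the `crawl_chapters` function are made up for illustration; the actual script fetches real pages and parses them with BeautifulSoup.

```python
# Hypothetical in-memory "site": each page maps to (chapter title, text, next link).
# The real script downloads these pages over HTTP instead.
FAKE_PAGES = {
    "1.html": ("Chapter 1", "First chapter text.", "2.html"),
    "2.html": ("Chapter 2", "Second chapter text.", "3.html"),
    "3.html": ("Chapter 3", "Final chapter text.", "./"),  # "./" points back to the index
}


def crawl_chapters(first_page, pages):
    """Follow next-chapter links from first_page until the link is "./"."""
    collected = []
    current = first_page
    while True:
        title, text, next_link = pages[current]
        collected.append((title, text))
        if next_link == "./":  # last chapter: its "next" link returns to the book index
            break
        current = next_link
    return collected


chapters = crawl_chapters("1.html", FAKE_PAGES)
for title, _ in chapters:
    print(title)
```

Because each page names its successor, the crawler never needs the full chapter list up front; it only needs the first chapter's URL.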

import os
import re
import threading
import time
import urllib.request

from bs4 import BeautifulSoup

# Download a novel with a web crawler

base_url = 'http://www.qu.la'  # 笔趣阁 (Biquge) home page

class myThread(threading.Thread):  # subclass of threading.Thread

    def __init__(self, threadID, counter, start_page):
        threading.Thread.__init__(self)
        self.threadID = threadID
        self.counter = counter
        self.start_page = start_page
        # The book's index page is fetched here, in the creating thread, before start() is called
        self.bookname, self.url, self.first_url = get_book_by_id(self.counter, self.start_page)

    def run(self):  # the work goes in run(); the thread executes it once start() is called
        get_chapter_content(self.bookname, self.url, self.first_url)

def get_book_by_id(counter, start_page):
    url = base_url + '/book/' + str(counter + start_page) + '/'
    html_res = urllib.request.urlopen(url)
    soup = BeautifulSoup(html_res, 'html.parser')
    info = soup.select('#wrapper .box_con #maininfo #info')[0]
    bookname = info.contents[1].string
    writer = info.find('p').string
    latest = info.find_all('p')[2].string  # last updated
    newest = info.find_all('p')[3].get_text()  # latest chapter, as text rather than a raw tag
    intro = soup.select('#wrapper .box_con #maininfo #intro')[0].text
    introduction = "{0}\n{1}\n{2}\n{3}\n{4}\n".format(bookname, writer, latest, newest, intro)
    # Write the book header; the with-block closes the file before chapters are appended
    with open("{}.txt.download".format(bookname), 'w', encoding='utf-8') as fw:
        fw.write(introduction)
    # Find the href of the first chapter and start downloading from there
    contents = soup.select('#wrapper .box_con #list dl dt')
    start = None
    for content in contents:
        # '一' ("one") or '正文' ("main text") marks the heading that precedes chapter one
        if '一' in str(content) or '正文' in str(content):
            start = content  # no break, so the last matching heading is used
    if start is None:
        raise ValueError("no chapter list heading found at " + url)
    first_href = start.find_next_sibling('dd').contents[1]['href']
    first_url = base_url + first_href
    return bookname, url, first_url

def get_chapter_content(bookname, url, chapter_url):
    with open("{0}.txt.download".format(bookname), 'a', encoding='utf-8') as fa:
        while True:
            try:
                html_ret = urllib.request.urlopen(chapter_url, timeout=15).read()
            except OSError:  # covers URLError and socket timeouts; retry the same chapter
                time.sleep(1)
                continue
            soup = BeautifulSoup(html_ret, 'html.parser')
            chapter = soup.select('#wrapper .content_read .box_con .bookname')[0]
            next_href = chapter.find_all('a')[2]['href']  # third link: the next page to fetch
            chapter_name = chapter.h1.string
            chapter_content = soup.select('#wrapper .content_read .box_con #content')[0].text
            # Collapse every whitespace run into a line break plus a tab indent
            chapter_content = re.sub(r'\s+', '\r\n\t', chapter_content).strip('\r\n')
            fa.write('\n' + chapter_name + '\n')  # keep the title on its own line
            fa.write(chapter_content + '\n')
            if next_href == "./":  # link back to the book index: no more chapters
                break
            chapter_url = url + next_href
    # The file is closed at this point, so the rename also works on Windows
    os.rename('{}.txt.download'.format(bookname), '{}.txt'.format(bookname))
    print("{}.txt download complete".format(bookname))
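The download loop retries a failed request indefinitely, which can hang a thread forever if a chapter URL is permanently broken. One possible alternative is a bounded retry with a growing delay. The sketch below is only an illustration: `fetch_with_retry` and `flaky_fetch` are hypothetical names, and the fetch function is passed in so the example runs without network access.

```python
import time


def fetch_with_retry(fetch, url, attempts=3, delay=0.0):
    """Call fetch(url), retrying on OSError up to `attempts` times in total."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except OSError as exc:  # urllib.error.URLError and socket timeouts subclass OSError
            last_error = exc
            if attempt < attempts:
                time.sleep(delay * attempt)  # wait a little longer after each failure
    raise last_error


# Hypothetical flaky fetcher used only to exercise the helper: fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise OSError("simulated timeout")
    return "<html>ok</html>"


result = fetch_with_retry(flaky_fetch, "http://example.invalid/chapter", attempts=5)
print(result, calls["count"])
```

In the real script, `fetch` could be a small wrapper around `urllib.request.urlopen(url, timeout=15).read()`; once the attempts run out, the exception propagates and the caller decides whether to skip the chapter or abort the book.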

#批量获取txt 900-1000

def get_txts(start_page):

threads = []

print("当前起始页面:" + str(start_page))

print("===============创建下载任务====================")

for i in range(start_page, start_page+10):

thread_one = myThread(i, i, start_page)

thread_one.start()

threads.append(thread_one)

print("================下载任务创建完成================")

print("================等待下载任务完成================")

task_num = len(threads)

count = 0

while (1):

os.system('clear')

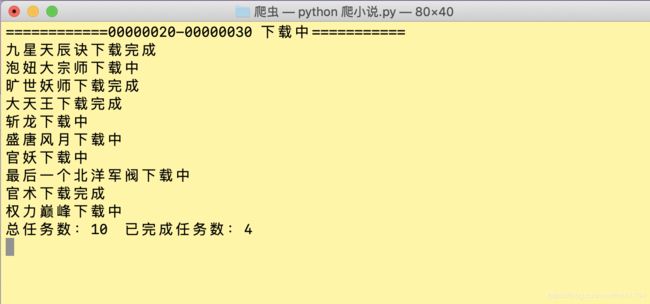

print('============{0:0>8}-{1:0>8} '.format(start_page, start_page + 10) + "下载中===========")

run_task = 0

for thread in threads:

if (thread.isAlive()):

run_task += 1

print('{}下载中'.format(thread.bookname))

else:

print('{}下载完成'.format(thread.bookname))

print('\b'+"总任务数:" + str(task_num) + " 已完成任务数:" + str(task_num - run_task)+"\r")

if (run_task == 0):

break

time.sleep(1)

if (count > 100000):

count = 0

else:

count += 1

os.system('clear')

print("所有下载任务已完成")

time.sleep(2)

if __name__ == "__main__":

get_txts(20)
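The batch function starts one thread per book and polls them by hand. A thread pool from the standard library can cap the number of simultaneous downloads and collect results without a polling loop. The following is a minimal sketch of that alternative, not the approach used in this post: `download_book` is a hypothetical placeholder for the real per-book work, and `download_range` and its parameters are illustrative names.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_book(book_id):
    """Hypothetical placeholder for the real per-book download; returns a label."""
    return "book-{}".format(book_id)


def download_range(start_page, count=10, max_workers=4):
    """Download `count` consecutive book IDs with at most `max_workers` threads."""
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(download_book, start_page + i): start_page + i
                   for i in range(count)}
        for future in as_completed(futures):
            finished.append(future.result())  # re-raises any exception from the worker
    return sorted(finished)


print(download_range(20, count=3))
```

Capping `max_workers` also limits how many requests hit the site at once, which the one-thread-per-book version does not do.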