Deploying NFS Persistent Storage on Kubernetes

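Introduction to NFS

NFS is short for Network File System. An NFS server lets a client mount a directory that the server shares over the network into the client's local file system; from the client's point of view, the remote directory looks just like one of its own disk partitions.

Kubernetes can use NFS shared storage in two ways:

1. Static provisioning: create the required PVs and PVCs manually.

2. Dynamic provisioning: create a PVC and let the matching PV be created automatically, with no manual PV creation.

Both approaches are configured and demonstrated below.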

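Setting up the NFS server

Cluster preparation: the Kubernetes cluster used here was set up following this article: https://blog.csdn.net/networken/article/details/84991940

For testing, the NFS server is temporarily deployed on the master node.

# Install NFS on the master node

yum -y install nfs-utils

# Create the shared directory

mkdir -p /nfs/data/

# Change permissions

chmod -R 777 /nfs/data

# Edit the exports file

vim /etc/exports

/nfs/data *(rw,no_root_squash,sync)

# Apply the configuration

exportfs -r

# Check the active exports

exportfs

# Start rpcbind and nfs and enable them at boot

systemctl restart rpcbind && systemctl enable rpcbind

systemctl restart nfs && systemctl enable nfs

# Check the RPC service registrations

rpcinfo -p localhost

# Test with showmount

showmount -e 192.168.92.56

# Install the NFS client on all worker nodes

yum -y install nfs-utils

systemctl start nfs && systemctl enable nfs

With this preparation done, an NFS server exporting /nfs/data is running on the k8s-master node.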

Static PV provisioning

Create one directory per PV to serve as its mount point. Two PVs are created here, so two directories are added.

# Create the directories backing the PVs

mkdir -p /nfs/data/pv001

mkdir -p /nfs/data/pv002

# Configure the exports

vim /etc/exports

/nfs/data *(rw,no_root_squash,sync)

/nfs/data/pv001 *(rw,no_root_squash,sync)

/nfs/data/pv002 *(rw,no_root_squash,sync)

# Apply the configuration

exportfs -r

# Restart rpcbind and nfs

systemctl restart rpcbind && systemctl restart nfs
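The manual /etc/exports edits above can also be scripted. The sketch below assumes bash and a hypothetical helper, `add_export` (it is not part of nfs-utils); it appends an entry only when the directory is not exported yet, so re-running the setup does not duplicate lines. It does not validate the export options.

```shell
#!/usr/bin/env bash
# add_export FILE DIR OPTIONS  (hypothetical helper)
# Append "DIR *(OPTIONS)" to FILE unless DIR already has an entry there.
add_export() {
  local file="$1" dir="$2" opts="$3"
  touch "$file"
  # The first field of an exports line is the exported path.
  if awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s *(%s)\n' "$dir" "$opts" >> "$file"
}

# On the NFS server (as root), for example:
#   for d in /nfs/data /nfs/data/pv001 /nfs/data/pv002; do
#     mkdir -p "$d"
#     add_export /etc/exports "$d" "rw,no_root_squash,sync"
#   done
#   exportfs -r
```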

Creating the PVs

Next, create two PVs named nfs-pv001 and nfs-pv002. The configuration file nfs-pv001.yaml is:

[centos@k8s-master ~]$ vim nfs-pv001.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv001
  labels:
    pv: nfs-pv001
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv001
    server: 192.168.92.56

The nfs-pv002.yaml file is:

[centos@k8s-master ~]$ vim nfs-pv002.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv002
  labels:
    pv: nfs-pv002
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv002
    server: 192.168.92.56

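Configuration notes:

① capacity sets the PV size to 1Gi.

② accessModes sets the access mode to ReadWriteOnce. The supported access modes are:

- ReadWriteOnce – the PV can be mounted read-write by a single node.

- ReadOnlyMany – the PV can be mounted read-only by many nodes.

- ReadWriteMany – the PV can be mounted read-write by many nodes.

③ persistentVolumeReclaimPolicy sets the PV's reclaim policy to Recycle. The supported policies are:

- Retain – the administrator reclaims the volume manually.

- Recycle – the data in the PV is scrubbed, equivalent to running rm -rf /thevolume/*. Recycle is deprecated in current Kubernetes releases.

- Delete – the corresponding storage asset in the storage provider is deleted, for example an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume.

④ storageClassName sets the PV's class to nfs. This effectively puts the PV in a category, and a PVC can request a PV of a given class by naming that class.

⑤ nfs specifies the NFS server and the directory on it that backs the PV.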
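Because nfs-pv001.yaml and nfs-pv002.yaml differ only in the name and the path, the manifests can be generated instead of copied by hand. A minimal bash sketch with a hypothetical `gen_pv` helper that prints the same fields as the files above; the `pv: <name>` label is kept so the PVC selectors used later still match.

```shell
#!/usr/bin/env bash
# gen_pv NAME NFS_PATH NFS_SERVER [SIZE]  (hypothetical helper)
# Print a PersistentVolume manifest shaped like nfs-pv001.yaml.
gen_pv() {
  local name="$1" nfs_path="$2" server="$3" size="${4:-1Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
  labels:
    pv: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: ${nfs_path}
    server: ${server}
EOF
}

# Example: write and apply both PVs from this section.
#   for i in 001 002; do
#     gen_pv "nfs-pv${i}" "/nfs/data/pv${i}" 192.168.92.56 > "nfs-pv${i}.yaml"
#     kubectl apply -f "nfs-pv${i}.yaml"
#   done
```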

Create the PVs:

[centos@k8s-master ~]$ kubectl apply -f nfs-pv001.yaml

persistentvolume/nfs-pv001 created

[centos@k8s-master ~]$ kubectl apply -f nfs-pv002.yaml

persistentvolume/nfs-pv002 created

[centos@k8s-master ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv001 1Gi RWO Recycle Available nfs 4s

nfs-pv002 1Gi RWO Recycle Available nfs 2s

[centos@k8s-master ~]$

A STATUS of Available means the PV is ready and can be claimed by a PVC.

Creating the PVCs

Next, create two PVCs named nfs-pvc001 and nfs-pvc002. The configuration file nfs-pvc001.yaml is:

[centos@k8s-master ~]$ vim nfs-pvc001.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc001
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs
  selector:
    matchLabels:
      pv: nfs-pv001

The nfs-pvc002.yaml configuration file:

[centos@k8s-master ~]$ vim nfs-pvc002.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc002
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs
  selector:
    matchLabels:
      pv: nfs-pv002

Apply the YAML files to create the PVCs:

[centos@k8s-master ~]$ kubectl apply -f nfs-pvc001.yaml

persistentvolumeclaim/nfs-pvc001 created

[centos@k8s-master ~]$ kubectl apply -f nfs-pvc002.yaml

persistentvolumeclaim/nfs-pvc002 created

[centos@k8s-master ~]$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nfs-pvc001 Bound nfs-pv001 1Gi RWO nfs 6s

nfs-pvc002 Bound nfs-pv002 1Gi RWO nfs 3s

[centos@k8s-master ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv001 1Gi RWO Recycle Bound default/nfs-pvc001 nfs 9m12s

nfs-pv002 1Gi RWO Recycle Bound default/nfs-pvc002 nfs 9m10s

[centos@k8s-master ~]$

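The kubectl get pvc and kubectl get pv output shows that nfs-pvc001 and nfs-pvc002 are bound to nfs-pv001 and nfs-pv002 respectively, so the claims succeeded. Note that each PVC is tied to a specific PV through the label selector; if the selector is omitted, the PVC binds to any available PV that matches its class, access mode, and size.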
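Checking the Bound status by eye works for two claims; a small bash filter can do it for any number. This sketch uses a hypothetical `all_bound` helper and assumes the default `kubectl get pvc --no-headers` column layout, where STATUS is the second column. It prints any unbound claim to stderr and exits non-zero.

```shell
#!/usr/bin/env bash
# all_bound  (hypothetical helper)
# Read `kubectl get pvc --no-headers` output on stdin; fail if any claim
# is not in the Bound phase (column 2 is STATUS in the default output).
all_bound() {
  awk '
    $2 != "Bound" { bad = 1; print "not bound: " $1 " (" $2 ")" > "/dev/stderr" }
    END { exit bad + 0 }
  '
}

# Usage:
#   kubectl get pvc --no-headers | all_bound && echo "all claims bound"
```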

The storage can now be used in a Pod. The Pod configuration file nfs-pod001.yaml is:

[centos@k8s-master ~]$ vim nfs-pod001.yaml

kind: Pod
apiVersion: v1
metadata:
  name: nfs-pod001
spec:
  containers:
    - name: myfrontend
      image: nginx
      volumeMounts:
        - mountPath: "/var/www/html"
          name: nfs-pv001
  volumes:
    - name: nfs-pv001
      persistentVolumeClaim:
        claimName: nfs-pvc001

nfs-pod002.yaml is:

[centos@k8s-master ~]$ vim nfs-pod002.yaml

kind: Pod
apiVersion: v1
metadata:
  name: nfs-pod002
spec:
  containers:
    - name: myfrontend
      image: nginx
      volumeMounts:
        - mountPath: "/var/www/html"
          name: nfs-pv002
  volumes:
    - name: nfs-pv002
      persistentVolumeClaim:
        claimName: nfs-pvc002

As with an ordinary volume, the persistentVolumeClaim entry under volumes tells each Pod to use the volume claimed by nfs-pvc001 or nfs-pvc002.

Apply the YAML files to create nfs-pod001 and nfs-pod002:

[centos@k8s-master ~]$ kubectl apply -f nfs-pod001.yaml

pod/nfs-pod001 created

[centos@k8s-master ~]$ kubectl apply -f nfs-pod002.yaml

pod/nfs-pod002 created

[centos@k8s-master ~]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-75bf876d88-sqqpv 1/1 Running 0 25m

nfs-pod001 1/1 Running 0 12s

nfs-pod002 1/1 Running 0 9s

[centos@k8s-master ~]$

Verify that the PVs are usable:

[centos@k8s-master ~]$ kubectl exec nfs-pod001 -- touch /var/www/html/index001.html

[centos@k8s-master ~]$ kubectl exec nfs-pod002 -- touch /var/www/html/index002.html

[centos@k8s-master ~]$ ls /nfs/data/pv001/

index001.html

[centos@k8s-master ~]$ ls /nfs/data/pv002/

index002.html

[centos@k8s-master ~]$

Enter the pod to check the mount:

[centos@k8s-master ~]$ kubectl exec -it nfs-pod001 -- /bin/bash

root@nfs-pod001:/# df -h

......

192.168.92.56:/nfs/data/pv001 47G 5.2G 42G 11% /var/www/html

......

root@nfs-pod001:/#

Deleting the PVs

Deleting a pod does not delete its PV or PVC, and the data on the NFS storage is kept.

[centos@k8s-master ~]$ kubectl delete -f nfs-pod001.yaml

pod "nfs-pod001" deleted

[centos@k8s-master ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv001 1Gi RWO Recycle Bound default/nfs-pvc001 nfs 34m

nfs-pv002 1Gi RWO Recycle Bound default/nfs-pvc002 nfs 34m

[centos@k8s-master ~]$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nfs-pvc001 Bound nfs-pv001 1Gi RWO nfs 25m

nfs-pvc002 Bound nfs-pv002 1Gi RWO nfs 25m

[centos@k8s-master ~]$ ls /nfs/data/pv001/

index001.html

[centos@k8s-master ~]$

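Next, delete the PVC. The PV is released and returns to the Available state, and because its reclaim policy is Recycle, the data on the NFS storage is deleted.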

[centos@k8s-master ~]$ kubectl delete -f nfs-pvc001.yaml

persistentvolumeclaim "nfs-pvc001" deleted

[centos@k8s-master ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv001 1Gi RWO Recycle Available nfs 35m

nfs-pv002 1Gi RWO Recycle Bound default/pvc002 nfs 35m

[centos@k8s-master ~]$ ls /nfs/data/pv001/

[centos@k8s-master ~]$
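If the data should survive deleting the PVC, the reclaim policy of an existing PV can be switched with `kubectl patch` instead of editing and re-creating the PV. A bash sketch; `set_reclaim_policy` and the `KUBECTL` override variable are hypothetical, and the override exists only so the command can be dry-run with `KUBECTL=echo`.

```shell
#!/usr/bin/env bash
# set_reclaim_policy PV_NAME Retain|Recycle|Delete  (hypothetical helper)
# KUBECTL is a hypothetical override (default: kubectl) for dry runs.
set_reclaim_policy() {
  local pv="$1" policy="$2"
  case "$policy" in
    Retain|Recycle|Delete) ;;
    *) echo "unsupported policy: $policy" >&2; return 2 ;;
  esac
  ${KUBECTL:-kubectl} patch pv "$pv" \
    -p "{\"spec\":{\"persistentVolumeReclaimPolicy\":\"${policy}\"}}"
}

# Example: keep the data of nfs-pv002 when nfs-pvc002 is deleted.
#   set_reclaim_policy nfs-pv002 Retain
```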

Then delete the PV:

[centos@k8s-master ~]$ kubectl delete -f nfs-pv001.yaml

persistentvolume "nfs-pv001" deleted

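Dynamic PV provisioning

Project repository:

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client

(This repository has since been archived; the nfs-client provisioner is now maintained as kubernetes-sigs/nfs-subdir-external-provisioner.)

How the external NFS provisioners work

External NFS provisioners for Kubernetes fall into two categories, depending on whether they act as an NFS client or as an NFS server:

1. nfs-client:

This is the type demonstrated below. It uses Kubernetes' built-in NFS driver to mount the remote NFS server on a local directory, then registers itself as a storage provider associated with a StorageClass. When a user creates a PVC to request a PV, the provisioner compares the PVC's requirements with its own properties; if they match, it creates a subdirectory for the PV inside the mounted NFS directory, giving Pods dynamically provisioned storage.

2. nfs:

Unlike nfs-client, this provisioner does not use Kubernetes' NFS driver to mount a remote NFS share and then divide it up. Instead, it maps a local directory into its container and runs ganesha.nfsd inside the container to serve NFS. Each time a PV is created, it creates the corresponding folder in its local NFS root and exports that subdirectory.

Using NFS to dynamically provision Kubernetes volumes

This article uses nfs-client-provisioner to make an NFS server the persistent storage backend for Kubernetes, with PVs provisioned dynamically. The prerequisites are an NFS server that is already installed and network connectivity between the NFS server and the Kubernetes worker nodes. The nfs-client provisioner is deployed into the cluster as a Deployment and then provides storage to the cluster.

nfs-client-provisioner is a simple external NFS provisioner for Kubernetes. It does not provide NFS itself; an existing NFS server must supply the storage.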

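Deploying nfs-client-provisioner

First, clone the repository to get the YAML files:

git clone https://github.com/kubernetes-incubator/external-storage.git

cp -R external-storage/nfs-client/deploy/ $HOME

cd $HOME/deploy

Edit deployment.yaml

The parameters to change are the NFS server IP address (192.168.92.56) and the exported path (/nfs/data). Each appears in two places (the env section and the volumes section), and both must be set to your actual NFS server and shared directory. The nfs-client-provisioner image is also changed to one pulled from Docker Hub.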

[centos@k8s-master deploy]$ vim deployment.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1 # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  selector: # required by apps/v1
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: willdockerhub/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.92.56
            - name: NFS_PATH
              value: /nfs/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.92.56
            path: /nfs/data

Apply deployment.yaml

kubectl apply -f deployment.yaml

Check the created pod

[centos@k8s-master ~]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-75bf876d88-578lg 1/1 Running 0 51m 10.244.2.131 k8s-node2 <none> <none>

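Creating the StorageClass

When defining the StorageClass, note that its provisioner field must equal the value of the PROVISIONER_NAME environment variable passed to the provisioner; otherwise the provisioner does not know which StorageClass it serves.

You can leave the value unchanged or rename the provisioner, as long as it matches PROVISIONER_NAME in the deployment above.

[centos@k8s-master deploy]$ vim class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false" # "false": the PV's directory is deleted, not archived, when the PVC is deleted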

Apply the YAML file

kubectl apply -f class.yaml

Check the created StorageClass

[centos@k8s-master deploy]$ kubectl get sc

NAME PROVISIONER AGE

managed-nfs-storage fuseim.pri/ifs 95m

[centos@k8s-master deploy]$
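A mismatch between PROVISIONER_NAME and the StorageClass provisioner leaves PVCs Pending, so the two values can be compared before applying. This bash sketch uses line-based awk parsing and assumes the layout of the files above (the `value:` line directly follows `- name: PROVISIONER_NAME`, values are unquoted); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; simple line-based parsing, not a YAML parser.
deploy_provisioner() {
  # Print the value on the line after "- name: PROVISIONER_NAME".
  awk '/name: PROVISIONER_NAME/ { getline; sub(/^[ \t]*value:[ \t]*/, ""); print; exit }' "$1"
}
class_provisioner() {
  # Print the second field of the top-level "provisioner:" line.
  awk '$1 == "provisioner:" { print $2; exit }' "$1"
}
check_provisioner() {
  local d c
  d=$(deploy_provisioner "$1")
  c=$(class_provisioner "$2")
  if [ -n "$d" ] && [ "$d" = "$c" ]; then
    echo "ok: $d"
  else
    echo "mismatch: deployment='$d' class='$c'" >&2
    return 1
  fi
}

# Usage (in the deploy directory):
#   check_provisioner deployment.yaml class.yaml
```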

Configuring authorization

If RBAC is enabled in the cluster, the provisioner must be granted permissions with the following manifest.

[centos@k8s-master deploy]$ vim rbac.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Apply the YAML file

kubectl create -f rbac.yaml

Testing

Create a test PVC

kubectl create -f test-claim.yaml

The claim names its StorageClass, managed-nfs-storage, as follows:

[centos@k8s-master deploy]$ vim test-claim.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
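The `volume.beta.kubernetes.io/storage-class` annotation used above is the older way of selecting a StorageClass; the `spec.storageClassName` field has replaced it. A bash sketch with a hypothetical `gen_pvc` helper that prints an equivalent test claim using the field; its output can be piped straight to `kubectl apply -f -`.

```shell
#!/usr/bin/env bash
# gen_pvc NAME STORAGE_CLASS SIZE [ACCESS_MODE]  (hypothetical helper)
# Print a PVC that selects its StorageClass via spec.storageClassName.
gen_pvc() {
  local name="$1" sc="$2" size="$3" mode="${4:-ReadWriteMany}"
  cat <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ${name}
spec:
  storageClassName: ${sc}
  accessModes:
    - ${mode}
  resources:
    requests:
      storage: ${size}
EOF
}

# Usage:
#   gen_pvc test-claim managed-nfs-storage 1Mi | kubectl apply -f -
```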

Check the created PVC

The PVC is Bound, and the bound volume is pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3.

[centos@k8s-master deploy]$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim Bound pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3 1Mi RWX managed-nfs-storage 34m

[centos@k8s-master deploy]$

Check the automatically created PV

[centos@k8s-master deploy]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3 1Mi RWX Delete Bound default/test-claim managed-nfs-storage 34m

[centos@k8s-master deploy]$

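Next, go to the NFS export directory: a directory corresponding to the volume has been created.

The directory name combines the namespace, the PVC name, and the PV name (pvc- followed by a UID):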

[root@k8s-master ~]# cd /nfs/data/

[root@k8s-master data]# ll

total 0

drwxrwxrwx 2 root root 21 Jan 29 12:03 default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3
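The naming can be reproduced when looking up a claim's data on the NFS server. A minimal bash sketch with a hypothetical `pv_dir_name` helper that follows the `<namespace>-<pvc name>-<pv name>` pattern visible in the listing above; the pattern is inferred from that listing, not taken from the provisioner's source.

```shell
#!/usr/bin/env bash
# pv_dir_name NAMESPACE PVC_NAME PV_NAME  (hypothetical helper)
# Directory created under NFS_PATH, per the listing above:
#   <namespace>-<pvc name>-<pv name>
pv_dir_name() {
  local ns="$1" pvc="$2" pv="$3"
  printf '%s-%s-%s\n' "$ns" "$pvc" "$pv"
}

# Usage on the NFS server, taking the PV name from the claim:
#   pv=$(kubectl get pvc test-claim -o jsonpath='{.spec.volumeName}')
#   ls -l "/nfs/data/$(pv_dir_name default test-claim "$pv")"
```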

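Creating a test pod

The pod uses the PVC just created, test-claim; note that the image is again changed to one from Docker Hub.

Once it is created, attaching to the pod and reading or writing some files confirms that the pod's /mnt is backed by the NFS storage.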

[centos@k8s-master deploy]$ vim test-pod.yaml

kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: willdockerhub/busybox:1.24
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim

Apply the YAML file

kubectl create -f test-pod.yaml

Check the test pod

[centos@k8s-master ~]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-75bf876d88-578lg 1/1 Running 0 51m 10.244.2.131 k8s-node2 <none> <none>

test-pod 0/1 Completed 0 41m 10.244.1.129 k8s-node1 <none> <none>

On the NFS server, check that the volume's subdirectory under the shared directory contains the SUCCESS file.

[root@k8s-master ~]# cd /nfs/data/

[root@k8s-master data]# ll

total 0

drwxrwxrwx 2 root root 21 Jan 29 12:03 default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3

[root@k8s-master data]#

[root@k8s-master data]# cd default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3/

[root@k8s-master default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3]# ll

total 0

-rw-r--r-- 1 root root 0 Jan 29 12:03 SUCCESS
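The same check can be scripted on the NFS server. This bash sketch, with a hypothetical `has_success_file` helper, globs for `<namespace>-<pvc>-pvc-*` because the PV name contains a generated UID, and reports whether the SUCCESS marker written by test-pod is present.

```shell
#!/usr/bin/env bash
# has_success_file NFS_ROOT NAMESPACE PVC_NAME  (hypothetical helper)
has_success_file() {
  local root="$1" ns="$2" pvc="$3" f
  for f in "$root/$ns-$pvc"-pvc-*/SUCCESS; do
    # With no match the pattern stays unexpanded and -f is false.
    [ -f "$f" ] && { echo "found: $f"; return 0; }
  done
  echo "no SUCCESS file under $root for $ns/$pvc" >&2
  return 1
}

# Usage on the NFS server:
#   has_success_file /nfs/data default test-claim
```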

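Cleaning up the test environment

Delete the test pod

kubectl delete -f test-pod.yaml

Delete the test PVC

kubectl delete -f test-claim.yaml

In the shared directory on the NFS server, the PV's directory has now been deleted (archiveOnDelete is "false" in class.yaml).

Official WordPress example

Official tutorial:

https://kubernetes.io/docs/tutorials/stateful-application/mysql-wordpress-persistent-volume/

Creating the Secret

Create a Secret to store the MySQL database password; here the password is set to 123456.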

kubectl create secret generic mysql-pass --from-literal=password=123456

Check the created Secret

[centos@k8s-master ~]$ kubectl get secrets

NAME TYPE DATA AGE

mysql-pass Opaque 1 68m

[centos@k8s-master ~]$
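Secret values are stored base64-encoded under `.data`, so `kubectl get secret` does not print the password directly. A bash sketch that decodes one key to confirm what the MySQL and WordPress pods will receive; `secret_value` and the `KUBECTL` override are hypothetical, and `base64 -d` assumes GNU coreutils or a compatible base64.

```shell
#!/usr/bin/env bash
# secret_value SECRET_NAME KEY  (hypothetical helper)
# KUBECTL is a hypothetical override (default: kubectl) used for testing.
secret_value() {
  ${KUBECTL:-kubectl} get secret "$1" -o jsonpath="{.data.$2}" | base64 -d
  echo
}

# Usage:
#   secret_value mysql-pass password
```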

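Deploying MySQL

The MySQL container mounts the persistent volume at /var/lib/mysql, and the MYSQL_ROOT_PASSWORD environment variable takes the database password from the Secret.

Note that three changes were made to the official example:

1. The following was added to the PersistentVolumeClaim:

annotations:
  volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"

2. The storage request was reduced to 1Gi, for testing only.

3. The MySQL image was changed to mysql:latest.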

[centos@k8s-master ~]$ vim wordpress-mysql.yaml

apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  ports:
    - port: 3306
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
  labels:
    app: wordpress
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      containers:
        - image: mysql:latest
          name: mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass
                  key: password
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pv-claim

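Deploying WordPress

Again, four changes were made to the official example:

1. The Service type was changed to NodePort:

type: NodePort

2. The following was added to the PersistentVolumeClaim:

annotations:
  volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"

3. The storage request was reduced to 1Gi, for testing only.

4. The WordPress image was changed to wordpress:latest.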

[centos@k8s-master ~]$ vim wordpress.yaml

apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  ports:
    - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: NodePort
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
  labels:
    app: wordpress
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
        - image: wordpress:latest
          name: wordpress
          env:
            - name: WORDPRESS_DB_HOST
              value: wordpress-mysql
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass
                  key: password
          ports:
            - containerPort: 80
              name: wordpress
          volumeMounts:
            - name: wordpress-persistent-storage
              mountPath: /var/www/html
      volumes:
        - name: wordpress-persistent-storage
          persistentVolumeClaim:
            claimName: wp-pv-claim

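Changing the MySQL authentication method

Since MySQL 8.0 the default authentication plugin is caching_sha2_password, which clients such as WordPress did not yet support at the time of writing, so root is switched back to the older mysql_native_password plugin here. (You can also avoid the problem by using an image such as mysql:5.7.25.)

# Get the name of the MySQL pod

$ kubectl get pod

# Enter the MySQL pod

$ kubectl exec -it wordpress-mysql-5fd57746c7-8dhrq -- /bin/bash

# Log in to the database (the password is the value of MYSQL_ROOT_PASSWORD, 123456 here)

mysql -u root -p

# Switch to the mysql database

use mysql;

# Check the authentication plugin of each user

mysql> select host, user, plugin from user;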

+-----------+------------------+-----------------------+

| host | user | plugin |

+-----------+------------------+-----------------------+

| % | root | caching_sha2_password |

| localhost | mysql.infoschema | caching_sha2_password |

| localhost | mysql.session | caching_sha2_password |

| localhost | mysql.sys | caching_sha2_password |

| localhost | root | caching_sha2_password |

+-----------+------------------+-----------------------+

5 rows in set (0.00 sec)

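# Set the password and make it never expire (use your own password)

ALTER USER 'root'@'%' IDENTIFIED BY '123456' PASSWORD EXPIRE NEVER;

# Switch root to the mysql_native_password plugin (again, use your own password)

ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY '123456';

# Reload the privilege tables

FLUSH PRIVILEGES;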
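The interactive SQL steps above can also be run as one non-interactive command through `kubectl exec`. A bash sketch; `use_native_auth` and the `KUBECTL` dry-run override are hypothetical, and it assumes the pod name and root password used above. Newer MySQL releases disable mysql_native_password by default (8.4) or remove it entirely (9.0), so with `mysql:latest` this statement may fail; pinning an older image tag, as suggested above, avoids that.

```shell
#!/usr/bin/env bash
# use_native_auth POD_NAME ROOT_PASSWORD  (hypothetical helper)
# KUBECTL is a hypothetical override (default: kubectl) for dry runs.
use_native_auth() {
  local pod="$1" pw="$2"
  ${KUBECTL:-kubectl} exec "$pod" -- mysql -uroot -p"$pw" -e \
    "ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY '${pw}'; FLUSH PRIVILEGES;"
}

# Usage (pod name from `kubectl get pod`):
#   use_native_auth wordpress-mysql-5fd57746c7-8dhrq 123456
```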

Check the created pods

[centos@k8s-master ~]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-75bf876d88-578lg 1/1 Running 2 9h

wordpress-8556476bc5-v79q2 1/1 Running 12 156m

wordpress-mysql-5fd57746c7-8dhrq 1/1 Running 0 13m

Check the created PVs and PVCs

[centos@k8s-master ~]$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-a528b88f-23c7-11e9-9d07-000c291c25f3 1Gi RWO managed-nfs-storage 12m

wp-pv-claim Bound pvc-8f93dd7e-23b3-11e9-a2b5-000c291c25f3 1Gi RWO managed-nfs-storage 155m

[centos@k8s-master ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-8f93dd7e-23b3-11e9-a2b5-000c291c25f3 1Gi RWO Delete Bound default/wp-pv-claim managed-nfs-storage 155m

pvc-a528b88f-23c7-11e9-9d07-000c291c25f3 1Gi RWO Delete Bound default/mysql-pv-claim managed-nfs-storage 12m

[centos@k8s-master ~]$

Check the wordpress Service

[centos@k8s-master ~]$ kubectl get svc wordpress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

wordpress NodePort 10.106.151.14 <none> 80:30533/TCP 157m

[centos@k8s-master ~]$

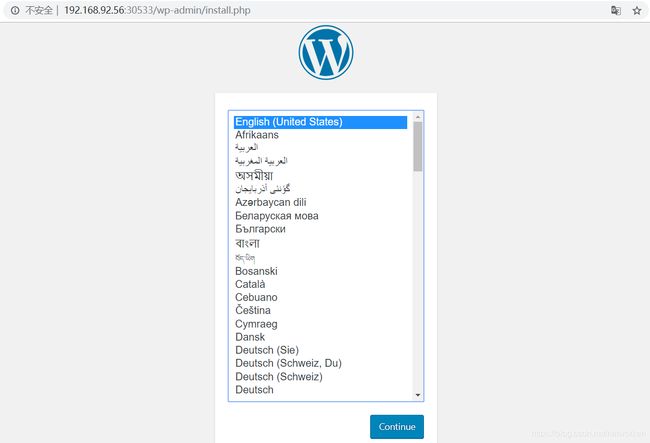

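The service's node port is 30533, so WordPress is reached through the NodePort.

Accessing WordPress

http://192.168.92.56:30533

Checking the created volumes on the NFS server

The volume directories now hold the persisted MySQL and WordPress data, so deleting and recreating the pods does not lose any data.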

[centos@k8s-master ~]$ cd /nfs/data/

[centos@k8s-master data]$ ll

total 8

drwxrwxrwx 7 polkitd ssh_keys 4096 Jan 29 21:16 default-mysql-pv-claim-pvc-a528b88f-23c7-11e9-9d07-000c291c25f3

drwxrwxrwx 5 root root 4096 Jan 29 21:16 default-wp-pv-claim-pvc-8f93dd7e-23b3-11e9-a2b5-000c291c25f3

[centos@k8s-master data]$

[centos@k8s-master data]$ cd default-mysql-pv-claim-pvc-a528b88f-23c7-11e9-9d07-000c291c25f3/

[centos@k8s-master default-mysql-pv-claim-pvc-a528b88f-23c7-11e9-9d07-000c291c25f3]$ ll

total 181172

-rw-r----- 1 polkitd ssh_keys 56 Jan 29 21:13 auto.cnf

-rw-r----- 1 polkitd ssh_keys 3090087 Jan 29 21:13 binlog.000001

-rw-r----- 1 polkitd ssh_keys 964626 Jan 29 21:28 binlog.000002

-rw-r----- 1 polkitd ssh_keys 32 Jan 29 21:13 binlog.index

-rw------- 1 polkitd ssh_keys 1676 Jan 29 21:13 ca-key.pem

-rw-r--r-- 1 polkitd ssh_keys 1112 Jan 29 21:13 ca.pem

-rw-r--r-- 1 polkitd ssh_keys 1112 Jan 29 21:13 client-cert.pem

-rw------- 1 polkitd ssh_keys 1676 Jan 29 21:13 client-key.pem

-rw-r----- 1 polkitd ssh_keys 5933 Jan 29 21:13 ib_buffer_pool

-rw-r----- 1 polkitd ssh_keys 12582912 Jan 29 21:28 ibdata1

-rw-r----- 1 polkitd ssh_keys 50331648 Jan 29 21:28 ib_logfile0

-rw-r----- 1 polkitd ssh_keys 50331648 Jan 29 21:13 ib_logfile1

-rw-r----- 1 polkitd ssh_keys 12582912 Jan 29 21:14 ibtmp1

drwxr-x--- 2 polkitd ssh_keys 187 Jan 29 21:13 #innodb_temp

drwxr-x--- 2 polkitd ssh_keys 143 Jan 29 21:13 mysql

-rw-r----- 1 polkitd ssh_keys 31457280 Jan 29 21:20 mysql.ibd

drwxr-x--- 2 polkitd ssh_keys 4096 Jan 29 21:13 performance_schema

-rw------- 1 polkitd ssh_keys 1676 Jan 29 21:13 private_key.pem

-rw-r--r-- 1 polkitd ssh_keys 452 Jan 29 21:13 public_key.pem

-rw-r--r-- 1 polkitd ssh_keys 1112 Jan 29 21:13 server-cert.pem

-rw------- 1 polkitd ssh_keys 1676 Jan 29 21:13 server-key.pem

drwxr-x--- 2 polkitd ssh_keys 28 Jan 29 21:13 sys

-rw-r----- 1 polkitd ssh_keys 12582912 Jan 29 21:28 undo_001

-rw-r----- 1 polkitd ssh_keys 11534336 Jan 29 21:28 undo_002

drwxr-x--- 2 polkitd ssh_keys 287 Jan 29 21:19 wordpress

Delete a pod; the data and configuration are not lost:

[centos@k8s-master ~]$ kubectl delete pod wordpress-mysql-5fd57746c7-87f2m

PV cannot be deleted

A PV is stuck in Terminating and cannot be deleted:

[centos@k8s-master ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv3 200M RWX Recycle Terminating kube-system/redis-data-redis-app-5 7h54m

nfs-pv4 200M RWX Recycle Terminating kube-system/redis-data-redis-app-2 7h54m

[centos@k8s-master ~]$ kubectl delete pv nfs-pv3

persistentvolume "nfs-pv3" deleted

^C

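Removing the kubernetes.io/pv-protection finalizer forces the deletion of a PV stuck in Terminating; after editing, save with :wq.

[centos@k8s-master ~]$ kubectl edit pv nfs-pv3

......

  finalizers:
  - kubernetes.io/pv-protection # delete this line and the Terminating PV is removed automatically

......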
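Instead of `kubectl edit`, the finalizer can be cleared non-interactively with a merge patch. The kubernetes.io/pv-protection finalizer exists to keep a PV that is still bound to a PVC from being removed, so confirm the claim and its pods are gone first. A bash sketch; `clear_pv_finalizers` and the `KUBECTL` dry-run override are hypothetical.

```shell
#!/usr/bin/env bash
# clear_pv_finalizers PV_NAME  (hypothetical helper)
# KUBECTL is a hypothetical override (default: kubectl) for dry runs.
clear_pv_finalizers() {
  ${KUBECTL:-kubectl} patch pv "$1" --type=merge -p '{"metadata":{"finalizers":null}}'
}

# Usage:
#   clear_pv_finalizers nfs-pv3
#   clear_pv_finalizers nfs-pv4
```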
