Caffe2 - (9) MNIST Handwritten Digit Recognition


- LeNet - training a CNN; ReLU activations are used instead of Sigmoid.

- model helper

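```python
import matplotlib.pyplot as plt
import numpy as np
import os
import shutil

import caffe2.python.predictor.predictor_exporter as pe
from caffe2.python import core, model_helper, net_drawer, workspace, visualize, brew

# --caffe2_log_level=0 prints the usual INFO messages; change it to
# --caffe2_log_level=-1 for very detailed initialization logs (a higher value such as 1 hides them)
core.GlobalInit(['caffe2', '--caffe2_log_level=0'])

# Folders (names are assumptions; adjust to your setup): data_folder holds the MNIST data,
# root_folder receives the logs, the .pbtxt files and the exported model
data_folder = 'data/mnist'
root_folder = 'mnist_files'
if os.path.exists(root_folder):
    shutil.rmtree(root_folder)  # start from an empty output folder
os.makedirs(root_folder)
workspace.ResetWorkspace(root_folder)  # files written with to_file=1 go here

print("Necessities imported!")
```

1. MNIST Data Preparation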

Download the MNIST dataset and extract it.

Caffe2 provides a tool, make_mnist_db, that converts MNIST into a LevelDB database. It can be found in caffe2/build/caffe2/binaries or at /usr/local/bin/make_mnist_db:

```bash
/usr/local/bin/make_mnist_db --channel_first --db leveldb \
    --image_file data/mnist/train-images-idx3-ubyte \
    --label_file data/mnist/train-labels-idx1-ubyte \
    --output_file data/mnist/mnist-train-nchw-leveldb

/usr/local/bin/make_mnist_db --channel_first --db leveldb \
    --image_file data/mnist/t10k-images-idx3-ubyte \
    --label_file data/mnist/t10k-labels-idx1-ubyte \
    --output_file data/mnist/mnist-test-nchw-leveldb
```

The same conversion to LevelDB from Python:

```python
import os

def GenerateDB(image, label, name):
    '''Calls the make_mnist_db binary to generate a leveldb from a mnist dataset'''
    name = os.path.join(data_folder, name)
    print('DB: ', name)
    if not os.path.exists(name):
        syscall = ("/usr/local/bin/make_mnist_db --channel_first --db leveldb"
                   " --image_file " + image + " --label_file " + label +
                   " --output_file " + name)
        # print("Creating database with: ", syscall)
        os.system(syscall)
    else:
        print("Database exists already. Delete the folder if you have issues/corrupted DB, then rerun this.")
        if os.path.exists(os.path.join(name, "LOCK")):
            # print("Deleting the pre-existing lock file")
            os.remove(os.path.join(name, "LOCK"))

data_folder = 'data/mnist'
image_file_train = os.path.join(data_folder, "train-images-idx3-ubyte")
label_file_train = os.path.join(data_folder, "train-labels-idx1-ubyte")
image_file_test = os.path.join(data_folder, "t10k-images-idx3-ubyte")
label_file_test = os.path.join(data_folder, "t10k-labels-idx1-ubyte")

GenerateDB(image_file_train, label_file_train, "mnist-train-nchw-leveldb")
GenerateDB(image_file_test, label_file_test, "mnist-test-nchw-leveldb")
```

Caffe2 also provides pre-converted MNIST datasets:

- MNIST-nchw-lmdb - lmdb, NCHW

- MNIST-nchw-leveldb - leveldb, NCHW

- MNIST-nchw-minidb - minidb, NCHW
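make_mnist_db reads the raw IDX files from the download. For sanity-checking those files before converting them, here is a minimal plain-Python sketch (not part of Caffe2; the helper name read_idx is hypothetical) of the IDX layout: two zero bytes, a type code (0x08 means unsigned bytes), the number of dimensions, then each dimension size as a big-endian 32-bit integer, followed by the data in row-major order.

```python
import struct


def read_idx(raw):
    """Parse an unsigned-byte IDX file (the raw MNIST format).

    Returns (dims, data): dims is a tuple of dimension sizes and data is
    the remaining payload as bytes, in row-major order.
    """
    zero, type_code, ndim = struct.unpack('>HBB', raw[:4])
    if zero != 0 or type_code != 0x08:
        raise ValueError('not an unsigned-byte IDX file')
    header_end = 4 + 4 * ndim
    # each dimension size is a big-endian unsigned 32-bit integer
    dims = struct.unpack('>' + 'I' * ndim, raw[4:header_end])
    data = raw[header_end:]
    expected = 1
    for d in dims:
        expected *= d
    if len(data) != expected:
        raise ValueError('expected %d data bytes, got %d' % (expected, len(data)))
    return dims, data
```

Reading data/mnist/train-images-idx3-ubyte this way should report dims (60000, 28, 28), and the matching label file (60000,).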

2. LeNet Network Modules

The code consists of four parts:

- Data input - the AddInput function

- Network definition - the AddLeNetModel function

- Training - the AddTrainingOperators function

- Bookkeeping - the AddBookkeepingOperators function

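2.1 The AddInput Function

- Reads the MNIST data from the DB, which stores the image pixel values. The network reads the data in the format [batch_size, num_channels, height, width], here [batch_size, 1, 28, 28], with datatype uint8; the labels have the format [batch_size], with datatype int.

- The network performs floating-point computations, so the data is cast to float.

- For numerical stability, the data is scaled from [0, 255] to [0, 1] (the code multiplies by 1/256, so the largest value is 255/256). The scaling is computed in place.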
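To make the preprocessing concrete, here is a pure-Python sketch (an illustration, not Caffe2 code; the helper name to_nchw_float is hypothetical) of what the Cast and Scale steps do to one batch: every uint8 pixel becomes a float multiplied by 1/256, and the flat pixel stream is laid out as nested [N][C][H][W] lists, the NCHW order used in this tutorial.

```python
def to_nchw_float(pixels, batch_size, channels=1, height=28, width=28):
    """Cast uint8 pixels to floats scaled by 1/256 and nest them as [N][C][H][W]."""
    expected = batch_size * channels * height * width
    if len(pixels) != expected:
        raise ValueError('expected %d values, got %d' % (expected, len(pixels)))
    scale = 1.0 / 256
    it = iter(pixels)
    # the comprehensions consume the pixels in row-major (N, C, H, W) order
    return [[[[next(it) * scale for _ in range(width)]
              for _ in range(height)]
             for _ in range(channels)]
            for _ in range(batch_size)]
```

Because 255 × 1/256 = 0.99609375, the brightest pixel maps to a value just below 1.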

```python
def AddInput(model, batch_size, db, db_type):
    # load the data
    data_uint8, label = model.TensorProtosDBInput(
        [], ["data_uint8", "label"], batch_size=batch_size,
        db=db, db_type=db_type)
    # cast the data to float
    data = model.Cast(data_uint8, "data", to=core.DataType.FLOAT)
    # scale data from [0,255] down to [0,1]
    data = model.Scale(data, data, scale=float(1./256))
    # don't need the gradient for the backward pass
    data = model.StopGradient(data, data)
    return data, label
```

2.2 The AddLeNetModel Function

- The network takes data as input and outputs, for each of the 10 classes, a probability in [0, 1].

- The output layer is a Softmax.
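The image-size comments in AddLeNetModel follow from the usual output-size rule for an unpadded convolution or pooling window, out = (in - kernel) // stride + 1. A small sketch (helper names hypothetical) that traces 28 → 24 → 12 → 8 → 4 and recovers the fc3 input size 50 × 4 × 4:

```python
def out_size(size, kernel, stride=1):
    """Side length after an unpadded conv/pool window: (size - kernel) // stride + 1."""
    return (size - kernel) // stride + 1


def lenet_sizes(side=28):
    """Trace the image side length through the layers of AddLeNetModel."""
    conv1 = out_size(side, kernel=5)             # 28 -> 24
    pool1 = out_size(conv1, kernel=2, stride=2)  # 24 -> 12
    conv2 = out_size(pool1, kernel=5)            # 12 -> 8
    pool2 = out_size(conv2, kernel=2, stride=2)  # 8 -> 4
    fc3_dim_in = 50 * pool2 * pool2              # conv2 has 50 output channels
    return [conv1, pool1, conv2, pool2], fc3_dim_in
```

lenet_sizes() returns ([24, 12, 8, 4], 800), matching dim_in=50 * 4 * 4 for fc3.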

```python
def AddLeNetModel(model, data):
    '''
    Standard LeNet model: from data to the softmax prediction.
    convolutional layer:
        dim_in - number of input channels
        dim_out - number of output channels
    each Conv and MaxPool layer changes the image size.
    For example, kernel of size 5 reduces each side of an image by 4.
    MaxPool layer, kernel and stride sizes equal 2, which divides each side in half.
    '''
    # Image size: 28 x 28 -> 24 x 24
    conv1 = brew.conv(model, data, 'conv1', dim_in=1, dim_out=20, kernel=5)
    # Image size: 24 x 24 -> 12 x 12
    pool1 = brew.max_pool(model, conv1, 'pool1', kernel=2, stride=2)
    # Image size: 12 x 12 -> 8 x 8
    conv2 = brew.conv(model, pool1, 'conv2', dim_in=20, dim_out=50, kernel=5)
    # Image size: 8 x 8 -> 4 x 4
    pool2 = brew.max_pool(model, conv2, 'pool2', kernel=2, stride=2)
    # 50 * 4 * 4 stands for dim_out from previous layer multiplied by the image size
    fc3 = brew.fc(model, pool2, 'fc3', dim_in=50 * 4 * 4, dim_out=500)
    fc3 = brew.relu(model, fc3, fc3)
    pred = brew.fc(model, fc3, 'pred', 500, 10)
    softmax = brew.softmax(model, pred, 'softmax')
    return softmax


def AddAccuracy(model, softmax, label):
    """Accuracy op to estimate the model"""
    accuracy = brew.accuracy(model, [softmax, label], "accuracy")
    return accuracy
```

2.3 AddTrainingOperators

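Training the model requires adding training operators:

Operator LabelCrossEntropy - computes the cross entropy between the input and the label. The usual pattern is Softmax + LabelCrossEntropy + Loss:

```python
xent = model.LabelCrossEntropy([softmax, label], 'xent')
```

Operator AveragedLoss - computes the average loss over the batch; its input is the cross entropy:

```python
loss = model.AveragedLoss(xent, "loss")
```

Function AddAccuracy - computes the model accuracy, used for bookkeeping:

```python
AddAccuracy(model, softmax, label)
```

Gradient operators - compute the gradients with respect to the loss:

```python
model.AddGradientOperators([loss])
```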
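The arithmetic behind the loss and accuracy steps is small enough to write out by hand. The pure-Python sketch below (hypothetical helper names, not the Caffe2 kernels) computes the per-example cross entropy -log(p[label]) from softmax outputs, averages it over the batch as AveragedLoss does, and counts an example as correct when its highest-probability class equals the label (top-1 accuracy). The 1e-20 floor inside the log is an assumption added here to avoid log(0).

```python
import math


def label_cross_entropy(probs, labels, floor=1e-20):
    """Per-example cross entropy -log(p[label]); the floor (assumed) avoids log(0)."""
    return [-math.log(max(p[y], floor)) for p, y in zip(probs, labels)]


def averaged_loss(xent):
    """Mean of the per-example cross entropies over the batch."""
    return sum(xent) / len(xent)


def top1_accuracy(probs, labels):
    """Fraction of examples whose arg-max class equals the label."""
    correct = sum(1 for p, y in zip(probs, labels)
                  if max(range(len(p)), key=lambda k: p[k]) == y)
    return correct / len(labels)
```

For a batch of two 3-class predictions [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]] with labels [0, 1], the cross entropies are about 0.357 and 2.303, the averaged loss is about 1.330, and the accuracy is 0.5.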

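Operator Iter - counts the training iterations:

```python
ITER = brew.iter(model, "iter")
```

Learning rate - with the "step" policy, $\mathrm{lr} = \mathrm{base\_lr} \cdot \gamma^{\lfloor t / \mathrm{stepsize} \rfloor}$, where $t$ is the iteration count; with stepsize=1 this is $\mathrm{lr} = \mathrm{base\_lr} \cdot \gamma^{t}$. Since the optimization minimizes the loss, base_lr is negative, so the update moves downhill:

```python
LR = model.LearningRate(ITER, "LR", base_lr=-0.1, policy="step", stepsize=1, gamma=0.999)
```

ONE - a constant used in the gradient update. It only needs to be created once, so it is placed in param_init_net:

```python
ONE = model.param_init_net.ConstantFill([], "ONE", shape=[1], value=1.0)
```

Every parameter is updated. ModelHelper keeps track of each parameter's gradient (model.param_to_grad), and the update is a weighted sum: $\mathrm{param} = \mathrm{param} + \mathrm{param\_grad} \cdot \mathrm{LR}$.

```python
for param in model.params:
    param_grad = model.param_to_grad[param]
    model.WeightedSum([param, ONE, param_grad, LR], param)
```

Operator Checkpoint - periodically saves the model parameters:

```python
model.Checkpoint([ITER] + model.params, [],
                 db="mnist_lenet_checkpoint_%05d.lmdb",  # name pattern of the saved files
                 db_type="lmdb",
                 every=20)  # save every 20 iterations
```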
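To see how the learning rate, ONE and WeightedSum combine, here is a pure-Python sketch (hypothetical helpers; plain floats instead of blobs) of the step schedule lr = base_lr · γ^⌊t/stepsize⌋ and the update param = 1.0 · param + LR · param_grad, run on a toy one-parameter loss (w - 3)^2 whose gradient is 2(w - 3). Because base_lr is negative, adding LR · grad moves w downhill toward 3.

```python
def step_lr(it, base_lr=-0.1, gamma=0.999, stepsize=1):
    """'step' policy: base_lr * gamma ** (it // stepsize)."""
    return base_lr * gamma ** (it // stepsize)


def weighted_sum(x0, w0, x1, w1):
    """WeightedSum with two (value, weight) pairs: w0 * x0 + w1 * x1."""
    return w0 * x0 + w1 * x1


def train_toy(total_iters=200, w=0.0):
    """Minimize (w - 3)**2 with the update rule used in AddTrainingOperators."""
    one = 1.0
    for it in range(total_iters):
        grad = 2.0 * (w - 3.0)              # d/dw of (w - 3)^2
        lr = step_lr(it)                    # negative learning rate
        w = weighted_sum(w, one, grad, lr)  # param = param + param_grad * LR
    return w
```

After 200 iterations train_toy() is within a tiny tolerance of 3, because each step shrinks the distance to the minimum by a factor of 1 - 0.2 · 0.999^t.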

The AddTrainingOperators function:

```python
def AddTrainingOperators(model, softmax, label):
    """Training operators to the model."""
    xent = model.LabelCrossEntropy([softmax, label], 'xent')
    # compute the loss
    loss = model.AveragedLoss(xent, "loss")
    # compute the model accuracy
    AddAccuracy(model, softmax, label)
    # add the gradient operators for the loss
    model.AddGradientOperators([loss])
    # SGD optimization: iteration counter
    ITER = brew.iter(model, "iter")
    # learning-rate schedule
    LR = model.LearningRate(ITER, "LR", base_lr=-0.1, policy="step", stepsize=1, gamma=0.999)
    # the constant ONE, created once in param_init_net
    ONE = model.param_init_net.ConstantFill([], "ONE", shape=[1], value=1.0)
    # update every parameter
    for param in model.params:
        # ModelHelper tracks the gradient blob of each parameter
        param_grad = model.param_to_grad[param]
        # weighted-sum update: param = param + param_grad * LR
        model.WeightedSum([param, ONE, param_grad, LR], param)
```

2.4 AddBookkeepingOperators

This function does not affect training; it only prints and saves logs.

```python
def AddBookkeepingOperators(model):
    """
    Only collect statistics and print them to file or to logs.
    """
    # Print the blob contents; to_file=1 writes them to a file
    # saved at root_folder/[blob name]
    model.Print('accuracy', [], to_file=1)
    model.Print('loss', [], to_file=1)
    # Summarize each parameter and its gradient: mean, std, min and max
    for param in model.params:
        model.Summarize(param, [], to_file=1)
        model.Summarize(model.param_to_grad[param], [], to_file=1)
```

3. The LeNet Network

3.1 Defining the LeNet Networks

```python
arg_scope = {"order": "NCHW"}

# training network
train_model = model_helper.ModelHelper(name="mnist_train", arg_scope=arg_scope)
# uses the pre-converted LMDB dataset; for the DB built by GenerateDB,
# use 'mnist-train-nchw-leveldb' with db_type='leveldb' instead
data, label = AddInput(train_model, batch_size=64,
                       db=os.path.join(data_folder, 'mnist-train-nchw-lmdb'),
                       db_type='lmdb')
softmax = AddLeNetModel(train_model, data)
AddTrainingOperators(train_model, softmax, label)
AddBookkeepingOperators(train_model)

# testing network (init_params=False: it uses the parameters trained by train_model)
test_model = model_helper.ModelHelper(name="mnist_test", arg_scope=arg_scope, init_params=False)
data, label = AddInput(test_model, batch_size=100,
                       db=os.path.join(data_folder, 'mnist-test-nchw-lmdb'),
                       db_type='lmdb')
softmax = AddLeNetModel(test_model, data)
AddAccuracy(test_model, softmax, label)

# deployment network: only the LeNet model, without data input or training operators
deploy_model = model_helper.ModelHelper(name="mnist_deploy", arg_scope=arg_scope, init_params=False)
AddLeNetModel(deploy_model, "data")
```

3.2 Visualizing LeNet

Caffe2 provides a visualization tool, net_drawer (it also needs the pydot Python package). Install graphviz first:

```bash
sudo yum install graphviz
```

Visualizing the network:

Show all parameters and operators:

```python
graph = net_drawer.GetPydotGraph(train_model.net.Proto().op, "mnist", rankdir="LR")
graph.write_png('graph.png')
```

Show only the operators:

```python
graph = net_drawer.GetPydotGraphMinimal(train_model.net.Proto().op, "mnist", rankdir="LR", minimal_dependency=True)
graph.write_png('graph_minimal.png')
```

Save the network definitions to files, similar to Caffe's network definition files:

```python
protos = [
    ("train_net.pbtxt", train_model.net.Proto()),
    ("train_init_net.pbtxt", train_model.param_init_net.Proto()),
    ("test_net.pbtxt", test_model.net.Proto()),
    ("test_init_net.pbtxt", test_model.param_init_net.Proto()),
    ("deploy_net.pbtxt", deploy_model.net.Proto()),
]
for filename, proto in protos:
    with open(os.path.join(root_folder, filename), 'w') as fid:
        fid.write(str(proto))
print("Protocol buffers files have been created in your root folder: " + root_folder)
```

3.3 Training LeNet

主要处理步骤:

初始化网络:

workspace.RunNetOnce(train_model.param_init_net)创建网络:

workspace.CreateNet(train_model.net)设置训练迭代次数,并创建数组记录每次迭代的 accuracy 和loss:

total_iters = 200 accuracy = np.zeros(total_iters) loss = np.zeros(total_iters)网络训练,主要是通过调用

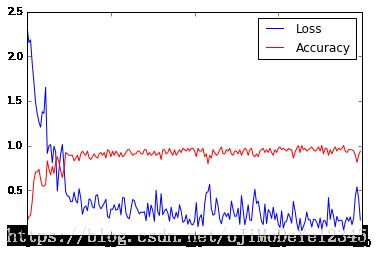

workspace.RunNet,并传递网络名train_model.net.Proto().name:for i in range(total_iters): workspace.RunNet(train_model.net.Proto().name) accuracy[i] = workspace.FetchBlob('accuracy') loss[i] = workspace.FetchBlob('loss')可视化训练 accuracy 和 loss.

The complete LeNet training procedure:

```python
# initialize the network
workspace.RunNetOnce(train_model.param_init_net)
# create the network
workspace.CreateNet(train_model.net, overwrite=True)
# number of iterations, plus arrays for accuracy and loss
total_iters = 200
accuracy = np.zeros(total_iters)
loss = np.zeros(total_iters)
# train the network
for i in range(total_iters):
    workspace.RunNet(train_model.net)
    accuracy[i] = workspace.FetchBlob('accuracy')
    loss[i] = workspace.FetchBlob('loss')
# plot the training accuracy and loss
plt.plot(loss, 'b')
plt.plot(accuracy, 'r')
plt.legend(('Loss', 'Accuracy'), loc='upper right')
```

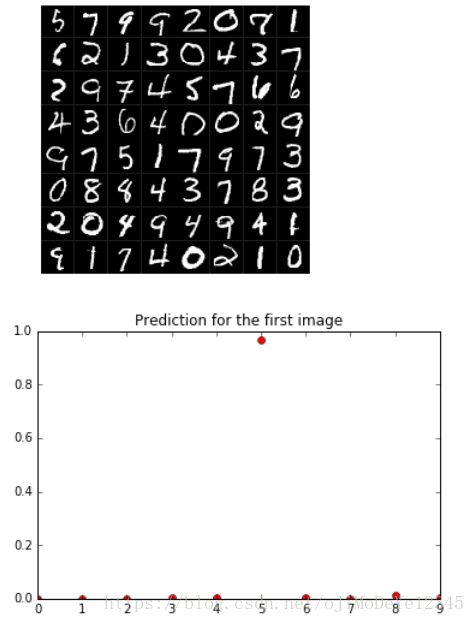

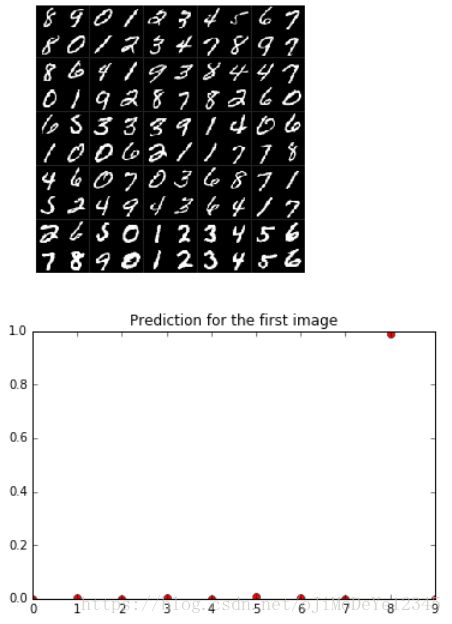

3.4 Inspecting LeNet's Intermediate Data

plt.figure()

data = workspace.FetchBlob('data')

_ = visualize.NCHW.ShowMultiple(data)

plt.figure()

softmax = workspace.FetchBlob('softmax')

_ = plt.plot(softmax[0], 'ro')

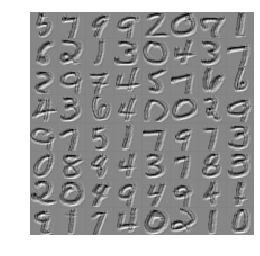

plt.title('Prediction for the first image')# Conv 层数据

plt.figure()

conv = workspace.FetchBlob('conv1')

shape = list(conv.shape)

shape[1] = 1

# 15 channel

# feature model learned

conv = conv[:,15,:,:].reshape(shape)

_ = visualize.NCHW.ShowMultiple(conv)3.5 LeNet 模型测试

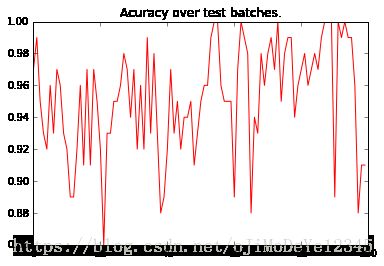

```python
# test set
workspace.RunNetOnce(test_model.param_init_net)
workspace.CreateNet(test_model.net, overwrite=True)
# batch_size is 100, so 100 iterations cover the 10,000 MNIST test images
test_accuracy = np.zeros(100)
for i in range(100):
    workspace.RunNet(test_model.net.Proto().name)
    test_accuracy[i] = workspace.FetchBlob('accuracy')
# plot the test accuracy
plt.plot(test_accuracy, 'r')
plt.title('Accuracy over test batches.')
print('test_accuracy: %f' % test_accuracy.mean())
```

3.6 Deploying the LeNet Model

Saving the model:

```python
# export the model to a file; the model's inputs/outputs must be specified by hand
pe_meta = pe.PredictorExportMeta(
    predict_net=deploy_model.net.Proto(),
    parameters=[str(b) for b in deploy_model.params],
    inputs=["data"],
    outputs=["softmax"],
)
# save the model in minidb format
pe.save_to_db("minidb", os.path.join(root_folder, "mnist_model.minidb"), pe_meta)
print("The deploy model is saved to: " + root_folder + "/mnist_model.minidb")
```

Loading and deploying the model:

```python
# use the last batch of input data as the input for prediction
blob = workspace.FetchBlob("data")
plt.figure()
_ = visualize.NCHW.ShowMultiple(blob)
# reset the workspace so the model is really loaded from the file
workspace.ResetWorkspace(root_folder)
# verify that the workspace is empty
print("The blobs in the workspace after reset: {}".format(workspace.Blobs()))
# load the trained model
predict_net = pe.prepare_prediction_net(os.path.join(root_folder, "mnist_model.minidb"), "minidb")
# check the blobs to confirm the model was loaded
print("The blobs in the workspace after loading the model: {}".format(workspace.Blobs()))
# feed the input data into the workspace
workspace.FeedBlob("data", blob)
# run the prediction
workspace.RunNetOnce(predict_net)
softmax = workspace.FetchBlob("softmax")
# plot the prediction
plt.figure()
_ = plt.plot(softmax[0], 'ro')
plt.title('Prediction for the first image')
```
