TensorFlow Neural Networks (3): Neural Network Optimization

I. Activation Functions

II. Loss Functions

① MSE (mean squared error)

loss_mse = tf.reduce_mean(tf.square(y_ - y))

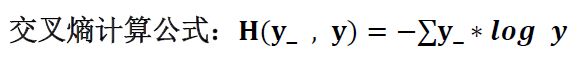
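To make the formula concrete, here is a minimal plain-Python sketch of the same computation that `tf.reduce_mean(tf.square(y_ - y))` performs. The function name and the sample values are made up for illustration and are not from the original notes.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between labels and predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Illustrative values only (an assumption, not data from the post).
labels = [1.0, 0.0, 2.0]
predictions = [0.5, 0.0, 3.0]
print(mean_squared_error(labels, predictions))
```

Squaring makes every error positive and penalizes large errors more heavily than small ones.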

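② CE (cross entropy)

Cross entropy measures the distance between two probability distributions.

The larger the cross entropy, the farther apart the two distributions are and the more they differ.

The smaller the cross entropy, the closer the two distributions are and the more alike they are.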

ce = -tf.reduce_mean(y_ * tf.log(tf.clip_by_value(y, 1e-12, 1.0)))

# Values of y below 1e-12 are clipped to 1e-12 and values above 1.0 are clipped to 1.0, so tf.log never receives 0

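In practice, the forward-pass output has to form a probability distribution: each of the n class outputs y1 to yn (the likelihood of each class) must lie in [0, 1], and the n outputs must sum to 1. The softmax function is introduced for this purpose. Passing the n outputs through softmax yields a classification result that is a valid probability distribution: softmax(y_i) = exp(y_i) / (exp(y_1) + ... + exp(y_n)).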
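The softmax step described above can be sketched in plain Python. This is only an illustration under my own naming; subtracting the maximum score is a common numerical-stability trick that does not change the result, and the input scores are invented.

```python
import math


def softmax(scores):
    """Map raw scores to values in [0, 1] that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative scores for a 3-class problem (an assumption).
probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
```

The largest score always receives the largest probability, so softmax keeps the ranking of the classes while normalizing the outputs.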

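In TensorFlow, the model output is usually passed through softmax to obtain the class probability distribution, which is then compared with the ground-truth labels to compute the cross entropy used as the loss:

ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits = y, labels = tf.argmax(y_, 1))

cem = tf.reduce_mean(ce)

# In practice, these two lines can directly replace the single-line cross-entropy computation above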
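As a rough illustration of how the two approaches relate, the sketch below computes cross entropy for a single sample in plain Python, once from clipped probabilities and once directly from raw scores with an integer class label. It uses the standard definition, the negative sum of label times log-probability. The function names are hypothetical, and the per-sample sum is my simplification: averaging over every element, as a `tf.reduce_mean` over the whole tensor would, differs from it by a constant factor.

```python
import math


def clipped_cross_entropy(y_true, y_pred, low=1e-12, high=1.0):
    """-sum(y_true * log(clip(y_pred))) for one sample."""
    return -sum(t * math.log(min(max(p, low), high))
                for t, p in zip(y_true, y_pred))


def sparse_softmax_cross_entropy(scores, label_index):
    """Cross entropy of softmax(scores) against one integer class label."""
    shift = max(scores)
    log_sum = math.log(sum(math.exp(s - shift) for s in scores))
    # log(softmax(scores)[label_index]) = (score - shift) - log_sum
    return -((scores[label_index] - shift) - log_sum)


# Illustrative raw scores; the true class is index 1 (assumptions).
print(sparse_softmax_cross_entropy([2.0, 1.0, 0.1], 1))
```

Working from the raw scores avoids taking the log of a probability that has already underflowed to 0, which is why the clipping step is not needed in the second function.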

③ Custom loss

# e.g. a custom piecewise loss

# tf.where takes the first branch where tf.greater(y, y_) is true and the second branch otherwise

loss_selfdefine = tf.reduce_sum(tf.where(tf.greater(y, y_), COST * (y - y_), PROFIT * (y_ - y)))
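The piecewise loss above can be read as: over-predicting costs `COST` per unit, under-predicting costs `PROFIT` per unit. A minimal plain-Python sketch of that rule follows; the function name and the numbers are illustrative assumptions.

```python
def piecewise_loss(y_true, y_pred, cost, profit):
    """Sum of cost * (over-prediction) or profit * (under-prediction)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        if p > t:
            total += cost * (p - t)    # predicted too much
        else:
            total += profit * (t - p)  # predicted too little
    return total


# Illustrative values: under-predicting is nine times as expensive.
print(piecewise_loss([10.0], [12.0], cost=1.0, profit=9.0))
print(piecewise_loss([10.0], [8.0], cost=1.0, profit=9.0))
```

With asymmetric weights like these, a model trained on this loss would tend to lean toward the cheaper kind of error.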

III. Learning Rate

① Fixed learning rate

Loss function:

loss = (w + 1)^2

With the learning rate set to 0.2, training eventually reaches w = -1, where the loss is 0.

# coding: utf-8
import tensorflow as tf

# initialize w to 5
w = tf.Variable(tf.constant(5, dtype = tf.float32))

# loss function
loss = tf.square(w + 1)

# define the backpropagation method
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

# train for 40 steps, printing w and the loss after each step
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    for i in range(40):
        sess.run(train_step)
        w_val = sess.run(w)
        loss_val = sess.run(loss)
        print("after %s steps: w is %f, loss is %f." % (i, w_val, loss_val))

- If the learning rate is too large, w oscillates and does not converge; if it is too small, convergence is too slow.
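The effect of the learning rate can be checked without TensorFlow. The sketch below applies plain gradient descent to loss = (w + 1)^2 by hand, starting from w = 5 and running 40 steps as in the script above. The rates 1.0 and 0.0001 are values I picked to show the two failure modes; only 0.2 comes from the notes.

```python
def gradient_descent(learning_rate, steps=40, w=5.0):
    """Minimize loss = (w + 1)^2 by hand and return the final w."""
    for _ in range(steps):
        grad = 2.0 * (w + 1.0)  # derivative of (w + 1)^2 with respect to w
        w -= learning_rate * grad
    return w


for lr in (0.2, 1.0, 0.0001):
    w_final = gradient_descent(lr)
    print("lr=%g: w=%f, loss=%f" % (lr, w_final, (w_final + 1.0) ** 2))
```

With a rate of 1.0 each update maps w to -w - 2, so w jumps between 5 and -7 and never settles; with 0.0001 each step shrinks w + 1 by only 0.02%, so w has barely moved after 40 steps.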

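② Exponentially decaying learning rate

global_step counts how many batches have been run so far.

LEARNING_RATE_STEP is the number of batches between learning-rate updates. For example, with 500 training samples and a BATCH_SIZE of 5, one pass over the data takes 100 batches, so the learning rate is updated every 100 batches, that is, each time all the data has been fed and the next pass over the 500 samples begins.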

# coding: utf-8
import tensorflow as tf

LEARNING_RATE_BASE = 0.1    # initial learning rate
LEARNING_RATE_DECAY = 0.99  # learning-rate decay rate
LEARNING_RATE_STEP = 1      # number of batches fed before the learning rate is updated

# counter for the number of batches run so far; starts at 0 and is not trainable
global_step = tf.Variable(0, trainable = False)

# define the exponentially decaying learning rate
learning_rate = tf.train.exponential_decay(LEARNING_RATE_BASE, global_step, LEARNING_RATE_STEP, LEARNING_RATE_DECAY, staircase = True)

# parameter to optimize, initial value 5
w = tf.Variable(tf.constant(5, dtype = tf.float32))

# loss function
loss = tf.square(w + 1)

# backpropagation method; passing global_step makes minimize() increment it on every step
train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step = global_step)

# create the session and train for 40 steps
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    for i in range(40):
        sess.run(train_step)
        learning_rate_val = sess.run(learning_rate)
        global_step_val = sess.run(global_step)
        w_val = sess.run(w)
        loss_val = sess.run(loss)
        print("after %s steps: global_step is %f, w is %f, lr is %f, loss is %f" % (i, global_step_val, w_val, learning_rate_val, loss_val))
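For reference, the schedule that `tf.train.exponential_decay` is documented to produce is base rate times decay rate raised to (global_step / decay_steps), with integer division when `staircase = True`. The plain-Python function below is my own re-implementation of that formula for illustration, not TensorFlow code.

```python
def exponential_decay(base_rate, global_step, decay_steps, decay_rate,
                      staircase=True):
    """Learning rate after global_step batches under exponential decay."""
    if staircase:
        exponent = global_step // decay_steps  # rate drops in discrete steps
    else:
        exponent = global_step / decay_steps   # rate decays smoothly
    return base_rate * decay_rate ** exponent


# Same constants as the script above: base 0.1, decay 0.99, step 1.
for step in (0, 1, 2, 40):
    print(step, exponential_decay(0.1, step, 1, 0.99))
```

With `staircase = True` and a larger LEARNING_RATE_STEP, the rate stays constant within each pass over the data and drops once per pass.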

IV. Moving Average (Shadow Values)

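############# warm up ! #############

'''
# Instantiate the moving-average class with the decay rate and the current step count
ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)

# tf.trainable_variables() collects all trainable parameters into a list,
# so the next line computes a moving average for every parameter
ema_op = ema.apply(tf.trainable_variables())

# Run the moving-average update together with the training step,
# merging both into a single training node
with tf.control_dependencies([train_step, ema_op]):
    train_op = tf.no_op(name = 'train')

# Look up the moving average of a given parameter
ema.average(parameter)
'''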

############ full coding! ##########

# coding: utf-8
import tensorflow as tf
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide warnings

# step 1: define the variables and the moving-average class

# parameter w1 to optimize, initial value 0.0
w1 = tf.Variable(0, dtype = tf.float32)

# number of training steps so far, initial value 0, not trainable
global_step = tf.Variable(0, trainable = False)

# moving-average decay rate
MOVING_AVERAGE_DECAY = 0.99

# instantiate the moving-average class
ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)

# apply the moving average to the list of all trainable parameters to get the update node
ema_op = ema.apply(tf.trainable_variables())

# step 2: watch how the values change across iterations
with tf.Session() as sess:
    # initialize all variables
    init_op = tf.global_variables_initializer()
    sess.run(init_op)

    # print the current w1 and its moving average
    print(sess.run([w1, ema.average(w1)]))

    # set w1 to 1
    sess.run(tf.assign(w1, 1))
    # run the moving-average update node
    sess.run(ema_op)
    print(sess.run([w1, ema.average(w1)]))

    # update global_step and w1 to simulate w1 becoming 10 after 100 iterations
    sess.run(tf.assign(global_step, 100))
    sess.run(tf.assign(w1, 10))
    sess.run(ema_op)
    print(sess.run([w1, ema.average(w1)]))

    # run the moving-average update six more times; the shadow value keeps moving toward w1
    for _ in range(6):
        sess.run(ema_op)
        print(sess.run([w1, ema.average(w1)]))

- Output:

λ python movingAverage.py

D:\anaconda3-5.2.0\anaconda3-5.2.0\lib\site-packages\h5py\__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

[0.0, 0.0]

[1.0, 0.9]

[10.0, 1.6445453]

[10.0, 2.3281732]

[10.0, 2.955868]

[10.0, 3.532206]

[10.0, 4.061389]

[10.0, 4.547275]

[10.0, 4.9934072]

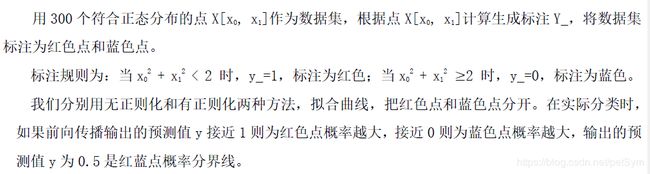
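The numbers in the log above can be reproduced by hand. As documented for `tf.train.ExponentialMovingAverage` when a step count is supplied, the effective decay is min(decay, (1 + num_updates) / (10 + num_updates)), and the shadow value becomes decay * shadow + (1 - decay) * variable. The plain-Python sketch below follows that rule; the function name is mine, and small differences in the last digits are expected because TensorFlow works in float32.

```python
def ema_update(shadow, value, base_decay, num_updates):
    """One moving-average update with the step-dependent decay."""
    decay = min(base_decay, (1.0 + num_updates) / (10.0 + num_updates))
    return decay * shadow + (1.0 - decay) * value


shadow = 0.0
shadow = ema_update(shadow, 1.0, 0.99, 0)   # w1 = 1, global_step = 0
print(shadow)
for _ in range(3):                          # w1 = 10, global_step = 100
    shadow = ema_update(shadow, 10.0, 0.99, 100)
    print(shadow)
```

Early in training the step-dependent term keeps the decay small, so the shadow value tracks the parameter quickly; once the step count is large the decay settles at MOVING_AVERAGE_DECAY and the shadow value changes slowly.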

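V. Regularization

- If a neural network model achieves high accuracy on the training set but low accuracy when predicting or classifying new data, the model generalizes poorly and is overfitting.

- Regularization: a measure of model complexity is added to the loss function, that is, a weighted penalty is placed on every parameter w, which dampens the noise in the training data and reduces overfitting.

- With regularization, the loss function loss becomes the sum of two terms: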

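loss = loss(y, y_) + REGULARIZER * loss(w)

// 1. The first term is the gap between the prediction y and the ground truth y_
//    (the loss used without regularization), e.g. cross entropy or mean squared error.

// 2. The second term is the regularization term, computed with either L1 or L2 regularization.
//    REGULARIZER is the regularization weight, a hyperparameter that sets
//    the share of the parameters w in the total loss.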

- Two ways to compute the regularization term:

loss(w) = tf.contrib.layers.l1_regularizer(REGULARIZER)(w)

loss(w) = tf.contrib.layers.l2_regularizer(REGULARIZER)(w)
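As a rough sketch of what the two penalties compute: L1 sums the absolute values of the weights and L2 sums their squares, each multiplied by the regularization weight. The plain-Python versions below are illustrations under my own names. The factor of 1/2 in the L2 version reflects my understanding that the TensorFlow 1.x regularizer is built on `tf.nn.l2_loss`; treat that detail as an assumption.

```python
def l1_penalty(weights, scale):
    """scale * sum(|w|): tends to push individual weights to exactly 0."""
    return scale * sum(abs(w) for w in weights)


def l2_penalty(weights, scale):
    """scale * sum(w^2) / 2: shrinks large weights more than small ones."""
    return scale * sum(w * w for w in weights) / 2.0


# Illustrative weights and regularization weight (assumptions).
weights = [1.0, -2.0]
print(l1_penalty(weights, 0.01))
print(l2_penalty(weights, 0.01))
```

Both calls already multiply by the scale passed to the regularizer, so the returned value can be added to the data loss directly.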

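################# warm up ! ######################

# add the regularization term of each w to the 'losses' collection
tf.add_to_collection('losses', tf.contrib.layers.l2_regularizer(REGULARIZER)(w))

# sum all values in the 'losses' collection, then add the cross entropy
loss = cem + tf.add_n(tf.get_collection('losses'))

# install matplotlib, a Python visualization module (run this one in a shell)
pip install matplotlib

# plot the points (x, y) in the specified color
plt.scatter(x, y, c = " ")

# show the figure
plt.show()

# build a grid of coordinate points over the given region, using the step as the resolution
xx, yy = np.mgrid[startx: endx: stepx, starty: endy: stepy]

# collect all grid points in the region into a two-column matrix
grid = np.c_[xx.ravel(), yy.ravel()]

# given the x and y coordinates and the height of each point, draw contour lines at the heights listed in levels
plt.contour(x, y, height, levels = [])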

- Prediction results without regularization

################ complete coding! #################

# coding: utf-8
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide warnings

BATCH_SIZE = 30
seed = 2

######## step 1: generate and preprocess the dataset

# random number generator based on seed
rdm = np.random.RandomState(seed)

# 300 x 2 matrix of random numbers: 300 coordinate points used as the input dataset
X = rdm.randn(300, 2)

# label the data by hand: 1 if x0^2 + x1^2 < 2, otherwise 0
Y_ = [int(x0*x0 + x1*x1 < 2) for (x0, x1) in X]

# red where Y_ is 1, blue otherwise
Y_c = [['red' if y else 'blue'] for y in Y_]

# reshape the data and labels: X to n rows x 2 columns, Y_ to n rows x 1 column (-1 means "infer n")
X = np.vstack(X).reshape(-1, 2)
Y_ = np.vstack(Y_).reshape(-1, 1)

print("X:\n")
print(X)
print("Y_:\n")
print(Y_)
print("Y_c:\n")
print(Y_c)

# visualize the dataset, one colored point per sample
plt.scatter(X[:, 0], X[:, 1], c = np.squeeze(Y_c))
plt.show()

########### step 2: forward propagation

# create a weight variable and add its L2 regularization term to the 'losses' collection
def get_weight(shape, regularizer):
    w = tf.Variable(tf.random_normal(shape), dtype = tf.float32)
    tf.add_to_collection('losses', tf.contrib.layers.l2_regularizer(regularizer)(w))
    return w

# create a bias variable
def get_bias(shape):
    b = tf.Variable(tf.constant(0.01, shape = shape))
    return b

# placeholders for the inputs and the labels
x = tf.placeholder(tf.float32, shape = (None, 2))
y_ = tf.placeholder(tf.float32, shape = (None, 1))

# network graph
w1 = get_weight([2, 11], 0.01)
b1 = get_bias([11])
y1 = tf.nn.relu(tf.matmul(x, w1) + b1)

w2 = get_weight([11, 1], 0.01)
b2 = get_bias([1])
y = tf.matmul(y1, w2) + b2  # the output layer has no activation function

# define the loss function
loss_mse = tf.reduce_mean(tf.square(y - y_))

# total loss: mean squared error plus the regularization terms of the weights
loss_total = loss_mse + tf.add_n(tf.get_collection('losses'))

########### step 3: backpropagation, without regularization

train_step = tf.train.AdamOptimizer(0.0001).minimize(loss_mse)

with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    STEPS = 40000
    for i in range(STEPS):
        start = (i * BATCH_SIZE) % 300
        end = start + BATCH_SIZE
        sess.run(train_step, feed_dict = {x: X[start: end], y_: Y_[start: end]})
        if i % 2000 == 0:
            loss_mse_val = sess.run(loss_mse, feed_dict = {x: X, y_: Y_})
            print("after %d steps, loss of mse is %f" % (i, loss_mse_val))

    # generate a 2-D grid of coordinate points
    xx, yy = np.mgrid[-3:3:.01, -3:3:.01]
    # flatten xx and yy and combine them into a two-column matrix: the set of grid points
    grid = np.c_[xx.ravel(), yy.ravel()]
    # feed the grid points to the network and store its outputs in probs;
    # a two-column input is what this network structure expects
    probs = sess.run(y, feed_dict = {x: grid})
    # reshape probs to the same shape as xx
    probs = probs.reshape(xx.shape)
    print("w1:\n", sess.run(w1))
    print("b1:\n", sess.run(b1))
    print("w2:\n", sess.run(w2))
    print("b2:\n", sess.run(b2))

# visualization
plt.scatter(X[:, 0], X[:, 1], c = np.squeeze(Y_c))
# draw the contour line through all points (xx, yy) where probs equals 0.5
plt.contour(xx, yy, probs, levels = [.5])
plt.show()

########### step 3 v2: backpropagation, with regularization

train_step = tf.train.AdamOptimizer(0.0001).minimize(loss_total)

with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    STEPS = 40000
    for i in range(STEPS):
        start = (i * BATCH_SIZE) % 300
        end = start + BATCH_SIZE
        sess.run(train_step, feed_dict = {x: X[start:end], y_: Y_[start:end]})
        if i % 2000 == 0:
            loss_v = sess.run(loss_total, feed_dict = {x: X, y_: Y_})
            print("after %d steps, loss for total is %f" % (i, loss_v))

    xx, yy = np.mgrid[-3:3:.01, -3:3:.01]
    grid = np.c_[xx.ravel(), yy.ravel()]
    probs = sess.run(y, feed_dict = {x: grid})
    probs = probs.reshape(xx.shape)
    print("w1:\n", sess.run(w1))
    print("b1:\n", sess.run(b1))
    print("w2:\n", sess.run(w2))
    print("b2:\n", sess.run(b2))

# visualization
plt.scatter(X[:, 0], X[:, 1], c = np.squeeze(Y_c))
# draw the contour line through all points (xx, yy) where probs equals 0.5
plt.contour(xx, yy, probs, levels = [.5])
plt.show()
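One detail of the training loops above that is easy to miss is the batch indexing: `start = (i * BATCH_SIZE) % 300` walks through the 300 samples in windows of 30 and wraps around after every 10 steps. A small plain-Python sketch of that indexing follows; the helper name is made up for illustration.

```python
def batch_window(step, batch_size=30, dataset_size=300):
    """Return the (start, end) slice bounds used at a given training step."""
    start = (step * batch_size) % dataset_size
    end = start + batch_size
    return start, end


for step in (0, 1, 9, 10):
    print(step, batch_window(step))
```

Because 300 is an exact multiple of 30, every window is full; with a dataset size that is not a multiple of the batch size, the last slice of a pass would simply be shorter.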