TensorFlow Neural Networks (6): Building a Dataset for a Specific Application

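[Acknowledgment] The content comes from Lecture 6 of the MOOC course "AI Practice" (人工智能实践).

It also draws heavily on the article https://www.jianshu.com/p/766a2af5eb6a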

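I. Dataset generation and reading: mnist_generateds.py

1. Basic functions and usage

tfrecords file: a binary file. Images and labels can first be packed into a file of this format.

Reading data from a tfrecords file can improve memory utilization.

tf.train.Example: the protocol buffer message used to store one training sample.

The features of a training sample are expressed as key-value pairs; a value can be a list of byte strings (BytesList), a list of floats (FloatList), or a list of integers (Int64List).

SerializeToString(): serializes the Example into a byte string for storage.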

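2. Generating the tfrecords file

- Create a writer

    writer = tf.python_io.TFRecordWriter(tfRecordName)

- Loop over every image and its label with a for loop

Wrap each image and its label in an example. In tf.train.Features the features are given as a dictionary, and the keys used here must match the keys used later when parsing exactly (a stray space inside a key makes it a different key):

    example = tf.train.Example(features=tf.train.Features(feature={
        'img_raw': tf.train.Feature(bytes_list=tf.train.BytesList(value=[img_raw])),
        'label': tf.train.Feature(int64_list=tf.train.Int64List(value=labels))
    }))

- Serialize the example and write it out

    writer.write(example.SerializeToString())

- Close the writer

    writer.close()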
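The label handling inside that loop can be sketched in plain Python. This is only a minimal illustration, assuming each line of the label file has the form "<image name> <digit>" as described in the complete listing; the helper name parse_label_line and the sample file name are hypothetical.

```python
def parse_label_line(line, num_classes=10):
    """Split '<image name> <digit>' and build a one-hot label list."""
    name, digit = line.split()
    one_hot = [0] * num_classes
    one_hot[int(digit)] = 1   # mark the position of the true class
    return name, one_hot


# Hypothetical label line, not taken from the real label file
name, labels = parse_label_line("0_5.jpg 5\n")
print(name, labels)
```

The resulting list is what would be passed as value=labels to tf.train.Int64List.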

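3. Parsing the tfrecords file to get images and labels

- Create the filename queue

    # 2018.12.02 correction: debugging backward.py kept failing, and I traced it to this call lacking the shuffle=True argument.
    # shuffle: boolean. If true, the filenames are shuffled randomly within each epoch.
    # (shuffle=True is also the documented default of this function in TensorFlow 1.x, so writing it out mainly makes the intent explicit.)
    # This was also one step in getting rid of the persistent "out of range" error:
    # backward.py calls read_tfRecord in mnist_generateds.py to batch-read the tfrecords file, and it never read anything.
    filename_queue = tf.train.string_input_producer([tfRecord_path], shuffle=True)

- Create a reader

    reader = tf.TFRecordReader()

- Each sample that is read is stored in serialized_example and then deserialized

    _, serialized_example = reader.read(filename_queue)
    features = tf.parse_single_example(serialized_example, features={
        'img_raw': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([10], tf.int64)})

- Convert the image and label into the form the model expects as input

    img = tf.decode_raw(features['img_raw'], tf.uint8)
    # Set the shape to 1 row by 784 columns
    img.set_shape([784])
    # Convert to floats between 0 and 1
    img = tf.cast(img, tf.float32) * (1./255)
    # Convert the label elements to floats as well
    label = tf.cast(features['label'], tf.float32)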
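To make the last step concrete, here is a rough plain-Python sketch of what decoding the raw bytes and scaling them to the 0-1 range amounts to. It does not call TensorFlow; the function name decode_and_normalize and the fake input bytes are assumptions made purely for illustration.

```python
def decode_and_normalize(img_raw, expected_len=784):
    """Mimic decoding uint8 bytes and scaling each pixel into [0, 1]."""
    pixels = list(img_raw)  # iterating over bytes yields ints in 0..255
    if len(pixels) != expected_len:
        raise ValueError("expected %d bytes, got %d" % (expected_len, len(pixels)))
    return [p * (1.0 / 255) for p in pixels]


# Fake 784-byte "image" alternating black and white; not real MNIST data
raw = bytes([0, 255] * 392)
img = decode_and_normalize(raw)
```

The length check plays the role of set_shape([784]): an image that was not stored as 28 x 28 single-channel bytes would be caught here.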

The complete mnist_generateds.py

    # mnist_generateds.py
    # coding: utf-8
    import tensorflow as tf
    import numpy as np
    from PIL import Image
    import os

    image_train_path = './mnist_data_jpg/mnist_train_jpg_60000/'
    label_train_path = './mnist_data_jpg/mnist_train_jpg_60000.txt'
    tfRecord_train = './data/mnist_train.tfrecords'
    image_test_path = './mnist_data_jpg/mnist_test_jpg_10000/'
    label_test_path = './mnist_data_jpg/mnist_test_jpg_10000.txt'
    tfRecord_test = './data/mnist_test.tfrecords'
    data_path = './data'
    resize_height = 28
    resize_width = 28

    def write_tfRecord(tfRecordName, image_path, label_path):
        # Create the writer
        writer = tf.python_io.TFRecordWriter(tfRecordName)
        # Counter of processed images
        num_pic = 0
        # Open the label txt file for reading; each line is "<image name> <label>"
        f = open(label_path, 'r')
        # Read the whole file
        contents = f.readlines()
        f.close()
        # Iterate over the lines
        for content in contents:
            # Split the line on whitespace into a list
            value = content.split()
            # Image path = image folder path + image name
            img_path = image_path + value[0]
            # Open the image
            img = Image.open(img_path)
            # Convert it to raw bytes
            img_raw = img.tobytes()
            # Build the one-hot label
            labels = [0] * 10
            labels[int(value[1])] = 1
            # Wrap the image and label in an example
            example = tf.train.Example(features=tf.train.Features(feature={
                'img_raw': tf.train.Feature(bytes_list=tf.train.BytesList(value=[img_raw])),
                'label': tf.train.Feature(int64_list=tf.train.Int64List(value=labels))
            }))
            # Serialize the example and write it
            writer.write(example.SerializeToString())
            num_pic += 1
            # Print progress
            print("the number of picture:", num_pic)
        writer.close()
        print("write tfrecord successful")

    def generate_tfRecord():
        # Check whether the output directory exists
        isExists = os.path.exists(data_path)
        if not isExists:
            os.makedirs(data_path)
            print("The directory was created successfully")
        else:
            print("directory already exists")
        # Write images and labels to the tfrecords files
        write_tfRecord(tfRecord_train, image_train_path, label_train_path)
        write_tfRecord(tfRecord_test, image_test_path, label_test_path)

    # Code for reading the tfrecords file
    def read_tfRecord(tfRecord_path):
        # filename_queue = tf.train.string_input_producer([tfRecord_path])  # 2018.12.02
        filename_queue = tf.train.string_input_producer([tfRecord_path], shuffle=True)
        reader = tf.TFRecordReader()
        # Each sample that is read is stored in serialized_example, then deserialized
        _, serialized_example = reader.read(filename_queue)
        features = tf.parse_single_example(serialized_example,
                                           features={
                                               'label': tf.FixedLenFeature([10], tf.int64),
                                               'img_raw': tf.FixedLenFeature([], tf.string)
                                           })
        img = tf.decode_raw(features['img_raw'], tf.uint8)
        # Set the shape to 1 row by 784 columns
        img.set_shape([784])
        # Convert to floats between 0 and 1
        img = tf.cast(img, tf.float32) * (1. / 255)
        # Convert the label elements to floats as well
        label = tf.cast(features['label'], tf.float32)
        return img, label

    def get_tfrecord(num, isTrain=True):
        if isTrain:
            tfRecord_path = tfRecord_train
        else:
            tfRecord_path = tfRecord_test
        img, label = read_tfRecord(tfRecord_path)
        # Take capacity samples in order from the full set, shuffle them,
        # and output batch_size samples each time.
        # The whole process uses two threads.
        img_batch, label_batch = tf.train.shuffle_batch([img, label],
                                                        batch_size=num,
                                                        num_threads=2,
                                                        capacity=1000,
                                                        min_after_dequeue=700)
        # Return the randomly drawn batch of batch_size samples
        return img_batch, label_batch

    def main():
        generate_tfRecord()

    if __name__ == '__main__':
        main()

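II. Backpropagation file: changing the interface that fetches images and labels (mnist_backward.py)

- Key operation: place the batch-fetching operation between starting and stopping the thread coordinator, which makes fetching batches of images and labels more efficient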
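The start, fetch, stop pattern can be illustrated without TensorFlow. The following is only an analogy built on the Python standard library, not the TensorFlow API: a threading.Event stands in for the coordinator, a background thread stands in for the queue runners, and the names producer and run_steps are invented for this sketch.

```python
import queue
import threading


def producer(batch_queue, stop_event):
    """Background thread: keep the queue filled until asked to stop."""
    i = 0
    while not stop_event.is_set():
        try:
            batch_queue.put(i, timeout=0.05)
            i += 1
        except queue.Full:
            continue  # queue is full; loop around and re-check the stop flag


def run_steps(steps):
    batch_queue = queue.Queue(maxsize=4)
    stop_event = threading.Event()              # plays the role of the coordinator
    worker = threading.Thread(target=producer, args=(batch_queue, stop_event))
    worker.start()                              # analogous to starting the queue runners
    fetched = []
    try:
        for _ in range(steps):
            fetched.append(batch_queue.get())   # analogous to fetching one batch
    finally:
        stop_event.set()                        # analogous to coord.request_stop()
        worker.join()                           # analogous to coord.join(threads)
    return fetched


result = run_steps(5)
```

Wrapping the loop in try/finally is a design choice of this sketch: the background thread is stopped even if a training step raises.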

[Appendix: the forward propagation py file]

    # mnist_forward.py
    # coding: utf-8
    import tensorflow as tf

    INPUT_NODE = 784
    OUTPUT_NODE = 10
    LAYER1_NODE = 500

    # Initialize w and add its regularization loss to the total loss
    def get_weight(shape, regularizer):
        w = tf.Variable(tf.truncated_normal(shape, stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection('losses', tf.contrib.layers.l2_regularizer(regularizer)(w))
        return w

    # Initialize b
    def get_bias(shape):
        b = tf.Variable(tf.zeros(shape))
        return b

    def forward(x, regularizer):
        w1 = get_weight([INPUT_NODE, LAYER1_NODE], regularizer)
        b1 = get_bias([LAYER1_NODE])
        y1 = tf.nn.relu(tf.matmul(x, w1) + b1)
        w2 = get_weight([LAYER1_NODE, OUTPUT_NODE], regularizer)
        b2 = get_bias([OUTPUT_NODE])
        y = tf.matmul(y1, w2) + b2  # the output layer has no activation function
        return y

    # mnist_backward.py
    # coding: utf-8
    import tensorflow as tf
    # The input_data module is no longer imported
    #-1 from tensorflow.examples.tutorials.mnist import input_data
    import mnist_forward
    import os
    import mnist_generateds  #*1

    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide warnings

    # Hyperparameters
    BATCH_SIZE = 200
    LEARNING_RATE_BASE = 0.1     # initial learning rate
    LEARNING_RATE_DECAY = 0.99   # learning rate decay rate
    REGULARIZER = 0.0001         # regularization coefficient
    STEPS = 50000                # number of training steps
    MOVING_AVERAGE_DECAY = 0.99
    MODEL_SAVE_PATH = "./model/"
    MODEL_NAME = "mnist_model"
    train_num_examples = 60000   #*2 number of training samples, given by hand

    def backward():  #*10
        # Placeholders
        x = tf.placeholder(tf.float32, shape=(None, mnist_forward.INPUT_NODE))
        y_ = tf.placeholder(tf.float32, shape=(None, mnist_forward.OUTPUT_NODE))
        # Forward pass producing the prediction y
        y = mnist_forward.forward(x, REGULARIZER)
        # global_step counts training steps and is not trainable
        global_step = tf.Variable(0, trainable=False)

        # Loss function including regularization
        # Cross entropy
        ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
        cem = tf.reduce_mean(ce)
        # Loss with the regularization terms added
        loss = cem + tf.add_n(tf.get_collection('losses'))

        # Exponentially decaying learning rate
        learning_rate = tf.train.exponential_decay(
            LEARNING_RATE_BASE,
            global_step,
            train_num_examples / BATCH_SIZE,  #11
            LEARNING_RATE_DECAY,
            staircase=True)

        # Backpropagation method, with regularization included in the loss
        train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

        # Moving average
        ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
        ema_op = ema.apply(tf.trainable_variables())
        with tf.control_dependencies([train_step, ema_op]):
            train_op = tf.no_op(name='train')

        # Instantiate the saver
        saver = tf.train.Saver()

        # Fetch one batch of BATCH_SIZE samples from the training set
        img_batch, label_batch = mnist_generateds.get_tfrecord(BATCH_SIZE, isTrain=True)  #3

        # Training
        with tf.Session() as sess:
            # Initialize all variables
            #init_op = tf.global_variables_initializer()
            #sess.run(init_op)
            # https://blog.csdn.net/qq_34638161/article/details/80752596
            sess.run(tf.local_variables_initializer())
            sess.run(tf.global_variables_initializer())
            #sess.run(tf.group(tf.local_variables_initializer(), tf.global_variables_initializer()))

            # Resume training from a checkpoint (breakpoint_continue.py)
            ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
            if ckpt and ckpt.model_checkpoint_path:
                # Restore the session, assigning the checkpoint values to w and b
                saver.restore(sess, ckpt.model_checkpoint_path)

            # Start the thread coordinator
            coord = tf.train.Coordinator()  #*4
            threads = tf.train.start_queue_runners(sess=sess, coord=coord)  #*5
            #threads = tf.train.start_queue_runners(coord = coord)

            # Training loop
            for i in range(STEPS):
                # Fetch one batch of images and labels from the training set
                xs, ys = sess.run([img_batch, label_batch])  #*6
                # Feed the network and run the training op
                _, loss_value, step = sess.run([train_op, loss, global_step], feed_dict={x: xs, y_: ys})
                if i % 1000 == 0:
                    # Print progress
                    print("After %d steps, loss on training batch is %g." % (step, loss_value))
                    # Save to the path ./MODEL_SAVE_PATH/MODEL_NAME-global_step
                    saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)

            # Stop the thread coordinator
            coord.request_stop()  #*7
            coord.join(threads)   #*8

    def main():
        #* mnist = input_data.read_data_sets('./data/', one_hot = True)
        # Call the training function defined above
        backward()  #*9

    # Run main() only when this file is executed as the main script
    if __name__ == '__main__':
        main()
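The staircase learning-rate schedule used in the listing can be worked through by hand. The sketch below reimplements the documented formula, base rate times decay rate raised to (global_step / decay_steps) with integer division when staircase is true, in plain Python; the function name exponential_decay is reused here only for readability and is not the TensorFlow function itself.

```python
def exponential_decay(base_lr, global_step, decay_steps, decay_rate, staircase=True):
    """Plain-Python version of the exponential decay formula."""
    if staircase:
        exponent = global_step // decay_steps   # drops in discrete steps
    else:
        exponent = global_step / decay_steps    # decays continuously
    return base_lr * decay_rate ** exponent


decay_steps = 60000 // 200   # train_num_examples / BATCH_SIZE = 300 steps per pass
lr_start = exponential_decay(0.1, 0, decay_steps, 0.99)
lr_before_drop = exponential_decay(0.1, 299, decay_steps, 0.99)
lr_after_drop = exponential_decay(0.1, 300, decay_steps, 0.99)
print(lr_start, lr_before_drop, lr_after_drop)
```

With these hyperparameters the learning rate stays at 0.1 for the first 300 steps and is then multiplied by 0.99 once per pass over the 60000 training samples.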

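[Supplement] Reference blog post

p.s. [2018.12.02 correction] The basic steps here are correct, but the tfrecords files generated at the end had a problem, which is why backward.py could not run correctly.

Following https://www.jianshu.com/p/766a2af5eb6a , I made the following changes:

1. Changed the working directory to an English-only path [2018.12.02: changed to an English-only path without spaces]

2. Checked mnist_generateds.py against the blog post above; the two matched [2018.12.02: in the end, a detail in this step turned out to be the problem]

3. In the same directory as mnist_generateds.py, create a folder named data. This is where the generated tfrecords files are stored; from then on, copying just these two files is enough to run on the data. (Delete whatever is left in the data folder from earlier failed runs.)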

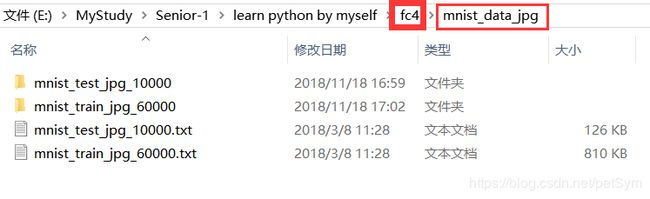

4. In the same directory, create another folder named "mnist_data_jpg"

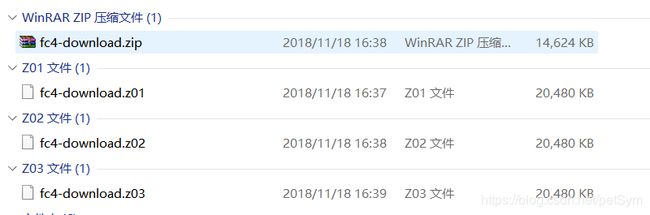

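5. Download from GitHub at https://github.com/cj0012/AI-Practice-Tensorflow-Notes . I downloaded several of the files it contains.

Then, to avoid folder-name clashes after extraction, I renamed them.

Then extract the first zip. Files named z01, z02, and so on are the parts of a split archive: the archive was cut into pieces to make it easier to distribute online (for example, some forums limit attachments to 1 MB, so an archive has to be split into two files before it can be uploaded). Extracting the first file therefore automatically produces the complete result: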

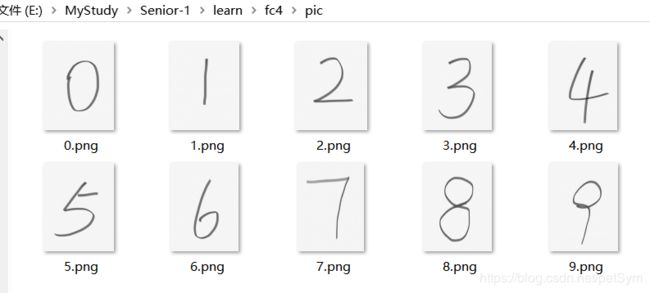

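I then copied the mnist_data_jpg folder inside it (which contains the training images, the test images, and the txt files with their labels), together with the pic folder, into my own directory [2018.12.02: for someone as inexperienced as I was, this step was very important]

The result is shown below:

6. Run mnist_generateds.py. When it finishes, two new files appear in the data folder; these are the packed datasets. The result is as follows:

[2018.12.02: it was these two files that were wrong]

The correct files should have the sizes shown below: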
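One rough way to sanity-check the generated files is to compute a lower bound on their size. The sketch below assumes every image is stored as 28 x 28 single-channel raw bytes (consistent with the 784-element shape used when parsing) and ignores labels and record overhead, so a healthy file must be somewhat larger than this bound. The function names are hypothetical.

```python
def min_pixel_payload(num_images, height=28, width=28, channels=1):
    """Raw pixel bytes that must be present in the file, ignoring all overhead."""
    return num_images * height * width * channels


def looks_too_small(actual_size, num_images):
    """True when a file cannot possibly hold every image in full."""
    return actual_size < min_pixel_payload(num_images)


train_min = min_pixel_payload(60000)   # lower bound for mnist_train.tfrecords
test_min = min_pixel_payload(10000)    # lower bound for mnist_test.tfrecords
print(train_min, test_min)
```

The actual size can be read with os.path.getsize('./data/mnist_train.tfrecords') and passed to looks_too_small; a file below the bound indicates that not all images were written in full.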

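- After obtaining the dataset: the original way of loading data was still

from tensorflow.examples.tutorials.mnist import input_data, which imports the input_data module, downloads the dataset, and reads mnist, so it needs to be changed [2018.12.02 correction: the way I put this was completely wrong]. Because we are now building the dataset ourselves, this import is no longer needed to load the data, so that line of code is removed.

Running backward.py gives the following result:

Once training has started, the test file can be run at the same time; see the next section for details: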

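III. Changing the image and label interface of the test file (mnist_test.py)

Note the places marked as modified, especially reading images and their labels from the test set. (The testing here is really validation: images with known labels are used to check the accuracy of the previously trained network model on this validation set.)

The main addition is:

    # Read images from the test set
    img_batch, label_batch = mnist_generateds.get_tfrecord(TEST_NUM, isTrain = False) #2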
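The accuracy that this validation step reports is the fraction of samples whose predicted class (the index of the largest output) equals the labeled class (the index of the 1 in the one-hot label). A minimal plain-Python sketch of that computation follows; the toy outputs and labels are invented for illustration and are not model results.

```python
def argmax(row):
    """Index of the largest element in a list."""
    return max(range(len(row)), key=row.__getitem__)


def accuracy(outputs, one_hot_labels):
    """Fraction of rows where predicted and labeled class agree."""
    correct = [argmax(y) == argmax(t) for y, t in zip(outputs, one_hot_labels)]
    return sum(correct) / len(correct)


# Toy 3-class data: the second sample is deliberately misclassified
outputs = [[0.1, 2.0, -1.0], [3.0, 0.2, 0.1], [0.0, 0.5, 0.4]]
labels = [[0, 1, 0], [0, 0, 1], [0, 1, 0]]
acc = accuracy(outputs, labels)
```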

    # coding:utf-8
    # mnist_test.py
    # For the delay between evaluation rounds
    import time
    import tensorflow as tf
    # The input_data module is no longer imported
    #-1 from tensorflow.examples.tutorials.mnist import input_data
    import mnist_forward
    import mnist_backward
    import mnist_generateds  #0
    import os

    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide warnings

    # Interval between evaluation rounds: 5 seconds
    TEST_INTERVAL_SECS = 5
    # Number of test samples, given by hand
    TEST_NUM = 10000  #1

    def test():  #9
        # Rebuild the network that was defined for training
        with tf.Graph().as_default() as g:  # nodes defined inside belong to graph g
            # Placeholders
            x = tf.placeholder(tf.float32, shape=(None, mnist_forward.INPUT_NODE))
            y_ = tf.placeholder(tf.float32, shape=(None, mnist_forward.OUTPUT_NODE))
            # Forward pass producing the prediction y
            y = mnist_forward.forward(x, None)

            # Instantiate a saver that restores moving averages.
            # When the parameters are loaded in a session, each one is
            # assigned its moving-average value.
            ema = tf.train.ExponentialMovingAverage(mnist_backward.MOVING_AVERAGE_DECAY)
            ema_restore = ema.variables_to_restore()
            saver = tf.train.Saver(ema_restore)

            # Compute the accuracy
            correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

            # Read images from the test set
            img_batch, label_batch = mnist_generateds.get_tfrecord(TEST_NUM, isTrain=False)  #2

            while True:
                with tf.Session() as sess:
                    # Load the trained model, i.e. assign the moving averages to the parameters
                    ckpt = tf.train.get_checkpoint_state(mnist_backward.MODEL_SAVE_PATH)
                    # If a checkpoint and a saved model exist at the given path
                    if ckpt and ckpt.model_checkpoint_path:
                        # Restore the session
                        saver.restore(sess, ckpt.model_checkpoint_path)
                        # Recover the step count from the checkpoint file name
                        global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]

                        # Start the thread coordinator
                        coord = tf.train.Coordinator()  #3
                        threads = tf.train.start_queue_runners(sess=sess, coord=coord)  #4
                        xs, ys = sess.run([img_batch, label_batch])  #5

                        # Compute the accuracy
                        # accuracy_score = sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels})
                        accuracy_score = sess.run(accuracy, feed_dict={x: xs, y_: ys})
                        # Print the result
                        print("After %s training steps, test accuracy = %g" % (global_step, accuracy_score))

                        # Stop the thread coordinator
                        coord.request_stop()  #6
                        coord.join(threads)   #7
                    # If there is no model
                    else:
                        print("No checkpoint file found")
                        return
                time.sleep(TEST_INTERVAL_SECS)

    def main():
        #8 mnist = input_data.read_data_sets('./data/', one_hot=True)
        # Call the test function defined above
        test()

    if __name__ == '__main__':
        main()
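The moving-average values that the saver restores in the listing above follow a simple update rule: shadow = decay * shadow + (1 - decay) * variable. As documented for TensorFlow 1.x, when a step counter is supplied (as mnist_backward.py does with global_step), the effective decay is min(decay, (1 + num_updates) / (10 + num_updates)), so the average tracks the variable quickly early in training. The sketch below is a plain-Python rendering of that rule with made-up numbers, not the library code.

```python
def ema_update(shadow, value, decay, num_updates=None):
    """One exponential-moving-average step for a single scalar."""
    if num_updates is not None:
        # Smaller effective decay early in training
        decay = min(decay, (1 + num_updates) / (10 + num_updates))
    return decay * shadow + (1 - decay) * value


# Made-up scalar "weight" that jumps from 0.0 to 1.0
first = ema_update(0.0, 1.0, 0.99, num_updates=0)   # effective decay is 0.1
plain = ema_update(0.0, 1.0, 0.99)                  # effective decay stays 0.99
print(first, plain)
```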

Running the test gives the following result:

According to the PDF of Note 6, once the validation accuracy exceeds 95%, the application mnist_app.py can be run:

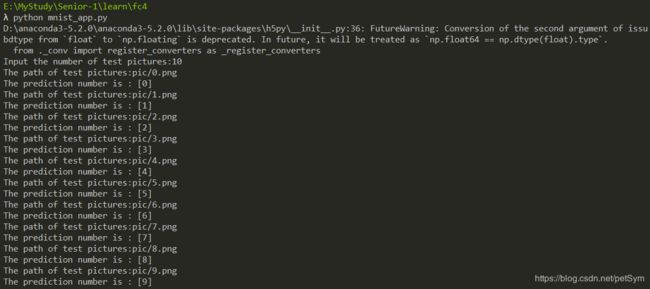

IV. Applying the network to recognize arbitrary handwritten digits

    # mnist_app.py
    # coding:utf-8
    import tensorflow as tf
    import numpy as np
    from PIL import Image
    import mnist_forward
    import mnist_backward

    def restore_model(testPicArr):
        # Rebuild the computation graph
        with tf.Graph().as_default() as tg:
            x = tf.placeholder(tf.float32, [None, mnist_forward.INPUT_NODE])
            y = mnist_forward.forward(x, None)
            preValue = tf.argmax(y, 1)  # index of the largest value in y

            # Instantiate a saver that restores moving averages
            variable_averages = tf.train.ExponentialMovingAverage(
                mnist_backward.MOVING_AVERAGE_DECAY)
            variables_to_restore = variable_averages.variables_to_restore()
            saver = tf.train.Saver(variables_to_restore)

            # Load the checkpoint inside a with block
            with tf.Session() as sess:
                ckpt = tf.train.get_checkpoint_state(mnist_backward.MODEL_SAVE_PATH)
                # If a checkpoint exists, restore its parameters into the current session
                if ckpt and ckpt.model_checkpoint_path:
                    saver.restore(sess, ckpt.model_checkpoint_path)
                    # Feed the prepared image to the network and run the prediction
                    preValue = sess.run(preValue, feed_dict={x: testPicArr})
                    return preValue
                else:
                    print("No checkpoint file found!")
                    return -1

    def pre_pic(picName):
        # Open the image
        img = Image.open(picName)
        # Resize with an anti-aliasing filter. Image.ANTIALIAS in the original code
        # is an alias of Image.LANCZOS and was removed in Pillow 10.
        reIm = img.resize((28, 28), Image.LANCZOS)
        # Convert to grayscale, then to a matrix
        im_arr = np.array(reIm.convert('L'))
        # Binarization threshold
        threshold = 50
        # The model expects white digits on a black background, so invert the colors
        for i in range(28):
            for j in range(28):
                im_arr[i][j] = 255 - im_arr[i][j]
                # Binarize to filter noise and keep the main features
                if im_arr[i][j] < threshold:
                    im_arr[i][j] = 0
                else:
                    im_arr[i][j] = 255
        # Reshape the matrix
        nm_arr = im_arr.reshape([1, 784])
        # The model expects floats, so convert the dtype first
        nm_arr = nm_arr.astype(np.float32)
        # Scale floats in 0-255 to floats in 0-1
        img_ready = np.multiply(nm_arr, 1.0/255.0)
        # Return the preprocessed image
        return img_ready

    def application():
        # Number of pictures to recognize; input() returns a str, so convert it to int
        testNum = int(input("Input the number of test pictures:"))
        for i in range(testNum):
            # Path of the picture to recognize; in Python 3, input() reads a string
            # from the console (Python 2 would use raw_input)
            testPic = input("The path of test pictures:")
            # Preprocess the picture
            testPicArr = pre_pic(testPic)
            # Feed the prepared picture to the network
            preValue = restore_model(testPicArr)
            # Print the prediction
            print("The prediction number is :", preValue)

    # The program starts from main
    def main():
        # Call the application function
        application()

    if __name__ == '__main__':
        main()
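The inversion and binarization that pre_pic performs with two nested loops can also be written as whole-array NumPy operations. The following is a sketch of that alternative, assuming a 2-D uint8 grayscale array as input; the function name invert_and_binarize and the 2 x 2 toy array are made up for illustration.

```python
import numpy as np


def invert_and_binarize(gray, threshold=50):
    """gray: 2-D uint8 array with a dark digit on a light background."""
    inverted = 255 - gray.astype(np.int32)            # light digit on dark background
    binary = np.where(inverted < threshold, 0, 255)   # suppress faint noise
    return (binary.reshape(1, -1) / 255.0).astype(np.float32)


# Toy 2x2 "image": white, black, near-white, mid-gray
sample = np.array([[255, 0], [230, 100]], dtype=np.uint8)
ready = invert_and_binarize(sample)
print(ready)
```

For a real 28 x 28 input the result has shape (1, 784), the same layout that pre_pic returns.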

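As can be seen, all the results are correct!

V. Summary [2018.12.02]

- When a TabError caused by tabs and spaces shows up in the future, it means the Python code mixes four-space indentation with tab characters. Open the code in Notepad++, then choose Select All -> Edit -> Blank Operations -> TAB to Space, which converts every tab to spaces. Reopening the file in Sublime then shows them all as dots (four dots stand for four spaces).

- The tfrecords files I generated were always a different size from the ones in https://www.jianshu.com/p/766a2af5eb6a and I did not know why. Today I ran the code from the teacher's complete zip on GitHub and it worked without problems. Only then did I compare the two tfrecords files in the teacher's data folder with mine: their sizes differed from mine but matched those in the linked article. I then compared the mnist_generateds files and found this one small spot, which had troubled me for a long time, where the teacher's py file differs from the linked article, the video, and the PDF notes:

filename_queue = tf.train.string_input_producer([tfRecord_path], shuffle=True)

(A caveat: shuffle=True is the default of this function in TensorFlow 1.x, and this call only affects reading, so the difference in file size most likely came from the writing side; this line is simply where the two scripts visibly differed.)

- This finally got the weight off my chest. Done! [2018.12.02 19:54]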
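The tab-to-space conversion from the first point of the summary can also be done in Python itself with str.expandtabs. This is a minimal sketch under the assumption that one tab should become four-column indentation; the function name tabs_to_spaces and the sample source string are hypothetical.

```python
def tabs_to_spaces(source, tabsize=4):
    """Replace every tab in the source text with spaces, line by line."""
    return "\n".join(line.expandtabs(tabsize) for line in source.split("\n"))


# Sample code whose body is indented with a tab character
mixed = "def f():\n\treturn 1\n"
fixed = tabs_to_spaces(mixed)
print(repr(fixed))
```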