Implementing the LeNet-5 Convolutional Neural Network with TensorFlow

First, a brief introduction to convolutional neural networks.

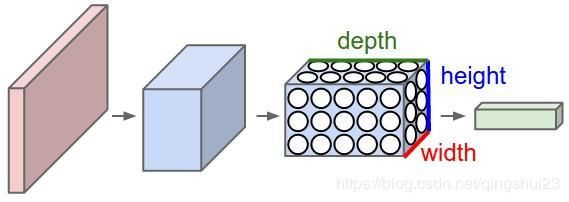

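A convolutional neural network (CNN) is a class of deep feedforward neural networks built around the convolution operation, and it is one of the representative algorithms of deep learning. Its main building blocks are convolutional layers and pooling layers. The figure above shows the basic structure of a convolutional neural network.

A convolutional neural network usually consists of the following five parts.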

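- Input layer. The input layer is the input to the whole network. In a CNN it is a pixel matrix with an additional depth dimension: the depth is 1 for a grayscale image and 3 for a color image (the three RGB channels).

- Convolutional layer. A convolutional layer produces a set of parallel feature maps by sliding different convolution kernels over the input image. At each position, the kernel and the input patch under it are multiplied element-wise and summed, which projects the information in the receptive field onto one element of the feature map. The kernel is much smaller than the input image and is applied at overlapping or adjacent positions. All elements of one feature map are computed with the same kernel, so a feature map shares a single set of weights and a single bias. The depth usually increases after a convolutional layer.

- Pooling layer. Pooling is another important concept in CNNs; it is a form of downsampling. There are several nonlinear pooling functions, of which max pooling is the most common. Pooling can be thought of as converting a high-resolution image into a lower-resolution one. Pooling layers progressively shrink the spatial size of the data, which reduces the number of parameters in later layers and the amount of computation, and this also helps control overfitting to some extent. Pooling layers are usually inserted periodically between the convolutional layers of a CNN.

- Fully connected layer. After several rounds of convolution and pooling, a CNN usually ends with one or two fully connected layers that produce the final classification result. The convolution and pooling stages can be viewed as automatic feature extraction from the image, and the fully connected layers then perform the classification on those features.

- Softmax layer. This layer is mainly used for classification problems. It converts the network outputs into a probability distribution over the classes.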
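
To make the operations in the list above concrete, here is a minimal pure-Python sketch of a single-channel convolution (without padding, stride 1), 2×2 max pooling, and softmax. The function names and the toy `image` and `kernel` values are illustrative assumptions, not part of the model built later in this article.

```python
import math


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return one feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Element-wise product of the kernel and the receptive field, then sum.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    pooled = []
    for i in range(0, len(feature_map), 2):
        row = []
        for j in range(0, len(feature_map[0]), 2):
            row.append(max(feature_map[i][j], feature_map[i][j + 1],
                           feature_map[i + 1][j], feature_map[i + 1][j + 1]))
        pooled.append(row)
    return pooled


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    largest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 5x5 single-channel image and a 2x2 kernel (illustrative values).
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 2],
    [2, 1, 0, 1, 0],
    [0, 3, 1, 0, 1],
]
kernel = [[1, 0],
          [0, 1]]

feature_map = conv2d_valid(image, kernel)  # 4x4 feature map
pooled = max_pool_2x2(feature_map)         # 2x2 after pooling: [[4.0, 5.0], [5.0, 2.0]]
probs = softmax([2.0, 1.0, 0.1])           # three class probabilities that sum to 1
print(feature_map, pooled, probs)
```

Every element of `feature_map` comes from the same `kernel`, which is the weight sharing described above, and pooling halves each spatial dimension.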

In this article we introduce the classic LeNet-5 model.

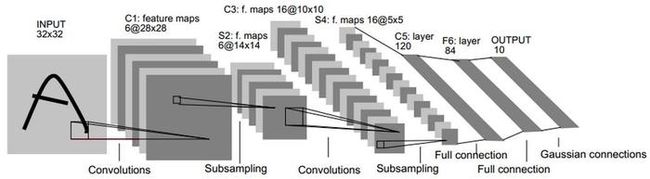

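The figure above shows the LeNet-5 model.

Using the MNIST dataset as an example, the following describes the structure and parameter settings of each layer of the LeNet-5 model. (The input size and filter depths below are those used by the code in this article; the original 1998 LeNet-5 used a 32×32 input and fewer feature maps.)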

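Layer 1: input layer

This layer holds the raw image pixels. The image size is 28×28×1, where 1 is the number of channels because the images are grayscale.

Layer 2: convolutional layer

The input of this layer is the output of the input layer, that is, the raw image. The filter size is 5×5 and the depth is 32. Zero padding is used, so the output of this layer has size 28×28×32.

Layer 3: pooling layer

The input of this layer is the output of the convolutional layer, a 28×28×32 node matrix. The filter size is 2×2 and the stride is 2 in both height and width, so the output of this layer has size 14×14×32.

Layer 4: convolutional layer

The input of this layer is the output of the previous pooling layer. The filter size is 5×5 and the depth is 64. Zero padding is used, so the output of this layer has size 14×14×64.

Layer 5: pooling layer

The input of this layer is the output of the convolutional layer, a 14×14×64 node matrix. The filter size is 2×2 and the stride is 2 in both height and width, so the output of this layer has size 7×7×64.

Layer 6: fully connected layer

The input of this layer is the output of the previous pooling layer, flattened to 7×7×64 = 3136 nodes. The number of output nodes is 512 (a manually chosen value).

Layer 7: fully connected layer

This layer has 512 input nodes and 10 output nodes (the number of classes).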
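
The layer sizes above can be checked with a little arithmetic. The sketch below is a hypothetical helper (none of these names appear in the article's code) that walks through the layers, using the rule that TensorFlow-style `'SAME'` padding gives a spatial output size of ceil(input size / stride), and also counts the trainable weights and biases.

```python
import math


def same_padding_output(size, stride):
    """Spatial output size under 'SAME' padding: ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_shapes(image_size=28, channels=1, conv_depths=(32, 64),
                 kernel=5, fc_size=512, num_labels=10):
    """Return per-layer output shapes, the flattened size, and the parameter count."""
    shapes = [("input", (image_size, image_size, channels))]
    params = 0
    size, depth = image_size, channels
    for index, out_depth in enumerate(conv_depths, start=1):
        # Convolution with stride 1 and 'SAME' padding keeps the spatial size.
        size = same_padding_output(size, 1)
        params += kernel * kernel * depth * out_depth + out_depth  # weights + biases
        depth = out_depth
        shapes.append(("conv%d" % index, (size, size, depth)))
        # 2x2 max pooling with stride 2 halves the spatial size.
        size = same_padding_output(size, 2)
        shapes.append(("pool%d" % index, (size, size, depth)))
    flat = size * size * depth
    params += flat * fc_size + fc_size
    shapes.append(("fc1", (fc_size,)))
    params += fc_size * num_labels + num_labels
    shapes.append(("fc2", (num_labels,)))
    return shapes, flat, params


shapes, flat, params = trace_shapes()
for name, shape in shapes:
    print(name, shape)
print("flattened nodes:", flat)       # 3136
print("trainable parameters:", params)  # 1663370
```

Almost all of the parameters sit in the first fully connected layer (3136 × 512 weights), which is why regularization and dropout are applied there.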

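The sections above described the structure and parameter settings of each layer of the LeNet-5 model. What follows is a TensorFlow implementation of this model for the MNIST digit recognition problem. The code is written against the TensorFlow 1.x API (`tf.placeholder`, `tf.Session`, `tf.contrib`). It uses exponential moving averages of the parameters and L2 regularization to improve the trained model, and dropout to prevent overfitting. The code is as follows.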

mnist_inference.py (forward pass)

    import tensorflow as tf

    # Input and output sizes for MNIST
    IN_NODE = 784
    OUT_NODE = 10
    IMAGE_SIZE = 28
    NUM_CHANNELS = 1
    NUM_LABELS = 10

    # Depth and filter size of the first convolutional layer
    CONV1_DEEP = 32
    CONV1_SIZE = 5

    # Depth and filter size of the second convolutional layer
    CONV2_DEEP = 64
    CONV2_SIZE = 5

    # Number of nodes in the first fully connected layer
    FC_SIZE = 512


    def inference(input_tensor, train, regularizer):
        """Build the forward pass and return the logits (before softmax)."""
        # Convolutional layer: 28*28*1 -> 28*28*32
        with tf.variable_scope('layer1-conv1'):
            conv1_w = tf.get_variable('w', [CONV1_SIZE, CONV1_SIZE, NUM_CHANNELS, CONV1_DEEP], initializer=tf.truncated_normal_initializer(stddev=0.1))
            conv1_b = tf.get_variable('b', [CONV1_DEEP], initializer=tf.constant_initializer(0.0))
            conv1 = tf.nn.conv2d(input_tensor, conv1_w, strides=[1, 1, 1, 1], padding='SAME')
            relu1 = tf.nn.relu(tf.nn.bias_add(conv1, conv1_b))
            print(relu1.shape)

        # Pooling layer: 28*28*32 -> 14*14*32
        with tf.name_scope('layer2-pool1'):
            pool1 = tf.nn.max_pool(relu1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
            print(pool1.shape)

        # Convolutional layer: 14*14*32 -> 14*14*64
        with tf.variable_scope('layer3-conv2'):
            conv2_w = tf.get_variable('w', [CONV2_SIZE, CONV2_SIZE, CONV1_DEEP, CONV2_DEEP], initializer=tf.truncated_normal_initializer(stddev=0.1))
            conv2_b = tf.get_variable('b', [CONV2_DEEP], initializer=tf.constant_initializer(0.0))
            conv2 = tf.nn.conv2d(pool1, conv2_w, strides=[1, 1, 1, 1], padding='SAME')
            relu2 = tf.nn.relu(tf.nn.bias_add(conv2, conv2_b))
            print(relu2.shape)

        # Pooling layer: 14*14*64 -> 7*7*64
        with tf.name_scope('layer4-pool2'):
            pool2 = tf.nn.max_pool(relu2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
            print(pool2.shape)

        # Flatten the 7*7*64 volume into a vector of 3136 nodes per example.
        # Using -1 lets TensorFlow infer the batch dimension.
        pool_shape = pool2.get_shape().as_list()
        nodes = pool_shape[1] * pool_shape[2] * pool_shape[3]
        reshaped = tf.reshape(pool2, [-1, nodes])

        # Fully connected layer: 3136 -> 512
        with tf.variable_scope('layer5-fc1'):
            fc1_w = tf.get_variable('w', [nodes, FC_SIZE], initializer=tf.truncated_normal_initializer(stddev=0.1))
            fc1_b = tf.get_variable('b', [FC_SIZE], initializer=tf.constant_initializer(0.1))
            # Only the fully connected weights are regularized.
            if regularizer is not None:
                tf.add_to_collection('losses', regularizer(fc1_w))
            fc1 = tf.nn.relu(tf.matmul(reshaped, fc1_w) + fc1_b)
            print(fc1.shape)
            # Dropout is applied during training only.
            if train:
                fc1 = tf.nn.dropout(fc1, 0.5)

        # Fully connected layer: 512 -> 10
        with tf.variable_scope('layer6-fc2'):
            fc2_w = tf.get_variable('w', [FC_SIZE, NUM_LABELS], initializer=tf.truncated_normal_initializer(stddev=0.1))
            fc2_b = tf.get_variable('b', [NUM_LABELS], initializer=tf.constant_initializer(0.1))
            if regularizer is not None:
                tf.add_to_collection('losses', regularizer(fc2_w))
            logits = tf.matmul(fc1, fc2_w) + fc2_b
            print(logits.shape)

        return logits
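
The forward pass relies on two regularization devices: an L2 penalty on the fully connected weights and dropout on the first fully connected layer. As a rough illustration, the pure-Python sketch below computes the same quantities by hand. It assumes the usual TensorFlow definitions, namely that `tf.contrib.layers.l2_regularizer(scale)` returns `scale * sum(w**2) / 2` and that `tf.nn.dropout` rescales the surviving units by `1 / keep_prob`. The helper names and sample values are hypothetical.

```python
import random


def l2_penalty(weights, scale):
    """L2 penalty on a flat list of weights: scale * sum(w^2) / 2."""
    return scale * sum(w * w for w in weights) / 2.0


def dropout(activations, keep_prob, rng):
    """Inverted dropout: zero each unit with probability 1 - keep_prob and
    scale the survivors by 1 / keep_prob so the expected value is unchanged."""
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


weights = [0.5, -1.0, 2.0]                   # illustrative weights
penalty = l2_penalty(weights, scale=0.0001)  # 0.0001 * 5.25 / 2

rng = random.Random(0)                       # seeded for repeatability
activations = [1.0, 2.0, 3.0, 4.0]
dropped = dropout(activations, keep_prob=0.5, rng=rng)
print(penalty, dropped)
```

Because the survivors are rescaled at training time, nothing needs to change at evaluation time: the evaluation graph simply skips the dropout step.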

mnist_train.py (training)

    import os

    import numpy as np
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    import mnist_inference

    BATCH_SIZE = 100
    LEARNING_RATE_BASE = 0.01
    LEARNING_RATE_DECAY = 0.99
    REGULARIZATION_RATE = 0.0001
    TRAINING_STEPS = 3000
    # Decay rate of the moving average (also read by the evaluation script)
    MOVING_AVERAGE_DEACY = 0.99

    # Directory and file name used when saving the model
    MODEL_SAVE_PATH = '/path/to/model/mnist_CNN/'
    MODEL_NAME = 'mnist.ckpt'


    def train(mnist):
        input_shape = [BATCH_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS]
        x = tf.placeholder(tf.float32, input_shape, name='x-input')
        y = tf.placeholder(tf.float32, [None, mnist_inference.OUT_NODE], name='y-input')

        # Forward pass with dropout and L2 regularization enabled
        regularizer = tf.contrib.layers.l2_regularizer(REGULARIZATION_RATE)
        logits = mnist_inference.inference(x, True, regularizer)
        global_step = tf.Variable(0, trainable=False)

        # Maintain moving averages of all trainable variables
        variable_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DEACY, global_step)
        variable_averages_op = variable_averages.apply(tf.trainable_variables())

        # Total loss = cross entropy + L2 penalties collected in 'losses'
        cross_entropy = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=tf.argmax(y, 1)))
        loss = cross_entropy + tf.add_n(tf.get_collection('losses'))

        # The learning rate decays by LEARNING_RATE_DECAY once per epoch
        learning_rate = tf.train.exponential_decay(LEARNING_RATE_BASE, global_step, mnist.train.num_examples / BATCH_SIZE, LEARNING_RATE_DECAY)
        train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

        # One op that runs both the gradient step and the moving-average update
        with tf.control_dependencies([train_step, variable_averages_op]):
            train_op = tf.no_op(name='train')

        saver = tf.train.Saver()
        with tf.Session() as sess:
            sess.run(tf.global_variables_initializer())
            for i in range(TRAINING_STEPS):
                xs, ys = mnist.train.next_batch(BATCH_SIZE)
                reshaped_xs = np.reshape(xs, input_shape)
                _, loss_value, step = sess.run([train_op, loss, global_step], feed_dict={x: reshaped_xs, y: ys})
                # Report the loss and save a checkpoint every 100 steps
                if i % 100 == 0:
                    print("After %d training steps, the loss on the training batch is %g" % (i, loss_value))
                    saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)


    def main(argv=None):
        print(os.path.join(MODEL_SAVE_PATH, MODEL_NAME))
        mnist = input_data.read_data_sets("/data/MNIST_data", one_hot=True)
        train(mnist)


    if __name__ == '__main__':
        tf.app.run()
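
To see what the learning-rate schedule in the training script does over the 3000 steps, here is a small pure-Python sketch of the formula behind `tf.train.exponential_decay` with its default `staircase=False`: `base_rate * decay_rate ** (global_step / decay_steps)`. The figure of 55000 training examples is an assumption based on the default MNIST split (5000 of the 60000 training images are held out for validation); the helper name is hypothetical.

```python
def exponential_decay(base_rate, global_step, decay_steps, decay_rate):
    """Continuous exponential decay of the learning rate."""
    return base_rate * decay_rate ** (global_step / decay_steps)


NUM_TRAIN_EXAMPLES = 55000  # assumed size of mnist.train
BATCH_SIZE = 100
decay_steps = NUM_TRAIN_EXAMPLES / BATCH_SIZE  # 550 steps = one epoch

for step in (0, 550, 3000):
    rate = exponential_decay(0.01, step, decay_steps, 0.99)
    print("step %4d: learning rate %.6f" % (step, rate))
```

With these settings the rate falls by 1% per epoch, so after 3000 steps (about 5.5 epochs) it is still roughly 95% of its starting value.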

test.py (evaluation)

    import time

    import numpy as np
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    import mnist_inference
    import mnist_train

    # Seconds to wait before checking for a newer checkpoint
    # (an assumed value; adjust as needed)
    EVAL_INTERVAL_SECS = 10


    def evaluate(mnist):
        with tf.Graph().as_default():
            # The whole validation set (5000 images by default) is evaluated in one batch
            num_examples = mnist.validation.num_examples
            input_shape = [num_examples, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS]
            x = tf.placeholder(tf.float32, input_shape, name='x-input')
            y = tf.placeholder(tf.float32, [None, mnist_inference.OUT_NODE], name='y-input')
            reshaped_x = np.reshape(mnist.validation.images, input_shape)
            validate_feed = {x: reshaped_x, y: mnist.validation.labels}

            # Forward pass without dropout or regularization
            logits = mnist_inference.inference(x, False, None)
            correct = tf.equal(tf.argmax(y, 1), tf.argmax(logits, 1))
            acc = tf.reduce_mean(tf.cast(correct, tf.float32))

            # Load the moving-average value of each variable instead of its raw value
            variable_averages = tf.train.ExponentialMovingAverage(mnist_train.MOVING_AVERAGE_DEACY)
            variables_to_restore = variable_averages.variables_to_restore()
            saver = tf.train.Saver(variables_to_restore)

            while True:
                with tf.Session() as sess:
                    ckpt = tf.train.get_checkpoint_state(mnist_train.MODEL_SAVE_PATH)
                    if ckpt and ckpt.model_checkpoint_path:
                        saver.restore(sess, ckpt.model_checkpoint_path)
                        # The step count is the suffix of the checkpoint file name
                        global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]
                        acc_restore = sess.run(acc, feed_dict=validate_feed)
                        print("After %s training steps, the validation accuracy is %g" % (global_step, acc_restore))
                    else:
                        print("No checkpoint file found")
                        return
                time.sleep(EVAL_INTERVAL_SECS)


    def main(argv=None):
        mnist = input_data.read_data_sets("/data/MNIST_data", one_hot=True)
        evaluate(mnist)


    if __name__ == '__main__':
        tf.app.run()
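
The evaluation script restores the moving-average ("shadow") value of each variable rather than the raw trained value. The pure-Python sketch below shows the update rule that produces those shadow values. It assumes the documented behavior of `tf.train.ExponentialMovingAverage` when a step counter is supplied: the effective decay is `min(decay, (1 + num_updates) / (10 + num_updates))`, and the shadow starts at the variable's initial value. The helper names and the simulated variable are hypothetical.

```python
def ema_decay(decay, num_updates):
    """Effective decay: small early in training, capped at `decay` later on."""
    return min(decay, (1.0 + num_updates) / (10.0 + num_updates))


def ema_update(shadow, value, decay):
    """One moving-average step: shadow = decay * shadow + (1 - decay) * value."""
    return decay * shadow + (1.0 - decay) * value


variable = 0.0
shadow = variable  # the shadow starts at the variable's initial value
for step in range(3000):
    variable += 0.001  # stand-in for one gradient update
    shadow = ema_update(shadow, variable, ema_decay(0.99, step))

print("variable: %.4f, shadow: %.4f" % (variable, shadow))
```

Early in training the effective decay is small, so the shadow follows the variable closely; later it settles at 0.99 and the shadow becomes a smoothed, slightly lagging copy of the variable, which tends to be more robust on unseen data.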