Implementing Multiple Linear Regression with Gradient Descent in Python

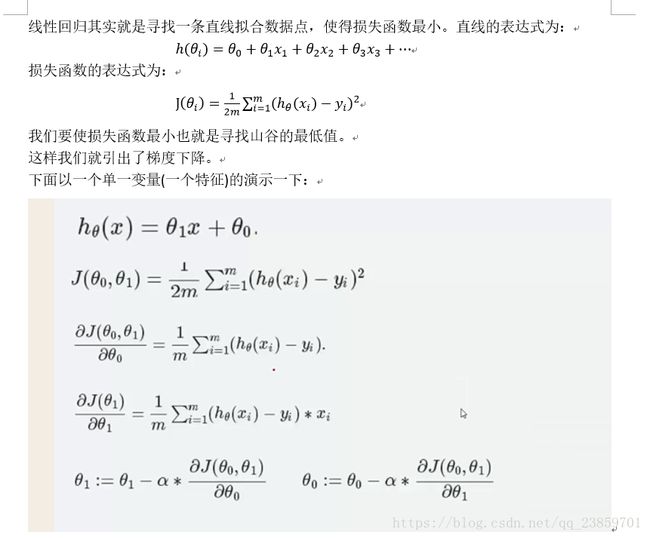

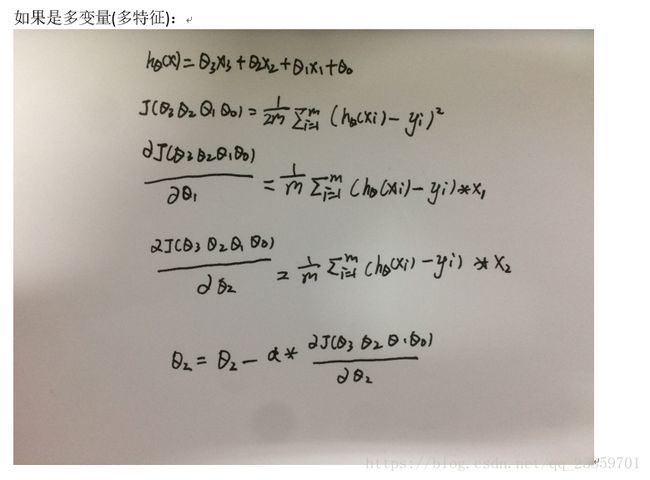

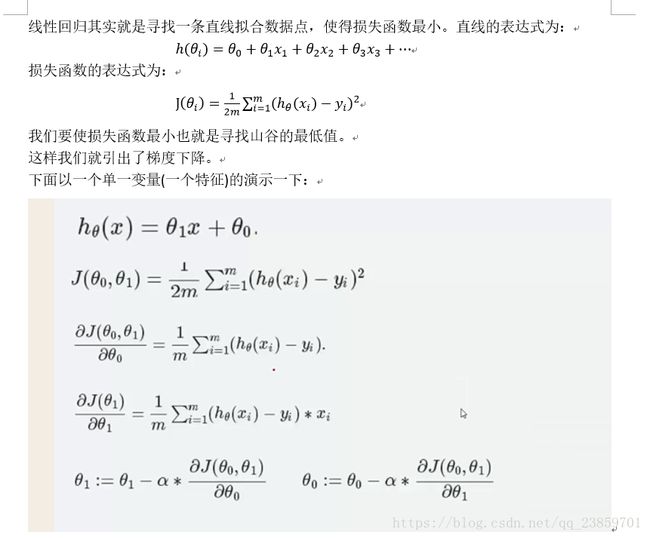

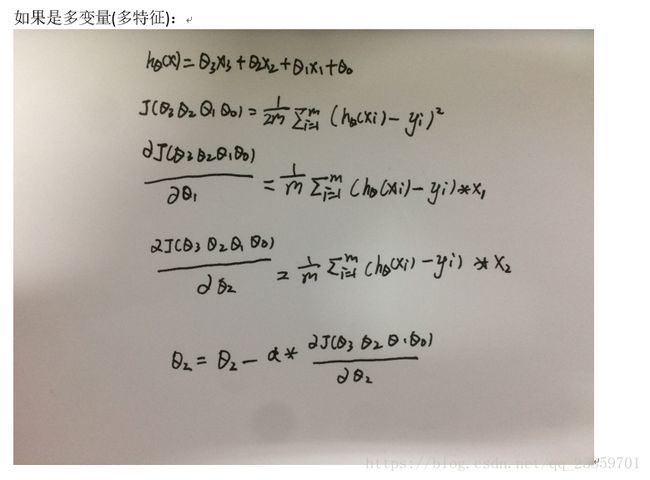

Illustration:

import pandas as pd
import matplotlib.pyplot as plt
import numpy as np

pga = pd.read_csv(r"D:\python3\data\Test.csv")  # raw string: backslashes in the path stay literal

pga.AT = (pga.AT - pga.AT.mean()) / pga.AT.std()  # z-score: zero mean, unit standard deviation
pga.V = (pga.V - pga.V.mean()) / pga.V.std()
pga.AP = (pga.AP - pga.AP.mean()) / pga.AP.std()
pga.RH = (pga.RH - pga.RH.mean()) / pga.RH.std()
pga.PE = (pga.PE - pga.PE.mean()) / pga.PE.std()
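
The five assignments above apply z-score standardization, which puts all columns on a comparable scale so that a single learning rate can work for every parameter. A minimal sketch of the same transformation on a small made-up table (the values in `toy` are hypothetical, not taken from `Test.csv`) shows the effect:

```python
import pandas as pd

# Hypothetical toy data; the column names only mirror those used above.
toy = pd.DataFrame({
    "AT": [10.0, 20.0, 30.0, 40.0],
    "PE": [480.0, 470.0, 455.0, 440.0],
})

# Z-score standardization: subtract the column mean, then divide by the
# column standard deviation (pandas uses the sample standard deviation, ddof=1).
standardized = (toy - toy.mean()) / toy.std()

print(standardized.mean())  # each entry is approximately 0
print(standardized.std())   # each entry is approximately 1
```

Because pandas divides by the sample standard deviation, results can differ slightly from libraries that use the population standard deviation.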

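def cost(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y):
    """Half mean squared error of the linear hypothesis over all m samples."""
    m = len(x1)
    # Vectorized over the samples; same value as summing (h - y[i])**2 in a loop.
    h = theta0 + x1 * theta1 + x2 * theta2 + x3 * theta3 + x4 * theta4
    J = ((h - y) ** 2).sum() / (2 * m)
    return J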
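
Written out, the quantity that `cost` computes is the half mean squared error of the linear hypothesis. The notation here is an assumption: $m$ is the number of samples and $x_j^{(i)}$ is feature $j$ of sample $i$.

$$
h_\theta(x^{(i)}) = \theta_0 + \theta_1 x_1^{(i)} + \theta_2 x_2^{(i)} + \theta_3 x_3^{(i)} + \theta_4 x_4^{(i)},
\qquad
J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2
$$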

def partial_cost_theta4(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y):
    """Partial derivative of the cost with respect to theta4."""
    h = theta0 + x1 * theta1 + x2 * theta2 + x3 * theta3 + x4 * theta4
    diff = (h - y) * x4
    partial = diff.sum() / len(y)
    return partial

def partial_cost_theta3(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y):
    """Partial derivative of the cost with respect to theta3."""
    h = theta0 + x1 * theta1 + x2 * theta2 + x3 * theta3 + x4 * theta4
    diff = (h - y) * x3
    partial = diff.sum() / len(y)
    return partial

def partial_cost_theta2(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y):
    """Partial derivative of the cost with respect to theta2."""
    h = theta0 + x1 * theta1 + x2 * theta2 + x3 * theta3 + x4 * theta4
    diff = (h - y) * x2
    partial = diff.sum() / len(y)
    return partial

def partial_cost_theta1(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y):
    """Partial derivative of the cost with respect to theta1."""
    h = theta0 + x1 * theta1 + x2 * theta2 + x3 * theta3 + x4 * theta4
    diff = (h - y) * x1
    partial = diff.sum() / len(y)
    return partial

def partial_cost_theta0(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y):
    """Partial derivative of the cost with respect to the intercept theta0."""
    h = theta0 + x1 * theta1 + x2 * theta2 + x3 * theta3 + x4 * theta4
    diff = (h - y)
    partial = diff.sum() / len(y)
    return partial
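
Each of the five functions above evaluates the same expression, the mean of `(h - y) * x_j`, with `x_j = 1` for the intercept. One way to gain confidence in hand-derived partial derivatives like these is to compare them against finite differences. The sketch below does that in matrix form on synthetic data; the helper names (`gradient`, `numerical_gradient`) and the random data are assumptions made for illustration, not part of the original code.

```python
import numpy as np

def cost(theta, X, y):
    """Half mean squared error; X already contains a leading column of ones."""
    residual = X @ theta - y
    return residual @ residual / (2 * len(y))

def gradient(theta, X, y):
    """Analytic gradient: entry j is (1/m) * sum((h - y) * x_j)."""
    return X.T @ (X @ theta - y) / len(y)

def numerical_gradient(theta, X, y, eps=1e-6):
    """Central finite differences, used only to check the analytic gradient."""
    grad = np.zeros_like(theta)
    for j in range(len(theta)):
        step = np.zeros_like(theta)
        step[j] = eps
        grad[j] = (cost(theta + step, X, y) - cost(theta - step, X, y)) / (2 * eps)
    return grad

# Synthetic data (an assumption for illustration, not the Test.csv data).
rng = np.random.default_rng(0)
features = rng.normal(size=(50, 4))
X = np.column_stack([np.ones(50), features])  # column of ones pairs with theta0
y = rng.normal(size=50)
theta = rng.normal(size=5)

# Largest disagreement between the analytic and the numerical gradient.
max_abs_diff = np.max(np.abs(gradient(theta, X, y) - numerical_gradient(theta, X, y)))
print(max_abs_diff)
```

If the analytic formula were wrong, for example with a missing `x_j` factor, the printed difference would be far from zero.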

def gradient_descent(x1, x2, x3, x4, y, alpha=0.1, theta0=0, theta1=0, theta2=0, theta3=0, theta4=0):
    """Batch gradient descent; returns the cost history and the parameter histories."""
    max_epochs = 1000  # upper bound on the number of iterations
    counter = 0
    c = cost(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
    costs = [c]
    convergence_thres = 0.000001  # stop once the cost changes by no more than this
    cprev = c + 10  # guarantees that the first loop iteration runs
    theta0s = [theta0]
    theta1s = [theta1]
    theta2s = [theta2]
    theta3s = [theta3]
    theta4s = [theta4]
    while (np.abs(cprev - c) > convergence_thres) and (counter < max_epochs):
        cprev = c
        # Compute every update from the current thetas, then apply them together.
        update0 = alpha * partial_cost_theta0(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
        update1 = alpha * partial_cost_theta1(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
        update2 = alpha * partial_cost_theta2(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
        update3 = alpha * partial_cost_theta3(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
        update4 = alpha * partial_cost_theta4(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
        theta0 -= update0
        theta1 -= update1
        theta2 -= update2
        theta3 -= update3
        theta4 -= update4
        theta0s.append(theta0)
        theta1s.append(theta1)
        theta2s.append(theta2)
        theta3s.append(theta3)
        theta4s.append(theta4)
        c = cost(theta0, theta1, theta2, theta3, theta4, x1, x2, x3, x4, y)
        costs.append(c)
        counter += 1
    return {'costs': costs, 'thetas': [theta0s, theta1s, theta2s, theta3s, theta4s]}
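
As a sanity check on the procedure above, the same update rule can be written in matrix form and compared with the closed-form least-squares solution. This is only a sketch under stated assumptions: `gradient_descent_matrix`, the synthetic data, and the chosen coefficients are hypothetical and not part of the original code. It uses the same learning rate, iteration cap, and convergence threshold as `gradient_descent`.

```python
import numpy as np

def gradient_descent_matrix(X, y, alpha=0.1, max_epochs=1000, tol=1e-6):
    """Batch gradient descent; X must include a leading column of ones."""
    m, n = X.shape
    theta = np.zeros(n)
    costs = [np.sum((X @ theta - y) ** 2) / (2 * m)]
    for _ in range(max_epochs):
        # Simultaneous update of all parameters from the current theta.
        theta -= alpha * X.T @ (X @ theta - y) / m
        costs.append(np.sum((X @ theta - y) ** 2) / (2 * m))
        # Stop once the cost changes by no more than the threshold.
        if abs(costs[-2] - costs[-1]) <= tol:
            break
    return theta, costs

# Synthetic, roughly standardized data (an assumption for illustration).
rng = np.random.default_rng(42)
features = rng.normal(size=(200, 4))
true_theta = np.array([0.5, 1.0, -0.5, 0.3, 0.0])
X = np.column_stack([np.ones(200), features])
y = X @ true_theta + 0.1 * rng.normal(size=200)

theta_gd, costs = gradient_descent_matrix(X, y)
theta_exact = np.linalg.lstsq(X, y, rcond=None)[0]  # closed-form reference

print("gradient descent:", theta_gd)
print("least squares:   ", theta_exact)
```

The two parameter vectors should agree closely but not exactly, because gradient descent stops as soon as the cost improvement falls below the threshold.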

print("costs =", gradient_descent(pga.AT, pga.V, pga.AP, pga.RH, pga.PE)['costs'])

descend = gradient_descent(pga.AT, pga.V, pga.AP, pga.RH, pga.PE, alpha=.01)
plt.scatter(range(len(descend["costs"])), descend["costs"])
plt.show()

Plot of the loss function against the number of iterations: