Complete configuration record for a Hadoop + Spark + ZooKeeper + HBase + Hive + Pig + MySQL + Sqoop cluster

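Directory layout: the cluster uses the nodes node63, node64, node65, node66, node67, node69, and node70.

**Hadoop:** node63 is the NameNode, node64 is the SecondaryNameNode, and node65, node66, node67, node68, node69, and node70 are the DataNodes.

**ZooKeeper:** deployed on node63, node64, node65, node66, node67, node69, and node70; node66 is usually the leader.

**HBase:** deployed on node63, node64, node65, node66, node67, node69, and node70; node63 is usually chosen as the HMaster, with node64 as the standby.

**Hive:** node63 is the Hive server and node70 is the client.

**Pig:** node63

**Spark:** deployed on node63, node64, node65, node66, node67, node69, and node70.

**MySQL:** installed on node63 and node64; node63 is the one used (local strategy).

**Sqoop:** node63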

Directory screenshots for node63:

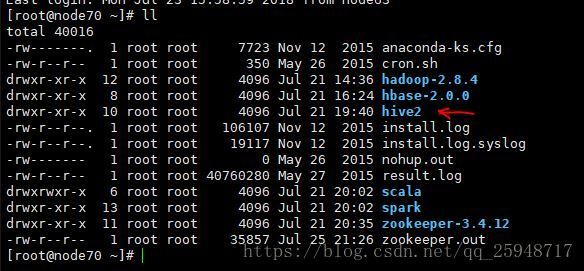

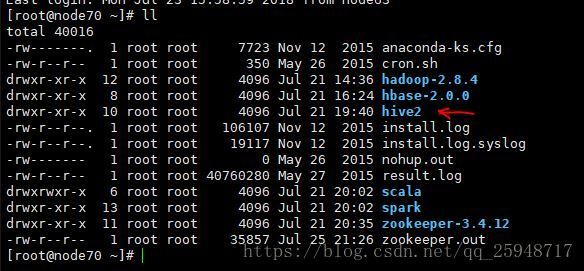

Directory screenshots for node70:

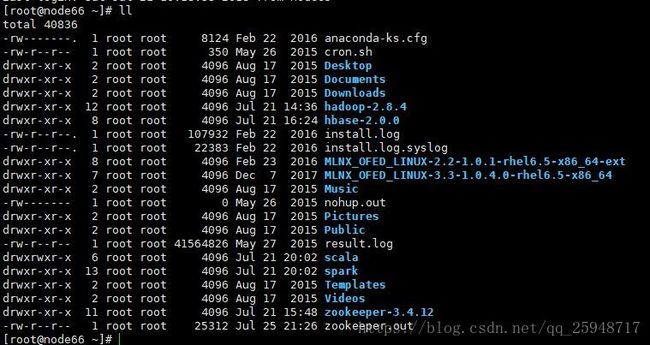

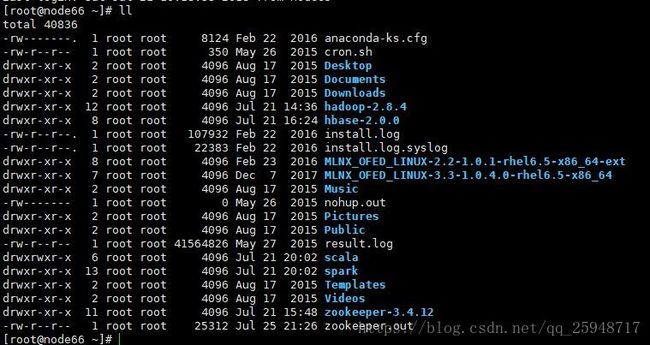

Screenshots for node64 to node69:

Disable the firewall on every node.
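The post does not say how the firewall was disabled. As a rough sketch only, assuming the nodes run a systemd-based distribution with `firewalld` (for example CentOS 7) and that root can SSH to every node without a password, the step might look like the following. The `run` helper and the `DRY_RUN` switch are illustrative names, not part of the original setup; by default the script only prints the commands.

```shell
#!/bin/sh
# Node names taken from the cluster description above.
NODES="node63 node64 node65 node66 node67 node69 node70"

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Stop firewalld now and keep it from starting at boot, on every node.
# Assumption: firewalld under systemd; other distributions need other commands.
disable_firewall() {
    for node in $NODES; do
        run ssh "root@$node" "systemctl stop firewalld && systemctl disable firewalld"
    done
}

disable_firewall
```

Running it with `DRY_RUN=0` would execute the commands over SSH instead of printing them.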

/etc/profile configuration on each node:

export JAVA_HOME=/usr/local/jdk1.8.0_172 # path where you unpacked the JDK

export CLASSPATH=$JAVA_HOME/lib

export PATH=${JAVA_HOME}/bin:$PATH

export HADOOP_HOME=/root/hadoop-2.8.4

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export ZOOKEEPER_HOME=/root/zookeeper-3.4.12

export PATH=$PATH:$ZOOKEEPER_HOME/bin

export HBASE_HOME=/root/hbase-2.0.0

export PATH=$PATH:$HBASE_HOME/bin

export SCALA_HOME=/root/scala

export PATH=$PATH:$SCALA_HOME/bin

export SPARK_HOME=/root/spark

export PATH=$PATH:$SPARK_HOME/bin

export HIVE_HOME=/root/hive2

export HIVE_CONF_DIR=/root/hive2/conf

export PATH=$PATH:$HIVE_HOME/bin

export PIG_HOME=/root/pig-0.16.0

export PIG_CLASSPATH=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$PIG_HOME/bin

export SQOOP_HOME=/root/sqoop-hadoop

export PATH=$PATH:$SQOOP_HOME/bin
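After editing /etc/profile it can help to confirm that each `*_HOME` variable actually points at an existing directory. The helper below is a small sketch for that check; `check_homes` is a hypothetical name introduced here, not something from the original post.

```shell
#!/bin/sh
# Report every named variable that is unset or does not point at an
# existing directory. Returns 0 only if all of them are fine.
check_homes() {
    status=0
    for name in "$@"; do
        # Look up the value of the variable whose name is in $name.
        eval "dir=\${$name:-}"
        if [ -z "$dir" ] || [ ! -d "$dir" ]; then
            echo "missing: $name=$dir"
            status=1
        fi
    done
    return $status
}
```

On a configured node, one possible usage is to run `. /etc/profile` and then `check_homes JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME HBASE_HOME SCALA_HOME SPARK_HOME HIVE_HOME PIG_HOME SQOOP_HOME`.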

Hadoop configuration:

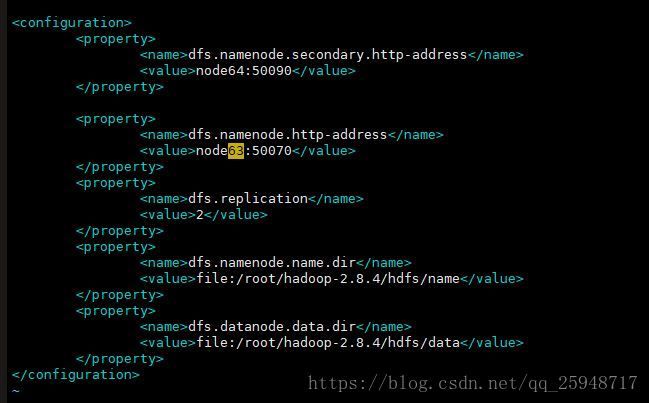

hdfs-site.xml

dfs.namenode.secondary.http-address

node64:50090

dfs.namenode.http-address

node63:50070

dfs.replication

2

dfs.namenode.name.dir

file:/root/hadoop-2.8.4/hdfs/name

dfs.datanode.data.dir

file:/root/hadoop-2.8.4/hdfs/data
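The settings in this post are listed as alternating property names and values. In the actual Hadoop `*-site.xml` files, each pair is a `<property>` element inside `<configuration>`. As an illustration of that mapping, the sketch below prints the hdfs-site.xml values listed above in XML form; the `property` and `hdfs_site` function names are made up for this example, and values containing `&` or `<` would need XML escaping, which this sketch does not do.

```shell
#!/bin/sh
# Emit one Hadoop <property> element for a name/value pair.
property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Print the hdfs-site.xml settings listed above as a complete XML document.
hdfs_site() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    property dfs.namenode.secondary.http-address node64:50090
    property dfs.namenode.http-address node63:50070
    property dfs.replication 2
    property dfs.namenode.name.dir file:/root/hadoop-2.8.4/hdfs/name
    property dfs.datanode.data.dir file:/root/hadoop-2.8.4/hdfs/data
    printf '</configuration>\n'
}

hdfs_site
```

The same pattern applies to the core-site.xml, yarn-site.xml, mapred-site.xml, hbase-site.xml, and hive-site.xml listings in this post.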

core-site.xml

fs.defaultFS

hdfs://node63:9000

hadoop.tmp.dir

file:/root/hadoop-2.8.4/tmp

A base for other temporary directories.

ha.zookeeper.quorum

node63:2181,node65:2181,node66:2181,node67:2181,node69:2181

hadoop.proxyuser.hadoop.hosts

*

hadoop.proxyuser.hadoop.groups

*

yarn-site.xml

yarn.resourcemanager.hostname

node63

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce_shuffle.class

org.apache.hadoop.mapred.ShuffleHandler

yarn.resourcemanager.address

node63:8032

yarn.resourcemanager.scheduler.address

node63:8030

yarn.resourcemanager.resource-tracker.address

node63:8031

yarn.resourcemanager.admin.address

node63:8033

yarn.resourcemanager.webapp.address

node63:8088

mapred-site.xml

mapreduce.framework.name

yarn

mapreduce.jobhistory.address

node63:10020

mapreduce.jobhistory.webapp.address

node63:19888

vim slaves:

node65

node66

node67

node69

node70

vim hadoop-env.sh

Add:

export JAVA_HOME=/usr/local/jdk1.8.0_172

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"

The other nodes use exactly the same configuration; once it is complete, simply copy it to them.
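The copy step is not spelled out in the post. One way it could be done, assuming every node uses the same install path and root has passwordless SSH, is an `scp` loop from node63. The `run` helper, the `DRY_RUN` switch, and the `sync_conf` name are illustrative; by default the script only prints what it would execute.

```shell
#!/bin/sh
# All nodes other than node63, where the configuration was edited.
OTHER_NODES="node64 node65 node66 node67 node69 node70"
CONF_DIR=/root/hadoop-2.8.4/etc/hadoop

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Copy the Hadoop configuration directory into the same parent path on each node.
sync_conf() {
    for node in $OTHER_NODES; do
        run scp -r "$CONF_DIR" "root@$node:${CONF_DIR%/*}/"
    done
}

sync_conf
```

Setting `DRY_RUN=0` would perform the copies; `rsync` would work equally well if it is installed on the nodes.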

ZooKeeper configuration:

On each node, create data/myid and write into it the number assigned to that node in zoo.cfg:

vim conf/zoo.cfg

The other nodes are configured the same way; only the content of data/myid differs.
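The zoo.cfg contents are not reproduced in this post, so the id assigned to each node is not known here. As a sketch of the myid step only: ZooKeeper expects the file to contain the `N` from that host's `server.N=...` line in zoo.cfg. The `write_myid` function name is hypothetical.

```shell
#!/bin/sh
# Write a ZooKeeper server id into <zk_home>/data/myid.
# $1: ZooKeeper install directory, $2: the id from this host's server.N line.
write_myid() {
    zk_home=$1
    id=$2
    mkdir -p "$zk_home/data" && printf '%s\n' "$id" > "$zk_home/data/myid"
}
```

For example, on the node listed as `server.1` in zoo.cfg, one would run `write_myid /root/zookeeper-3.4.12 1`; the id `1` here is a placeholder, not a value taken from the original configuration.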

HBase configuration:

Copy ZooKeeper's zoo.cfg into HBase's conf directory.

vim hbase-site.xml

hbase.rootdir

hdfs://node63:9000/hbase

hbase.zookeeper.quorum

node63,node64,node65,node66,node67,node69,node70

hbase.zookeeper.property.dataDir

/root/zookeeper-3.4.12/data

hbase.cluster.distributed

true

hbase.regionserver.handler.count

20

hbase.regionserver.maxlogs

64

hbase.master.maxclockskew

150000

vim hbase-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_172

export HBASE_MANAGES_ZK=false

vim backup-masters (the standby HMaster)

node65

vim regionservers

node63

node64

node65

node66

node67

node69

node70

The other nodes are the same.

Hive configuration:

node63:

Copy the JDBC driver used to connect to the database into lib.

vim hive-env.sh

HADOOP_HOME=/root/hadoop-2.8.4

export HIVE_AUX_JARS_PATH=/root/hive2/lib/

export HIVE_CONF_DIR=/root/hive2/conf/

vim hive-site.xml

hive.metastore.warehouse.dir

/hive/warehouse

hive.metastore.uris

thrift://node63:9083

hive.querylog.location

/root/hive2/logs

javax.jdo.option.ConnectionUserName

root

javax.jdo.option.ConnectionPassword

12345

javax.jdo.option.ConnectionURL

jdbc:mysql://node63:3306/hive?createDatabaseIfNotExist=true&useSSL=false

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

hive.server2.webui.host

node63

hive.server2.webui.port

10002

hive.zookeeper.quorum

node63,node64,node65,node66,node67,node69,node70

node70:

Everything else is the same as on node63.

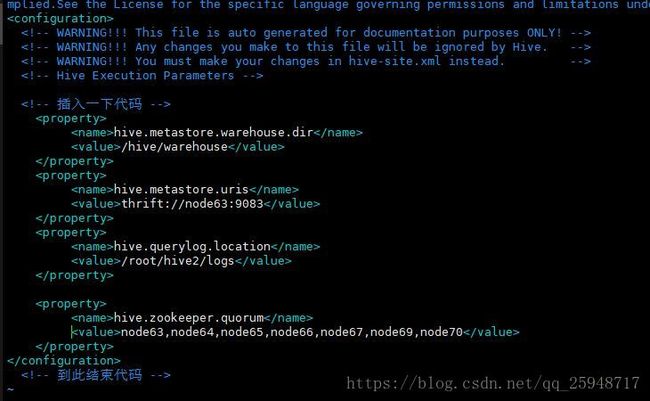

vim hive-site.xml

hive.metastore.warehouse.dir

/hive/warehouse

hive.metastore.uris

thrift://node63:9083

hive.querylog.location

/root/hive2/logs

hive.zookeeper.quorum

node63,node64,node65,node66,node67,node69,node70

Pig configuration:

vim log4j.properties

log4j.logger.org.apache.pig=info, A

log4j.appender.A=org.apache.log4j.ConsoleAppender

log4j.appender.A.layout=org.apache.log4j.PatternLayout

log4j.appender.A.layout.ConversionPattern=%-4r [%t] %-5p %c %x - %m%n

pig.logfile=/root/pig-0.16.0/logs

log4jconf=/root/pig-0.16.0/conf/log4j.properties

exectype=mapreduce

Sqoop configuration:

vim sqoop-env.sh

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export ZOOCFGDIR=/root/zookeeper-3.4.12

export HBASE_HOME=/root/hbase-2.0.0

export HIVE_HOME=/root/hive2

export HCAT_HOME=/root/hive2/hcatalogs

Copy mysql-connector-java-6.0.6.jar into lib.

Spark configuration:

For installation, see the blog post: https://blog.csdn.net/qq_25948717/article/details/80758713

vim spark-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_172 # path where you unpacked the JDK

export CLASSPATH=$JAVA_HOME/lib

export PATH=${JAVA_HOME}/bin:$PATH

export HADOOP_HOME=/root/hadoop-2.8.4

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export SCALA_HOME=/root/scala

export PATH=$PATH:$SCALA_HOME/bin

export SPARK_HOME=/root/spark

export PATH=$PATH:$SPARK_HOME/bin

export SPARK_MASTER_IP=node63

export SPARK_WORKER_MEMORY=2g

vim slaves

node64

node65

node66

node67

node69

node70