Kubernetes Installation Summary


1. Preface

(1) A brief introduction to the Kubernetes system architecture

(2) A quick look at the core concepts of Kubernetes

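Kubernetes is an open-source platform that provides container-centric infrastructure, automating the deployment, scaling, and operation of containers across a cluster of hosts. I have been installing and setting up Kubernetes for a while now, and the biggest thing I have gained is a feeling: as a Chinese beginner who rarely used Linux before, I have gradually become comfortable with it, picking up small lessons along the way almost without noticing. Now, to the point: below I share my experience installing Kubernetes. From here on, Kubernetes is abbreviated as k8s.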

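1.1 What makes k8s exciting

I will not go into how Kubernetes is built and deployed here; as a beginner, it is enough to know what makes it exciting. Kubernetes originated at Google, where its roots are Google's internal systems Borg and Omega. Many of the design and deployment concepts from those systems have found their way into Kubernetes in a new form, one that follows today's standards and incorporates many of the lessons Google learned over the past decade.

Kubernetes is meant to be the management layer for your containers. Its focus, however, is on seamlessly providing what your applications actually need and depend on. For example, Kubernetes provides: vendor-agnostic access to data volumes, load balancing, redundancy control, elastic scaling, rolling updates, and configuration and secret management.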

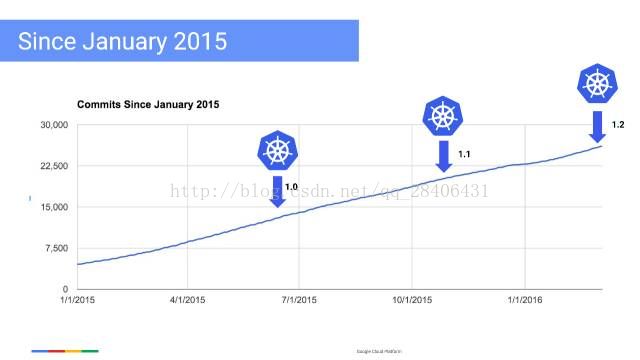

这是 Kubernetes 自从 2015 年以来收到的代码提交数量的一个截图:

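1.2 The boom in containerized applications

Containers have to be mentioned here, because k8s is built around containers. Containers have become enormously popular in recent years, and Docker has played a major part in that. To summarize the advantages of containers:

agile application creation and deployment; continuous development, integration, and deployment; separation of development and operations (decoupling applications from infrastructure); consistency across development, testing, and production environments; portability across clouds and operating system versions; resource isolation; and more.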

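1.3 Integration of Docker and k8s

See also: "Both Are Container Management Systems, So Why Is Kubernetes So Popular?"

In recent years, the three major cloud providers have all joined the open-source foundation that hosts Kubernetes, the Cloud Native Computing Foundation (CNCF), which has made k8s seemingly unstoppable. Docker Swarm, another container orchestration tool, has been left rather isolated, and Docker finally announced in its keynote at DockerCon Europe 2017 that it would integrate Kubernetes, at last boarding the Kubernetes train. By integrating Docker EE with Kubernetes, Docker is no longer just a development platform; it is also a production-grade ecosystem that can compete with PaaS solutions. Of course, k8s is not a traditional, all-inclusive PaaS either: it leaves some choices to the user.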

2.k8s启动前准备

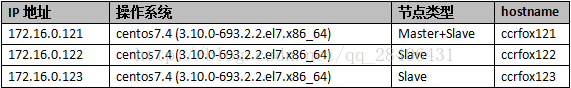

2.1机器环境:

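2.2 Passwordless SSH login setup:

See: Passwordless SSH login (note: on CentOS 7 the permissions of the ssh directory must be changed; on Ubuntu 17 this step can be skipped)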
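A minimal sketch of the passwordless-login setup, assuming it is run as root on the master and that the hostnames ccrfox121 to ccrfox123 already resolve (see the hosts step below). The function names are hypothetical; `ssh-keygen` and `ssh-copy-id` are the standard OpenSSH tools. The permission fix is the CentOS 7 step mentioned above: with its default `StrictModes yes`, sshd ignores an `authorized_keys` file whose directory or file is group- or world-writable.

```bash
#!/usr/bin/env bash
# Sketch: passwordless SSH from the master to every node (function names are hypothetical).

# Tighten ~/.ssh permissions; sshd's default StrictModes ignores keys
# when the directory or authorized_keys is group- or world-writable.
fix_ssh_permissions() {
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir"
  touch "$ssh_dir/authorized_keys"
  chmod 700 "$ssh_dir"
  chmod 600 "$ssh_dir/authorized_keys"
}

# Create a passphrase-less RSA key once, then copy its public half to each host.
distribute_key() {
  local key="$HOME/.ssh/id_rsa" host
  [ -f "$key" ] || ssh-keygen -t rsa -N "" -f "$key"
  for host in "$@"; do
    ssh-copy-id -i "$key.pub" "root@$host"   # prompts for the node's password once
  done
}

# Usage (run fix_ssh_permissions on every node, distribute_key on the master):
#   fix_ssh_permissions
#   distribute_key ccrfox121 ccrfox122 ccrfox123
```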

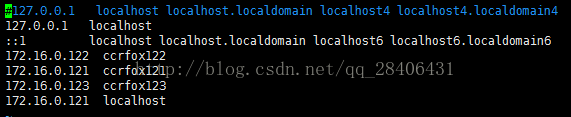

2.3 Edit the local hosts file to set up hostname-to-IP mappings:

vim /etc/hosts
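As a sketch, assuming the three machines are ccrfox121 to ccrfox123 at 172.16.0.121 to 172.16.0.123 (the names and IPs used in the etcd configs below), the following hypothetical helper appends each mapping only if that hostname is not already listed, so it can safely be re-run on every node:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname is already mapped on a non-comment line.
add_hosts_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # awk exits 0 only if some field after the IP equals the hostname exactly.
  if awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file" 2>/dev/null; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage, as root on every node:
#   for i in 121 122 123; do
#     add_hosts_entry /etc/hosts "172.16.0.$i" "ccrfox$i"
#   done
```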

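2.4 Distribution of components across nodes:

Components installed on the master:

docker

etcd: can be thought of as the k8s database; it stores all node, pod, and network information

kube-proxy: the basic component behind Services, forwarding Service traffic to pods

kubelet: the component that manages a k8s node; since this master also acts as a node, it is installed here too

kube-apiserver: exposes the k8s API and is the core of the whole cluster

kube-controller-manager: runs the controllers (such as the replication and node controllers) that manage and allocate cluster resources

kube-scheduler: schedules pods onto nodes

flanneld: the network component for the whole k8s cluster

Components installed on the slaves:

docker

kube-proxy

kubelet

flanneld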

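2.5 Disable the firewall (otherwise etcd may run into problems when forming the cluster)

Also remember to disable SELinux on every node to avoid unnecessary problems. The firewall should be turned off as well, to avoid conflicts with the iptables rules Docker manages for its containers.

Disable SELinux and the firewall: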

setenforce 0

systemctl stop firewalld

systemctl disable firewalld
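Note that `setenforce 0` only switches SELinux to permissive mode until the next reboot. A sketch of making the setting persistent, assuming the standard CentOS 7 file `/etc/selinux/config` (the helper name is hypothetical):

```bash
#!/usr/bin/env bash
# Hypothetical helper: persist the SELinux mode by rewriting the SELINUX= line,
# since `setenforce 0` only lasts until the next reboot.
persist_selinux_mode() {
  local mode="${1:-permissive}" conf="${2:-/etc/selinux/config}"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
  esac
  # ^SELINUX= matches only that key, not SELINUXTYPE= or comment lines.
  sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$conf"
}

# Usage, as root on every node:
#   setenforce 0                      # effective immediately
#   persist_selinux_mode permissive   # effective after reboot
#   systemctl stop firewalld && systemctl disable firewalld
```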

2.6 First, add the yum repository for the k8s components on all nodes, as shown below:

sudo vi /etc/yum.repos.d/virt7-docker-common-release.repo

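2.7 Switch to the Aliyun mirror for faster downloads

2.7.1 Connect to the network first, then replace the yum repository

A newly created user has no sudo privileges, so switch to the root user first:

su root

Enter the root password.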

2.7.2 Back up the current yum repository file

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

2.7.3 Download the CentOS 7 repository file from the Aliyun mirror

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

2.7.4 Clear and rebuild the cache

yum clean all

yum makecache

yum update
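Running the `mv` step a second time would overwrite the saved original with the already-replaced Aliyun file. A small sketch (hypothetical helper name) that makes the backup safe to repeat; it uses `cp` so the original stays in place until `wget` overwrites it:

```bash
#!/usr/bin/env bash
# Hypothetical helper: back up a file only once, so re-running the mirror
# switch never replaces the original backup with the Aliyun version.
backup_once() {
  local file="$1" backup="$1.backup"
  if [ -e "$backup" ]; then
    echo "backup exists, keeping it: $backup" >&2
    return 0
  fi
  cp -p "$file" "$backup"   # -p keeps mode and timestamps
}

# Usage, as root (URL from the step above):
#   backup_once /etc/yum.repos.d/CentOS-Base.repo
#   wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
#   yum clean all && yum makecache
```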

2.8 Install Docker

yum -y install docker

Docker is of course indispensable. After installing it, try pulling a few images to check that containers start successfully.
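A sketch of that check, assuming the small public `busybox` image as the test image (any image your machine can pull will do; the helper name is hypothetical):

```bash
#!/usr/bin/env bash
# Hypothetical smoke test: make sure the Docker daemon is running, then
# pull an image and run a throwaway container from it.
docker_smoke_test() {
  local image="${1:-busybox}"
  if ! systemctl is-active --quiet docker; then
    echo "docker is not running; try: systemctl start docker" >&2
    return 1
  fi
  # --rm removes the container again once `echo` has finished.
  docker pull "$image" >/dev/null &&
    [ "$(docker run --rm "$image" echo ok)" = "ok" ]
}

# Usage, as root:  docker_smoke_test busybox && echo "docker works"
```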

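2.9 Install etcd (a lightweight distributed key-value store)

sudo yum -y install etcd

2.9.1 Edit the configuration file

vim /etc/etcd/etcd.conf

The etcd configuration files on the 3 nodes are as follows: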

IP(ccrfox121): 172.16.0.121

# [member]

ETCD_NAME=etcd1

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

#ETCD_SNAPSHOT_COUNT="10000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

ETCD_LISTEN_PEER_URLS="http://172.16.0.121:2380"

ETCD_LISTEN_CLIENT_URLS="http://172.16.0.121:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

#ETCD_CORS=""

#

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://172.16.0.121:2380"

# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

ETCD_INITIAL_CLUSTER="etcd1=http://172.16.0.121:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://172.16.0.121:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#ETCD_ENABLE_V2="true"

#

#[proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[logging]

#ETCD_DEBUG="false"

# examples for -log-package-levels etcdserver=WARNING,security=DEBUG

#ETCD_LOG_PACKAGE_LEVELS=""

#

#[profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[auth]

#ETCD_AUTH_TOKEN="simple"

IP(ccrfox122): 172.16.0.122

# [member]

ETCD_NAME=etcd2

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

#ETCD_SNAPSHOT_COUNT="10000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

ETCD_LISTEN_PEER_URLS="http://172.16.0.122:2380"

ETCD_LISTEN_CLIENT_URLS="http://172.16.0.122:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

#ETCD_CORS=""

#

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://172.16.0.122:2380"

# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

ETCD_INITIAL_CLUSTER="etcd2=http://172.16.0.122:2380,etcd1=http://172.16.0.121:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://172.16.0.122:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#ETCD_ENABLE_V2="true"

#

#[proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[logging]

#ETCD_DEBUG="false"

# examples for -log-package-levels etcdserver=WARNING,security=DEBUG

#ETCD_LOG_PACKAGE_LEVELS=""

#

#[profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[auth]

#ETCD_AUTH_TOKEN="simple"

IP(ccrfox123): 172.16.0.123

# [member]

ETCD_NAME=etcd3

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

#ETCD_SNAPSHOT_COUNT="10000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

ETCD_LISTEN_PEER_URLS="http://172.16.0.123:2380"

ETCD_LISTEN_CLIENT_URLS="http://172.16.0.123:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

#ETCD_CORS=""

#

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://172.16.0.123:2380"

# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

ETCD_INITIAL_CLUSTER="etcd1=http://172.16.0.121:2380,etcd2=http://172.16.0.122:2380,etcd3=http://172.16.0.123:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://172.16.0.123:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#ETCD_ENABLE_V2="true"

#

#[proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[logging]

#ETCD_DEBUG="false"

# examples for -log-package-levels etcdserver=WARNING,security=DEBUG

#ETCD_LOG_PACKAGE_LEVELS=""

#

#[profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[auth]

#ETCD_AUTH_TOKEN="simple"
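The three files above differ only in the member name, the node IP, `ETCD_INITIAL_CLUSTER`, and `ETCD_INITIAL_CLUSTER_STATE`; every other line is a commented-out default. As a sketch (the function is hypothetical), the active lines can be generated from those four values:

```bash
#!/usr/bin/env bash
# Hypothetical generator for the active (uncommented) lines of
# /etc/etcd/etcd.conf shown above; the commented defaults are omitted.
# Args: member name, node IP, ETCD_INITIAL_CLUSTER value, new|existing
gen_etcd_conf() {
  local name="$1" ip="$2" cluster="$3" state="$4"
  cat <<EOF
ETCD_NAME=$name
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://$ip:2379,http://127.0.0.1:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_INITIAL_CLUSTER="$cluster"
ETCD_INITIAL_CLUSTER_STATE="$state"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
EOF
}

# Usage for the third node, matching the ccrfox123 file above:
#   gen_etcd_conf etcd3 172.16.0.123 \
#     "etcd1=http://172.16.0.121:2380,etcd2=http://172.16.0.122:2380,etcd3=http://172.16.0.123:2380" \
#     existing > /etc/etcd/etcd.conf
```

The first node uses state `new` and lists only itself, while each node added later with `etcdctl member add` uses `existing` and lists every member added so far plus itself, which is the pattern the three files above follow.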

2.9.2启动etcd组网

在ccrfox121结点上:

sudo systemctl start etcd

sudo etcdctl member add etcd1 http://ccrfox122:2380 //扩展集群,添加新结点组网

sudo systemctl start etcd //在ccrfox122结点上启动etcd

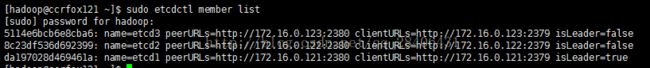

sudo etcdctl member list //查看新加入的结点

同样的方法添加ccrfox123。(注意:要先add结点,再在对应结点上启动etcd)

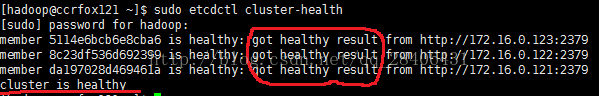

正确状况如下:

sudo etcdctl cluster-health //检查集群健康状态

只有状态均为健康,才可以继续下一步安装操作:

错误调试,可以参考:etcd集群部署遇到的坑
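Since the next steps depend on a healthy etcd, the check can be scripted. A sketch, assuming the etcd v2 `etcdctl` (the default API of the CentOS 7 etcd package), whose `cluster-health` output ends with a `cluster is healthy` summary line when all members are up; the helper name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll `etcdctl cluster-health` until its summary line
# says the cluster is healthy, or give up after a number of tries.
wait_etcd_healthy() {
  local tries="${1:-30}" delay="${2:-2}" i
  for ((i = 1; i <= tries; i++)); do
    # Match the whole line so "cluster is unhealthy"/"degraded" never counts.
    if etcdctl cluster-health 2>/dev/null | grep -qx 'cluster is healthy'; then
      return 0
    fi
    sleep "$delay"
  done
  echo "etcd cluster still not healthy after $tries checks" >&2
  return 1
}

# Usage:  wait_etcd_healthy 30 2 && echo "safe to continue"
```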

3. Install the Kubernetes environment

yum -y install kubernetes-master flannel   (run on the master node)

yum -y install kubernetes-node flannel   (run on the slave nodes)

3.1 Edit the k8s configuration files:
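The files below are edited by hand in vim. When the same change has to be made on several nodes (for example `KUBELET_HOSTNAME`, which must be each node's own address), a small helper makes the edits repeatable. A sketch, assuming these files are plain `KEY="value"` lists that systemd reads as environment files, where the last assignment of a key wins; the function name is hypothetical, and values must not contain double quotes:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set KEY="value" in a sysconfig-style file.
# All active KEY=... lines are dropped (comments are kept) and the new
# assignment is appended at the end of the file.
set_conf_var() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  grep -vE "^${key}=" "$file" > "$tmp"
  printf '%s="%s"\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file"   # rewrite in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Usage on node ccrfox122 (each node uses its own IP):
#   set_conf_var /etc/kubernetes/kubelet KUBELET_HOSTNAME "--hostname-override=172.16.0.122"
#   set_conf_var /etc/kubernetes/config KUBE_MASTER "--master=http://172.16.0.121:8080"
```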

vim /etc/kubernetes/config   (master node)

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://172.16.0.121:8080"

vim /etc/kubernetes/apiserver   (master node)

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

#KUBE_API_ADDRESS="--address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://172.16.0.121:2379,http://172.16.0.122:2379,http://172.16.0.123:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

# Add your own!

KUBE_API_ARGS=""

vim /etc/kubernetes/controller-manager   (master node, unchanged)

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

#KUBE_CONTROLLER_MANAGER_ARGS=""

vim /etc/kubernetes/scheduler   (master node, unchanged)

###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS=""

vim /etc/kubernetes/config   (slave nodes)

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://172.16.0.121:8080"

vim /etc/kubernetes/kubelet   (slave nodes; set --hostname-override to each node's own IP)

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=172.16.0.122"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://172.16.0.121:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS="--cluster-dns=10.254.0.254 --cluster-domain=cluster.local"

vim /etc/kubernetes/proxy   (slave nodes, unchanged)

###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS=""

3.2 Install flannel

3.2.1 How it works

See: "Understanding Flannel in One Article"

3.2.2 Edit the configuration file:

vim /etc/sysconfig/flanneld   (identical on all 3 nodes)

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://172.16.0.121:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass

FLANNEL_OPTIONS=""

3.2.3 Create the network that flannel allocates subnets from

# run only once, against etcd on the master

etcdctl mk /atomic.io/network/config '{"Network": "10.1.0.0/16"}'

# to recreate it, delete it first (same prefix as FLANNEL_ETCD_PREFIX)

etcdctl rm /atomic.io/network/ --recursive
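Because `etcdctl mk` refuses to overwrite an existing key, changing the network means deleting the key first. A sketch of an alternative, assuming the same etcd v2 `etcdctl` (the helper name is hypothetical): `etcdctl set` creates or overwrites the key in one step. The prefix must match `FLANNEL_ETCD_PREFIX` in `/etc/sysconfig/flanneld`, or flanneld will not find its configuration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: (re)write flannel's network config in etcd under the
# same prefix as FLANNEL_ETCD_PREFIX in /etc/sysconfig/flanneld.
set_flannel_network() {
  local prefix="${1:-/atomic.io/network}" cidr="${2:-10.1.0.0/16}"
  # Basic shape check for an IPv4 CIDR such as 10.1.0.0/16.
  if ! [[ "$cidr" =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$ ]]; then
    echo "not an IPv4 CIDR: $cidr" >&2
    return 1
  fi
  # `set` creates or overwrites the key, unlike `mk`, which fails if it exists.
  etcdctl set "$prefix/config" "{\"Network\": \"$cidr\"}"
}

# Usage (once, on the master):  set_flannel_network /atomic.io/network 10.1.0.0/16
# Check the stored value:       etcdctl get /atomic.io/network/config
```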

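Reset the docker0 bridge configuration

Delete the docker0 bridge that Docker created by default when it first started. When flannel starts, it obtains a subnet for this host, and docker0 is then given an address in that subnet to act as the gateway for the containers. If docker0 still carries its old IP address at that point, startup will fail.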

ip link del docker0

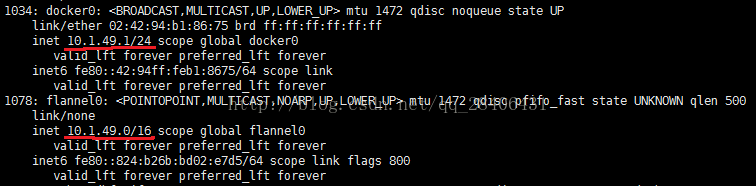

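Check whether docker0 is now managed by flannel (flannel0)

As the screenshot above shows, docker0 and flannel0 are in the same subnet. If they are not, run ip link del docker0 and then restart docker.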
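A sketch of that check, assuming the `udp` backend (which creates the `flannel0` device mentioned above) and flannel's default of leasing each host a /24 out of the configured 10.1.0.0/16, so both interfaces should share their first three octets. The function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an interface's IPv4 address without the prefix length.
iface_ipv4() {
  # `ip -4 -o addr` prints one line per address; field 4 is e.g. 10.1.45.1/24.
  ip -4 -o addr show dev "$1" | awk '{ split($4, a, "/"); print a[1]; exit }'
}

# Succeed if docker0 and flannel0 sit in the same per-host /24 lease.
same_flannel_subnet() {
  local d f
  d="$(iface_ipv4 docker0)"
  f="$(iface_ipv4 flannel0)"
  [ -n "$d" ] && [ -n "$f" ] && [ "${d%.*}" = "${f%.*}" ]   # compare first three octets
}

# Usage, as root:
#   same_flannel_subnet || { ip link del docker0; systemctl restart docker; }
```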

3.2.4 检查

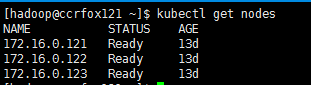

在master上执行下面,检查kubernetes的状态

只有所有结点状态均为ready才算是大功告成,划分的子网间也可以相互ping一下,看是否连通。
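A scripted version of the Ready check, assuming `kubectl` on the master reaches the API server at its default local address (the insecure port 8080 configured above) and prints STATUS as the second column of `kubectl get nodes`; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical check: succeed only if every node listed by `kubectl get nodes`
# has a STATUS (2nd column) starting with "Ready" ("NotReady" does not).
all_nodes_ready() {
  local out
  out="$(kubectl get nodes --no-headers 2>/dev/null)" || return 1
  [ -n "$out" ] || return 1   # no nodes registered yet
  # awk exits 0 when it finds a node that is not Ready; `!` inverts that.
  ! printf '%s\n' "$out" | awk '$2 !~ /^Ready/ { bad = 1 } END { exit !bad }'
}

# Usage on the master:  all_nodes_ready && echo "all nodes Ready"
```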

3.3 Start all cluster components:

sudo systemctl daemon-reload

sudo systemctl start etcd

sudo systemctl enable etcd

sudo systemctl restart docker

sudo systemctl enable docker

sudo systemctl start flanneld

sudo systemctl enable flanneld

Run on the master node:

sudo systemctl start kube-apiserver

sudo systemctl start kube-controller-manager

sudo systemctl start kube-scheduler

Run on the slave nodes:

sudo systemctl start kubelet

sudo systemctl start kube-proxy

To check whether the components started successfully, list the listening ports with: sudo ss -tlnp
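The start-up sequence above can be wrapped in one helper. A sketch, assuming the systemd unit names installed by the CentOS packages above; the function name is hypothetical. Unlike the original commands, it also enables the Kubernetes services at boot and starts flanneld before docker, so that Docker can pick up the subnet flannel leases for this host:

```bash
#!/usr/bin/env bash
# Hypothetical helper: start and enable a node's services in order, then
# report any that did not come up.
start_k8s_services() {
  local role="$1" svc failed=0
  local -a services=(etcd flanneld docker)
  case "$role" in
    master) services+=(kube-apiserver kube-controller-manager kube-scheduler) ;;
    slave)  services+=(kubelet kube-proxy) ;;
    *) echo "usage: start_k8s_services master|slave" >&2; return 2 ;;
  esac
  systemctl daemon-reload
  for svc in "${services[@]}"; do
    systemctl enable "$svc" >/dev/null 2>&1
    systemctl restart "$svc"   # restart also starts a stopped unit
    if ! systemctl is-active --quiet "$svc"; then
      echo "not active: $svc (see: journalctl -u $svc)" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Usage, as root:  start_k8s_services master   # on ccrfox121
#                  start_k8s_services slave    # on the other nodes
```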

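3.4 Test case:

You are probably eager to run an example by now, so let's get started.

First make sure the images you specify can be pulled successfully on your machines.

Now we let k8s manage container startup by writing a ReplicationController (RC for short).

RC configuration:

vim ~/frontend-localredis-pods.yaml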

apiVersion: v1
kind: ReplicationController
metadata:
  name: testrc
  labels:
    name: testrc
spec:
  replicas: 6
  selector:
    name: doublepod
  template:
    metadata:
      labels:
        name: doublepod
    spec:
      containers:
      - name: front
        image: fedora/apache
        ports:
        - containerPort: 5000
      - name: back
        image: tomcat
        ports:
        - containerPort: 8080

YAML is strict about formatting: indent with spaces (never tabs) and keep the nesting levels exactly as shown.

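sudo kubectl create -f ~/frontend-localredis-pods.yaml   # create the RC

sudo kubectl get pods -o wide   # check the pods' status and which nodes they run on

sudo kubectl describe pods   # show detailed container start-up events

sudo systemctl status docker   # check the Docker service status and its recent log lines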
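Pulling the images can take a while, so the pods may stay in `ContainerCreating` for some time. A sketch of waiting for them, assuming `kubectl get pods` prints STATUS as its third column; the helper name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until every pod matching a label selector
# reports STATUS (3rd column of `kubectl get pods`) "Running".
wait_pods_running() {
  local selector="$1" tries="${2:-60}" i out
  for ((i = 1; i <= tries; i++)); do
    out="$(kubectl get pods -l "$selector" --no-headers 2>/dev/null)"
    # awk exits 1 as soon as any listed pod is not Running.
    if [ -n "$out" ] &&
       printf '%s\n' "$out" | awk '$3 != "Running" { bad = 1 } END { exit bad }'; then
      return 0
    fi
    sleep 5
  done
  echo "pods matching $selector are not all Running" >&2
  return 1
}

# Usage for the RC above (its pod template label is name=doublepod):
#   wait_pods_running name=doublepod && sudo kubectl get pods -o wide
```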

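3.5 Some handy commands

Quickly check a k8s component's status (the -l output includes the full command line, showing the flags it was started with)

systemctl status kube-proxy -l

Logs of a container in a pod (-c is required when the pod has more than one container)

kubectl logs pod-name -c container-name

Docker container logs

sudo docker logs containerId

List the pods in all namespaces

kubectl get pod --all-namespaces -o wide

Force-delete pods (this deletes every pod in the current namespace, so use it with care)

sudo kubectl delete pods --all --grace-period=0 --force

View a unit's logs for a given time window

sudo /usr/bin/journalctl -u kubelet --since="2017-10-17 14:38:00" --until="2017-10-17 14:38:30"

Reference: "An Introduction to the journalctl Log Viewer"

Recommended reading:

How to write pod configurations: "K8s Learning 2: RC/Service/Pod in Practice"

Explanations of some k8s configuration options: the k8s Chinese community (k8s中文社区)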