How to Build a Highly Available Reverse Proxy and Load Balancer with Nginx + Keepalived

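Keepalived works at layer 3 (IP), layer 4 (TCP), and layer 5 (the application layer, counting the TCP/IP stack as five layers).

Nginx serves www traffic to clients through a virtual IP (VIP) and uses a scheduling algorithm to balance load across the backend web servers.

The steps below assume Tengine or Nginx is already installed.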

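【Part 1】Configure a virtual IP address and expose only this VIP to the outside

The steps below use node1 (192.168.100.151) as the example.

【Step 1】

Add an upstream block inside the http block, listing the Tomcat servers to load-balance (IPs ending in .152, .153, and .154).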

```
[root@node1 conf]# grep -v "#" nginx.conf
worker_processes 2;
events {
    worker_connections 1024;
}
http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 1;
    upstream howareyou {    # the 3 Tomcat servers
        server 192.168.100.152:8080;
        server 192.168.100.153:8080;
        server 192.168.100.154:8080;
    }
    server {
        listen 8000;
        location /howareyou {
            proxy_pass http://howareyou/;
        }
        location / {
            root html;
            index index.html index.htm;
        }
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}
```
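The `proxy_pass http://howareyou/;` line has a URI part (the trailing `/`). Per the nginx documentation, in that case the part of the request URI that matches the location prefix is replaced by that URI. The hypothetical bash function below models only that prefix-replacement rule, to show which path Tomcat actually receives; it does not model nginx's URI normalization, regex locations, or variables in `proxy_pass`.

```bash
#!/usr/bin/env bash
# proxied_uri PREFIX PROXY_URI REQUEST_URI
# Models nginx's rule for proxy_pass with a URI part: the part of the request URI
# that matches the location prefix is replaced by PROXY_URI.
# Returns 1 (and prints nothing) if the request does not start with PREFIX.
proxied_uri() {
  local prefix=$1 target=$2 request=$3
  case $request in
    "$prefix"*) printf '%s%s\n' "$target" "${request#"$prefix"}" ;;
    *) return 1 ;;
  esac
}

# For this article's "location /howareyou" + "proxy_pass http://howareyou/;":
#   proxied_uri /howareyou / /howareyou            -> /
#   proxied_uri /howareyou / /howareyou/index.jsp  -> //index.jsp
```

So the bare URL `/howareyou` reaches Tomcat as `/`, which is served by the ROOT application's index.jsp. The second case shows why `location /howareyou/` is often paired with `proxy_pass http://howareyou/;` when sub-paths are involved.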

【Step 2】Add a virtual IP address on the existing NIC

```
[root@node1 ~]# ifconfig eth0:1 192.168.100.200 netmask 255.255.255.0 up
[root@node1 ~]# ifconfig -a | grep --color -A 3 "eth0:1"
eth0:1    Link encap:Ethernet  HWaddr 00:0C:29:35:A8:FD
          inet addr:192.168.100.200  Bcast:192.168.100.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
```

On each of the 3 Tomcat servers, set the content it returns to web clients:

```
[root@node2 ROOT]# pwd
/opt/apache-tomcat-8.0.53/webapps/ROOT
[root@node2 ROOT]# grep -vE "#|^$" index.jsp
from node2Session=<%=session.getId()%>

[root@node3 ~]# cd /opt/apache-tomcat-8.0.53/webapps/ROOT
[root@node3 ROOT]# cat index.jsp
from node3Session=<%=session.getId()%>

[root@node4 ~]# cd /opt/apache-tomcat-8.0.53/webapps/ROOT/
[root@node4 ROOT]# cat index.jsp
from node4Session=<%=session.getId()%>
```
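The three index.jsp files differ only in the node name. A hypothetical helper such as `jsp_body` below could generate that line on each Tomcat node. The helper name and the use of `hostname -s` are assumptions, not part of the original setup.

```bash
#!/usr/bin/env bash
# jsp_body NODE: prints the one-line index.jsp body used on each Tomcat node.
# printf needs "%%" to emit a literal "%" for the JSP expression tag <%= ... %>.
jsp_body() {
  printf 'from %sSession=<%%=session.getId()%%>\n' "$1"
}

# Hypothetical usage on each Tomcat node (assumes the short hostname is node2/node3/node4):
#   jsp_body "$(hostname -s)" > /opt/apache-tomcat-8.0.53/webapps/ROOT/index.jsp
```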

【Step 3】

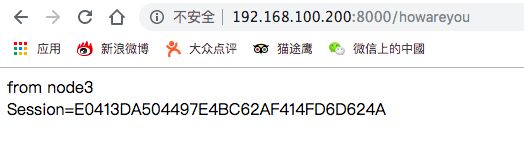

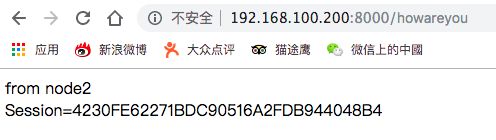

Access the URL on the virtual IP: http://192.168.100.200:8000/howareyou

Result:

```
First request:
from node4
Session=0248C9E8C73DA87237EFA89974430ECB

After a refresh:
from node2
Session=AEA89EF695781E1829517EEAFAC93A10

After another refresh:
from node3
Session=42F8D3CD8C20B246E382D6F5A2B04B72
```

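As the results show, when the three Tomcat web servers have equal weights, nginx distributes successive HTTP requests evenly across the three backends. The HTTP client does not need to know which Tomcat actually serves it; it only needs the VIP, port, and URI.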
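nginx's default round-robin balancer is based on a smooth weighted round-robin scheme, and servers without a `weight` parameter get weight 1. The bash sketch below models that scheme for a single scheduler. It is a simplification: without a shared `zone` in the upstream block, each nginx worker process keeps its own state (this config runs `worker_processes 2`). So the order observed in Step 3 (node4, node2, node3) need not start at the first server. `swrr_sequence` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Smooth weighted round-robin (SWRR): on each pick, every server's current weight grows
# by its configured weight, the first server with the largest current weight wins, and
# the winner's current weight is reduced by the sum of all weights.
# swrr_sequence COUNT NAME:WEIGHT ...  -> prints the first COUNT picks, space-separated
swrr_sequence() {
  local count=$1
  shift
  local -a names=() weights=() current=()
  local spec
  for spec in "$@"; do
    names+=("${spec%%:*}")
    weights+=("${spec##*:}")
    current+=(0)
  done

  local total=0 w
  for w in "${weights[@]}"; do
    total=$(( total + w ))
  done

  local -a picks=()
  local step i best
  for (( step = 0; step < count; step++ )); do
    # Every server gains its configured weight
    for i in "${!names[@]}"; do
      current[i]=$(( current[i] + weights[i] ))
    done
    # The first server holding the largest current weight is chosen
    best=0
    for i in "${!names[@]}"; do
      if (( current[i] > current[best] )); then
        best=$i
      fi
    done
    # The winner pays back the total weight, which spreads its picks out smoothly
    current[best]=$(( current[best] - total ))
    picks+=("${names[best]}")
  done
  echo "${picks[*]}"
}

# Equal weights, as in this article:
#   swrr_sequence 6 node2:1 node3:1 node4:1  -> node2 node3 node4 node2 node3 node4
# Unequal weights (hypothetical):
#   swrr_sequence 7 a:5 b:1 c:1              -> a a b a c a a
```

With equal weights the scheme reduces to plain rotation. With unequal weights it interleaves the heavier server instead of sending it a burst of consecutive requests.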


【Step 4】Move the VIP from node1 to node4

```
[root@node1 ~]# ifconfig eth0:1 down
[root@node1 ~]# ifconfig -a | grep --color -A 3 "eth0"
eth0      Link encap:Ethernet  HWaddr 00:0C:29:35:A8:FD
          inet addr:192.168.100.151  Bcast:192.168.100.255  Mask:255.255.255.0
          inet6 addr: fe80::20c:29ff:fe35:a8fd/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1

[root@node4 ROOT]# ifconfig eth1:1 192.168.100.200 netmask 255.255.255.0 up
```
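The same move can also be done with iproute2's `ip` command. After a VIP is moved by hand, hosts on the LAN may keep node1's MAC address cached for 192.168.100.200 until their ARP entries expire. Sending gratuitous ARP from the new holder updates them, which keepalived does automatically (see the `Sending gratuitous ARPs` lines in node1's log in Part 2, Step 6). The sketch assumes iproute2 and the iputils `arping` shipped with CentOS. `run`, `add_vip`, `del_vip`, and `DRY_RUN` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for moving the VIP by hand with iproute2 and iputils arping.
# With DRY_RUN set, commands are printed instead of executed (executing needs root).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# del_vip VIP IFACE: remove the VIP (/24, as in this article) from an interface
del_vip() {
  run ip addr del "$1/24" dev "$2"
}

# add_vip VIP IFACE: add the VIP with an "IFACE:1" label, then send 3 unsolicited
# (gratuitous) ARP packets so neighbours map the VIP to this node's MAC address
add_vip() {
  run ip addr add "$1/24" dev "$2" label "$2:1"
  run arping -U -c 3 -I "$2" "$1"
}

# Usage:
#   on node1: del_vip 192.168.100.200 eth0
#   on node4: add_vip 192.168.100.200 eth1
```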

【Step 5】Stop nginx on node1 and start nginx on node4

node4's nginx.conf is identical to node1's.

【Step 6】Access the URL http://192.168.100.200:8000/howareyou

The result is the same as in Step 3 above.

【Part 2】Configure highly available Nginx + Keepalived

【Step 1】Role planning

node1: state=MASTER, priority=100

node4: state=BACKUP, priority=50
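VRRP elects the live node with the highest priority as MASTER. With this plan, node1 (100) is MASTER while it is up, and node4 (50) takes over only when node1 stops advertising. The hypothetical helper below models just that comparison. Real VRRP breaks a priority tie by the higher primary IP address, which this sketch does not model (it keeps the first node listed).

```bash
#!/usr/bin/env bash
# vrrp_master NAME:PRIORITY ...  -> prints the node that wins the MASTER election
# among the nodes that are currently advertising. Ties keep the first listed node
# (real VRRP compares primary IP addresses instead).
vrrp_master() {
  local best_name="" best_prio=-1 spec name prio
  for spec in "$@"; do
    name=${spec%%:*}
    prio=${spec##*:}
    if [ "$prio" -gt "$best_prio" ]; then
      best_name=$name
      best_prio=$prio
    fi
  done
  echo "$best_name"
}

# Both nodes up:       vrrp_master node1:100 node4:50  -> node1
# node1 stopped:       vrrp_master node4:50            -> node4
```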

【Step 2】Install keepalived on node1 and node4

```
[root@node4 keepalived]# cat /etc/yum.repos.d/CentOS-Base.repo | grep -v "#"
[base]
name=CentOS-$releasever - Base
mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os&infra=$infra
baseurl=http://mirror.centos.org/centos/$releasever/os/$basearch/
gpgcheck=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6
```

```
yum clean all
yum makecache
yum install -y keepalived    # config directory is /etc/keepalived/; keepalived.conf there is the configuration file
```

【Step 3】Configure node1 and node4 according to the role plan in Step 1

3.1 Edit nginx.conf and keepalived.conf on each node

Edit nginx.conf on node1; node4's is identical:

```
[root@node1 ~]# grep -vE "#|^$" /opt/tengine-2.3.2/conf/nginx.conf
worker_processes 2;
events {
    worker_connections 1024;
}
http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 1;
    upstream howareyou {
        server 192.168.100.152:8080;    # the 3 backend Tomcat servers
        server 192.168.100.153:8080;    # what each Tomcat returns was set in Part 1, Step 2 above
        server 192.168.100.154:8080;
    }
    server {
        listen 8000;
        location /howareyou {
            proxy_pass http://howareyou/;
        }
        location / {
            root html;
            index index.html index.htm;
        }
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}
```

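Edit keepalived.conf on node1:

```
[root@node1 ~]# grep -v "^$" /etc/keepalived/keepalived.conf
#ConfigurationFile for keepalived
global_defs {
    notification_email {    #recipient addresses for notification email
        [email protected]
    }
    notification_email_from [email protected]    #sender address for notification email
    smtp_server mail.qq.com
    smtp_connect_timeout 30
    router_id nginx_backup
}
vrrp_script check_nginx {    #script that monitors nginx
    script "/root/check_nginx.sh"
    interval 2    #run the check every 2 seconds
    weight 2    #adjust the priority by 2 according to the script result
}
vrrp_instance vrrptest {    #defines the vrrptest instance
    state MASTER    #initial state of this node
    interface eth0    #NIC used for VRRP communication (this CentOS host's NIC)
    virtual_router_id 51    #virtual router ID; must be identical on both nginx servers (one group) for failover to work
    priority 100    #VRRP priority, higher value wins; the BACKUP node's value must not exceed the MASTER's
    advert_int 1    #interval in seconds between VRRP advertisements (liveness checks between the servers)
    authentication {
        auth_type PASS    #authentication type
        auth_pass ufsoft    #password; must be identical on all servers in the group
    }
    track_script {    #run the script that monitors the nginx process
        check_nginx
    }
    virtual_ipaddress {    #virtual IP address
        192.168.100.200
    }
}
```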
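In keepalived, a `vrrp_script`'s `weight` adjusts the instance priority based on the script's exit status. A positive weight is added while the script succeeds (exit status 0), and a negative weight is subtracted while it fails. The sketch below models just that rule. With `weight 2` as configured here, both nodes gain 2 while their checks pass (102 versus 52, or 42 with the priority 40 shown in Step 5), so the weight alone never reorders them. In this article, failover is instead triggered by check_nginx.sh stopping keepalived. The negative weight in the usage lines is hypothetical.

```bash
#!/usr/bin/env bash
# effective_priority BASE WEIGHT SCRIPT_EXIT_STATUS
# Positive weight: added while the script succeeds (exit status 0).
# Negative weight: applied (subtracted) while the script fails (non-zero exit status).
effective_priority() {
  local base=$1 weight=$2 rc=$3
  if [ "$weight" -gt 0 ] && [ "$rc" -eq 0 ]; then
    echo $(( base + weight ))
  elif [ "$weight" -lt 0 ] && [ "$rc" -ne 0 ]; then
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

# This article's node1 with weight 2, nginx up:    effective_priority 100 2 0    -> 102
# Hypothetical "weight -70", check failing:       effective_priority 100 -70 1  -> 30
```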

Then create the virtual IP on node1's eth0:

```
[root@node1 ~]# ifconfig eth0:1 192.168.100.200 netmask 255.255.255.0 up
```

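Edit nginx.conf on node4.

Its content is the same as node1's, because both nodes provide the same service: reverse proxying for web users and load balancing across the backend Tomcat web servers.

Edit keepalived.conf on node4: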

```
[root@node4 ~]# grep -v "^$" /etc/keepalived/keepalived.conf
#ConfigurationFile for keepalived
…
```

The health-check script /root/check_nginx.sh referenced by vrrp_script check_nginx:

```bash
#!/bin/bash
if [ "$(ps -ef | grep "nginx: master process" | grep -v grep)" == "" ]
then service keepalived stop
else echo "nginx is running"
fi
```

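What the script does: when nginx has stopped, it stops keepalived on the same host. This makes the host's nginx and keepalived both unavailable, so the BACKUP node is switched to the MASTER role.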
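The script signals failure by stopping keepalived outright. A common alternative, sketched here as an assumption rather than the author's setup, is a check that only reports nginx's state through its exit status. keepalived then lowers the priority through a negative `weight` larger than the gap between the two nodes' priorities. The `grep -v grep` filter matters in both versions because the grep process's own command line contains the search string. `nginx_master_running` is a hypothetical name.

```bash
#!/usr/bin/env bash
# nginx_master_running: succeeds (exit 0) if the process listing on stdin
# contains an nginx master process. "grep -v grep" drops grep's own entry,
# whose arguments contain the same pattern and would otherwise always match.
nginx_master_running() {
  grep -v grep | grep -q "nginx: master process"
}
```

A check script built on this function would end with `ps -ef | nginx_master_running`, so the script's exit status is the check result.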

Then create the virtual IP on node4's eth1:

```
[root@node4 ~]# ifconfig eth1:1 192.168.100.200 netmask 255.255.255.0 up
```

【Step 4】Start nginx and keepalived on node1 and node4

```
[root@node1 ~]# nginx
[root@node1 ~]# service keepalived start
[root@node4 ~]# nginx
[root@node4 ~]# service keepalived start
[root@node1 ~]# ps aux | grep --color nginx
[root@node1 ~]# service keepalived status
[root@node4 ~]# ps aux | grep --color nginx
[root@node4 ~]# service keepalived status
```
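Keepalived assigns the addresses listed in `virtual_ipaddress` to whichever node is currently MASTER. node1's log in Step 6 shows this as `setting protocol VIPs` and `IP 192.168.100.200 added`. A quick way to see which node holds the VIP is to look for it in `ip -4 addr show` on each node. `holds_vip` below is a hypothetical helper for that.

```bash
#!/usr/bin/env bash
# holds_vip VIP: reads `ip -4 addr show` output on stdin and succeeds if VIP is assigned.
# Matching "inet VIP/" avoids false hits on longer addresses and on inet6 lines.
holds_vip() {
  grep -qF "inet $1/"
}

# Usage on each node:
#   ip -4 addr show | holds_vip 192.168.100.200 && echo "this node holds the VIP"
```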

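【Step 5】Failover test of the MASTER/BACKUP nginx + keepalived pair

Stop nginx on the host whose keepalived instance is in the MASTER role. For reference, node4's keepalived.conf is configured as BACKUP:

```
[root@node4 ~]# grep --color -C 3 BACKUP /etc/keepalived/keepalived.conf
    weight 2    #adjust the priority by 2 according to the script result
}
vrrp_instance vrrptest {    #defines the vrrptest instance
    state BACKUP    #initial state of this node
    interface eth1    #NIC used for VRRP communication (this CentOS host's NIC)
    virtual_router_id 51    #virtual router ID; must be identical within one group for failover to work
    priority 40    #VRRP priority, higher value wins; the BACKUP node's value must not exceed the MASTER's
    advert_int 1    #interval in seconds between VRRP advertisements (liveness checks between the servers)
    authentication {
        auth_type PASS    #authentication type
```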

Simulate an nginx outage on node1 and check whether keepalived automatically switches node4's instance from BACKUP to MASTER:

```
[root@node1 ~]# nginx -s stop    # the check_nginx.sh script run by keepalived then stops the keepalived service
```

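Access the VIP in a browser.

Each refresh shows "from node2", "from node3", and "from node4" in turn. This shows that the node now in the MASTER role still provides the reverse proxy service normally.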
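Instead of refreshing a browser, the VIP can be requested in a loop from the shell and the responding backends counted. The sketch assumes `curl` is installed. `tally_backends` is a hypothetical helper that relies on the `from nodeN` text put into each Tomcat's index.jsp in Part 1.

```bash
#!/usr/bin/env bash
# tally_backends: reads response bodies on stdin and prints "<node> <count>"
# for each backend seen, sorted by node name.
tally_backends() {
  grep -o 'from node[0-9]*' |
    awk '{ count[$2]++ } END { for (n in count) print n, count[n] }' |
    sort
}

# Usage (VIP, port, and URI from this article):
#   for i in $(seq 1 9); do curl -s http://192.168.100.200:8000/howareyou; echo; done | tally_backends
```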

Next, look at what happened on node4, which was configured as BACKUP:

```
[root@node4 ~]# ps aux | grep -v grep | grep --color nginx
root     31787  0.0  0.1  47004  1160 ?  Ss  18:14  0:00 nginx: master process nginx
nobody   31788  0.0  0.2  47388  2152 ?  S   18:14  0:00 nginx: worker process
nobody   31789  0.0  0.2  47388  2124 ?  S   18:14  0:00 nginx: worker process
[root@node4 ~]# service keepalived status
keepalived (pid 30901) is running...
[root@node4 ~]# tail /var/log/messages
Sep 9 17:56:54 node4 Keepalived_vrrp[30903]: Kernel is reporting: interface eth1 UP
Sep 9 17:56:55 node4 NetworkManager[1908]: (eth1): carrier now OFF (device state 8, deferring action for 4 seconds)
Sep 9 17:56:55 node4 kernel: e1000: eth1 NIC Link is Down
Sep 9 17:56:58 node4 Keepalived_vrrp[30903]: Kernel is reporting: interface eth1 DOWN
Sep 9 17:56:58 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Now in FAULT state
Sep 9 17:56:59 node4 kernel: e1000: eth1 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: None
Sep 9 17:56:59 node4 NetworkManager[1908]: (eth1): carrier now ON (device state 8)
Sep 9 17:57:02 node4 Keepalived_vrrp[30903]: Kernel is reporting: interface eth1 UP
Sep 9 17:57:05 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Transition to MASTER STATE
Sep 9 17:57:06 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Entering MASTER STATE
```

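Note the "Entering MASTER STATE" entry in this log. It means node4's VRRP instance, and with it node4's nginx, has switched to the MASTER state.

So after nginx on node1 stops, the keepalived service on that node is stopped as well, and node4's nginx is switched to the MASTER role.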
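How long the BACKUP waits before taking over depends on the advertisement interval and its own priority. Per RFC 3768 (VRRPv2), a BACKUP declares the MASTER dead after Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time, where Skew_Time = (256 - Priority) / 256 seconds. A MASTER that shuts down cleanly instead sends a priority-0 advertisement, after which the BACKUP waits only Skew_Time. The sketch computes both values in whole milliseconds for node4's priority (50 in the role plan, 40 in the Step 5 excerpt). Keepalived's real timing may differ slightly, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# skew_ms PRIORITY: Skew_Time = (256 - Priority) / 256 s, in whole milliseconds (truncated)
skew_ms() {
  echo $(( (256 - $1) * 1000 / 256 ))
}

# master_down_ms ADVERT_INT_SECONDS PRIORITY:
# Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time, in milliseconds
master_down_ms() {
  local advert_s=$1 prio=$2
  echo $(( 3 * advert_s * 1000 + $(skew_ms "$prio") ))
}

# advert_int 1, node4 priority 40:  master_down_ms 1 40  -> 3843 (MASTER went silent)
#                                   skew_ms 40           -> 843  (MASTER sent priority 0)
```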

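【Step 6】Start nginx and keepalived on the original MASTER again and watch the role change

In the previous step, we stopped nginx and its keepalived service on node1.

Now we start them again and check whether node1 returns to the MASTER role.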

```
[root@node1 ~]# ps aux | grep -v grep | grep --color nginx
[root@node1 ~]# service keepalived status
keepalived is stopped
[root@node1 ~]# nginx
[root@node1 ~]# service keepalived start
Starting keepalived: [ OK ]
[root@node1 ~]# date
Mon Sep 9 19:36:14 CST 2019
[root@node1 ~]# ps aux | grep -v grep | grep --color nginx
root     24728  0.0  0.1  47016  1156 ?  Ss  19:35  0:00 nginx: master process nginx
nobody   24729  0.0  0.2  47484  2124 ?  S   19:35  0:00 nginx: worker process
nobody   24730  0.0  0.2  47484  2120 ?  S   19:35  0:00 nginx: worker process
[root@node1 ~]# service keepalived status
keepalived (pid 24750) is running...
```

Check the keepalived entries in node1's log:

```
[root@node1 ~]# tail -n 5 /var/log/messages
Sep 9 19:35:49 node1 Keepalived_vrrp[24753]: VRRP_Instance(vrrptest) Entering MASTER STATE
Sep 9 19:35:49 node1 Keepalived_vrrp[24753]: VRRP_Instance(vrrptest) setting protocol VIPs.
Sep 9 19:35:49 node1 Keepalived_vrrp[24753]: VRRP_Instance(vrrptest) Sending gratuitous ARPs on eth0 for 192.168.100.200
Sep 9 19:35:49 node1 Keepalived_healthcheckers[24752]: Netlink reflector reports IP 192.168.100.200 added
Sep 9 19:35:54 node1 Keepalived_vrrp[24753]: VRRP_Instance(vrrptest) Sending gratuitous ARPs on eth0 for 192.168.100.200
```

Correspondingly, check node4's keepalived log:

```
[root@node4 ~]# date
Mon Sep 9 19:36:12 CST 2019
[root@node4 ~]# tail -n 5 /var/log/messages
Sep 9 17:57:02 node4 Keepalived_vrrp[30903]: Kernel is reporting: interface eth1 UP
Sep 9 17:57:05 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Transition to MASTER STATE
Sep 9 17:57:06 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Entering MASTER STATE
Sep 9 19:35:48 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Received higher prio advert
Sep 9 19:35:48 node4 Keepalived_vrrp[30903]: VRRP_Instance(vrrptest) Entering BACKUP STATE
```

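This shows that once Keepalived is started again, node1's configuration as MASTER takes effect: after node1's nginx is stopped and restarted, node1 regains the MASTER state. node4's log shows why. It received an advertisement with a higher priority than its own ("Received higher prio advert") and returned to BACKUP, because node1's priority (100) is higher than node4's and keepalived preempts by default.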
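The role changes in these logs can be pulled out of syslog by filtering for keepalived's `Entering MASTER STATE` and `Entering BACKUP STATE` messages. The helper below is a hypothetical sketch. It assumes the syslog line format shown in this article (month, day, time, host, then the keepalived message).

```bash
#!/usr/bin/env bash
# vrrp_transitions: reads syslog lines on stdin and prints "<month> <day> <time> <STATE>"
# for every "Entering MASTER STATE" / "Entering BACKUP STATE" message.
# "Transition to MASTER STATE" lines are skipped; awk splits on runs of blanks,
# so "Sep  9" (two spaces) is handled too.
vrrp_transitions() {
  awk '/Entering (MASTER|BACKUP) STATE/ { print $1, $2, $3, $(NF - 1) }'
}

# Usage on either node:
#   vrrp_transitions < /var/log/messages
```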