【Object Detection Series: Part 3】Faster RCNN: a detailed walkthrough of the simple-faster-rcnn-pytorch implementation

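For a detailed explanation of the Faster RCNN paper, see the previous post: 【Object Detection Series: Part 2】Faster RCNN paper reading + analysis + pytorch implementation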

This post does not focus on debugging the code. Instead, it explains what each function does, as a way of understanding Faster RCNN~~~

PyTorch implementations:

https://github.com/jwyang/faster-rcnn.pytorch

https://github.com/ruotianluo/pytorch-faster-rcnn

https://github.com/chenyuntc/simple-faster-rcnn-pytorch (this is the one I ran ☺)

1. Prepare data

Pascal VOC2007

Download the training, validation, test data and VOCdevkit

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCdevkit_08-Jun-2007.tar

Extract all of these tars into one directory named VOCdevkit

tar xvf VOCtrainval_06-Nov-2007.tar

tar xvf VOCtest_06-Nov-2007.tar

tar xvf VOCdevkit_08-Jun-2007.tar

It should have this basic structure:

$VOCdevkit/ # development kit

$VOCdevkit/VOCcode/ # VOC utility code

$VOCdevkit/VOC2007 # image sets, annotations, etc.

# ... and several other directories ...

Modify the voc_data_dir config item in utils/config.py, or pass it to the program as an argument, e.g. --voc-data-dir=/path/to/VOCdevkit/VOC2007/

2. Prepare caffe-pretrained vgg16

If you want to use the caffe-pretrained model as the initial weights, run the command below to get vgg16 weights converted from caffe, which are the same weights the original paper used.

python misc/convert_caffe_pretrain.py

NOTE: the caffe-pretrained model has shown slightly better performance.

NOTE: the caffe model requires images in BGR with values in 0-255, while the torchvision model requires images in RGB with values in 0-1. See data/dataset.py for more details.
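To make the two input conventions concrete, here is a minimal NumPy sketch of both preprocessing paths. The function names are hypothetical, and the Caffe mean values are an assumption (the BGR channel means commonly used with Caffe Faster R-CNN models); check data/dataset.py for the exact constants the repo uses. The torchvision mean/std are the standard ImageNet statistics.

```python
import numpy as np

# Assumption: BGR channel means commonly used by Caffe Faster R-CNN models.
CAFFE_BGR_MEAN = np.array([102.9801, 115.9465, 122.7717]).reshape(3, 1, 1)
# Standard torchvision ImageNet statistics (RGB, 0-1 scale).
TV_MEAN = np.array([0.485, 0.456, 0.406]).reshape(3, 1, 1)
TV_STD = np.array([0.229, 0.224, 0.225]).reshape(3, 1, 1)


def caffe_normalize(img):
    """img: float array (3, H, W), RGB in [0, 1] -> mean-subtracted BGR in 0-255."""
    bgr = img[[2, 1, 0], :, :] * 255.0  # reorder channels RGB -> BGR and rescale
    return (bgr - CAFFE_BGR_MEAN).astype(np.float32)


def torchvision_normalize(img):
    """img: float array (3, H, W), RGB in [0, 1] -> standardized RGB."""
    return ((img - TV_MEAN) / TV_STD).astype(np.float32)
```

Mixing the two up (e.g. feeding a 0-1 RGB image to caffe-pretrained weights) still runs, but the network sees inputs far from what it was trained on.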

3. Begin training

mkdir checkpoints/ # folder for snapshots

python train.py train --env='fasterrcnn-caffe' --plot-every=100 --caffe-pretrain

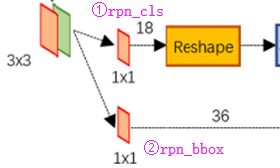

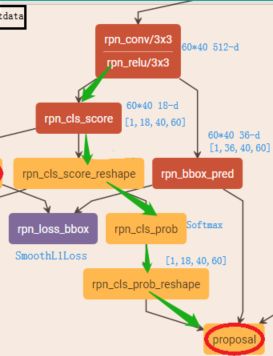

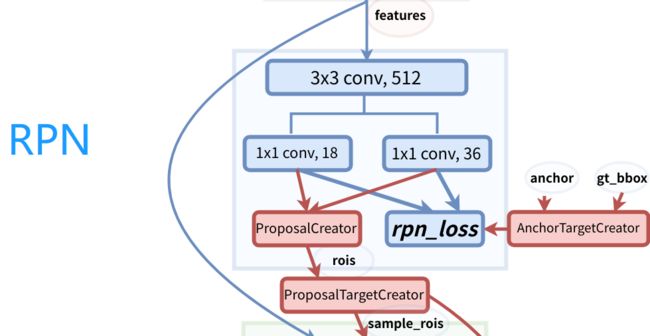

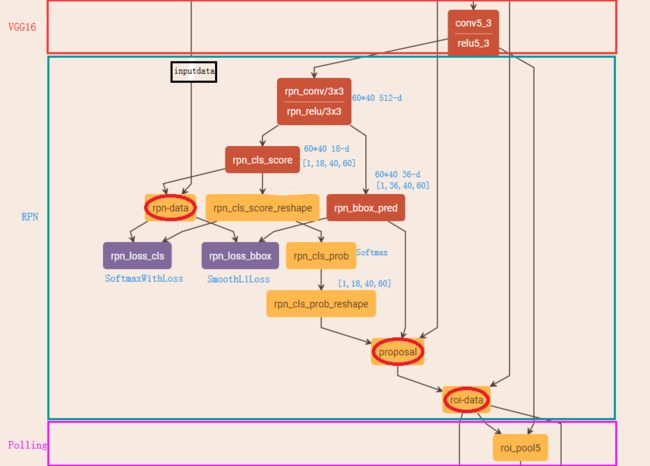

RPN

conv

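- h = ReLU(conv 3×3 (x))

- rpn_locs = conv 1×1 (h), shape (N, 4A, H, W)

- rpn_scores = conv 1×1 (h), shape (N, 2A, H, W)

where A is the number of anchors per feature-map location.

rpn_scores → reshape → rpn_softmax_scores → reshape → rpn_fg_scores (the green line in the figure below)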

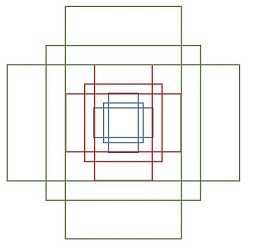
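A minimal NumPy sketch of the reshape → softmax → reshape path that turns rpn_scores into rpn_fg_scores. It is written in NumPy rather than PyTorch so it runs standalone; the function name is hypothetical, and treating channel index 1 of each (background, foreground) pair as the foreground score is an assumption consistent with the rpn_fg_scores name.

```python
import numpy as np


def rpn_fg_scores_from_logits(rpn_scores, n_anchor):
    """rpn_scores: (N, 2A, H, W) raw scores from the 1x1 conv.

    Returns rpn_scores reshaped to (N, H*W*A, 2) and rpn_fg_scores of shape (N, H*W*A).
    """
    n, _, hh, ww = rpn_scores.shape
    # (N, 2A, H, W) -> (N, H, W, 2A): channels last, so each location's anchors are contiguous
    s = rpn_scores.transpose(0, 2, 3, 1)
    # Split the channel axis into (anchor, {bg, fg}) and softmax over the last axis
    s5 = s.reshape(n, hh, ww, n_anchor, 2)
    e = np.exp(s5 - s5.max(axis=4, keepdims=True))  # numerically stable softmax
    rpn_softmax_scores = e / e.sum(axis=4, keepdims=True)
    # Assumption: index 1 is the foreground ("object") probability
    rpn_fg_scores = rpn_softmax_scores[..., 1].reshape(n, -1)
    return s.reshape(n, -1, 2), rpn_fg_scores
```

With two classes per anchor, the softmax foreground probability equals sigmoid(fg - bg), so only the difference between the two channels matters.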

Anchor

anchor = _enumerate_shifted_anchor()

Enumerate all shifted anchors

n_anchor: the number of anchors per feature-map location (9 with the default settings)
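The idea behind _enumerate_shifted_anchor can be sketched in NumPy as follows: every feature-map cell is mapped back to a pixel offset (multiples of feat_stride), and the A base anchors are added to each offset by broadcasting. The function name and argument order here are assumptions for illustration, not necessarily the repo's exact signature.

```python
import numpy as np


def enumerate_shifted_anchor(anchor_base, feat_stride, height, width):
    """Tile anchor_base (A, 4) over a height x width feature map.

    Boxes are (ymin, xmin, ymax, xmax) in input-image pixels. Returns (K*A, 4)
    with K = height * width, ordered location-major (the A anchors of one
    location are consecutive).
    """
    # Pixel offset of every feature-map cell in the input image
    shift_y = np.arange(0, height * feat_stride, feat_stride)
    shift_x = np.arange(0, width * feat_stride, feat_stride)
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)
    # One (dy, dx, dy, dx) shift per location, shape (K, 4)
    shift = np.stack((shift_y.ravel(), shift_x.ravel(),
                      shift_y.ravel(), shift_x.ravel()), axis=1)
    A = anchor_base.shape[0]
    K = shift.shape[0]
    # Broadcast (1, A, 4) + (K, 1, 4) -> (K, A, 4)
    anchor = anchor_base.reshape((1, A, 4)) + shift.reshape((K, 1, 4))
    return anchor.reshape((K * A, 4)).astype(np.float32)
```

With a 2×3 feature map and 2 base anchors this yields 12 anchors, and n_anchor can be recovered as anchor.shape[0] // (height * width).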

```python
import numpy as np


def generate_anchor_base(base_size=16, ratios=[0.5, 1, 2],
                         anchor_scales=[8, 16, 32]):
    """Generate anchor base windows by enumerating aspect ratios and scales.

    Generate anchors that are scaled and modified to the given aspect ratios.
    Area of a scaled anchor is preserved when modifying to the given aspect
    ratio.

    :obj:`R = len(ratios) * len(anchor_scales)` anchors are generated by this
    function.
    The :obj:`i * len(anchor_scales) + j` th anchor corresponds to an anchor
    generated by :obj:`ratios[i]` and :obj:`anchor_scales[j]`.

    For example, if the scale is :math:`8` and the ratio is :math:`0.25`,
    the width and the height of the base window will be stretched by :math:`8`.
    For modifying the anchor to the given aspect ratio,
    the height is halved and the width is doubled.

    Args:
        base_size (number): The width and the height of the reference window.
        ratios (list of floats): Ratios of height to width of the anchors
            (the code below sets h proportional to sqrt(ratio)).
        anchor_scales (list of numbers): These determine the areas of the
            anchors. Each area is the square of an element in
            :obj:`anchor_scales` times the original area of the reference
            window.

    Returns:
        ~numpy.ndarray:
        An array of shape :math:`(R, 4)`.
        Each element is a set of coordinates of a bounding box.
        The second axis corresponds to
        :math:`(y_{min}, x_{min}, y_{max}, x_{max})` of a bounding box.

    """
    # Center of the reference window
    py = base_size / 2.
    px = base_size / 2.

    # ratios=[0.5, 1, 2]
    # anchor_scales=[8, 16, 32]
    anchor_base = np.zeros((len(ratios) * len(anchor_scales), 4),
                           dtype=np.float32)
    # The original uses six.moves.range for Python 2/3 compatibility;
    # on Python 3 the built-in range is equivalent.
    for i in range(len(ratios)):
        for j in range(len(anchor_scales)):
            h = base_size * anchor_scales[j] * np.sqrt(ratios[i])
            w = base_size * anchor_scales[j] * np.sqrt(1. / ratios[i])

            index = i * len(anchor_scales) + j
            anchor_base[index, 0] = py - h / 2.
            anchor_base[index, 1] = px - w / 2.
            anchor_base[index, 2] = py + h / 2.
            anchor_base[index, 3] = px + w / 2.
    return anchor_base
```

Result: printing anchor_base after each iteration (index=0 through index=8) shows one row being filled per iteration, in index order, while the remaining rows stay zero. The final array is:

```text
[[ -37.254833  -82.50967    53.254833   98.50967 ]
 [ -82.50967  -173.01933    98.50967   189.01933 ]
 [-173.01933  -354.03867   189.01933   370.03867 ]
 [ -56.        -56.         72.         72.      ]
 [-120.       -120.        136.        136.      ]
 [-248.       -248.        264.        264.      ]
 [ -82.50967   -37.254833   98.50967    53.254833]
 [-173.01933   -82.50967   189.01933    98.50967 ]
 [-354.03867  -173.01933   370.03867   189.01933 ]]
```
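As a sanity check on the docstring's claims (area preserved across aspect ratios, h/w equal to the ratio), here is a vectorized NumPy rewrite of the loop. It is a hypothetical equivalent written for this post, not code from the repo; it produces the same (ymin, xmin, ymax, xmax) rows in the same order.

```python
import numpy as np


def generate_anchor_base_vec(base_size=16, ratios=(0.5, 1, 2), anchor_scales=(8, 16, 32)):
    """Vectorized sketch; same ordering as the loop (index = i * len(anchor_scales) + j)."""
    r = np.asarray(ratios, dtype=np.float64)[:, None]         # (n_ratio, 1)
    s = np.asarray(anchor_scales, dtype=np.float64)[None, :]  # (1, n_scale)
    # Row-major ravel of the (n_ratio, n_scale) grid gives index i * n_scale + j
    h = (base_size * s * np.sqrt(r)).ravel()
    w = (base_size * s * np.sqrt(1.0 / r)).ravel()
    c = base_size / 2.0  # center of the reference window
    return np.stack([c - h / 2, c - w / 2, c + h / 2, c + w / 2], axis=1).astype(np.float32)
```

Computing heights and widths from the rows confirms, for example, that rows 0 to 2 all have h/w = 0.5 while their areas grow as (16 × 8)², (16 × 16)², (16 × 32)².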

bbox_tools

- R: the number of bounding boxes.

bbox2loc

Encoder: given a bounding box, compute the offsets and scales that map it onto a target bounding box.

- Given a bounding box with center $(y, x) = (p_y, p_x)$ and size $(p_h, p_w)$,
- and the target bounding box with center $(g_y, g_x)$ and size $(g_h, g_w)$,
- the offsets and scales $t_y, t_x, t_h, t_w$ can be computed by the following formulas:

$$t_y = \frac{g_y - p_y}{p_h}, \qquad t_x = \frac{g_x - p_x}{p_w}$$

$$t_h = \log\left(\frac{g_h}{p_h}\right), \qquad t_w = \log\left(\frac{g_w}{p_w}\right)$$

The output has the same type as the input.

The same encoding formulas are also used in R-CNN.
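A minimal NumPy sketch of the encoder, following the formulas above term by term. Boxes are assumed to be (ymin, xmin, ymax, xmax), as in generate_anchor_base; the eps clamp on the source height and width is a safeguard against division by zero included in this sketch.

```python
import numpy as np


def bbox2loc(src_bbox, dst_bbox):
    """Encode dst_bbox relative to src_bbox; both (R, 4) as (ymin, xmin, ymax, xmax).

    Returns loc of shape (R, 4) holding (t_y, t_x, t_h, t_w).
    """
    # p_h, p_w, p_y, p_x of the source boxes
    p_h = src_bbox[:, 2] - src_bbox[:, 0]
    p_w = src_bbox[:, 3] - src_bbox[:, 1]
    p_y = src_bbox[:, 0] + 0.5 * p_h
    p_x = src_bbox[:, 1] + 0.5 * p_w
    # g_h, g_w, g_y, g_x of the target boxes
    g_h = dst_bbox[:, 2] - dst_bbox[:, 0]
    g_w = dst_bbox[:, 3] - dst_bbox[:, 1]
    g_y = dst_bbox[:, 0] + 0.5 * g_h
    g_x = dst_bbox[:, 1] + 0.5 * g_w
    # Guard against division by zero for degenerate source boxes
    eps = np.finfo(np.float32).eps
    p_h = np.maximum(p_h, eps)
    p_w = np.maximum(p_w, eps)
    t_y = (g_y - p_y) / p_h
    t_x = (g_x - p_x) / p_w
    t_h = np.log(g_h / p_h)
    t_w = np.log(g_w / p_w)
    return np.stack((t_y, t_x, t_h, t_w), axis=1)
```

For example, a 10×10 box at the origin and a 20×20 target centered at (15, 15) encode to (1, 1, log 2, log 2).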

loc2bbox

Decoder: given the offsets and scales computed by bbox2loc, compute the bounding box coordinates. That is, this function decodes bounding boxes from bounding box offsets and scales.

- Given scales and offsets $t_y, t_x, t_h, t_w$
- and a bounding box whose center is $(y, x) = (p_y, p_x)$ and size $(p_h, p_w)$,
- the decoded bounding box's center $(\hat{g}_y, \hat{g}_x)$ and size $(\hat{g}_h, \hat{g}_w)$ are calculated by the following formulas:

$$\hat{g}_y = p_h t_y + p_y, \qquad \hat{g}_x = p_w t_x + p_x$$

$$\hat{g}_h = p_h \exp(t_h), \qquad \hat{g}_w = p_w \exp(t_w)$$
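The decoder is the exact inverse of the encoder. A NumPy sketch under the same (ymin, xmin, ymax, xmax) assumption:

```python
import numpy as np


def loc2bbox(src_bbox, loc):
    """Decode loc (R, 4) = (t_y, t_x, t_h, t_w) against src_bbox (R, 4) = (ymin, xmin, ymax, xmax)."""
    if src_bbox.shape[0] == 0:
        return np.zeros((0, 4), dtype=loc.dtype)
    p_h = src_bbox[:, 2] - src_bbox[:, 0]
    p_w = src_bbox[:, 3] - src_bbox[:, 1]
    p_y = src_bbox[:, 0] + 0.5 * p_h
    p_x = src_bbox[:, 1] + 0.5 * p_w
    t_y, t_x, t_h, t_w = loc[:, 0], loc[:, 1], loc[:, 2], loc[:, 3]
    # g_y = p_h * t_y + p_y, g_x = p_w * t_x + p_x, g_h = p_h * exp(t_h), g_w = p_w * exp(t_w)
    g_y = p_h * t_y + p_y
    g_x = p_w * t_x + p_x
    g_h = p_h * np.exp(t_h)
    g_w = p_w * np.exp(t_w)
    # Convert center/size back to corner coordinates
    return np.stack((g_y - 0.5 * g_h, g_x - 0.5 * g_w,
                     g_y + 0.5 * g_h, g_x + 0.5 * g_w), axis=1)
```

Decoding the output of bbox2loc against the same source boxes returns the target boxes, which is a quick way to check both functions together.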

bbox_iou

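Computes the IoU (intersection over union) between bounding boxes.

- Args:
  - bbox_a (array): an array of shape (R, 4).
  - bbox_b (array): an array similar to bbox_a, of shape (K, 4).
- Returns:
  - an array of shape (R, K) holding the IoU of every pair formed by one box from bbox_a and one from bbox_b.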
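bbox_iou can be computed for all R × K pairs at once with NumPy broadcasting. A sketch, again assuming (ymin, xmin, ymax, xmax) boxes:

```python
import numpy as np


def bbox_iou(bbox_a, bbox_b):
    """IoU between every box in bbox_a (R, 4) and bbox_b (K, 4); returns (R, K)."""
    if bbox_a.shape[1] != 4 or bbox_b.shape[1] != 4:
        raise IndexError("boxes must have shape (N, 4)")
    # Top-left and bottom-right corners of each pairwise intersection: (R, K, 2)
    tl = np.maximum(bbox_a[:, None, :2], bbox_b[:, :2])
    br = np.minimum(bbox_a[:, None, 2:], bbox_b[:, 2:])
    # Intersection area, forced to zero where the boxes do not overlap
    area_i = np.prod(br - tl, axis=2) * (tl < br).all(axis=2)
    area_a = np.prod(bbox_a[:, 2:] - bbox_a[:, :2], axis=1)
    area_b = np.prod(bbox_b[:, 2:] - bbox_b[:, :2], axis=1)
    return area_i / (area_a[:, None] + area_b - area_i)
```

The (tl < br) mask matters: for two disjoint boxes, br - tl is negative on both axes and its product would otherwise be a positive "intersection".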

generate_anchor_base

Generate anchor base windows by enumerating aspect ratios and scales. Defaults:

- base_size=16, ratios=[0.5, 1, 2], anchor_scales=[8, 16, 32]

Proposal

- proposal_layer = ProposalCreator()

roi = proposal_layer(rpn_locs, rpn_fg_scores, anchor)

ProposalCreator

This class takes parameters that control the number of bounding boxes passed to NMS and the number kept after NMS.

Returns: an array of coordinates of proposal boxes.

Process:

- Convert anchors into proposals via bbox transformations: roi = loc2bbox(anchor, loc)

- Clip predicted boxes to the image.

- Remove predicted boxes with either height or width < threshold.

- Sort all (proposal, score) pairs by score from highest to lowest, and take the top pre_nms_topN (e.g. 6000).

- Apply NMS (e.g. threshold = 0.7) and take the top after_nms_topN (e.g. 300): keep = non_maximum_suppression
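The steps above can be sketched in plain NumPy. The greedy nms below is a stand-in for the repo's non_maximum_suppression, and create_proposals with its defaults (min_size=16 is an assumption; the others are the "e.g." values above) is hypothetical. The sketch starts after the first step, i.e. it takes boxes already decoded with loc2bbox(anchor, loc).

```python
import numpy as np


def nms(boxes, thresh):
    """Greedy NMS over boxes (N, 4) already sorted by descending score.

    Returns the indices of the boxes that are kept.
    """
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.arange(len(boxes))
    keep = []
    while order.size > 0:
        i = order[0]  # highest-scoring remaining box
        keep.append(i)
        rest = order[1:]
        tl = np.maximum(boxes[i, :2], boxes[rest, :2])
        br = np.minimum(boxes[i, 2:], boxes[rest, 2:])
        inter = np.prod(np.clip(br - tl, 0, None), axis=1)
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= thresh]  # drop boxes that overlap box i too much
    return np.array(keep, dtype=np.int64)


def create_proposals(roi, score, img_size, min_size=16,
                     n_pre_nms=6000, n_post_nms=300, nms_thresh=0.7):
    """roi: (N, 4) boxes already decoded with loc2bbox(anchor, loc);
    score: (N,) foreground scores; img_size: (H, W)."""
    roi = roi.copy()
    # Clip predicted boxes to the image (y columns to H, x columns to W)
    roi[:, 0::2] = np.clip(roi[:, 0::2], 0, img_size[0])
    roi[:, 1::2] = np.clip(roi[:, 1::2], 0, img_size[1])
    # Remove boxes whose height or width is below min_size
    hs = roi[:, 2] - roi[:, 0]
    ws = roi[:, 3] - roi[:, 1]
    keep = np.where((hs >= min_size) & (ws >= min_size))[0]
    roi, score = roi[keep], score[keep]
    # Sort by score (highest first) and keep the top n_pre_nms
    order = score.argsort()[::-1][:n_pre_nms]
    roi = roi[order]
    # NMS, then keep the top n_post_nms survivors
    keep = nms(roi, nms_thresh)[:n_post_nms]
    return roi[keep]
```

Because nms receives boxes already sorted by score, "keep the highest-scoring box, drop its heavy overlaps, repeat" needs no extra sorting inside the loop.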

ROI Pooling

VGG16RoIHead

```python
head = VGG16RoIHead(
    n_class=n_fg_class + 1,
    roi_size=7,
    spatial_scale=(1. / self.feat_stride),
    classifier=classifier
)
```

- pool = self.roi(x, indices_and_rois)

  where self.roi = RoIPooling2D(self.roi_size, self.roi_size, self.spatial_scale) and roi_size=7.
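To show what RoI max pooling does to one feature map, here is a naive NumPy sketch. It is not the repo's RoIPooling2D: it handles a single image (no batch-index column in the RoIs), assumes (ymin, xmin, ymax, xmax) RoIs in input-image pixels, and uses Caffe-style quantization (round the projected corners, then split the RoI into roi_size × roi_size bins with floor/ceil boundaries) as an assumption.

```python
import numpy as np


def roi_pool(feature, rois, roi_size=7, spatial_scale=1. / 16):
    """Naive RoI max pooling for a single image.

    feature: (C, H, W) feature map; rois: (R, 4) as (ymin, xmin, ymax, xmax)
    in input-image pixels. Returns an array of shape (R, C, roi_size, roi_size).
    """
    C, H, W = feature.shape
    out = np.zeros((len(rois), C, roi_size, roi_size), dtype=feature.dtype)
    for r, box in enumerate(rois):
        # Project the RoI onto the feature map (round half up)
        y0, x0, y1, x1 = (int(np.floor(v * spatial_scale + 0.5)) for v in box)
        roi_h = max(y1 - y0 + 1, 1)
        roi_w = max(x1 - x0 + 1, 1)
        bin_h = roi_h / roi_size
        bin_w = roi_w / roi_size
        for i in range(roi_size):
            # Row range of bin i, clipped to the feature map
            hs = min(max(y0 + int(np.floor(i * bin_h)), 0), H)
            he = min(max(y0 + int(np.ceil((i + 1) * bin_h)), 0), H)
            for j in range(roi_size):
                ws = min(max(x0 + int(np.floor(j * bin_w)), 0), W)
                we = min(max(x0 + int(np.ceil((j + 1) * bin_w)), 0), W)
                if he > hs and we > ws:  # empty bins stay 0
                    out[r, :, i, j] = feature[:, hs:he, ws:we].max(axis=(1, 2))
    return out
```

Whatever the RoI's size, the output is always roi_size × roi_size per channel, which is what lets the fully connected classifier that follows accept RoIs of any shape.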

FC

VGG16RoIHead

- fc7 = self.classifier(pool)

  Two Linear layers ported from VGG16: extractor, classifier = decom_vgg16()

- roi_cls_locs = self.cls_loc(fc7), where cls_loc = nn.Linear(4096, n_class * 4)

- roi_scores = self.score(fc7), where score = nn.Linear(4096, n_class)
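The output shapes of the two heads can be checked with a NumPy sketch of the final Linear layers. The function names are hypothetical, the weights use nn.Linear's (out_features, in_features) layout, and picking each RoI's argmax class is a simplification for illustration, not necessarily how the repo consumes these outputs at prediction time.

```python
import numpy as np


def roi_head_outputs(fc7, cls_loc_w, cls_loc_b, score_w, score_b):
    """Apply the two final Linear layers in NumPy.

    Weights follow the nn.Linear layout (out_features, in_features), so y = x @ W.T + b.
    fc7: (R, 4096) -> roi_cls_locs (R, n_class * 4), roi_scores (R, n_class).
    """
    roi_cls_locs = fc7 @ cls_loc_w.T + cls_loc_b
    roi_scores = fc7 @ score_w.T + score_b
    return roi_cls_locs, roi_scores


def pick_class_locs(roi_cls_locs, roi_scores):
    """For each RoI, take the 4 loc values of its highest-scoring class."""
    n_roi, n_class = roi_scores.shape
    locs = roi_cls_locs.reshape(n_roi, n_class, 4)  # one (t_y, t_x, t_h, t_w) per class
    labels = roi_scores.argmax(axis=1)
    return labels, locs[np.arange(n_roi), labels]
```

With n_class = 21 (the 20 VOC classes plus background), cls_loc outputs 84 numbers per RoI: one set of 4 offsets per class, decoded with the same loc2bbox formulas as the RPN.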