Notes on a Java 8 LongAdder Usage Problem

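I recently took on a data-copying job. Because the data volume was large, I tried a multithreaded approach and ran into a problem with LongAdder. These are my notes.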

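1. First version: single-threaded, with nested for loops inserting into the database, so that the data would come out correct. The volume is huge. A little over 1 million source records are split into records across several tables, giving more than 10 million rows in total. Inserting one row at a time was slow, and the whole run took nearly 10 hours.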

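2. Second version: still single-threaded. Nested for loops organize the data with the smallest category as the unit of work, and each unit is batch-inserted into the database. This cut the run time by nearly 50%.

At this stage, using LongAdder to maintain the id increments produced no duplicate ids. However, when the endpoint was called several times, that is, once multiple threads were involved, duplicate ids appeared. The details are as follows: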

(1) The primary-key counters for each table are initialized, and the id getters are defined, as follows:

private static volatile LongAdder longAdderBig = null;

private static volatile LongAdder longAdderSmall = null;

private static volatile LongAdder longAdderOption = null;

private static volatile LongAdder longAdderAttachment = null;

private synchronized void init() {

if (longAdderBig == null) {

longAdderBig = new LongAdder();

longAdderBig.add(Optional.ofNullable(examQuestionBigMapper.findMaxId()).orElse(0L));

}

if (longAdderSmall == null) {

longAdderSmall = new LongAdder();

longAdderSmall.add(Optional.ofNullable(examQuestionSmallMapper.findMaxId()).orElse(0L));

}

if (longAdderOption == null) {

longAdderOption = new LongAdder();

longAdderOption.add(Optional.ofNullable(examQuestionOptionMapper.findMaxId()).orElse(0L));

}

if (longAdderAttachment == null) {

longAdderAttachment = new LongAdder();

longAdderAttachment.add(Optional.ofNullable(examQuestionAttachmentMapper.findMaxId()).orElse(0L));

}

if (null == examQuestionbankService) {

examQuestionbankService = applicationContext.getBean(ExamQuestionbankServiceImpl.class);

System.out.println("初始化");

}

}

private Long getBigId() {

longAdderBig.increment();

return longAdderBig.longValue();

}

private Long getSmallId() {

longAdderSmall.increment();

return longAdderSmall.longValue();

}

private Long getOptionId() {

longAdderOption.increment();

return longAdderOption.longValue();

}

private Long getAttachmentId() {

longAdderAttachment.increment();

return longAdderAttachment.longValue();

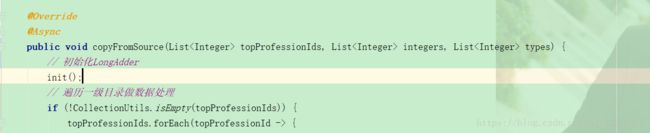

}(2)在方法中初始化,该方法为异步方法,即接口多次请求,会发生多线程

(3) With this code, duplicate-id errors appear early on, as soon as the endpoint starts receiving repeated requests.

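(4) LongAdder is widely described as a more advanced and more efficient alternative to AtomicLong, and I wasn't sure I was using it correctly. Looking at LongAdder's longValue() method, the implementation is as follows. It simply returns the result of sum().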

/**
 * Equivalent to {@link #sum}.
 *
 * @return the sum
 */
public long longValue() {
    return sum();
}

(5) Next, look at sum():

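/**
 * Returns the current sum. The returned value is NOT an
 * atomic snapshot; invocation in the absence of concurrent
 * updates returns an accurate result, but concurrent updates that
 * occur while the sum is being calculated might not be
 * incorporated.
 *
 * @return the sum
 */
public long sum() {
    Cell[] as = cells; Cell a;
    long sum = base;
    if (as != null) {
        for (int i = 0; i < as.length; ++i) {
            if ((a = as[i]) != null)
                sum += a.value;
        }
    }
    return sum;
}

Note the method's Javadoc. The returned value is not an atomic snapshot. Without concurrent updates the result is accurate, but concurrent updates that happen while the sum is being calculated might not be included. What does that mean in practice? I didn't fully understand it, but I noticed the field base. When summing, base is assigned to sum first. If base changes during the calculation, that is, while the for loop is adding up the cells, can that change the returned result? My current guess is that the problem lies here. I have not verified it, so if anyone has, I would appreciate any pointers.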
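To test the guess above, here is a small self-contained sketch. The class and method names (IdRaceDemo, countDuplicates) are hypothetical and not from the original project. It runs the same two-step pattern as getBigId() on several threads and counts how many returned values were duplicates. That pattern is increment() followed by a separate read of the total. The sketch then does the same with AtomicLong.incrementAndGet().

The underlying point is that LongAdder has no atomic "increment and return the new value" operation. Between one thread's increment() and its sum(), other threads can increment as well, so two threads can read the same total. The non-atomic snapshot described in the Javadoc comes on top of that. Even a perfectly exact sum() could not turn two separate calls into one atomic step. The LongAdder Javadoc positions the class for sums used for purposes such as collecting statistics, not for handing out unique ids.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.LongAdder;
import java.util.function.LongSupplier;

class IdRaceDemo {

    /** Calls nextId (threads * perThread) times from `threads` threads at once; returns how many results were duplicates. */
    static int countDuplicates(LongSupplier nextId, int threads, int perThread) {
        Set<Long> seen = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                try {
                    start.await(); // release all threads together to maximise contention
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                for (int i = 0; i < perThread; i++) {
                    seen.add(nextId.getAsLong());
                }
            });
        }
        start.countDown();
        pool.shutdown();
        try {
            if (!pool.awaitTermination(1, TimeUnit.MINUTES)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        // Every call produced one value; any shortfall in the set size is a duplicate.
        return threads * perThread - seen.size();
    }

    public static void main(String[] args) {
        LongAdder adder = new LongAdder();
        // Same two-step pattern as getBigId(): increment, then read the total separately.
        LongSupplier adderId = () -> {
            adder.increment();
            return adder.sum();
        };
        AtomicLong atomic = new AtomicLong();
        // A single atomic read-modify-write: every call gets a distinct value.
        LongSupplier atomicId = atomic::incrementAndGet;

        System.out.println("LongAdder duplicates:  " + countDuplicates(adderId, 8, 100_000));
        System.out.println("AtomicLong duplicates: " + countDuplicates(atomicId, 8, 100_000));
    }
}
```

On a multi-core machine, the LongAdder line typically reports a nonzero count that varies from run to run. The AtomicLong line always reports 0. Either counter only guarantees uniqueness inside one JVM. If another application instance or process inserts into the same tables after findMaxId() has been read, collisions are still possible.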

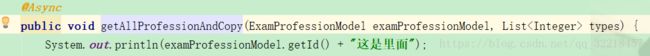

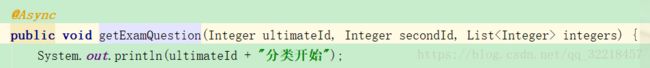

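3. Third version: multithreaded, with batch inserts. I called the class through its proxy and, inside its methods, made the per-category processing asynchronous so the data is handled by multiple threads. The proxy is simply the service bean that init() fetches at the end. I also made several of the other methods asynchronous (see the screenshots).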
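Going through the proxy matters, assuming the asynchronous methods use Spring's @Async (the AsyncConfigurer in section 6 suggests so). Spring applies @Async through a proxy around the bean. A call made from inside the same object, such as this.copyCategory(...), never passes through that proxy, so it runs synchronously on the current thread.

The sketch below illustrates that effect with a plain JDK dynamic proxy standing in for Spring's proxy. Spring may use a CGLIB subclass proxy instead, but self-invocation bypasses it in the same way. CopyService, copyAll and copyCategory are hypothetical names.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

class SelfInvocationDemo {

    // Hypothetical service interface standing in for the copy service.
    interface CopyService {
        void copyAll(CopyService self);
        void copyCategory(String category);
    }

    static class CopyServiceImpl implements CopyService {
        @Override
        public void copyAll(CopyService self) {
            copyCategory("direct");        // this.copyCategory(...): runs on the target, the proxy never sees it
            self.copyCategory("viaProxy"); // goes through the proxy, like calling the bean fetched from applicationContext
        }

        @Override
        public void copyCategory(String category) {
            // the per-category copy work would go here
        }
    }

    /** Wraps target in a JDK dynamic proxy that records every copyCategory call it intercepts. */
    static CopyService wrap(CopyService target, List<String> intercepted) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (method.getName().equals("copyCategory")) {
                // This is the point where an async interceptor would hand the call to an executor.
                intercepted.add((String) args[0]);
            }
            return method.invoke(target, args);
        };
        return (CopyService) Proxy.newProxyInstance(
                CopyService.class.getClassLoader(), new Class<?>[] {CopyService.class}, handler);
    }

    public static void main(String[] args) {
        List<String> intercepted = new ArrayList<>();
        CopyService service = wrap(new CopyServiceImpl(), intercepted);
        service.copyAll(service);
        System.out.println(intercepted); // [viaProxy]
    }
}
```

Only the call made through the proxy reference is intercepted. An internal call would therefore run synchronously even if the method were annotated for async execution.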

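Once the program was running, the errors multiplied!

The first problem was database connection timeouts, because the for loops produce a large number of data categories. Checking the database connection counts: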

mysql> show status like 'Threads%';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_cached    | 0     |
| Threads_connected | 23    |
| Threads_created   | 458   |
| Threads_running   | 6     |
+-------------------+-------+

mysql> show variables like '%max_connections%';
+-----------------+-------+
| Variable_name   | Value |
+-----------------+-------+
| max_connections | 151   |
+-----------------+-------+
1 row in set

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 37022 |
+---------------+-------+
1 row in set

The program reported a connection error:

### Error querying database. Cause: Failed to obtain JDBC Connection; nested exception is java.sql.SQLTransientConnectionException: HikariPool-1 - Connection is not available, request timed out after 30002ms.
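HikariCP raises "Connection is not available, request timed out after 30002ms" when a thread has waited for the pool's connectionTimeout (30 seconds by default) and no pooled connection became free. The limit being hit is the application-side pool, not MySQL's max_connections. The pool's maximum-pool-size was 50 at this point, according to section 5, and max_connections is 151.

The toy model below shows the mechanism. It uses a hypothetical ToyPool class built on a Semaphore, not Hikari's real implementation. Once every slot is held, the next borrower waits out its timeout and fails.

One common way to reach this state with nested asynchronous tasks is for an outer task to keep its connection, for example inside a transaction, while it waits for inner tasks that each need another connection. Whether that is what happened here is not established by the logs.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

/** Toy model of a fixed-size connection pool; not HikariCP's real implementation. */
class ToyPool {
    private final Semaphore slots;

    ToyPool(int maxPoolSize) {
        this.slots = new Semaphore(maxPoolSize, true); // fair: waiters are served in arrival order
    }

    /** Returns true if a "connection" was obtained within timeoutMs, false if the request timed out. */
    boolean borrow(long timeoutMs) {
        try {
            return slots.tryAcquire(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    /** Returns a previously borrowed "connection" to the pool. */
    void giveBack() {
        slots.release();
    }

    public static void main(String[] args) {
        ToyPool pool = new ToyPool(2);
        // Two tasks borrow connections and keep them.
        System.out.println(pool.borrow(100)); // true
        System.out.println(pool.borrow(100)); // true
        // A third borrower waits the full 100 ms, finds no free slot and gives up.
        System.out.println(pool.borrow(100)); // false
        // Once one connection is returned, borrowing succeeds again.
        pool.giveBack();
        System.out.println(pool.borrow(100)); // true
    }
}
```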

And here is the duplicate-id error:

### Error updating database. Cause: com.mysql.jdbc.exceptions.jdbc4.MySQLIntegrityConstraintViolationException: Duplicate entry '56562' for key 'PRIMARY'

### The error may involve defaultParameterMap

### The error occurred while setting parameters

There were a great many duplicate-id errors. So is LongAdder simply unsuitable for multithreaded use?

4. I went back to the idea of replacing LongAdder with AtomicLong. Here is the code after the swap:

import java.util.Optional;
import java.util.concurrent.atomic.AtomicLong;

// Field names are kept from the LongAdder version; they now hold AtomicLong counters.
private static volatile AtomicLong longAdderBig = null;
private static volatile AtomicLong longAdderSmall = null;
private static volatile AtomicLong longAdderOption = null;
private static volatile AtomicLong longAdderAttachment = null;

private synchronized void init() {
    if (longAdderBig == null) {
        longAdderBig = new AtomicLong(Optional.ofNullable(examQuestionBigMapper.findMaxId()).orElse(0L));
    }
    if (longAdderSmall == null) {
        longAdderSmall = new AtomicLong(Optional.ofNullable(examQuestionSmallMapper.findMaxId()).orElse(0L));
    }
    if (longAdderOption == null) {
        longAdderOption = new AtomicLong(Optional.ofNullable(examQuestionOptionMapper.findMaxId()).orElse(0L));
    }
    if (longAdderAttachment == null) {
        longAdderAttachment = new AtomicLong(Optional.ofNullable(examQuestionAttachmentMapper.findMaxId()).orElse(0L));
    }
    if (null == examQuestionbankService) {
        examQuestionbankService = applicationContext.getBean(ExamQuestionbankServiceImpl.class);
        System.out.println("Initialized");
    }
}

// incrementAndGet() increments and returns the new value in a single atomic step.
private Long getBigId() {
    return longAdderBig.incrementAndGet();
}

private Long getSmallId() {
    return longAdderSmall.incrementAndGet();
}

private Long getOptionId() {
    return longAdderOption.incrementAndGet();
}

private Long getAttachmentId() {
    return longAdderAttachment.incrementAndGet();
}

After calling the endpoint, errors broke out during execution, with JDBC connection timeouts and similar errors occurring frequently.

The error:

org.mybatis.spring.MyBatisSystemException: nested exception is org.apache.ibatis.exceptions.PersistenceException:

### Error querying database. Cause: org.springframework.jdbc.CannotGetJdbcConnectionException: Failed to obtain JDBC Connection; nested exception is java.sql.SQLTransientConnectionException: HikariPool-1 - Connection is not available, request timed out after 30006ms.

The last packet successfully received from the server was 0 milliseconds ago. The last packet sent successfully to the server was 94,951 milliseconds ago.

Database status:

mysql> show status like 'Threads%';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_cached    | 0     |
| Threads_connected | 23    |
| Threads_created   | 463   |
| Threads_running   | 8     |
+-------------------+-------+
4 rows in set

mysql> show variables like '%max_connections%';
+-----------------+-------+
| Variable_name   | Value |
+-----------------+-------+
| max_connections | 151   |
+-----------------+-------+
1 row in set

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 37055 |
+---------------+-------+
1 row in set

5. Next, I removed the async calls on the smallest categories, keeping async only at the second-level categories, and checked the status of the remote and local MySQL servers:

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 37095 |
+---------------+-------+
1 row in set

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 37095 |
+---------------+-------+
1 row in set

mysql> show status like 'Threads_connected';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_connected | 13    |
+-------------------+-------+
1 row in set

mysql> show status like 'Threads%';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_cached    | 8     |
| Threads_connected | 13    |
| Threads_created   | 465   |
| Threads_running   | 1     |
+-------------------+-------+
4 rows in set

mysql> show variables like '%max_connections%';
+-----------------+-------+
| Variable_name   | Value |
+-----------------+-------+
| max_connections | 151   |
+-----------------+-------+
1 row in set

mysql> show status like 'Threads%';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_cached    | 0     |
| Threads_connected | 17    |
| Threads_created   | 26    |
| Threads_running   | 1     |
+-------------------+-------+
4 rows in set

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 66    |
+---------------+-------+
1 row in set

The remote connection count stayed high, and connection timeouts showed up early in the run (the run may not have been long enough to expose the problem fully). I decided to restart.

After restarting the local MySQL:

mysql> show status like 'Threads%';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_cached    | 0     |
| Threads_connected | 1     |
| Threads_created   | 1     |
| Threads_running   | 1     |
+-------------------+-------+
4 rows in set

mysql> show variables like '%max_connections%';
+-----------------+-------+
| Variable_name   | Value |
+-----------------+-------+
| max_connections | 151   |
+-----------------+-------+
1 row in set

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 2     |
+---------------+-------+
1 row in set

After restarting the remote MySQL:

mysql> show status like 'Threads%';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_cached    | 0     |
| Threads_connected | 1     |
| Threads_created   | 1     |
| Threads_running   | 1     |
+-------------------+-------+
4 rows in set

mysql> show status like 'Threads_connected';
+-------------------+-------+
| Variable_name     | Value |
+-------------------+-------+
| Threads_connected | 1     |
+-------------------+-------+
1 row in set

mysql> show status like 'Connections';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| Connections   | 4     |
+---------------+-------+
1 row in set

mysql> show variables like '%max_connections%';
+-----------------+-------+
| Variable_name   | Value |
+-----------------+-------+
| max_connections | 151   |
+-----------------+-------+
1 row in set

Then I restarted the application and observed again, after raising the connection pool size to 100 (it was 50 before):

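spring:
  profiles:
    active: @profiles.active@
  datasource:
    type: com.zaxxer.hikari.HikariDataSource
    hikari:
      maximum-pool-size: 100

By comparison, the MySQL connection counts were clearly lower after the restarts, but connection timeouts still occurred frequently while the program ran.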

6. Managing the threads with a thread pool:

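import java.lang.reflect.Method;
import java.util.concurrent.Executor;
import java.util.concurrent.ThreadPoolExecutor;

import org.springframework.aop.interceptor.AsyncUncaughtExceptionHandler;
import org.springframework.context.annotation.Configuration;
import org.springframework.scheduling.annotation.AsyncConfigurer;
import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

// Takes effect only if @EnableAsync is declared on some configuration class.
@Configuration
public class AsyncTaskExecutePool implements AsyncConfigurer {

    @Override
    public Executor getAsyncExecutor() {
        ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();
        executor.setCorePoolSize(5);
        executor.setMaxPoolSize(20);
        executor.setQueueCapacity(3);
        executor.setKeepAliveSeconds(60);
        executor.setThreadNamePrefix("taskExecutor-");
        // Rejection policy: how to handle a new task once the pool has reached max size and the queue is full.
        // CALLER_RUNS: run the task on the caller's own thread instead of a pool thread.
        executor.setRejectedExecutionHandler(new ThreadPoolExecutor.CallerRunsPolicy());
        executor.initialize();
        return executor;
    }

    // Handles exceptions thrown by void @Async methods.
    @Override
    public AsyncUncaughtExceptionHandler getAsyncUncaughtExceptionHandler() {
        return new AsyncUncaughtExceptionHandler() {
            @Override
            public void handleUncaughtException(Throwable throwable, Method method, Object... objects) {
                // Report which method failed and why, instead of only printing a fixed message.
                System.err.println("Async method " + method.getName() + " failed: " + throwable);
                throwable.printStackTrace();
            }
        };
    }
}

After about 1 million rows had been copied, the program became sluggish. Reaching that point took about 1 hour 10 minutes, which is clearly faster than before. Why the remaining data goes slowly is still unresolved.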
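The pool settings above can be reproduced with the plain JDK ThreadPoolExecutor that Spring's ThreadPoolTaskExecutor creates internally. This sketch uses an ArrayBlockingQueue; Spring uses a LinkedBlockingQueue with the configured capacity, which behaves the same here.

With 5 core threads, a queue of 3 and at most 20 threads, at most 23 tasks can be held by the pool at once: 20 running and 3 queued. Every further submission is rejected. Under CallerRunsPolicy, a rejected task runs synchronously on the thread that submitted it, so that thread stops submitting until the task finishes.

CallerRunsDemo and callerRunCount are hypothetical names. The sketch only shows the policy's mechanics. Whether it contributes to the slowdown after about 1 million rows is not something the observations above establish.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class CallerRunsDemo {

    /** Submits `tasks` tasks to a 5/20/3 pool whose threads stay busy; returns how many ran on the submitting thread. */
    static int callerRunCount(int tasks) {
        ThreadPoolExecutor executor = new ThreadPoolExecutor(
                5, 20, 60, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(3),
                new ThreadPoolExecutor.CallerRunsPolicy());
        Thread caller = Thread.currentThread();
        CountDownLatch release = new CountDownLatch(1);
        AtomicInteger onCaller = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            executor.execute(() -> {
                if (Thread.currentThread() == caller) {
                    // Rejected task: CallerRunsPolicy runs it right here, inside execute().
                    onCaller.incrementAndGet();
                    return;
                }
                try {
                    release.await(); // keep pool threads busy so the pool and the queue fill up
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        release.countDown();
        executor.shutdown();
        try {
            executor.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return onCaller.get();
    }

    public static void main(String[] args) {
        // 5 core threads + 3 queued + 15 extra threads (up to max 20) = 23 accepted; the other 7 run on the caller.
        System.out.println(callerRunCount(30)); // 7
    }
}
```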