Efficient ConvNet for Real-time Semantic Segmentation论文解读

Abstract:

real-time :83 FPS in a single Titan X, and at more than 7 FPS in a Jetson TX1 (embedded GPU).

a novel layer : uses residual connections and factorized convolutions in order to remain highly efficient while still retaining remarkable performance. (又好又快)

dataset : Cityscapes dataset

INTRODUCTION:

现有分割任务的网络准确率已经很高了,但是速度很慢,那些速度快的,又损失了准确率。

本文提出:

a novel residual block that uses factorized convolutions

速度快,可以在嵌入式设备上运行。兼顾速度和准确率。

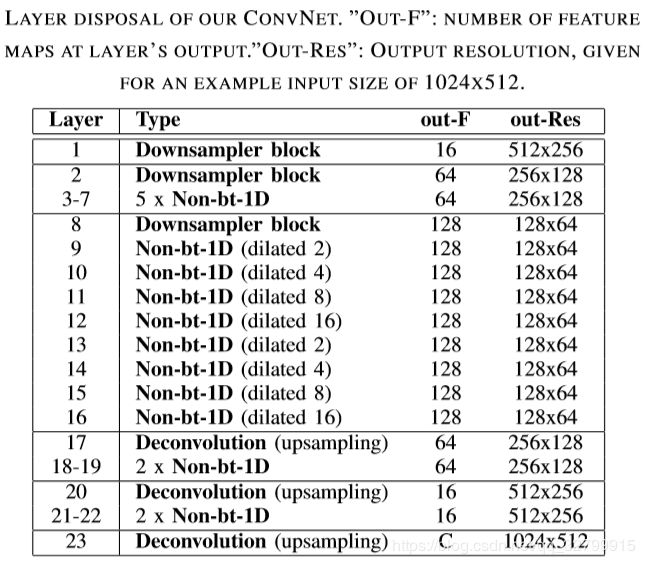

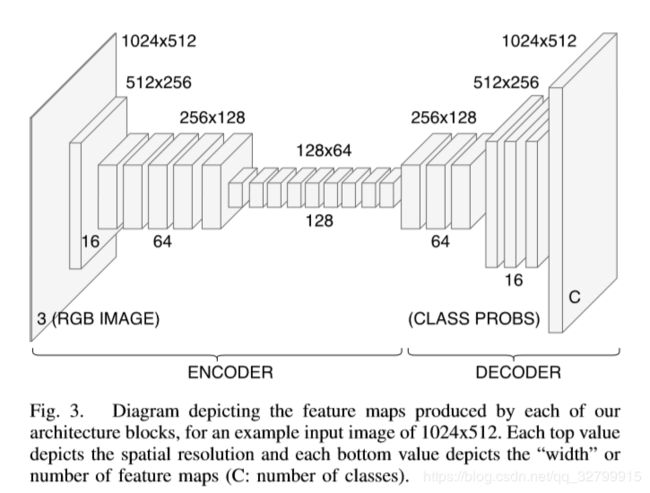

ARCHITECTURE:

Residual connections:avoid the degradation problem that is present in architectures with a large amount of stacked layers

we propose a “wider” architecture (as opposed to “deeper”)

bottleneck and non-bottleneck

bottleneck 一般用来减少计算量,non-bottleneck的 feature 尺度增加,相当于“wider”,在浅层网络中更有利。

重新设计了残差块,结合the efficiency of the bottleneck and the width of the non-bottleneck

网络结构 encoder-decoder architecture

encoder:

由residual blocks and downsampling blocks组成,Downsampling 降低精度,但是可以减少计算,并且让深层网络收集更多的上下文信息,提高分类效果。所以为了平衡,在 1, 2 and 8层下采样。

decoder:

使用simple deconvolution layers with stride 2 (also known as full-convolutions)实现decoder。

downsampling:

图2b,卷积和最大池化相结合

dilated convolutions 在change the second pair of 3x1 and 1x3 convolutions for a pair of dilated 1D convolutions 增大感受野,获取更多的上下文信息,提高分类准确率。

BN,dropout,防止过拟合。

EXPERIMENTS:

Dataset:

Cityscapes train set of 2975 images, validation set of 500 images and a test set of 1525 images , resolution is 2048x1024

只用train训练集图像,不用预训练参数

数据增强:random horizontal flips and translations of 0-2 pixels in both axes

评价指标:Intersection-over-Union (IoU) metric

训练输入大小 1024x512,但是以2048x1024输出,来评价。

Setup:

Torch7 framework

Adam optimization of stochastic gradient descent

batch size of 12

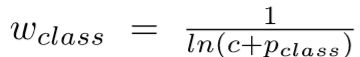

和Enet类似,使用了类别加权训练,提升了效果; 然后先训练decoder, 然后再一起end-to-end的train。

c = 1.10

Comparison to the state of the art

19 classes , 7 categories

每一个类别的准确率:

CONCLUSIONS :

提出一个新的模型,准确率达到现有的较好的水平,同时速度要快很多,可以应用在嵌入式gpu上。

论文阅读感悟:

1.目前的网络都是向着”又瘦又长“的趋势发展,所以残差连接成了一种共识,这篇文章虽然说不是很深的网络,但是算上残差块里的每一层,其实也有几十层了;在最近看到的yolov3中,back_bone是darknet53,也是同样的,全部是1*1,3*3的卷积堆起来的,同时也使用了残差连接,在更深的情况下,没有增加计算量。

2.在语义分割中,下采样越多,高层的感受野越大,有更多的上下文信息,分类准确率会提高,但是重新上采样恢复到原图的精度会损失,需要去平衡这个关系。目前来说空洞卷积是一个很好的解决办法。