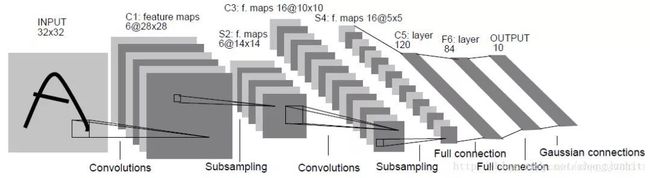

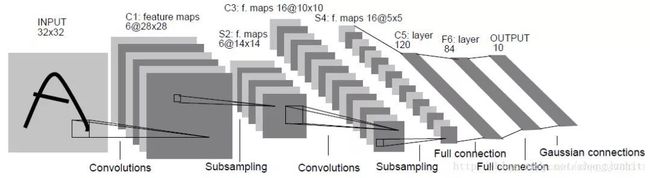

LeNet is a good beginner-level network, and today we will build one with Keras. Without further ado, let's get to the practical part.

Step 1: Import the required libraries

from keras.models import Sequential

from keras.layers import Dense,Flatten,Dropout,Conv2D,MaxPooling2D

from keras.utils import to_categorical

from keras.datasets import mnist

from keras.utils import plot_model

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

from sklearn.model_selection import KFold

Step 2: Load and prepare the dataset

dataset=mnist.load_data()

(X_train,Y_train),(X_test,Y_test)=dataset

X_train=X_train.reshape(-1,28,28,1)

X_test=X_test.reshape(-1,28,28,1) # shape: [None,height,width,channels]

Y_train=to_categorical(Y_train,num_classes=10)

Y_test=to_categorical(Y_test,num_classes=10) # convert the digit labels to one-hot encoding
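To make the one-hot step concrete, here is a minimal plain-Python sketch of the encoding that `to_categorical` applies to the labels. The helper name `one_hot` is hypothetical and exists only for illustration; Keras itself returns a NumPy array rather than nested lists.

```python
def one_hot(labels, num_classes):
    """Return one row per label, with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError("label {} is outside [0, {})".format(label, num_classes))
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Digits 3 and 0 encoded over the 10 MNIST classes.
    for row in one_hot([3, 0], num_classes=10):
        print(row)
```

Each row sums to 1, which is the form that the `categorical_crossentropy` loss expects for its targets.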

Step 3: Build the model

def LeNet(X_train,Y_train):

    model=Sequential()

    model.add(Conv2D(filters=5,kernel_size=(3,3),strides=(1,1),input_shape=X_train.shape[1:],padding='same',
                     data_format='channels_last',activation='relu',kernel_initializer='uniform')) #[None,28,28,5]

    model.add(Dropout(0.2))

    model.add(MaxPooling2D((2,2))) # 2x2 pooling window, output [None,14,14,5]

    model.add(Conv2D(16,(3,3),strides=(1,1),data_format='channels_last',padding='same',activation='relu',kernel_initializer='uniform')) #[None,14,14,16], padding='same' keeps 14x14

    model.add(Dropout(0.2))

    model.add(MaxPooling2D((2,2))) #[None,7,7,16]

    model.add(Conv2D(32,(3,3),strides=(1,1),data_format='channels_last',padding='same',activation='relu',
                     kernel_initializer='uniform')) #[None,7,7,32]

    model.add(Dropout(0.2))

    # model.add(MaxPooling2D(2, 2))

    model.add(Conv2D(100,(3,3),strides=(1,1),data_format='channels_last',activation='relu',kernel_initializer='uniform')) #[None,5,5,100], default padding='valid': 7-(3-1)=5

    model.add(Flatten(data_format='channels_last')) #[None,2500], i.e. 5*5*100

    model.add(Dense(168,activation='relu')) #[None,168]

    model.add(Dense(84,activation='relu')) #[None,84]

    model.add(Dense(10,activation='softmax')) #[None,10]

    # print the layer summary and parameter counts
    model.summary()

    # compile the model
    model.compile(optimizer='adam',loss='categorical_crossentropy',metrics=['accuracy'])

    return model
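As a cross-check on what `model.summary()` should report, the following sketch walks the layers as configured in the code (the first three convolutions use `padding='same'`, the last one uses the default `'valid'`) and computes each output shape and parameter count by hand. The `trace` helper and the `LAYERS` list are hypothetical names for this illustration. It assumes stride 1 for every convolution and a pooling stride equal to the pool size, and it leaves out the `Dropout` layers because they change neither the shape nor the parameter count.

```python
def trace(input_shape, layers):
    """Walk a list of layer specs and return (kind, output_shape, param_count) rows."""
    h, w, c = input_shape
    flat = None  # feature count once the tensor has been flattened
    rows = []
    for spec in layers:
        kind = spec[0]
        params = 0
        if kind == "conv":
            _, filters, k, padding = spec
            if padding == "valid":
                # stride 1 without padding loses k-1 pixels per dimension
                h, w = h - (k - 1), w - (k - 1)
            # k*k*c weights plus one bias for each filter
            params = (k * k * c + 1) * filters
            c = filters
        elif kind == "pool":
            _, p = spec
            h, w = h // p, w // p
        elif kind == "flatten":
            flat = h * w * c
        elif kind == "dense":
            _, units = spec
            # one weight per input feature plus one bias for each unit
            params = (flat + 1) * units
            flat = units
        shape = (flat,) if flat is not None else (h, w, c)
        rows.append((kind, shape, params))
    return rows


# The layers of the model above, in order (Dropout omitted).
LAYERS = [
    ("conv", 5, 3, "same"),
    ("pool", 2),
    ("conv", 16, 3, "same"),
    ("pool", 2),
    ("conv", 32, 3, "same"),
    ("conv", 100, 3, "valid"),
    ("flatten",),
    ("dense", 168),
    ("dense", 84),
    ("dense", 10),
]

if __name__ == "__main__":
    rows = trace((28, 28, 1), LAYERS)
    for kind, shape, params in rows:
        print("{:8s} {!s:16s} {}".format(kind, shape, params))
    print("total parameters:", sum(r[2] for r in rows))
```

Most of the parameters sit in the first `Dense` layer, because it connects all 2500 flattened features to 168 units.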

Step 4: Run it and check the results

if __name__=="__main__":

    # train the model
    model=LeNet(X_train,Y_train)

    model.fit(x=X_train,y=Y_train,batch_size=128,epochs=20)

    # evaluate the model on the test set
    loss,acc=model.evaluate(x=X_test,y=Y_test)

    print("loss:{}===acc:{}".format(loss,acc))
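For readers wondering what the two numbers returned by `model.evaluate` mean, here is a rough plain-Python sketch of categorical cross-entropy and accuracy computed from one-hot labels and softmax outputs. The function names are hypothetical and the data is made up for illustration; Keras's own implementation is vectorized and differs in details such as how probabilities are clipped.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy; y_true rows are one-hot, y_pred rows are probabilities."""
    total = 0.0
    for true_row, pred_row in zip(y_true, y_pred):
        # max(p, eps) guards against log(0); a simplification of Keras's clipping
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(true_row, pred_row))
    return total / len(y_true)


def accuracy(y_true, y_pred):
    """Fraction of samples whose most probable class matches the one-hot label."""
    hits = 0
    for true_row, pred_row in zip(y_true, y_pred):
        if true_row.index(max(true_row)) == pred_row.index(max(pred_row)):
            hits += 1
    return hits / len(y_true)


if __name__ == "__main__":
    # Made-up three-class data, not MNIST results.
    y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
    y_pred = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.4, 0.3], [0.2, 0.5, 0.3]]
    loss = categorical_crossentropy(y_true, y_pred)
    acc = accuracy(y_true, y_pred)
    print("loss:{}===acc:{}".format(loss, acc))
```

In this toy data the third sample is misclassified, so three of the four predictions count as correct.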

In the end, handwritten-digit recognition accuracy can reach 99.5%.

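P.S. How to work out the output shape after a given layer: take shape=[batch_size,height,width,channels] and an input of [None,28,28,1]. After Conv2D(6,(3,3),strides=(1,1)) with the default padding='valid', the output shape is [None,26,26,6], because 28-(3-1)=26. If [None,26,26,6] is then fed into MaxPooling2D((2,2)), the output shape is [None,13,13,6], because 26/2=13. With padding='same' and a stride of 1, a convolution leaves the height and width unchanged.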
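The arithmetic above generalizes to other kernel sizes and strides. The sketch below uses the usual output-size rules for `'valid'` and `'same'` padding; the function names `conv_output_size` and `pool_output_size` are hypothetical, and the pooling helper assumes `'valid'` padding, which is the Keras default.

```python
import math


def conv_output_size(size, kernel, stride=1, padding="valid"):
    """Output length along one spatial dimension of a convolution."""
    if padding == "valid":
        # no padding: only positions where the whole kernel fits are kept
        return (size - kernel) // stride + 1
    if padding == "same":
        # input is padded so that the output depends only on the stride
        return math.ceil(size / stride)
    raise ValueError("padding must be 'valid' or 'same'")


def pool_output_size(size, pool, stride=None):
    """Output length along one spatial dimension of a pooling layer ('valid' padding)."""
    stride = pool if stride is None else stride  # stride defaults to the pool size
    return (size - pool) // stride + 1


if __name__ == "__main__":
    after_conv = conv_output_size(28, 3)            # 28-(3-1) = 26
    after_pool = pool_output_size(after_conv, 2)    # 26/2 = 13
    print(after_conv, after_pool)
    print(conv_output_size(28, 3, padding="same"))  # stays 28
```

When the input size is odd, the integer division in the pooling rule drops the last row and column. For example, a 7x7 input pooled with a 2x2 window becomes 3x3.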