【Deep Learning】YOLO_v1 的 TensorFlow 源码分析

统一回复:

距离这篇也已经过去有一段时间了,而且到目前 yolo_v3 的论文以及源码已经放出,这里推荐大家看完论文后直接实现 v3 的代码,目前我已经调通并在自己的数据集上做了训练及先关验证,都达到了比 yolo_v1 好很多的效果,过一段时间我会整理上来并发在博客上,我建了一个关于keras 实现 yolo_v3 的微信群,如果大家对 yolo 感兴趣把微信号可以发我邮箱 [email protected],我会拉大家进群一起讨论。

本文是对上一篇文章的继续补充,在这里首先说明,这个 TensorFlow 版本的源码 来自于 hizhangp/yolo_tensorflow,经过部分细节的调整运行在我的设备上,我使用的环境是Win10+GPU+OpenCV 3.3 + Python3.6 +TensorFlow1.4 和 Ubuntu16.04 + GPU+OpenCV 3.3 + Python3.6 + TensorFlow 1.4 。

##1.准备工作

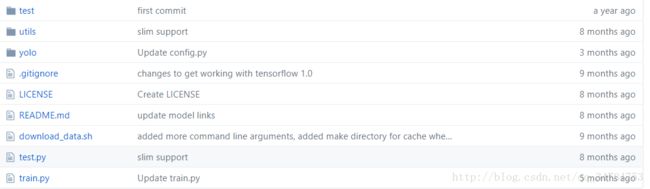

github 的源码页面如图所示:

这里我们将所有文件clone 或 下载到本地后,再根据 README 的连接下载相应的文件放在相应的文件夹内,主要需要下载的是 pascal VOC 2007 数据集和 一个 small_yolo的ckpt文件,这里,考虑到外网可能不太好下载,这里给出百度网盘的下载链接:

- pascal_VOC

- YOLO_small

下载好后分别放在相应的文件夹内即可,我使用的 Python 编译器是 Pycharm,这里可以看一下大致的目录结构,如下所示:

将这些都准备好后,便可以直接运行 test 进行检测了,过程中可能会出现一些报错,主要出错的问题我遇到了两个,分别是

- print 报错,这主要是因为我是用的 Python 版本是 Python3,只需要在相应的位置加上括号即可

- cv2.AA 报错,主要原因应该是 OpenCV 版本问题,解决方法是将 cv2.CV_AA 改为 cv2.LINE_AA。

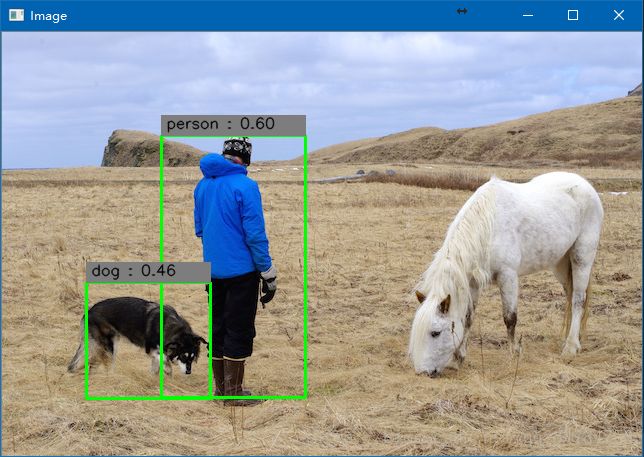

在改好后,直接运行程序,得到的是检测图像的结果,如下所示:

此外,程序还提供了实时调用摄像头进行检测的代码,在电脑上接好摄像头并将相应部分取消注释直接运行即可,效果如下所示:

可以看出,虽然是对艺术作品进行检测,但是Yolo 仍然能够获得比较好的检测效果,而且能够达到实时的效果。

下面分别介绍项目中的各个程序:

##2. config.py

这部分程序主要是用来定义网络中的一些整体结构参数,具体程序如下所示:

import os

#

# path and dataset parameter

#

# 第一部分是使用到的数据相关参数,包括数据路径预训练权重等相关内容。

DATA_PATH = 'data'

PASCAL_PATH = os.path.join(DATA_PATH, 'pascal_voc')

CACHE_PATH = os.path.join(PASCAL_PATH, 'cache')

OUTPUT_DIR = os.path.join(PASCAL_PATH, 'output')

WEIGHTS_DIR = os.path.join(PASCAL_PATH, 'weight')

WEIGHTS_FILE = None

# WEIGHTS_FILE = os.path.join(DATA_PATH, 'weights', 'YOLO_small.ckpt')

CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',

'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant', 'sheep', 'sofa',

'train', 'tvmonitor']

# 是否对样本图像进行flip(水平镜像)操作

FLIPPED = True

#

# model parameter

#

# 这部分主要是模型参数

# 图像size

IMAGE_SIZE = 448

# 网格 size

CELL_SIZE = 7

# 每个 cell 中 bounding box 数量

BOXES_PER_CELL = 2

# 权重衰减相关参数

ALPHA = 0.1

DISP_CONSOLE = False

# 权重衰减的相关参数

OBJECT_SCALE = 1.0

NOOBJECT_SCALE = 0.5

CLASS_SCALE = 2.0

COORD_SCALE = 5.0

#

# solver parameter

#

# 训练过程中的相关参数

GPU = ''

# 学习速率

LEARNING_RATE = 0.0001

# 衰减步数

DECAY_STEPS = 30000

# 衰减率

DECAY_RATE = 0.1

STAIRCASE = True

# batch_size初始值为45

BATCH_SIZE = 16

# 最大迭代次数

MAX_ITER = 15000

# 日志记录迭代步数

SUMMARY_ITER = 10

SAVE_ITER = 1000

#

# test parameter

#

# 测试时的相关参数

# 阈值参数

THRESHOLD = 0.2

# IoU 参数

IOU_THRESHOLD = 0.5

因为这部分程序比较简单,具体内容可以参考上面的注释。

3. yolo_net.py

###3.1 def _init_(self, is_training=True)

首先,来看第一部分:

def __init__(self, is_training=True):

self.classes = cfg.CLASSES

self.num_class = len(self.classes)

self.image_size = cfg.IMAGE_SIZE

self.cell_size = cfg.CELL_SIZE

self.boxes_per_cell = cfg.BOXES_PER_CELL

self.output_size = (self.cell_size * self.cell_size) * (self.num_class + self.boxes_per_cell * 5)

self.scale = 1.0 * self.image_size / self.cell_size

self.boundary1 = self.cell_size * self.cell_size * self.num_class

self.boundary2 = self.boundary1 + self.cell_size * self.cell_size * self.boxes_per_cell

self.object_scale = cfg.OBJECT_SCALE

self.noobject_scale = cfg.NOOBJECT_SCALE

self.class_scale = cfg.CLASS_SCALE

self.coord_scale = cfg.COORD_SCALE

self.learning_rate = cfg.LEARNING_RATE

self.batch_size = cfg.BATCH_SIZE

self.alpha = cfg.ALPHA

self.offset = np.transpose(np.reshape(np.array(

[np.arange(self.cell_size)] * self.cell_size * self.boxes_per_cell),

(self.boxes_per_cell, self.cell_size, self.cell_size)), (1, 2, 0))

self.images = tf.placeholder(tf.float32, [None, self.image_size, self.image_size, 3], name='images')

self.logits = self.build_network(self.images, num_outputs=self.output_size, alpha=self.alpha, is_training=is_training)

if is_training:

self.labels = tf.placeholder(tf.float32, [None, self.cell_size, self.cell_size, 5 + self.num_class])

self.loss_layer(self.logits, self.labels)

self.total_loss = tf.losses.get_total_loss()

tf.summary.scalar('total_loss', self.total_loss)

这部分的主要作用是利用 cfg 文件对网络参数进行初始化,同时定义网络的输入和输出 size 等信息,其中 offset 的作用应该是一个定长的偏移;

boundery1和boundery2 作用是在输出中确定每种信息的长度(如类别,置信度等)。其中 boundery1 指的是对于所有的 cell 的类别的预测的张量维度,所以是 self.cell_size * self.cell_size * self.num_class

boundery2 指的是在类别之后每个cell 所对应的 bounding boxes 的数量的总和,所以是self.boundary1 + self.cell_size * self.cell_size * self.boxes_per_cell

###3.2 build_network

这部分主要是实现了 yolo 网络模型的构成,可以清楚的看到网络的组成,而且为了使程序更加简洁,构建网络使用的是 TensorFlow 中的 slim 模块,主要的函数有slim.arg_scope slim.conv2d slim.fully_connected 和 slim.dropoout等,具体程序如下所示:

def build_network(self,

images,

num_outputs,

alpha,

keep_prob=0.5,

is_training=True,

scope='yolo'):

with tf.variable_scope(scope):

with slim.arg_scope([slim.conv2d, slim.fully_connected],

activation_fn=leaky_relu(alpha),

weights_initializer=tf.truncated_normal_initializer(0.0, 0.01),

weights_regularizer=slim.l2_regularizer(0.0005)):

net = tf.pad(images, np.array([[0, 0], [3, 3], [3, 3], [0, 0]]), name='pad_1')

net = slim.conv2d(net, 64, 7, 2, padding='VALID', scope='conv_2')

net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_3')

net = slim.conv2d(net, 192, 3, scope='conv_4')

net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_5')

net = slim.conv2d(net, 128, 1, scope='conv_6')

net = slim.conv2d(net, 256, 3, scope='conv_7')

net = slim.conv2d(net, 256, 1, scope='conv_8')

net = slim.conv2d(net, 512, 3, scope='conv_9')

net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_10')

net = slim.conv2d(net, 256, 1, scope='conv_11')

net = slim.conv2d(net, 512, 3, scope='conv_12')

net = slim.conv2d(net, 256, 1, scope='conv_13')

net = slim.conv2d(net, 512, 3, scope='conv_14')

net = slim.conv2d(net, 256, 1, scope='conv_15')

net = slim.conv2d(net, 512, 3, scope='conv_16')

net = slim.conv2d(net, 256, 1, scope='conv_17')

net = slim.conv2d(net, 512, 3, scope='conv_18')

net = slim.conv2d(net, 512, 1, scope='conv_19')

net = slim.conv2d(net, 1024, 3, scope='conv_20')

net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_21')

net = slim.conv2d(net, 512, 1, scope='conv_22')

net = slim.conv2d(net, 1024, 3, scope='conv_23')

net = slim.conv2d(net, 512, 1, scope='conv_24')

net = slim.conv2d(net, 1024, 3, scope='conv_25')

net = slim.conv2d(net, 1024, 3, scope='conv_26')

net = tf.pad(net, np.array([[0, 0], [1, 1], [1, 1], [0, 0]]), name='pad_27')

net = slim.conv2d(net, 1024, 3, 2, padding='VALID', scope='conv_28')

net = slim.conv2d(net, 1024, 3, scope='conv_29')

net = slim.conv2d(net, 1024, 3, scope='conv_30')

net = tf.transpose(net, [0, 3, 1, 2], name='trans_31')

net = slim.flatten(net, scope='flat_32')

net = slim.fully_connected(net, 512, scope='fc_33')

net = slim.fully_connected(net, 4096, scope='fc_34')

net = slim.dropout(net, keep_prob=keep_prob,

is_training=is_training, scope='dropout_35')

net = slim.fully_connected(net, num_outputs,

activation_fn=None, scope='fc_36')

return net

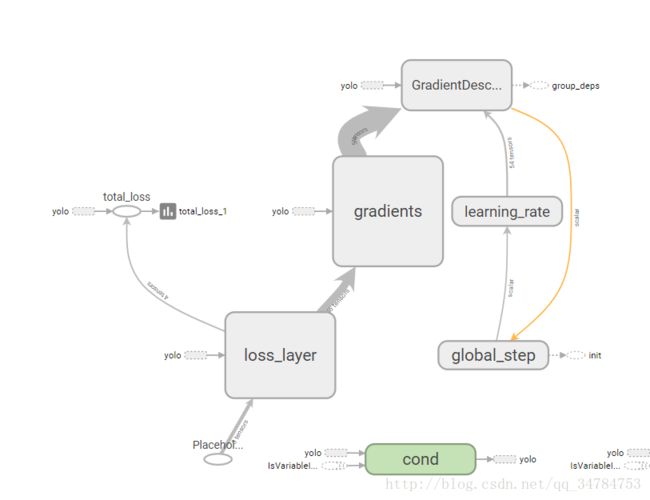

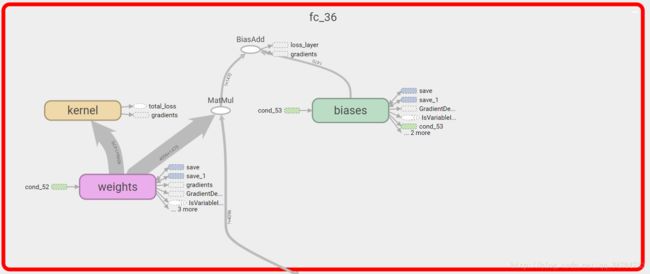

在训练网络的过程中,我们使用 TensorBoard 可以看到这个网络的结构以及每一层的输入输出大小,具体如下所示:

可以看到,网络最后输出的是一个1470 维的张量(1470 = 7*7*30)。最后一层全连接层的内部如下图所示:

###3.3 calc_iou

这个函数的主要作用是计算两个 bounding box 之间的 IoU。这部分程序有些地方我还没有弄没明白,输入是两个 5 维的bounding box,输出的两个 bounding Box 的IoU 。具体程序如下所示:

def calc_iou(self, boxes1, boxes2, scope='iou'):

"""calculate ious

Args:

boxes1: 5-D tensor [CELL_SIZE, CELL_SIZE, BOXES_PER_CELL, 4] ====> (x_center, y_center, w, h)

boxes2: 1-D tensor [CELL_SIZE, CELL_SIZE, BOXES_PER_CELL, 4] ===> (x_center, y_center, w, h)

Return:

iou: 3-D tensor [CELL_SIZE, CELL_SIZE, BOXES_PER_CELL]

"""

with tf.variable_scope(scope):

boxes1 = tf.stack([boxes1[:, :, :, :, 0] - boxes1[:, :, :, :, 2] / 2.0,

boxes1[:, :, :, :, 1] - boxes1[:, :, :, :, 3] / 2.0,

boxes1[:, :, :, :, 0] + boxes1[:, :, :, :, 2] / 2.0,

boxes1[:, :, :, :, 1] + boxes1[:, :, :, :, 3] / 2.0])

boxes1 = tf.transpose(boxes1, [1, 2, 3, 4, 0])

boxes2 = tf.stack([boxes2[:, :, :, :, 0] - boxes2[:, :, :, :, 2] / 2.0,

boxes2[:, :, :, :, 1] - boxes2[:, :, :, :, 3] / 2.0,

boxes2[:, :, :, :, 0] + boxes2[:, :, :, :, 2] / 2.0,

boxes2[:, :, :, :, 1] + boxes2[:, :, :, :, 3] / 2.0])

boxes2 = tf.transpose(boxes2, [1, 2, 3, 4, 0])

# calculate the left up point & right down point

lu = tf.maximum(boxes1[:, :, :, :, :2], boxes2[:, :, :, :, :2])

rd = tf.minimum(boxes1[:, :, :, :, 2:], boxes2[:, :, :, :, 2:])

# intersection

intersection = tf.maximum(0.0, rd - lu)

inter_square = intersection[:, :, :, :, 0] * intersection[:, :, :, :, 1]

# calculate the boxs1 square and boxs2 square

square1 = (boxes1[:, :, :, :, 2] - boxes1[:, :, :, :, 0]) * \

(boxes1[:, :, :, :, 3] - boxes1[:, :, :, :, 1])

square2 = (boxes2[:, :, :, :, 2] - boxes2[:, :, :, :, 0]) * \

(boxes2[:, :, :, :, 3] - boxes2[:, :, :, :, 1])

union_square = tf.maximum(square1 + square2 - inter_square, 1e-10)

return tf.clip_by_value(inter_square / union_square, 0.0, 1.0)

上面这个函数中主要用到的函数有tf.stack tf.transpose 以及 tf.maximum,下面分别简单介绍一下这几个函数:

- tf.stack(),定义为:

def stack(values, axis=0, name="stack")。该函数的主要作用是对矩阵进行拼接,我们在 TensorFlow 源码中可以看到这句话***tf.stack([x, y, z]) = np.stack([x, y, z])***,也就是说,它和numpy 中的 stack 函数的作用是相同的。都是在指定轴的方向上对矩阵进行拼接。默认值是0。具体如下图:

- tf.transpose,定义为

def transpose(a, perm=None, name="transpose")这个函数的作用是根据 perm 的值对矩阵 a 进行转置操作,返回数组的 dimension(尺寸、维度) i与输入的 perm[i]的维度相一致。如果未给定perm,默认设置为 (n-1…0),这里的 n 值是输入变量的 rank 。因此默认情况下,这个操作执行了一个正规(regular)的2维矩形的转置。具体如下图:

- tf.maximum,定义为

def maximum(x, y, name=None)这个函数的作用是返回的是a,b之间的最大值。

###3.3 loss_layer

这个函数的主要作用是计算 Loss。具体程序如下所示:

def loss_layer(self,predicts, labels, scope='loss_layer'):

with tf.variable_scope(scope):

# 将预测结果的前 20维(表示类别)转换为相应的矩阵形式 (类别向量)

predict_classes = tf.reshape(predicts[:, :self.boundary1], [self.batch_size, self.cell_size, self.cell_size, self.num_class])

# 将预测结果 的 21 ~ 34 转换为相应的矩阵形式 (尺度向量?)

predict_scales = tf.reshape(predicts[:, self.boundary1:self.boundary2], [self.batch_size, self.cell_size, self.cell_size, self.box_per_cell])

# 将预测结果剩余的维度转变为相应的矩阵形式(boxes 所在的位置向量)

predict_boxes = tf.reshape(predicts[:, self.boundary2:], [self.batch_size, self.cell_size, self.cell_size, self.box_per_cell, 4])

# 将真实的 labels 转换为相应的矩阵形式

response = tf.reshape(labels[:, :, :, 0], [self.batch_size, self.cell_size, self.cell_size, 1])

boxes = tf.reshape(labels[:, :, :, 1:5], [self.batch_size, self.cell_size, self.cell_size, 1, 4])

boxes = tf.tile(boxes, [1, 1, 1, self.box_per_cell, 1]) / self.image_size

classes = labels[:, :, :, 5:]

offset = tf.constant(self.offset, dtype = tf.float32) # [7, 7, 2]

offset = tf.reshape(offset, [1, self.cell_size, self.cell_size, self.box_per_cell]) # [1, 7, 7, 2]

offset = tf.tile(offset, [self.batch_size, 1, 1, 1]) # [batch_size, 7, 7, 1]

# shape为 [4, batch_size, 7, 7, 2]

predict_boxes_tran = tf.stack([(predict_boxes[:, :, :, :, 0] + offset) / self.cell_size,

(predict_boxes[:, :, :, :, 1] + tf.transpose(offset, (0, 2, 1, 3))) / self.cell_size,

tf.square(predict_boxes[:, :, :, :, 2]),

tf.square(predict_boxes[:, :, :, :, 3])])

# shape为 [batch_size, 7, 7, 2, 4]

predict_boxes_tran = tf.transpose(predict_boxes_tran, [1, 2, 3, 4, 0])

iou_predict_truth = self.calc_iou(predict_boxes_tran, boxes)

# calculate I tensor [BATCH_SIZE, CELL_SIZE, CELL_SIZE, BOXES_PER_CELL]

object_mask = tf.reduce_max(iou_predict_truth, axis = 3, keep_dims = True)

object_mask = tf.cast((iou_predict_truth >= object_mask), tf.float32) * response

# calculate no_I tensor [CELL_SIZE, CELL_SIZE, BOXES_PER_CELL]

noobject_mask = tf.ones_like(object_mask, dtype = tf.float32) - object_mask

# 参数中加上平方根是对 w 和 h 进行开平方操作,原因在论文中有说明

# #shape为(4, batch_size, 7, 7, 2)

boxes_tran = tf.stack([boxes[:, :, :, :, 0] * self.cell_size - offset,

boxes[:, :, :, :, 1] * self.cell_size - tf.transpose(offset, (0, 2, 1, 3)),

tf.sqrt(boxes[:, :, :, :, 2]),

tf.sqrt(boxes[:, :, :, :, 3])])

boxes_tran = tf.transpose(boxes_tran, [1, 2, 3, 4, 0])

# class_loss 分类损失

class_delta = response * (predict_classes - classes)

class_loss = tf.reduce_mean(tf.reduce_sum(tf.square(class_delta),

axis=[1, 2, 3]),

name='class_loss') * self.class_scale

# object_loss 有目标物体存在的损失

object_delta = object_mask * (predict_scales - iou_predict_truth)

object_loss = tf.reduce_mean(tf.reduce_sum(tf.square(object_delta),

axis = [1, 2, 3]), name = 'object_loss') * self.object_scale

# 没有目标物体时的损失

noobject_delta = noobject_mask * predict_scales

noobject_loss = tf.reduce_mean(tf.reduce_sum(tf.square(noobject_delta),

axis = [1, 2, 3]),

name = 'noobject_loss') * self.noobject_scale

# coord_loss 坐标损失 #shape 为 (batch_size, 7, 7, 2, 1)

coord_mask = tf.expand_dims(object_mask, 4)

# shape 为(batch_size, 7, 7, 2, 4)

boxes_delta = coord_mask * (predict_boxes - boxes_tran)

coord_loss = tf.reduce_mean(tf.reduce_sum(tf.square(boxes_delta),

axis = [1, 2, 3, 4]),

name = 'coord_loss') * self.coord_scale

# 将所有损失放在一起

tf.losses.add_loss(class_loss)

tf.losses.add_loss(object_loss)

tf.losses.add_loss(noobject_loss)

tf.losses.add_loss(coord_loss)

# 将每个损失添加到日志记录

tf.summary.scalar('class_loss', class_loss)

tf.summary.scalar('object_loss', object_loss)

tf.summary.scalar('noobject_loss', noobject_loss)

tf.summary.scalar('coord_loss', coord_loss)

tf.summary.histogram('boxes_delta_x', boxes_delta[:, :, :, :, 0])

tf.summary.histogram('boxes_delta_y', boxes_delta[:, :, :, :, 1])

tf.summary.histogram('boxes_delta_w', boxes_delta[:, :, :, :, 2])

tf.summary.histogram('boxes_delta_h', boxes_delta[:, :, :, :, 3])

tf.summary.histogram('iou', iou_predict_truth)

这部分内容的大致内容已经在注释中进行标出。

##4. train.py

这部分代码主要实现的是对已经构建好的网络和损失函数利用数据进行训练,在训练过程中,对变量采用了指数平均数(exponential moving average (EMA))来提高整体的训练性能。同时,为了获得比较好的学习性能,对学习速率同向进行了指数衰减,使用了 exponential_decay 函数来实现这个功能。这个函数的具体计算公式如下所示:

d e c a y e d _ l e a r n i n g _ r a t e = l e a r n i n g _ r a t e ∗ d e c a y _ r a t e ( g l o b a l s t e p / d e c a y s t e p s ) decayed\_learning\_rate = learning\_rate *decay\_rate ^ {(global_step / decay_steps)} decayed_learning_rate=learning_rate∗decay_rate(globalstep/decaysteps)

在训练的同时,对我们的训练模型(网络权重)进行保存,这样以后可以直接进行调用这些权重;同时,每隔一定的迭代次数便写入 TensorBoard,这样在最后可以观察整体的情况。

具体代码如下所示:

class Solver(object):

def __init__(self, net, data):

self.net = net

self.data = data

self.weights_file = cfg.WEIGHT_FILE #网络权重

self.max_iter = cfg.MAX_ITER #最大迭代数目

self.initial_learning_rate = cfg.LEARNING_RATE

self.decay_steps = cfg.DECAY_STEPS

self.decay_rate = cfg.DECAY_RATE

self.staircase = cfg.STAIRCASE

self.summary_iter = cfg.SUMMARY_ITER

self.save_iter = cfg.SAVE_ITER

self.output_dir = os.path.join(

cfg.OUTPUT_PATH, datetime.datetime.now().strftime('%Y_%m_%d_%H_%M'))

if not os.path.exists(self.output_dir):

os.makedirs(self.output_dir)

self.save_cfg()

# tf.get_variable 和tf.Variable不同的一点是,前者拥有一个变量检查机制,

# 会检测已经存在的变量是否设置为共享变量,如果已经存在的变量没有设置为共享变量,

# TensorFlow 运行到第二个拥有相同名字的变量的时候,就会报错。

self.variable_to_restore = tf.global_variables()

self.restorer = tf.train.Saver(self.variable_to_restore, max_to_keep = None)

self.saver = tf.train.Saver(self.variable_to_restore, max_to_keep = None)

self.ckpt_file = os.path.join(self.output_dir, 'save.ckpt')

self.summary_op = tf.summary.merge_all()

self.writer = tf.summary.FileWriter(self.output_dir, flush_secs = 60)

self.global_step = tf.get_variable(

'global_step', [], initializer = tf.constant_initializer(0), trainable = False)

# 产生一个指数衰减的学习速率

self.learning_rate = tf.train.exponential_decay(

self.initial_learning_rate, self.global_step, self.decay_steps,

self.decay_rate, self.staircase, name = 'learning_rate')

self.optimizer = tf.train.GradientDescentOptimizer(

learning_rate = self.learning_rate).minimize(

self.net.total_loss, global_step = self.global_step)

self.ema = tf.train.ExponentialMovingAverage(decay = 0.9999)

self.average_op = self.ema.apply(tf.trainable_variables())

with tf.control_dependencies([self.optimizer]):

self.train_op = tf.group(self.average_op)

gpu_options = tf.GPUOptions()

config = tf.ConfigProto(gpu_options=gpu_options)

self.sess = tf.Session(config = config)

self.sess.run(tf.global_variables_initializer())

if self.weights_file is not None:

print('Restoring weights from: '+ self.weights_file)

self.restorer.restore(self.sess, self.weights_file)

self.writer.add_graph(self.sess.graph)

def train(self):

train_timer = Timer()

load_timer = Timer()

for step in range(1, self.max_iter+1):

load_timer.tic()

images, labels = self.data.get()

load_timer.toc()

feec_dict = {self.net.images: images, self.net.labels: labels}

if step % self.summary_iter == 0:

if step % (self.summary_iter * 10) == 0:

train_timer.tic()

summary_str, loss, _ = self.sess.run(

[self.summary_op, self.net.total_loss, self.train_op],

feed_dict = feec_dict)

train_timer.toc()

log_str = ('{} Epoch: {}, Step: {}, Learning rate : {},'

'Loss: {:5.3f}\nSpeed: {:.3f}s/iter,'

' Load: {:.3f}s/iter, Remain: {}').format(

datetime.datetime.now().strftime('%m/%d %H:%M:%S'),

self.data.epoch,

int(step),

round(self.learning_rate.eval(session = self.sess), 6),

loss,

train_timer.average_time,

load_timer.average_time,

train_timer.remain(step, self.max_iter))

print(log_str)

else:

train_timer.tic()

summary_str, _ = self.sess.run(

[self.summary_op, self.train_op],

feed_dict = feec_dict)

train_timer.toc()

self.writer.add_summary(summary_str, step)

else:

train_timer.tic()

self.sess.run(self.train_op, feed_dict = feec_dict)

train_timer.toc()

if step % self.save_iter == 0:

print('{} Saving checkpoint file to: {}'.format(

datetime.datetime.now().strftime('%m/%d %H:%M:%S'),

self.output_dir))

self.saver.save(self.sess, self.ckpt_file,

global_step = self.global_step)

def save_cfg(self):

with open(os.path.join(self.output_dir, 'config.txt'), 'w') as f:

cfg_dict = cfg.__dict__

for key in sorted(cfg_dict.keys()):

if key[0].isupper():

cfg_str = '{}: {}\n'.format(key, cfg_dict[key])

f.write(cfg_str)

##5. test.py

最后给出test 部分的源码,这部分需要使用我们下载好的 “YOLO_small.ckpt” 权重文件,当然,也可以使用我们之前训练好的权重文件。

这部分的主要内容就是利用训练好的权重进行预测,得到预测输出后利用 OpenCV 的相关函数进行画框等操作。同时,还可以利用 OpenCV 进行视频处理,使程序能够实时地对视频流进行检测。因此,在阅读本段程序之前,大家应该对 OpenCV 有一个大致的了解。

具体代码如下所示:

class Detector(object):

def __init__(self, net, weight_file):

self.net = net

self.weights_file = weight_file

self.classes = cfg.CLASSES

self.num_class = len(self.classes)

self.image_size = cfg.IMAGE_SIZE

self.cell_size = cfg.CELL_SIZE

self.boxes_per_cell = cfg.BOXES_PER_CELL

self.threshold = cfg.THRESHOLD

self.iou_threshold = cfg.IOU_THRESHOLD

self.boundary1 = self.cell_size * self.cell_size * self.num_class

self.boundary2 = self.boundary1 + self.cell_size * self.cell_size * self.boxes_per_cell

self.sess = tf.Session()

self.sess.run(tf.global_variables_initializer())

print('Restoring weights from: ' + self.weights_file)

self.saver = tf.train.Saver()

self.saver.restore(self.sess, self.weights_file)

def draw_result(self, img, result):

for i in range(len(result)):

x = int(result[i][1])

y = int(result[i][2])

w = int(result[i][3] / 2)

h = int(result[i][4] / 2)

cv2.rectangle(img, (x - w, y - h), (x + w, y + h), (0, 255, 0), 2)

cv2.rectangle(img, (x - w, y - h - 20),

(x + w, y - h), (125, 125, 125), -1)

cv2.putText(img, result[i][0] + ' : %.2f' % result[i][5], (x - w + 5, y - h - 7),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0), 1, cv2.LINE_AA)

def detect(self, img):

img_h, img_w, _ = img.shape

inputs = cv2.resize(img, (self.image_size, self.image_size))

inputs = cv2.cvtColor(inputs, cv2.COLOR_BGR2RGB).astype(np.float32)

inputs = (inputs / 255.0) * 2.0 - 1.0

inputs = np.reshape(inputs, (1, self.image_size, self.image_size, 3))

result = self.detect_from_cvmat(inputs)[0]

for i in range(len(result)):

result[i][1] *= (1.0 * img_w / self.image_size)

result[i][2] *= (1.0 * img_h / self.image_size)

result[i][3] *= (1.0 * img_w / self.image_size)

result[i][4] *= (1.0 * img_h / self.image_size)

return result

def detect_from_cvmat(self, inputs):

net_output = self.sess.run(self.net.logits,

feed_dict={self.net.images: inputs})

results = []

for i in range(net_output.shape[0]):

results.append(self.interpret_output(net_output[i]))

return results

def interpret_output(self, output):

probs = np.zeros((self.cell_size, self.cell_size,

self.boxes_per_cell, self.num_class))

class_probs = np.reshape(output[0:self.boundary1], (self.cell_size, self.cell_size, self.num_class))

scales = np.reshape(output[self.boundary1:self.boundary2],

(self.cell_size, self.cell_size, self.boxes_per_cell))

boxes = np.reshape(output[self.boundary2:], (self.cell_size, self.cell_size,

self.boxes_per_cell, 4))

offset = np.transpose(np.reshape(np.array([np.arange(self.cell_size)] *

self.cell_size * self.boxes_per_cell),

[self.boxes_per_cell, self.cell_size,

self.cell_size]), (1, 2, 0))

boxes[:, :, :, 0] += offset

boxes[:, :, :, 1] += np.transpose(offset, (1, 0, 2))

boxes[:, :, :, :2] = 1.0 * boxes[:, :, :, 0:2] / self.cell_size

boxes[:, :, :, 2:] = np.square(boxes[:, :, :, 2:])

boxes *= self.image_size

for i in range(self.boxes_per_cell):

for j in range(self.num_class):

probs[:, :, i, j] = np.multiply(

class_probs[:, :, j], scales[:, :, i])

filter_mat_probs = np.array(probs >= self.threshold, dtype='bool')

filter_mat_boxes = np.nonzero(filter_mat_probs)

boxes_filtered = boxes[filter_mat_boxes[0],

filter_mat_boxes[1], filter_mat_boxes[2]]

probs_filtered = probs[filter_mat_probs]

classes_num_filtered = np.argmax(filter_mat_probs, axis=3)[filter_mat_boxes[

0], filter_mat_boxes[1], filter_mat_boxes[2]]

argsort = np.array(np.argsort(probs_filtered))[::-1]

boxes_filtered = boxes_filtered[argsort]

probs_filtered = probs_filtered[argsort]

classes_num_filtered = classes_num_filtered[argsort]

for i in range(len(boxes_filtered)):

if probs_filtered[i] == 0:

continue

for j in range(i + 1, len(boxes_filtered)):

if self.iou(boxes_filtered[i], boxes_filtered[j]) > self.iou_threshold:

probs_filtered[j] = 0.0

filter_iou = np.array(probs_filtered > 0.0, dtype='bool')

boxes_filtered = boxes_filtered[filter_iou]

probs_filtered = probs_filtered[filter_iou]

classes_num_filtered = classes_num_filtered[filter_iou]

result = []

for i in range(len(boxes_filtered)):

result.append([self.classes[classes_num_filtered[i]], boxes_filtered[i][0], boxes_filtered[

i][1], boxes_filtered[i][2], boxes_filtered[i][3], probs_filtered[i]])

return result

def iou(self, box1, box2):

tb = min(box1[0] + 0.5 * box1[2], box2[0] + 0.5 * box2[2]) - \

max(box1[0] - 0.5 * box1[2], box2[0] - 0.5 * box2[2])

lr = min(box1[1] + 0.5 * box1[3], box2[1] + 0.5 * box2[3]) - \

max(box1[1] - 0.5 * box1[3], box2[1] - 0.5 * box2[3])

if tb < 0 or lr < 0:

intersection = 0

else:

intersection = tb * lr

return intersection / (box1[2] * box1[3] + box2[2] * box2[3] - intersection)

def camera_detector(self, cap, wait=10):

detect_timer = Timer()

ret, _ = cap.read()

while ret:

ret, frame = cap.read()

detect_timer.tic()

result = self.detect(frame)

detect_timer.toc()

print('Average detecting time: {:.3f}s'.format(detect_timer.average_time))

self.draw_result(frame, result)

cv2.namedWindow('Camera',0)

cv2.imshow('Camera', frame)

cv2.waitKey(wait)

ret, frame = cap.read()

def image_detector(self, imname, wait=0):

detect_timer = Timer()

image = cv2.imread(imname)

detect_timer.tic()

result = self.detect(image)

detect_timer.toc()

print('Average detecting time: {:.3f}s'.format(detect_timer.average_time))

self.draw_result(image, result)

cv2.imshow('Image', image)

cv2.waitKey(wait)

6. 总结

yolo_v1 虽然已经能够对物体进行实时检测,但是整体上来说,检测的效果并不是特别好,检测的类别也比较少,针对这些问题,作者之后还提出了 yolo_v2,以此来对这些问题进行改进。关于 Yolo_v2 的相关内容会在之后的博客中进行介绍。

最后给出这个的完整项目的链接:yolo,只要需要将 pascal 数据集放到 data 内相应文件夹即可。