OpenCV Machine Learning: KNN

Original article: https://www.cnblogs.com/denny402/p/5033898.html

KNN algorithm: https://blog.csdn.net/qq_41577045/article/details/80302968

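OpenCV 3.3 provides an implementation of the K-nearest neighbors (KNN) algorithm in the cv::ml::KNearest class. The class is declared in include/opencv2/ml.hpp and implemented in modules/ml/src/knearest.cpp. In this class:

(1) cv::ml::KNearest inherits from cv::ml::StatModel, which in turn inherits from cv::Algorithm;

(2) create: a static function that news a KNearestImpl to create a KNearest object;

(3) setDefaultK/getDefaultK: set/get the value of K used during prediction;

(4) setIsClassifier/getIsClassifier: set/get whether KNN is used for classification or regression;

(5) setEmax/getEmax: set/get the Emax parameter used by the KDTree algorithm;

(6) setAlgorithmType/getAlgorithmType: set/get the KNN algorithm type; two types are currently supported, BRUTE_FORCE and KDTREE;

(7) findNearest: predicts the classification/regression result for the given input.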
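To make the roles of K and of classification mode concrete, the following is a minimal brute-force sketch in plain C++ of what a KNN classifier computes: measure the distance from the query to every training sample, keep the K closest, and take a majority vote over their labels. It does not call OpenCV and is not the code in knearest.cpp; the helper names squaredDistance and knnClassify, the use of squared Euclidean distance, and the tie-breaking rule are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// Hypothetical helper (not an OpenCV function): squared Euclidean distance.
static float squaredDistance(const std::vector<float>& a, const std::vector<float>& b)
{
    assert(a.size() == b.size());
    float sum = 0.f;
    for (std::size_t i = 0; i < a.size(); ++i)
    {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Hypothetical helper (not an OpenCV function): brute-force KNN classification.
// samples[i] is one feature row and labels[i] is its class.
static int knnClassify(const std::vector<std::vector<float>>& samples,
                       const std::vector<int>& labels,
                       const std::vector<float>& query,
                       std::size_t k)
{
    assert(!samples.empty() && samples.size() == labels.size());
    k = std::min(k, samples.size());
    assert(k > 0);

    // Pair the distance from each training sample to the query with its label.
    std::vector<std::pair<float, int>> dist;
    dist.reserve(samples.size());
    for (std::size_t i = 0; i < samples.size(); ++i)
        dist.emplace_back(squaredDistance(samples[i], query), labels[i]);

    // Move the k smallest distances to the front of the vector.
    std::partial_sort(dist.begin(), dist.begin() + k, dist.end());

    // Count the votes of the k nearest neighbours.
    std::map<int, int> votes;
    for (std::size_t i = 0; i < k; ++i)
        ++votes[dist[i].second];

    // Pick the label with the most votes; ties go to the smaller label.
    int best = votes.begin()->first;
    for (const auto& v : votes)
        if (v.second > votes[best])
            best = v.first;
    return best;
}
```

In regression mode the vote would be replaced by an average of the neighbours' responses. Every query scans the whole training set, which is the cost that a KD-tree search is meant to reduce.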

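This is a densely packed sheet of handwritten digits. The image is 1000*2000 pixels (1000 rows by 2000 columns) and contains the 10 digits 0-9. Each digit occupies 5 rows of blocks, 50 rows in total, which gives 5000 handwritten digits. In OpenCV 3.0 the image is located at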

/opencv/sources/samples/data/digits.png

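The first step is to cut out these 5000 handwritten digits one by one; each digit block is 20*20 pixels. Each block is flattened directly into a row vector, so the result is a 5000*400 feature matrix: 5000 samples with 400 dimensions each. The first 3000 samples are used for training.

Note: the blocks are cut column by column. Otherwise, taking the first 3000 samples for training would leave the last few digits without any training samples.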
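The effect of the traversal order can be checked without loading the image. The sketch below counts how many of the first 3000 samples fall on each digit when the 50*100 grid of blocks is walked column by column versus row by row. The helper name countTrainLabels and its parameters are hypothetical; the grid size and the rule label = block row / 5 are taken from the description above.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper: count, per digit, how many of the first trainNum
// samples are produced when a gridRows x gridCols grid of blocks is walked
// column by column (columnMajor = true) or row by row (columnMajor = false).
// The label of a block in grid row r is r / rowsPerDigit.
static std::array<int, 10> countTrainLabels(bool columnMajor, int trainNum,
                                            int gridRows = 50, int gridCols = 100,
                                            int rowsPerDigit = 5)
{
    std::array<int, 10> counts{};
    int taken = 0;
    const int outer = columnMajor ? gridCols : gridRows;
    const int inner = columnMajor ? gridRows : gridCols;
    for (int a = 0; a < outer && taken < trainNum; ++a)
    {
        for (int b = 0; b < inner && taken < trainNum; ++b, ++taken)
        {
            // In column-major order the inner index is the block row.
            const int row = columnMajor ? b : a;
            ++counts[row / rowsPerDigit];
        }
    }
    return counts;
}
```

With trainNum = 3000, the column-by-column order yields 300 training samples for every digit, while the row-by-row order yields 500 samples each for digits 0-5 and none for digits 6-9.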

#include <opencv2/opencv.hpp>
#include <cfloat>
#include <cmath>
#include <cstdio>
#include <iostream>

using namespace std;
using namespace cv;
using namespace cv::ml;

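int main()
{
    // Load the digit sheet (samples/data/digits.png in the OpenCV sources); adjust the path as needed.
    Mat img = imread("digits.png");
    if (img.empty())
    {
        cerr << "Cannot read digits.png" << endl;
        return -1;
    }
    Mat gray;
    cvtColor(img, gray, COLOR_BGR2GRAY);

    int b = 20;            // side length of one digit block
    int m = gray.rows / b; // 50 block rows (the image has 1000 pixel rows)
    int n = gray.cols / b; // 100 block columns, so 5000 blocks of 20*20 in total
    Mat data, labels;      // feature matrix and label column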

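    // Walk the grid column by column so that every digit appears evenly in the sample order.
    for (int i = 0; i < n; i++)
    {
        int offsetCol = i * b; // column offset in pixels
        for (int j = 0; j < m; j++)
        {
            int offsetRow = j * b; // row offset in pixels
            // Cut out one 20*20 block.
            Mat tmp;
            gray(Range(offsetRow, offsetRow + b), Range(offsetCol, offsetCol + b)).copyTo(tmp);
            data.push_back(tmp.reshape(0, 1)); // flatten to 1*400 and append to the feature matrix
            labels.push_back(j / 5);           // label: every 5 block rows hold one digit
        }
    }
    data.convertTo(data, CV_32F); // convert uchar to CV_32F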

    int samplesNum = data.rows;
    int trainNum = 3000;
    Mat trainData, trainLabels;
    trainData = data(Range(0, trainNum), Range::all()); // the first 3000 samples are the training data
    trainLabels = labels(Range(0, trainNum), Range::all());

    // Train with the KNN algorithm.
    int K = 5;
    Ptr<TrainData> tData = TrainData::create(trainData, ROW_SAMPLE, trainLabels);
    Ptr<KNearest> model = KNearest::create();
    model->setDefaultK(K);
    model->setIsClassifier(true);
    model->train(tData);

    // SVM classification
    Ptr<SVM> svm = SVM::create(); // SVM classifier
    svm->setType(SVM::C_SVC);
    svm->setC(0.01);
    svm->setKernel(SVM::LINEAR);
    svm->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, 3000, 1e-6));
    std::cout << "Starting training..." << endl;
    svm->train(trainData, ROW_SAMPLE, trainLabels); // train the classifier
    std::cout << "Finished training..." << endl;
    // Save the trained SVM model as an XML file.
    svm->save("SVM_HOG.xml");

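    // ANN classification
    Ptr<ANN_MLP> ann = ANN_MLP::create();
    Mat layerSizes = (Mat_<int>(1, 5) << 400, 128, 128, 128, 10);
    ann->setLayerSizes(layerSizes);
    ann->setTrainMethod(ANN_MLP::BACKPROP, 0.001, 0.1);
    ann->setActivationFunction(ANN_MLP::SIGMOID_SYM, 1.0, 1.0);
    ann->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER | TermCriteria::EPS, 10000, 0.0001));
    // ANN_MLP expects floating-point responses with one column per output neuron,
    // so the integer labels are one-hot encoded into a trainNum*10 matrix.
    Mat trainOneHot = Mat::zeros(trainNum, 10, CV_32F);
    for (int i = 0; i < trainNum; i++)
        trainOneHot.at<float>(i, trainLabels.at<int>(i)) = 1.f;
    Ptr<TrainData> trainDatas = TrainData::create(trainData, ROW_SAMPLE, trainOneHot);
    ann->train(trainDatas);
    // Save the training result.
    ann->save("MLPModel.xml");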

    // KNN prediction
    double train_hr = 0, test_hr = 0;
    // Compute the hit rate on the training and test data.
    for (int i = 0; i < samplesNum; i++)
    {
        Mat sample = data.row(i);
        float r = model->predict(sample); // predict every row
        // Compare the prediction with the true label: 1 if equal, 0 otherwise.
        r = std::abs(r - labels.at<int>(i)) <= FLT_EPSILON ? 1.f : 0.f;
        if (i < trainNum)
            train_hr += r; // accumulate the number of correct predictions
        else
            test_hr += r;
    }
    test_hr /= samplesNum - trainNum;
    train_hr = trainNum > 0 ? train_hr / trainNum : 1.;
    printf("accuracy: train = %.1f%%, test = %.1f%%\n",
           train_hr * 100., test_hr * 100.);

    // SVM prediction
    double svmtrain_hr = 0, svmtest_hr = 0;
    // Compute the hit rate on the training and test data.
    for (int i = 0; i < samplesNum; i++)
    {
        Mat sample = data.row(i);
        float r = svm->predict(sample); // predict every row
        // Compare the prediction with the true label: 1 if equal, 0 otherwise.
        r = std::abs(r - labels.at<int>(i)) <= FLT_EPSILON ? 1.f : 0.f;
        if (i < trainNum)
            svmtrain_hr += r; // accumulate the number of correct predictions
        else
            svmtest_hr += r;
    }
    svmtest_hr /= samplesNum - trainNum;
    svmtrain_hr = trainNum > 0 ? svmtrain_hr / trainNum : 1.;
    printf("accuracy: train = %.1f%%, test = %.1f%%\n",
           svmtrain_hr * 100., svmtest_hr * 100.);

    // ANN prediction
    double anntrain_hr = 0, anntest_hr = 0;
    // Compute the hit rate on the training and test data.
    for (int i = 0; i < samplesNum; i++)
    {
        Mat sample = data.row(i);
        // Use the ANN model here, not the KNN model. For a single sample,
        // ANN_MLP::predict returns the index of the strongest output neuron.
        float r = ann->predict(sample);
        // Compare the prediction with the true label: 1 if equal, 0 otherwise.
        r = std::abs(r - labels.at<int>(i)) <= FLT_EPSILON ? 1.f : 0.f;
        if (i < trainNum)
            anntrain_hr += r; // accumulate the number of correct predictions
        else
            anntest_hr += r;
    }
    anntest_hr /= samplesNum - trainNum;
    anntrain_hr = trainNum > 0 ? anntrain_hr / trainNum : 1.;
    printf("accuracy: train = %.1f%%, test = %.1f%%\n",
           anntrain_hr * 100., anntest_hr * 100.);

waitKey(0);

getchar();

return 0;

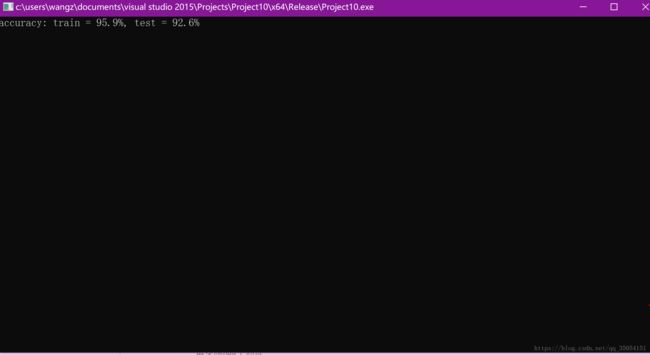

} 最终结果: