Explanation of Scoring Metric(for kaggle TGS Salt Identification Challenge)

kaggle竞赛:TGS Salt Identification Challenge中关于评分度量(scoring metric)

原文:https://www.kaggle.com/pestipeti/explanation-of-scoring-metric

评分指标的说明

我发现这场比赛的得分指标的解释有点令人困惑,我想为那些刚刚进入或尚未进入太远的人创建指南。如果你发现错误,请给我留言,谢谢。

Step-By-Step Explanation of Scoring Metric

https://www.kaggle.com/stkbailey/step-by-step-explanation-of-scoring-metric/notebook

Rationale

I found the explanation for the scoring metric on this competition a little confusing, and I wanted to create a guide for those who are just entering or haven't made it too far yet. The metric used for this competition is defined as the mean average precision at different intersection over union (IoU) thresholds.

我发现这场比赛的得分指标的解释有点令人困惑,我想为那些刚刚进入的新手创建指南。用于该竞争的度量被定义为在设置不同交并比(IoU)阈值下的平均精度。

This tells us there are a few different steps to getting the score reported on the leaderboard. For each image...

- For each submitted nuclei "prediction", calculate the Intersection of Union metric with each "ground truth" mask in the image.

- Calculate whether this mask fits at a range of IoU thresholds.

- At each threshold, calculate the precision across all your submitted masks.

- Average the precision across thresholds.

Across the dataset...

- Calculate the mean of the average precision for each image

这告诉我们在排行榜上报告得分有几个不同的步骤。对于每张图片......

对于每个提交的核“预测”,计算图像中每个“原始图片”掩模的联合度量的交点。

计算此掩码是否适合IoU阈值范围。

在每个阈值处,计算所有提交的蒙版的精度。

平均阈值的精度。

跨数据集......

计算每个图像的平均精度的平均值。

These are the steps to calculate to correct score:以下是计算正确分数的步骤:

-

For all of the images/predictions对于所有原始图像/预测图片

- Calculate the Intersection of Union metric ("compare" the original mask with your predicted one)

- Calculate whether your predicted mask fits at a range of IoU thresholds.

- At each threshold, "calculate" the precision of your predicted masks.

- Average the precision across thresholds.

-

Across the dataset

- Calculate the mean of the average precision for each image.

计算联合交点的度量标准(“比较”原始掩码与预测的掩码)

计算您预测的面罩是否适合IoU阈值范围。

在每个阈值处,“计算”预测掩模的精度。

平均阈值的精度。

跨数据集

计算每个图像的平均精度的平均值。

下面我们进行一次模拟预测来深入理解

Picking test image选择测试图片

I'm going to pick a sample image from the training dataset, load the masks, then create a "mock predict"

我将从训练数据集中选择一个样本图像,加载mask,然后创建一个“模拟预测”(只用一张图片)

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import imageio

from scipy import ndimage

from pathlib import Path

# Get image

im_id = '2bf5343f03'

im_dir = Path('../input/train/')

im_path = im_dir / 'images' / '{}.png'.format(im_id)

img = imageio.imread(im_path.as_posix())

# Get mask

im_dir = Path('../input/train/')

im_path = im_dir / 'masks' / '{}.png'.format(im_id)

target_mask = imageio.imread(im_path.as_posix())

# Fake prediction mask

pred_mask = ndimage.rotate(target_mask, 10, mode='constant', reshape=False, order=0)

pred_mask = ndimage.binary_dilation(pred_mask, iterations=1)

# Plot the objects

fig, axes = plt.subplots(1,3, figsize=(16,9))

axes[0].imshow(img)

axes[1].imshow(target_mask,cmap='hot')

axes[2].imshow(pred_mask, cmap='hot')

labels = ['Original', '"GroundTruth" Mask', '"Predicted" Mask']

for ind, ax in enumerate(axes):

ax.set_title(labels[ind], fontsize=18)

ax.axis('off')Intersection Over Union (for a single Prediction-GroundTruth comparison)

The IoU of a proposed set of object pixels and a set of true object pixels is calculated as:预测出来的一组对象像素和一组真实对象像素的IoU计算如下:

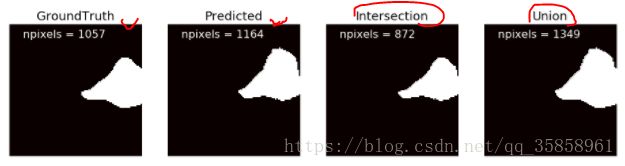

The intersection and the union of our GT and Pred masks are look like this:

本征图片GT mask和预测图片Pred mask的交集和并集看起来像这样:

代码为:

A = target_mask

B = pred_mask

intersection = np.logical_and(A, B)

union = np.logical_or(A, B)

fig, axes = plt.subplots(1,4, figsize=(16,9))

axes[0].imshow(A, cmap='hot')

axes[0].annotate('npixels = {}'.format(np.sum(A>0)),

xy=(10, 10), color='white', fontsize=16)

axes[1].imshow(B, cmap='hot')

axes[1].annotate('npixels = {}'.format(np.sum(B>0)),

xy=(10, 10), color='white', fontsize=16)

axes[2].imshow(intersection, cmap='hot')

axes[2].annotate('npixels = {}'.format(np.sum(intersection>0)),

xy=(10, 10), color='white', fontsize=16)

axes[3].imshow(union, cmap='hot')

axes[3].annotate('npixels = {}'.format(np.sum(union>0)),

xy=(10, 10), color='white', fontsize=16)

labels = ['GroundTruth', 'Predicted', 'Intersection', 'Union']

for ind, ax in enumerate(axes):

ax.set_title(labels[ind], fontsize=18)

ax.axis('off')So, for this mask the IoU metric is calculated as:

Thresholding the IoU value (for a single GroundTruth-Prediction comparison)

Next, we sweep over a range of IoU thresholds to get a vector for each mask comparison. The threshold values range from 0.5 to 0.95 with a step size of 0.05: (0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95). In other words, at a threshold of 0.5, a predicted object is considered a "hit" if its intersection over union with a ground truth object is greater than 0.5.

阈值化IoU值(对于单个GroundTruth-Prediction比较)这里的评价标准是对应于这个竞赛的

接下来,我们对应于每个mask比对计算出来的IoU值,扫描一系列IoU阈值。阈值范围为0.5至0.95,步长为0.05:(0.5,0.55,0.6,0.65,0.7,0.75,0.8,0.85,0.9,0.95)。换句话说,在阈值为0.5时,如果预测对象与地面实况对象结合的交点大于0.5,则认为该预测对象是“命中”。这样,我们就得到一个对应的vector. 如IoU = 0.6464 这次比对所获得的vector为(1,1,1,0,0,0,0,0,0,0)

Does this IoU hit at each threshold?

0.50 True

0.55 True

0.60 True

0.65 False

0.70 False

0.75 False

0.80 False

0.85 False

0.90 False

0.95 False

Name: GT-P, dtype: bool这个步骤的代码为:

def get_iou_vector(A, B, n):

intersection = np.logical_and(A, B)

union = np.logical_or(A, B)

iou = np.sum(intersection > 0) / np.sum(union > 0)

s = pd.Series(name=n)

for thresh in np.arange(0.5,1,0.05):

s[thresh] = iou > thresh

return s

print('Does this IoU hit at each threshold?')

print(get_iou_vector(A, B, 'GT-P'))Single-threshold precision for a single image单个图像的单阈值精度

At each threshold value t, a precision value is calculated based on the number of true positives (TP), false negatives (FN), and false positives (FP) resulting from comparing the predicted object to all ground truth objects

对于每个阈值t,基于通过将预测对象与标签(mask)对象进行比较而得到的真阳性(TP),假阴性(FN)和误报(FP)的数量来计算精度值。

I think this step causes the confusion. I think the evaluation description of this competition was simply copied from the desc of the 2018 Data Science Bowl (or something similar) competition, where you can predict more than one mask/object per image.

我认为这一步(竞赛度量标准metirc)会引起困惑。我认为本次比赛的评估描述只是简单地从2018年数据科学杯(或类似的)比赛中复制而来,在那里你可以预测每张图像不止一个mask/物体。

According to @William Cukierski's Discussion thread:

https://www.kaggle.com/c/tgs-salt-identification-challenge/discussion/61550#360922

Although this competition metric allows prediction of multiple segmented objects per image, note that we have encoded masks as one object here (see train.csv as an example). You will be best off to predict one mask per image.

Because of we have to predict only one mask per image, the computation below (from the evaluation desc) is a bit confusing:

虽然此竞争指标允许预测每个图像的多个分段对象,但请注意我们在此处将mask编码为一个对象(请参阅train.csv作为示例,即标签区域为1,非标签区域而0,纯黑,相当于二分类)。我们构建模型时每张图像预测一个mask。

因为我们每个图像必须只能预测一个mask,所以下面的计算(来自evaluation desc)有点令人困惑:

This only makes sense if we would have more than one predicted mask/segment per image. In our case I think this would be a better description:

GT mask is empty, your prediction non-empty: (FP) Precision(threshold, image) = 0

GT mask non empty, your prediction empty: (FN) Precision(threshold, image) = 0

GT mask empty, your prediction empty: (TN) Precision(threshold, image) = 1

GT mask non-empty, your prediction non-empty: (TP) Precision(threshold, image) = IoU(GT, pred) > threshold

这只有在每个图像有多个预测的mask/区域(即像宏观的语义分割那样有多个分割)时才有意义。在我们的例子中(二值分割),我认为可以将这个评价标准更好的描述为:

GTmask为空,预测非空:(FP)精度(阈值,图像)= 0 (标签图片为空即为全黑,)

GTmask非空,您的预测为空:(FN)精度(阈值,图像)= 0

GTmask为空,预测为空:(TN)精度(阈值,图像)= 1

GTmask非空,您的预测非空:(TP)精度(阈值,图像)= IoU(GT,pred)>阈值

See @William Cukierski's comment here

If a ground truth is empty and you predict nothing, you get a perfect score for that image. If the ground truth is empty and you predict anything, you get a 0 for that image.

我们重新梳理一下上面的metic : 即 如果 数据图片(ground truth)为空,即没有salt的图片,我们的预测mask也为空,则,这个也测满分。而不为空时:1,我们的预测为空,则结果错误,0分;2,我们的预测不为空(有mask区域,对应于这个比赛,就是有结果mask不为0的区域),此时,就比对本征图片的标签mask,与预测图片的mask的IoU,>阈值 则为1,反之则为0.

Multi-threshold precision for a single image单个图像的多阈值精度

The average precision of a single image is then calculated as the mean of the above precision values at each IoU threshold:对应于上述每个IoU阈值处精度值,求其平均值即单个图像的平均精度值。

Here, we simply take the average of the precision values at each threshold to get our mean precision for the image.

在这里,我们简单地取每个阈值处的精度值的平均值来获得图像的平均精度。

ppt = get_iou_vector(A, B, 'precisions_per_thresholds')

print ("Average precision value for image `{}` is {}".format(im_id, ppt.mean()))上述代码是选取一张图片计算其精度(例子)

Average precision value for image `2bf5343f03` is 0.3Let's see what happens if our prediction is 100% accurate.

# `A` is the target mask

ppt = get_iou_vector(A, A, 'GT-P')

print('Does this IoU hit at each threshold?')

print(ppt)

print ("Average precision value for image `{}` is {}".format(im_id, ppt.mean()))Does this IoU hit at each threshold?

0.50 True

0.55 True

0.60 True

0.65 True

0.70 True

0.75 True

0.80 True

0.85 True

0.90 True

0.95 True

Name: GT-P, dtype: bool

Average precision value for image `2bf5343f03` is 1.0Mean average precision for the dataset 数据集的平均精度

Lastly, the score returned by the competition metric is the mean taken over the individual average precisions of each image in the test dataset.最后,竞赛度量返回的分数是在测试数据集中每个图像的各个平均精度所取的平均值。

Therefore, the leaderboard metric will simply be the mean of the precisions across all the images.

因此,排行榜度量将仅仅是所有图像的精度的平均值。

第二部分,我们看看竞赛中别人的IoU计算方式。

https://www.kaggle.com/shaojiaxin/u-net-with-simple-resnet-blocks-v2-new-loss

def get_iou_vector(A, B): #传入ground truth的mask和预测的mask(一次传入一个batch_size大小)

batch_size = A.shape[0]

metric = [] #度量列表,列表中每张元素用于存放一个batch_size中每张图片的的度量值

for batch in range(batch_size):

t, p = A[batch]>0, B[batch]>0

# if np.count_nonzero(t) == 0 and np.count_nonzero(p) > 0:

# metric.append(0)

# continue

# if np.count_nonzero(t) >= 1 and np.count_nonzero(p) == 0:

# metric.append(0)

# continue

# if np.count_nonzero(t) == 0 and np.count_nonzero(p) == 0:

# metric.append(1)

# continue

intersection = np.logical_and(t, p) #这里用了一个很巧妙的函数来计算交并,

# 主要是得益于数据的形式,可以查看train.csv文件中的数据,

# 有salt的标签部位有数字,而没有的地方为空(0,),数据只给出了有值的部分。

union = np.logical_or(t, p)

iou = (np.sum(intersection > 0) + 1e-10 )/ (np.sum(union > 0) + 1e-10)

# 计算一次比对的IoU

thresholds = np.arange(0.5, 1, 0.05)

s = []

for thresh in thresholds:

s.append(iou > thresh)

# s是这样的一组值,如前面例子所示IoU值为0.6464那个[1,1,1,0,0,0,0,0,0,0]

metric.append(np.mean(s))

# metric实际一次存放一次对比数据的平均值,上面的即为0.3? 是的

# 最终metirc中保存的是每次对比后的值,大小为一个batch_size

return np.mean(metric)

# 一个batch_size的 metirc亦是其均值。

def my_iou_metric(label, pred):

return tf.py_func(get_iou_vector, [label, pred>0.5], tf.float64)

def my_iou_metric_2(label, pred):

return tf.py_func(get_iou_vector, [label, pred >0], tf.float64)

关于上面这种IoU计算方式,该作者的思路来源于下面:

https://www.kaggle.com/donchuk/fast-implementation-of-scoring-metric

# This Python 3 environment comes with many helpful analytics libraries installed

# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python

# For example, here's several helpful packages to load in

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list the files in the input directory

import os

print(os.listdir("../input"))

# Any results you write to the current directory are saved as output.

def get_iou_vector(A, B):

batch_size = A.shape[0]

metric = []

for batch in range(batch_size):

t, p = A[batch], B[batch]

if np.count_nonzero(t) == 0 and np.count_nonzero(p) > 0:

metric.append(0)

continue

if np.count_nonzero(t) >= 1 and np.count_nonzero(p) == 0:

metric.append(0)

continue

if np.count_nonzero(t) == 0 and np.count_nonzero(p) == 0:

metric.append(1)

continue

intersection = np.logical_and(t, p)

union = np.logical_or(t, p)

iou = np.sum(intersection > 0) / np.sum(union > 0)

thresholds = np.arange(0.5, 1, 0.05)

s = []

for thresh in thresholds:

s.append(iou > thresh)

metric.append(np.mean(s))

return np.mean(metric)