sparkstreaming实时读取kakfa到mysql小demo(直读)

步骤:

- 安装部署单机kafka

- 创建mysql表

- sparkstreaming实时消费

一.安装kafka

注:出于方便以及机器问题,使用单机部署,并不需要另外安装zookeeper,使用kafka自带的zookeeper

1.下载https://kafka.apache.org/downloads (使用版本:kafka_2.11-0.10.0.1.tgz)

2.编辑server.properties文件

host.name=内网地址 #kafka绑定的interface

advertised.listeners=PLAINTEXT://外网映射地址:9092 # 注册到zookeeper的地址和端口#添加如上两个地址(云主机!)

log.dirs=/opt/software/kafka/logs#配置log日志地址

3.bin/zookeeper-server-start.sh ../config/zookeeper.properties

如果使用bin/zookeeper-server-start.sh config/zookeeper.properties会导致无法找到config目录而报错。所以最好将kafka配置到全局环境变量中

使用nohup /zookeeper-server-start.sh ../config/zookeeper.properties &

启动后台服务

4.bin/kafka-server-start.sh ../config/server.properties

可以用使用:nohup kafka-server-start.sh config/server.properties &

启动后台服务

5.bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test#创建topic

6.bin/kafka-topics.sh --list --zookeeper localhost:2181

测试是否创建成功。

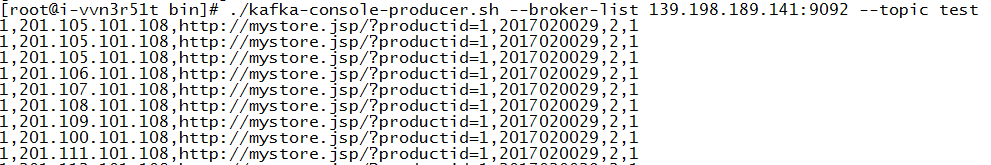

7.启动生产者:bin/kafka-console-producer.sh --broker-list localhost/或者云主机外网ip:9092 --topic test

二.安装mysql

1.使用yum安装mysql5.6

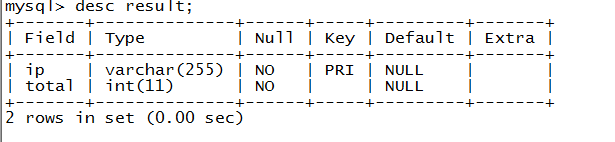

cat >/etc/yum.repos.d/MySQL5.6.repo<2.创建测试表

create database ruozedata;

use ruozedata;

grant all privileges on ruozedata.* to ruoze@'%' identified by '123456';

CREATE TABLE `test` ( `ip` varchar(255) NOT NULL, `total` int(11) NOT NULL, PRIMARY KEY (`ip`) ) ENGINE=InnoDB DEFAULT CHARSET=latin1;

三.sparkstreamingtest_demo

package com.ruozedata.G5

import org.apache.log4j.Level

import org.apache.log4j.Logger

import org.apache.spark.SparkConf

import org.apache.spark.streaming.{Seconds, StreamingContext}

import kafka.serializer.StringDecoder

import org.apache.spark.streaming.kafka.KafkaUtils

import java.sql.DriverManager

import java.sql.PreparedStatement

import java.sql.Connection

object KafkatoSSC {

def main(args: Array[String]): Unit = {

// 减少日志输出

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

val sparkConf = new SparkConf().setAppName("KafkatoSSC").setMaster("local[2]")

val sparkStreaming = new StreamingContext(sparkConf, Seconds(10))

// 创建topic名称

val topic = Set("test")

// 制定Kafka的broker地址

val kafkaParams = Map[String, String]("metadata.broker.list" -> "139.198.189.141:9092")

// 创建DStream,接受kafka数据irectStream[String, String, StringDecoder,StringDecoder](sparkStreaming, kafkaParams, topic)

val kafkaStream = KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder](sparkStreaming, kafkaParams, topic)

val line = kafkaStream.map(e => {

new String(e.toString())

})

// 获取数据

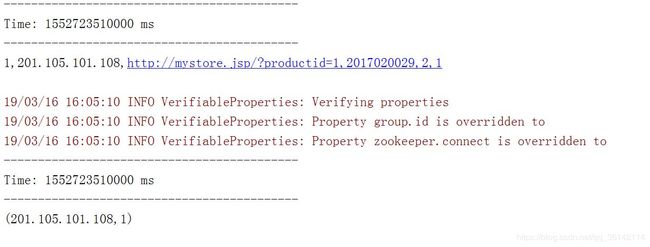

val logRDD = kafkaStream.map(_._2)

// 将数据打印在屏幕

logRDD.print()

// 对接受的数据进行分词处理

val datas = logRDD.map(line => {

// 201.105.101.108,productid=1 输入数据

val index: Array[String] = line.split(",")

val ip = index(0);

(ip, 1)

})

// 打印在屏幕

datas.print()

// 将数据保存在mysql数据库

datas.foreachRDD(cs => {

var conn: Connection = null;

var ps: PreparedStatement = null;

try {

Class.forName("com.mysql.jdbc.Driver").newInstance();

cs.foreachPartition(f => {

conn = DriverManager.getConnection(

"jdbc:mysql://120.142.206.17:3306/ruozedata?useUnicode=true&characterEncoding=utf8",

"ruoze",

"123456");

ps = conn.prepareStatement("insert into result values(?,?)");

f.foreach(s => {

ps.setString(1, s._1);

ps.setInt(2, s._2);

ps.executeUpdate();

})

})

} catch {

case t: Throwable => t.printStackTrace() // TODO: handle error

} finally {

if (ps != null) {

ps.close()

}

if (conn != null) {

conn.close();

}

}

})

sparkStreaming.start()

sparkStreaming.awaitTermination()

}

}

四.pom文件

4.0.0

com.ruozedata

train-scala

1.0

2008

2.11.8

2.2.0

2.6.0-cdh5.7.0

scala-tools.org

Scala-Tools Maven2 Repository

http://scala-tools.org/repo-releases

cloudera

cloudera

https://repository.cloudera.com/artifactory/cloudera-repos/

scala-tools.org

Scala-Tools Maven2 Repository

http://scala-tools.org/repo-releases

org.scala-lang

scala-library

${scala.version}

org.apache.commons

commons-lang3

3.5

org.apache.spark

spark-sql_2.11

${spark.version}

org.apache.spark

spark-streaming_2.11

${spark.version}

org.apache.spark

spark-streaming-flume_2.11

${spark.version}

org.apache.spark

spark-streaming-kafka-0-8_2.11

${spark.version}

org.apache.hadoop

hadoop-client

${hadoop.version}

mysql

mysql-connector-java

5.1.28

provided

src/main/scala

src/test/scala

org.scala-tools

maven-scala-plugin

compile

testCompile

${scala.version}

-target:jvm-1.5

org.apache.maven.plugins

maven-eclipse-plugin

true

ch.epfl.lamp.sdt.core.scalabuilder

ch.epfl.lamp.sdt.core.scalanature

org.eclipse.jdt.launching.JRE_CONTAINER

ch.epfl.lamp.sdt.launching.SCALA_CONTAINER

maven-assembly-plugin

jar-with-dependencies

org.scala-tools

maven-scala-plugin

${scala.version}