ELEGANT: Exchanging Latent Encodings with GAN for Transferring Multiple Face Attributes


Taihong Xiao, Jiapeng Hong, and Jinwen Ma

Abstract

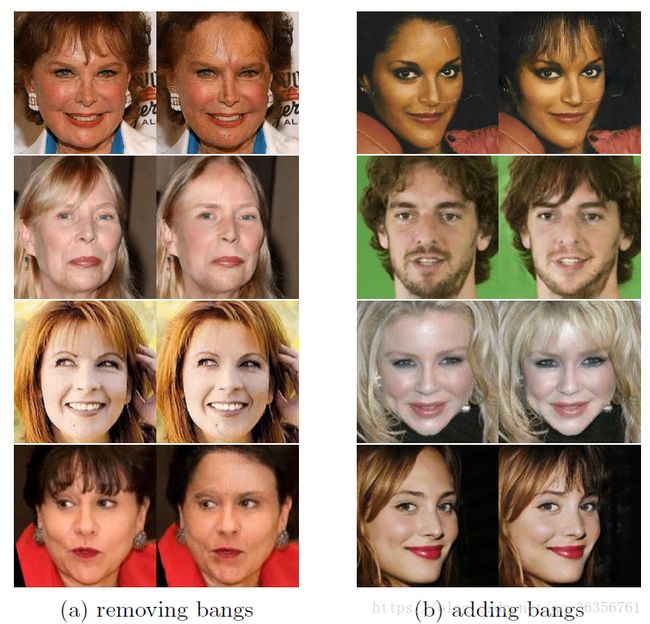

task: face attribute transfer

existing method: image-to-image translation

limitations: (1) failing to generate images by exemplars; (2) unable to deal with multiple face attributes simultaneously; (3) low-quality generated images

solution: a novel model that receives two images with different attributes as inputs. All attributes are encoded in the latent space in a disentangled manner (somewhat similar to NICE and Glow?). The model learns residual images to facilitate training on higher-resolution images, and generates high-quality images with finer details and fewer artifacts.

Introduction

transferring face attributes ⊂ conditional image generation. A source face image is modified to contain the targeted attribute, while the person's identity should be preserved during the transfer.

existing method:

| method | principle | drawbacks |
|---|---|---|
| Deep Manifold Traversal | approximates the natural image manifold and computes the attribute vector from the source domain to the target domain using maximum mean discrepancy (MMD) | unbearable time and memory cost |
| Visual Analogy-Making | uses a pair of reference images of the same person in different states to specify the attribute vector. Under the Linear Feature Space assumption, image transfer can be formulated as $I_2 = f^{-1}(f(I_1) + v)$, where $f$ is the encoding (feature-extracting) function and $v$ is the attribute vector | the attribute vector can differ between classes |
| GAN-based image-to-image translation | dual learning | inappropriate for face attribute transfer according to the Invariance of Domain Theorem |
| conditional image generation | receives image labels as the condition for generating images with the desired attributes | unable to generate images by exemplars |
| BicycleGAN | introduces a noise term to increase diversity | unable to generate images with specified attributes |
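To make the Linear Feature Space assumption behind visual analogy-making concrete, here is a toy sketch. It assumes the feature extractor $f$ is an invertible linear map (a random matrix standing in for a learned encoder) and treats images as flat vectors; `f`, `f_inv`, `ref_with` and `ref_without` are hypothetical names for illustration only.

```python
import numpy as np

# Toy illustration of I2 = f^{-1}(f(I1) + v) under the Linear Feature Space assumption.
# Assumption: f is an invertible linear map; a real model would use a learned encoder.
rng = np.random.default_rng(0)
dim = 4
W = rng.normal(size=(dim, dim)) + dim * np.eye(dim)  # shifted diagonal keeps W invertible

def f(x):
    """Hypothetical feature extractor: a linear map."""
    return W @ x

def f_inv(z):
    """Inverse of the hypothetical feature extractor."""
    return np.linalg.solve(W, z)

# A reference pair (same person, two states) defines the attribute vector v.
ref_without = rng.normal(size=dim)
ref_with = rng.normal(size=dim)
v = f(ref_with) - f(ref_without)

# Transfer the attribute to a new image I1.
I1 = rng.normal(size=dim)
I2 = f_inv(f(I1) + v)
```

With a linear $f$, the same $v$ shifts every image by the same pixel-space difference, which is exactly what the assumption asserts; the drawback listed in the table is that real attribute vectors need not be shared across classes.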

Purpose and Intuition of Our Work

| purpose | method |
|---|---|
| image generation by exemplars | use a reference image, encoded as a latent feature, as the condition for image generation |
| dealing with multiple face attributes simultaneously | disentangle the attributes in the latent space |
| satisfying image quality | residual learning |

Our Method

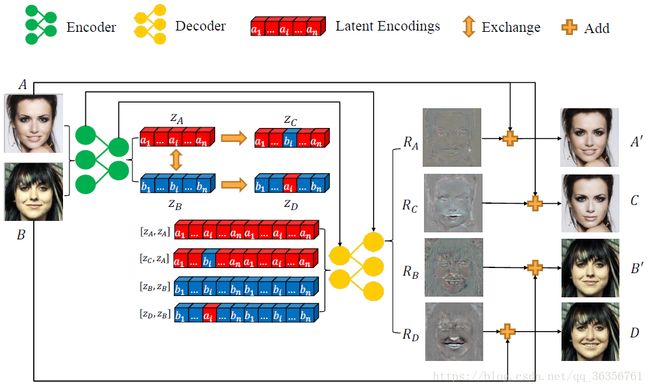

The ELEGANT Model

$A \in$ positive set, i.e., images with the $i$-th attribute

$B \in$ negative set, i.e., images without the $i$-th attribute

$A$ and $B$ are not matched (unpaired)

use an encoder Enc to obtain the latent encodings $z_A = \mathrm{Enc}(A)$ and $z_B = \mathrm{Enc}(B)$

split the tensor $z_A$ into $n$ parts along its channel dimension; the $i$-th part $z_A[i]$ encodes the information of the $i$-th attribute of the image
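A minimal sketch of the channel split, assuming a channel-first latent of shape (C, H, W) with C divisible by $n$; the sizes are arbitrary, and `parts[i]` plays the role of $z_A[i]$.

```python
import numpy as np

# Assumptions (not from the paper): channel-first layout, C divisible by n_attrs,
# and arbitrary toy sizes. A batched model would split along axis 1 of (N, C, H, W).
n_attrs = 2
C, H, W = 8, 4, 4
z_A = np.random.default_rng(0).normal(size=(C, H, W))

# n_attrs blocks of shape (C // n_attrs, H, W); block i is meant to carry attribute i.
parts = np.split(z_A, n_attrs, axis=0)
```

Concatenating the blocks back along the channel axis recovers $z_A$ exactly, so the split itself loses no information; the disentanglement has to come from training.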

problem: such a disentangled representation has to be learned

solution: iterative training strategy (train the model with respect to a single attribute at a time and cycle through all attributes repeatedly). Given $A$ and $B$ that differ in the $i$-th attribute (regardless of the attributes at other positions), exchange the $i$-th parts of their latent encodings $z_A$ and $z_B$:

$$(z_C, z_D) = \mathrm{swap}(z_A, z_B, i)$$

so $z_C$ is $z_A$ with its $i$-th part taken from $z_B$, and $z_D$ is $z_B$ with its $i$-th part taken from $z_A$.
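The swap step and the attribute-cycling schedule can be sketched as below. This assumes channel-first latents of shape (C, H, W) with C divisible by $n$; `swap` follows the notation above, while `schedule` is a hypothetical illustration of "one attribute per step, cycling through all $n$". In real training a new pair $(A, B)$ differing in attribute $i$ would be sampled at each step.

```python
import numpy as np

def swap(z_A, z_B, i, n):
    """Exchange the i-th of n channel blocks between two latent encodings.

    Assumption: encodings are channel-first arrays (C, H, W) with C divisible by n.
    Returns (z_C, z_D): z_C is z_A with block i from z_B, z_D is the reverse.
    """
    parts_A = np.split(z_A, n, axis=0)
    parts_B = np.split(z_B, n, axis=0)
    parts_A[i], parts_B[i] = parts_B[i], parts_A[i]
    return np.concatenate(parts_A, axis=0), np.concatenate(parts_B, axis=0)

n = 3
rng = np.random.default_rng(0)
z_A = rng.normal(size=(6, 2, 2))  # toy latents: 3 blocks of 2 channels each
z_B = rng.normal(size=(6, 2, 2))
z_C, z_D = swap(z_A, z_B, 1, n)

# Iterative training: attribute index used at each step, cycling over all n attributes.
schedule = [step % n for step in range(7)]
```

Swapping the same block twice restores the original pair, which is a quick sanity check on any implementation of this step.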

decode: the decoder Dec learns residual images, which are added to the input images, rather than generating the images directly.
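A sketch of residual decoding: the decoder output is treated as a residual and added to an input image. `toy_dec` is a hypothetical stand-in for Dec, and pairing $z_C$ with image $A$ (whose identity $C$ should keep) is our reading of the method, stated here as an assumption.

```python
import numpy as np

def decode_residual(dec, z, x):
    """Residual decoding: dec(z) predicts a residual that is added to image x,
    instead of predicting the whole image from scratch."""
    return x + dec(z)

def toy_dec(z):
    # Hypothetical stand-in for Dec: maps a (C, H, W) latent to a (3, H, W) residual
    # by averaging channels; a real decoder would use (transposed) conv layers.
    return np.tanh(z.mean(axis=0, keepdims=True)).repeat(3, axis=0)

rng = np.random.default_rng(0)
A = rng.uniform(size=(3, 4, 4))      # toy RGB image, channel-first
z_C = rng.normal(size=(8, 4, 4))     # toy latent after the swap
C = decode_residual(toy_dec, z_C, A)
```

One motivation for this design: if the decoder outputs a zero residual, the input passes through unchanged, so the network only needs to model the attribute-specific change.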

generator = encoder + decoder

discriminator: multi-scale; two discriminators with identical network structure that operate at different image scales. $D_1$ (larger scale) guides Enc and Dec to produce finer details; $D_2$ (smaller scale) handles the overall image content so as to avoid generating grimaces.
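A sketch of how the two discriminators could see different scales of the same image. The 2x average pooling for $D_2$ is an assumption (these notes do not state the downsampling used), and `make_toy_disc` is a hypothetical stand-in: both discriminators share one "architecture" and differ only in a parameter.

```python
import numpy as np

def avg_pool2x(x):
    """2x2 average pooling over the spatial axes of a (C, H, W) image (H, W even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def make_toy_disc(weight):
    # Hypothetical discriminator: identical structure, different parameter.
    def disc(x):
        return float(np.tanh(weight * x.mean()))
    return disc

D1 = make_toy_disc(0.5)   # full resolution: fine details
D2 = make_toy_disc(-0.3)  # downsampled input: overall content

def multiscale_scores(x):
    """Score an image at two scales; an adversarial loss would combine both."""
    return D1(x), D2(avg_pool2x(x))

x = np.arange(16, dtype=float).reshape(1, 4, 4)
s1, s2 = multiscale_scores(x)
```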

Loss Functions