ES7.x,相关摘要【更新完毕,更新至分词器】

前言:

现在是2019.10.11,最近工作比较忙,小灶时间比较少,现在工作结束,可以继续学习了,敲开心!

- index与create的区别: index的功能比create强一点,也是为什么广泛使用的原因,他的作用是如果文档不存在,则索引新的文档,如果文档已经存在,则会删除现有文档,新的文档会被索引,并且版本号verson会被+1。这点和update还是有区别的。

- index与update的却别: update方法不会像index一样删除原来的文档,而是实现真正的数据更新,但是如果使用update方法,在请求体body中就要指明doc字段,例如

POST user/_update/1 { "doc":{ "name":"xxx" } }下面是简单的crud操作

POST user/_doc { "user":"Mike", "post_date" : "2019-10-11 17:44:00", "message" : "trying out kibana" } PUT user/_doc/1 //这里会默认使用index方式 { "user":"Mike", "post_date" : "2019-10-11 17:44:00", "message" : "trying out kibana" } GET user/_doc/1 //指定方式,因为mike之前已经创建过了,又使用create方式,所以会报错 PUT user/_doc/1?op_type=create { "user":"Mike", "post_date" : "2019-10-11 17:44:00", "message" : "trying out kibana" }

2019-10-12

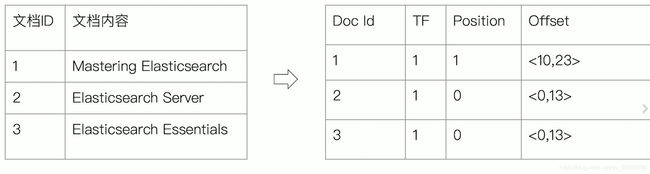

- 正排索引:举个栗子,书本的章节与目录的关系,看到第几页,你就知道第几章了,这就是正派索引,在搜索引擎中对应的就是-文档id与文档内容和单词的关联。

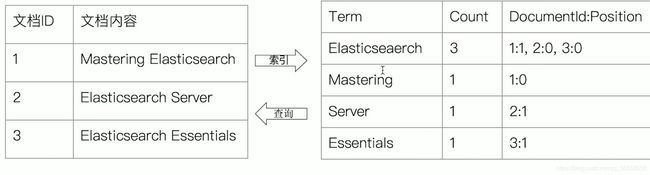

- 倒排索引: 举个栗子,书本的单词,出现在第几页,根据单词,你就知道所在页面,在搜索引擎中就是单词到文档id的对应关系。

上图左侧是正排索引,右侧为倒排索引

- 倒排索引有两个部分

- 分词-standard

GET _analyze { "analyzer": "standard", "text": "i am PHPerJiang" }es默认的分词器是standard,以下是分词结果

{ "tokens" : [ { "token" : "i", "start_offset" : 0, "end_offset" : 1, "type" : "", "position" : 0 }, { "token" : "am", "start_offset" : 2, "end_offset" : 4, "type" : " ", "position" : 1 }, { "token" : "phperjiang", "start_offset" : 5, "end_offset" : 15, "type" : " ", "position" : 2 } ] } standard分词器会去除符号,并将大写转换为小写,然后根据空格进行分词

-

分词-whitespace

GET _analyze { "analyzer": "whitespace", "text": "33 i am PHPer-jiang,i am so good。" }分词结果

{ "tokens" : [ { "token" : "33", "start_offset" : 0, "end_offset" : 2, "type" : "word", "position" : 0 }, { "token" : "i", "start_offset" : 3, "end_offset" : 4, "type" : "word", "position" : 1 }, { "token" : "am", "start_offset" : 5, "end_offset" : 7, "type" : "word", "position" : 2 }, { "token" : "PHPer-jiang,i", "start_offset" : 8, "end_offset" : 21, "type" : "word", "position" : 3 }, { "token" : "am", "start_offset" : 22, "end_offset" : 24, "type" : "word", "position" : 4 }, { "token" : "so", "start_offset" : 25, "end_offset" : 27, "type" : "word", "position" : 5 }, { "token" : "good。", "start_offset" : 28, "end_offset" : 33, "type" : "word", "position" : 6 } ] }whitespace分词器只根据空格进行分词,保留符号

-

分词-stop

GET _analyze { "analyzer": "stop", "text": "33 i am PHPer-jiang,i am so good。the history is new history" }分词结果

{ "tokens" : [ { "token" : "i", "start_offset" : 3, "end_offset" : 4, "type" : "word", "position" : 0 }, { "token" : "am", "start_offset" : 5, "end_offset" : 7, "type" : "word", "position" : 1 }, { "token" : "phper", "start_offset" : 8, "end_offset" : 13, "type" : "word", "position" : 2 }, { "token" : "jiang", "start_offset" : 14, "end_offset" : 19, "type" : "word", "position" : 3 }, { "token" : "i", "start_offset" : 20, "end_offset" : 21, "type" : "word", "position" : 4 }, { "token" : "am", "start_offset" : 22, "end_offset" : 24, "type" : "word", "position" : 5 }, { "token" : "so", "start_offset" : 25, "end_offset" : 27, "type" : "word", "position" : 6 }, { "token" : "good", "start_offset" : 28, "end_offset" : 32, "type" : "word", "position" : 7 }, { "token" : "history", "start_offset" : 37, "end_offset" : 44, "type" : "word", "position" : 9 }, { "token" : "new", "start_offset" : 48, "end_offset" : 51, "type" : "word", "position" : 11 }, { "token" : "history", "start_offset" : 52, "end_offset" : 59, "type" : "word", "position" : 12 } ] }stop分词器与standard分词器相比,会过滤掉 the,is,in 等修饰性词语,去除符号和数字,然后进行分词,同样是大写转为小写

-

分词-keyword

GET _analyze { "analyzer": "keyword", "text": "33 i am PHPer-jiang,i am so good。the history is new history" }分词结果

{ "tokens" : [ { "token" : "33 i am PHPer-jiang,i am so good。the history is new history", "start_offset" : 0, "end_offset" : 59, "type" : "word", "position" : 0 } ] }keyword分词器其实不会进行分词,text当做一个整体分词。

-

分词-pattern

GET _analyze { "analyzer": "pattern", "text": "33 i am PHPer-jiang,i am so good。the history is new history % hahah" }结果如下

{ "tokens" : [ { "token" : "33", "start_offset" : 0, "end_offset" : 2, "type" : "word", "position" : 0 }, { "token" : "i", "start_offset" : 3, "end_offset" : 4, "type" : "word", "position" : 1 }, { "token" : "am", "start_offset" : 5, "end_offset" : 7, "type" : "word", "position" : 2 }, { "token" : "phper", "start_offset" : 8, "end_offset" : 13, "type" : "word", "position" : 3 }, { "token" : "jiang", "start_offset" : 14, "end_offset" : 19, "type" : "word", "position" : 4 }, { "token" : "i", "start_offset" : 20, "end_offset" : 21, "type" : "word", "position" : 5 }, { "token" : "am", "start_offset" : 22, "end_offset" : 24, "type" : "word", "position" : 6 }, { "token" : "so", "start_offset" : 25, "end_offset" : 27, "type" : "word", "position" : 7 }, { "token" : "good", "start_offset" : 28, "end_offset" : 32, "type" : "word", "position" : 8 }, { "token" : "the", "start_offset" : 33, "end_offset" : 36, "type" : "word", "position" : 9 }, { "token" : "history", "start_offset" : 37, "end_offset" : 44, "type" : "word", "position" : 10 }, { "token" : "is", "start_offset" : 45, "end_offset" : 47, "type" : "word", "position" : 11 }, { "token" : "new", "start_offset" : 48, "end_offset" : 51, "type" : "word", "position" : 12 }, { "token" : "history", "start_offset" : 52, "end_offset" : 59, "type" : "word", "position" : 13 }, { "token" : "hahah", "start_offset" : 62, "end_offset" : 67, "type" : "word", "position" : 14 } ] }pattern分词器是正则分词,采用\W+,即非字母的符号进行分词,如上 %haha %、空格、逗号、句号均为非字母字符,所以进行了分词

-

分词器-analysis-icu

GET _analyze { "analyzer": "icu_analyzer", "text": "八百标兵奔北坡" }分词结果

{ "tokens" : [ { "token" : "八百", "start_offset" : 0, "end_offset" : 2, "type" : "", "position" : 0 }, { "token" : "标兵", "start_offset" : 2, "end_offset" : 4, "type" : " ", "position" : 1 }, { "token" : "奔北", "start_offset" : 4, "end_offset" : 6, "type" : " ", "position" : 2 }, { "token" : "坡", "start_offset" : 6, "end_offset" : 7, "type" : " ", "position" : 3 } ] } icu分词会根据中文磁性进行分词,推荐两个分词,ik,thula

-

分词器-ik

-

安装:

-

进入es的bin目录执行

elasticsearch-plugin list,查看当前已有插件

-

若没有analysis-ik分词插件则下载安装与es相同版本或者高于es版本的插件,低版本的安装会报错

elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.0/elasticsearch-analysis-ik-7.4.0.zip

-

-

使用

-

ik_smart

GET _analyze { "analyzer": "ik_smart", "text": "中华人民共和国" }分词结果

{ "tokens" : [ { "token" : "中华人民共和国", "start_offset" : 0, "end_offset" : 7, "type" : "CN_WORD", "position" : 0 } ] }ik_smart会根据最粗可颗粒度拆分,如中华人民共和国,会拆分为中华人民共和国,适合pharse短语查询

-

ik_max_word

GET _analyze { "analyzer": "ik_max_word", "text": "中华人民共和国" }分词如下

{ "tokens" : [ { "token" : "中华人民共和国", "start_offset" : 0, "end_offset" : 7, "type" : "CN_WORD", "position" : 0 }, { "token" : "中华人民", "start_offset" : 0, "end_offset" : 4, "type" : "CN_WORD", "position" : 1 }, { "token" : "中华", "start_offset" : 0, "end_offset" : 2, "type" : "CN_WORD", "position" : 2 }, { "token" : "华人", "start_offset" : 1, "end_offset" : 3, "type" : "CN_WORD", "position" : 3 }, { "token" : "人民共和国", "start_offset" : 2, "end_offset" : 7, "type" : "CN_WORD", "position" : 4 }, { "token" : "人民", "start_offset" : 2, "end_offset" : 4, "type" : "CN_WORD", "position" : 5 }, { "token" : "共和国", "start_offset" : 4, "end_offset" : 7, "type" : "CN_WORD", "position" : 6 }, { "token" : "共和", "start_offset" : 4, "end_offset" : 6, "type" : "CN_WORD", "position" : 7 }, { "token" : "国", "start_offset" : 6, "end_offset" : 7, "type" : "CN_CHAR", "position" : 8 } ] }ik_max_word会根据最细颗粒度分词,适合term-query查询。

-

-

2019-10-23更

- bulk批量处理

POST _bulk {"index":{"_index":"user","_id":1}} {"name":"PHPer"} {"create":{"_index":"user1","_id":1}} {"name":"Gopher"} {"update":{"_index":"user1","_id":1}} {"doc":{"name":"PHPer"}} {"delete":{"_index":"user1","_id":1}}index: 创建,如果已经存在,则删除已有的保存新的,并且版本号+1。而create发现id已经存在,如果再创建会报错,update要指定修改数据是对doc进行操作的,以下是返回结果

{ "took" : 21, "errors" : false, "items" : [ { "index" : { "_index" : "user", "_type" : "_doc", "_id" : "1", "_version" : 26, "result" : "updated", "_shards" : { "total" : 2, "successful" : 1, "failed" : 0 }, "_seq_no" : 25, "_primary_term" : 1, "status" : 200 } }, { "create" : { "_index" : "user1", "_type" : "_doc", "_id" : "1", "_version" : 1, "result" : "created", "_shards" : { "total" : 2, "successful" : 1, "failed" : 0 }, "_seq_no" : 12, "_primary_term" : 1, "status" : 201 } }, { "update" : { "_index" : "user1", "_type" : "_doc", "_id" : "1", "_version" : 2, "result" : "updated", "_shards" : { "total" : 2, "successful" : 1, "failed" : 0 }, "_seq_no" : 13, "_primary_term" : 1, "status" : 200 } }, { "delete" : { "_index" : "user1", "_type" : "_doc", "_id" : "1", "_version" : 3, "result" : "deleted", "_shards" : { "total" : 2, "successful" : 1, "failed" : 0 }, "_seq_no" : 14, "_primary_term" : 1, "status" : 200 } } ] }我们能看到,bluk每条都会产生一个反馈,反复执行会发现create操作是处于报错状态的,因为要操作的文档在es中已经存在了。而index、update、delete操作都能正常执行且版本号+1.