Python Crawler in Practice, Project 2 | Scraping a Dynamic Website (Crawling Movie Site Data)

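1. What is a dynamic website?

Unlike a static site, a dynamic site's pages usually rely on extra files such as JS and CSS, and the content those files load is an integral part of the page. How does a browser handle them? It first downloads the HTML file and then automatically downloads the JS and other files the page needs. A crawler does not do this for us, so to scrape the dynamically loaded information we have to construct those requests ourselves.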
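To make the "HTML first, extra files afterwards" idea concrete, here is a minimal sketch that lists the scripts and stylesheets an HTML document refers to, which are the files a browser would go on to fetch. The sample page and the names `ResourceLister` and `list_resources` are made up for illustration; the data requests a script issues at run time will not show up in such a list.

```python
from html.parser import HTMLParser


class ResourceLister(HTMLParser):
    """Collect the URLs of scripts and stylesheets referenced by a page."""

    def __init__(self):
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and attrs.get("src"):
            self.resources.append(attrs["src"])
        elif tag == "link" and attrs.get("rel") == "stylesheet" and attrs.get("href"):
            self.resources.append(attrs["href"])


def list_resources(html):
    """Return the referenced script and stylesheet URLs in document order."""
    lister = ResourceLister()
    lister.feed(html)
    return lister.resources


# Made-up page, used only for illustration.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/static/site.css">
<script src="/static/rating.js"></script>
</head><body><div id="rating"></div></body></html>
"""

print(list_resources(SAMPLE_HTML))
```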

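2. How do we find these extra files?

Example:

Open a movie page, http://movie.mtime.com/103937/, press F12, and find the "Network" tab in the developer tools. I am using Firefox, as shown:

Refresh the page:

Besides the HTML file, the page also loads other files such as CSS and JS.

For example, the rating information on this page is loaded dynamically by the JS file in the row I clicked (highlighted in blue in the screenshot above). How did I find that file? Unfortunately, for now I can only say it comes from experience: dynamically loaded information mostly arrives in JS files, so we can look through the response text of the js and xhr entries to check whether it contains the data we want. That usually finds it.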
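The manual search described above can be sketched as a simple keyword scan. This assumes the response bodies have already been collected somehow (for instance copied out of the Network panel); the `CAPTURED` dictionary, its URLs, and the function name are hypothetical.

```python
def find_responses_containing(responses, keyword):
    """Return the URLs whose response body contains the keyword."""
    return [url for url, body in responses.items() if keyword in body]


# Hypothetical captured responses: URL -> response text.
CAPTURED = {
    "http://example.com/page.html": "<html><div id='rating'></div></html>",
    "http://example.com/rating.js": 'var result = {"value": {"boxOffice": "10.25"}};',
}

# Look for a value that is visible in the browser but missing from the HTML.
print(find_responses_containing(CAPTURED, "10.25"))
```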

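3. What are these dynamic files for, and how do we download them?

As mentioned, these files load page information dynamically. For example, the "票房:10.25亿元" (box office: 1.025 billion yuan) shown in the screenshot above is data we want to scrape, yet it does not appear in the page's HTML, so we can infer that it is loaded by one of these JS files.

So how do we download them? Click the file, then click "Headers"; you will see tabs such as "Headers", "Cookies", and "Params", as shown:

There we can see the request URL of this JS file. The URL has a regular structure in which only three parts change: the movie's page URL, a timestamp, and the movie's ID. The movie URL and ID can be taken from the static HTML page, and the timestamp can be generated ourselves, so we can request the JS file's URL directly and get the data. The data comes back in dictionary form and can be seen under "Response" (see the screenshot below).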
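As a rough sketch of how the three changing parts fit into the request URL, the helper below assembles it with `urllib.parse.urlencode`. The endpoint and parameter names are the ones used in the project code later in this post; the function name `build_rating_url` is made up, and it is an assumption that the server accepts the percent-encoded movie URL that `urlencode` produces.

```python
import time
from urllib.parse import parse_qs, urlencode, urlsplit

API_ENDPOINT = "http://service.library.mtime.com/Movie.api"


def build_rating_url(movie_url, movie_id, now=None):
    """Build the rating/box-office request URL for one movie."""
    if now is None:
        now = time.localtime()
    # Timestamp followed by the fixed suffix used in the project code.
    t = time.strftime("%Y%m%d%H%M%S3282", now)
    params = [
        ("Ajax_CallBack", "true"),
        ("Ajax_CallBackType", "MTime.Library.Services"),
        ("Ajax_CallBackMethod", "GetMovieOverviewRating"),
        ("Ajax_CrossDomain", "1"),
        ("Ajax_RequestUrl", movie_url),     # changes per movie
        ("t", t),                           # changes per request
        ("Ajax_CallBackArgument0", movie_id),  # changes per movie
    ]
    return API_ENDPOINT + "?" + urlencode(params)


url = build_rating_url("http://movie.mtime.com/103937/", "103937")
print(url)
# Decode the query string again to check the three variable parts.
print(parse_qs(urlsplit(url).query)["Ajax_RequestUrl"])
```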

The response data can be processed with the json module, which is convenient.

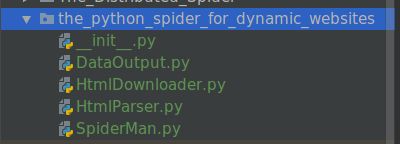
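A minimal sketch of that processing step, assuming the response has the shape `var name = {...};` (the project code below extracts whatever sits between "=" and ";"). The sample string and its values are invented for illustration, and `extract_json` is a hypothetical helper.

```python
import json
import re


def extract_json(response_text):
    """Pull the object literal out of 'var x = {...};' and parse it."""
    # Greedy match up to the last '}' that is followed by ';', so a ';'
    # inside a string value does not cut the JSON short.
    match = re.search(r"=\s*(\{.*\})\s*;", response_text, re.S)
    if match is None:
        return None
    return json.loads(match.group(1))


# Invented sample response, not real data from the site.
SAMPLE = ('var result_1 = { "value": {"isRelease": true, '
          '"movieTitle": "Example; Part 2", '
          '"movieRating": {"RatingFinal": 7.5}} };')

data = extract_json(SAMPLE)
print(data["value"]["movieTitle"])
print(data["value"]["movieRating"]["RatingFinal"])
```

The result is an ordinary Python dictionary, so nested fields can be read with `get` or indexing.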

4. Project structure and code:

4.1. Directory structure:

4.2. Code modules:

1. The download method of the HtmlDownloader module downloads the page content:

import requests


class HtmlDownloader(object):

    def download(self, url):
        """Download a page and return its text, or None on failure."""
        if url is None:
            return None
        user_agent = 'Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)'
        headers = {'User-Agent': user_agent}
        response = requests.get(url, headers=headers)
        if response.status_code == 200:
            response.encoding = 'utf-8'
            return response.text
        return None

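2. The HtmlParser module collects the movie URLs found on the start page, which are used to locate each movie's dynamic JS file, and then parses the data we need from those files.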

import re
import json


class HtmlParser(object):

    def parser_url(self, page_url, response):
        """Return the unique (movie_url, movie_id) pairs found in the page."""
        if not response:
            return None
        # Escape the dots so that they match literally
        pattern = re.compile(r'(http://movie\.mtime\.com/(\d+)/)')
        urls = pattern.findall(response)
        if urls:
            # Remove duplicate URLs
            return list(set(urls))
        else:
            return None

    def parser_json(self, page_url, response):
        '''
        Parse the response of the dynamic request
        :param page_url: URL of the dynamic request
        :param response: response text
        :return: tuple of movie data, or None on failure
        '''
        if not response:
            return None
        # Extract the content between "=" and ";"
        pattern = re.compile(r'=(.*?);')
        matches = pattern.findall(response)
        if not matches:
            return None
        try:
            # Load the string with the json module
            value = json.loads(matches[0])
            isRelease = value.get('value').get('isRelease')
        except Exception as e:
            print('JSON error:', e)
            return None
        if isRelease:
            if value.get('value').get('hotValue') is None:
                return self._parser_release(page_url, value)
            else:
                return self._parser_no_release(page_url, value, isRelease=2)
        else:
            return self._parser_no_release(page_url, value)

    def _parser_release(self, page_url, value):
        '''
        Parse a movie that has already been released
        :param page_url: movie link
        :param value: JSON data
        :return: tuple of movie data, or None on failure
        '''
        try:
            isRelease = 1
            movieRating = value.get('value').get('movieRating')
            boxOffice = value.get('value').get('boxOffice')
            movieTitle = value.get('value').get('movieTitle')
            RPictureFinal = movieRating.get('RPictureFinal')
            RStoryFinal = movieRating.get('RStoryFinal')
            RDirectoryFinal = movieRating.get('RDirectoryFinal')
            ROtherFinal = movieRating.get('ROtherFinal')
            RatingFinal = movieRating.get('RatingFinal')
            MovieId = movieRating.get('MovieId')
            Usercount = movieRating.get('Usercount')
            AttitudeCount = movieRating.get('AttitudeCount')
            TotalBoxOffice = boxOffice.get('TotalBoxOffice')
            TotalBoxOfficeUnit = boxOffice.get('TotalBoxOfficeUnit')
            TodayBoxOffice = boxOffice.get('TodayBoxOffice')
            TodayBoxOfficeUnit = boxOffice.get('TodayBoxOfficeUnit')
            ShowDays = boxOffice.get('ShowDays')
            # Assumes the ranking is stored under the 'Rank' key; falls back to 0
            Rank = boxOffice.get('Rank', 0)
            # Return the extracted fields
            return (
                MovieId, movieTitle, RatingFinal,
                ROtherFinal, RPictureFinal, RDirectoryFinal,
                RStoryFinal, Usercount, AttitudeCount,
                TotalBoxOffice+TotalBoxOfficeUnit,
                TodayBoxOffice+TodayBoxOfficeUnit,
                Rank, ShowDays, isRelease
            )
        except Exception:
            print(page_url, value)
            return None

    def _parser_no_release(self, page_url, value, isRelease=0):
        '''
        Parse a movie that has not been released yet
        :param page_url: movie link
        :param value: JSON data
        :param isRelease: release flag stored with the record
        :return: tuple of movie data, or None on failure
        '''
        try:
            movieRating = value.get('value').get('movieRating')
            movieTitle = value.get('value').get('movieTitle')
            RPictureFinal = movieRating.get('RPictureFinal')
            RStoryFinal = movieRating.get('RStoryFinal')
            RDirectorFinal = movieRating.get('RDirectoryFinal')
            ROtherFinal = movieRating.get('ROtherFinal')
            RatingFinal = movieRating.get('RatingFinal')
            MovieId = movieRating.get('MovieId')
            Usercount = movieRating.get('Usercount')
            AttitudeCount = movieRating.get('AttitudeCount')
            try:
                Rank = value.get('value').get('hotValue').get('Ranking')
            except Exception:
                Rank = 0
            # No box office yet, so both box-office fields are '无' ("none")
            return (MovieId, movieTitle, RatingFinal,
                    ROtherFinal, RPictureFinal, RDirectorFinal,
                    RStoryFinal, Usercount, AttitudeCount, u'无',
                    u'无', Rank, 0, isRelease)
        except Exception:
            print(page_url, value)
            return None

3. The DataOutput module stores the data in a database table.

import sqlite3


class DataOutput(object):

    def __init__(self):
        self.cx = sqlite3.connect('MTime.db')
        self.create_table('MTime')
        self.datas = []

    def create_table(self, table_name):
        '''
        Create the data table (any existing table with this name is dropped)
        :param table_name: name of the table
        :return:
        '''
        values = '''
        id integer primary key,
        MovieId integer,
        MovieTitle varchar(40) NULL,
        RatingFinal REAL NULL DEFAULT 0.0,
        ROtherFinal REAL NULL DEFAULT 0.0,
        RPictureFinal REAL NULL DEFAULT 0.0,
        RDirectoryFinal REAL NULL DEFAULT 0.0,
        RStoryFinal REAL NULL DEFAULT 0.0,
        Usercount integer NULL DEFAULT 0,
        AttitudeCount integer NULL DEFAULT 0,
        TotalBoxOffice varchar(20) NULL,
        TodayBoxOffice varchar(20) NULL,
        Rank integer NULL DEFAULT 0,
        ShowDays integer NULL DEFAULT 0,
        isRelease integer NULL
        '''
        self.cx.execute("DROP TABLE IF EXISTS %s" % table_name)
        self.cx.execute("CREATE TABLE %s( %s );" % (table_name, values))

    def store_data(self, data):
        '''
        Buffer one record and flush to the database once more than 10 are buffered
        :param data: tuple of movie data
        :return:
        '''
        if data is None:
            return
        self.datas.append(data)
        print('passby')
        if len(self.datas) > 10:
            self.output_db('MTime')
            print('Output successfully!')

    def output_db(self, table_name):
        '''
        Write the buffered data to sqlite
        :param table_name: name of the table
        :return:
        '''
        sql = ("INSERT INTO %s (MovieId, MovieTitle,"
               "RatingFinal, ROtherFinal, RPictureFinal,"
               "RDirectoryFinal, RStoryFinal, Usercount,"
               "AttitudeCount, TotalBoxOffice, TodayBoxOffice,"
               "Rank, ShowDays, isRelease) VALUES(?,?,?,?,?,?,?,?,?,?,?,?,?,?)"
               % table_name)
        # Insert every buffered row, then empty the buffer in one step;
        # removing items from a list while iterating over it skips entries
        self.cx.executemany(sql, self.datas)
        self.datas = []
        self.cx.commit()

    def output_end(self):
        '''
        Flush any remaining data and close the database
        :return:
        '''
        if len(self.datas) > 0:
            self.output_db('MTime')
        self.cx.close()

4. The SpiderMan module calls the other modules and ties everything together.

import time
from the_python_spider_for_dynamic_websites.HtmlDownloader import HtmlDownloader
from the_python_spider_for_dynamic_websites.HtmlParser import HtmlParser
from the_python_spider_for_dynamic_websites.DataOutput import DataOutput


class SpiderMan(object):

    def __init__(self):
        pass

    def crawl(self, root_url):
        downloader = HtmlDownloader()
        parser = HtmlParser()
        output = DataOutput()
        content = downloader.download(root_url)
        # parser_url returns None when nothing is found
        urls = parser.parser_url(root_url, content) or []
        # Build the rating and box-office request URL for each movie
        for url in urls:
            try:
                print(url[0], url[1])
                t = time.strftime("%Y%m%d%H%M%S3282", time.localtime())
                # print('t:', t)
                rank_url = 'http://service.library.mtime.com/Movie.api' \
                           '?Ajax_CallBack=true'\
                           '&Ajax_CallBackType=MTime.Library.Services'\
                           '&Ajax_CallBackMethod=GetMovieOverviewRating'\
                           '&Ajax_CrossDomain=1'\
                           '&Ajax_RequestUrl=%s'\
                           '&t=%s'\
                           '&Ajax_CallBackArgument0=%s' % (url[0], t, url[1])
                rank_content = downloader.download(rank_url)
                print("rank_content:", rank_content)
                data = parser.parser_json(rank_url, rank_content)
                print("data:", data)
                output.store_data(data)
            except Exception as e:
                print("Crawl failed:", e)
        output.output_end()
        print("Crawl finish")

if __name__ == '__main__':
    spider = SpiderMan()
    spider.crawl('http://theater.mtime.com/China_Beijing/')

Finally, here is a screenshot of the crawled data: