A Simple CNN Layer Structure

import torch
import torch.nn as nn
import torch.nn.functional as F

"""
Neural networks can be constructed using the torch.nn package.
nn depends on autograd to define models and differentiate them.
An nn.Module contains layers and a method forward(input) that returns the output.
"""

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

# 1 input image channel, 6 output channels, 5x5 square convolution

self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)

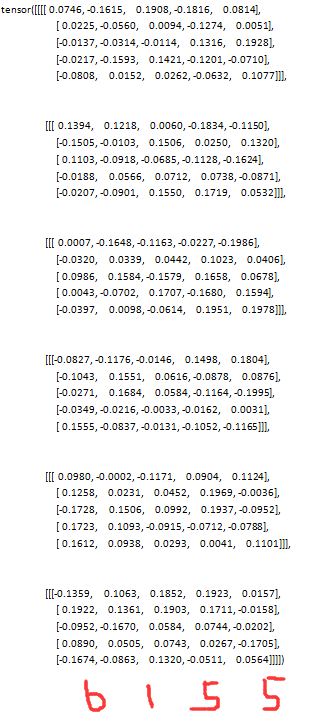

print(self.conv1.weight.data)

self.conv2 = nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5)

print(self.conv2.weight.data)

# an affine仿射 operation: y = Wx + b ;

# class torch.nn.Linear(in_features, out_features, bias=True)

# in_features - 每个输入样本的大小 out_features - 每个输出样本的大小

self.fc1 = nn.Linear(16*5*5, 120) # 为啥是16*5*5

print(self.fc1.weight.data, self.fc1.weight.size())

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # Max pooling over a (2, 2) window
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # If the window is square, a single number is enough: 2 is equivalent to (2, 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), (2, 2))
        print(x)
        # Flatten to (batch_size, num_features); -1 lets view infer the batch dimension
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

    def num_flat_features(self, x):
        # Number of features per sample once the tensor is flattened
        print(x.size(), "************")
        size = x.size()[1:]  # all dimensions except the batch dimension
        print("--------", size)
        num_features = 1
        for s in size:
            num_features *= s
        return num_features

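net = Net()
print(net)

# Inspect the parameters of the two convolutional layers
print(net.conv1.weight.size())  # torch.Size([6, 1, 5, 5])
print(net.conv1.weight.data)
print(net.conv1.bias.size())    # torch.Size([6])
print(net.conv2.weight.size())  # torch.Size([16, 6, 5, 5])
print(net.conv2.bias.size())    # torch.Size([16])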
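The parameter shapes inspected above follow directly from the layer hyperparameters. The sketch below recomputes them in plain Python, without PyTorch, assuming the default `bias=True` and square kernels; the helper names `conv_param_shapes`, `linear_param_shapes` and `numel` are made up for illustration and are not part of torch.

```python
# Layer hyperparameters copied from the Net class.
# Conv layers: (in_channels, out_channels, kernel_size)
conv_layers = {"conv1": (1, 6, 5), "conv2": (6, 16, 5)}
# Linear layers: (in_features, out_features)
fc_layers = {"fc1": (16 * 5 * 5, 120), "fc2": (120, 84), "fc3": (84, 10)}


def conv_param_shapes(in_ch, out_ch, k):
    """(weight shape, bias shape) of a Conv2d layer with a square kernel."""
    return (out_ch, in_ch, k, k), (out_ch,)


def linear_param_shapes(in_f, out_f):
    """(weight shape, bias shape) of a Linear layer."""
    return (out_f, in_f), (out_f,)


def numel(shape):
    """Number of elements in a tensor of the given shape."""
    n = 1
    for s in shape:
        n *= s
    return n


shapes = {}
for name, args in conv_layers.items():
    shapes[name] = conv_param_shapes(*args)
for name, args in fc_layers.items():
    shapes[name] = linear_param_shapes(*args)

for name, (w, b) in shapes.items():
    print(name, "weight", w, "bias", b, "params", numel(w) + numel(b))

# Total number of learnable parameters in the network
total = sum(numel(w) + numel(b) for w, b in shapes.values())
print("total", total)  # total 61706
```

Note that the weight of a linear layer is stored as (out_features, in_features), which is why `self.fc1.weight.size()` reports 120 rows and 400 columns.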

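As for why `fc1` takes `16*5*5` inputs: the following is a minimal sketch that traces the spatial size through the two convolution and pooling stages. It assumes a 32x32 single-channel input, which the notes do not state explicitly; `conv_out` and `pool_out` are hypothetical helper names, and the formulas cover only the no-padding, no-dilation case used here.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one spatial dimension of a convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window):
    """Output length of max pooling whose stride equals its window size."""
    return (size - window) // window + 1


size = 32                 # assumed input height and width
size = conv_out(size, 5)  # conv1: 32 -> 28
size = pool_out(size, 2)  # pool:  28 -> 14
size = conv_out(size, 5)  # conv2: 14 -> 10
size = pool_out(size, 2)  # pool:  10 -> 5

channels = 16             # out_channels of conv2
flat = channels * size * size
print(size, flat)  # 5 400
```

With a different input resolution the final feature map would generally not be 5x5, and the `in_features` of `fc1` would have to change to match.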