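1. Preface:

1) In production, commercial distributions such as CDH or HDP are recommended, because they offer better compatibility when integrating multiple frameworks.

2) Download all installation packages from one location: http://archive.cloudera.com/cdh5/cdh/5/

Choose packages that share the same cdh5.7.0 suffix, e.g. hadoop-2.6.0-cdh5.7.0.tar.gz and hive-1.1.0-cdh5.7.0.tar.gz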
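Because every package carries the same cdh5.7.0 suffix, the download URLs follow one pattern. A minimal sketch, assuming the archive layout of the URL above; `cdh_url`, `CDH_BASE_URL` and `CDH_VERSION` are hypothetical names used only for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a CDH tarball URL from a component name and its
# upstream version, reusing one shared CDH suffix for every package.
CDH_BASE_URL="http://archive.cloudera.com/cdh5/cdh/5"
CDH_VERSION="5.7.0"

cdh_url() {
  local component="$1" upstream_version="$2"
  echo "${CDH_BASE_URL}/${component}-${upstream_version}-cdh${CDH_VERSION}.tar.gz"
}
```

For example, `cdh_url hive 1.1.0` yields the hive-1.1.0-cdh5.7.0.tar.gz URL; keeping the suffix in one variable is what keeps all components on the same CDH release.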

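3) User: hadoop, password: hadoop

Directory layout under /home/hadoop/:

software: installation packages

data: test data

source: source code

lib: jars for related development

app: software installation directory

app/tmp: data directories for HDFS/Kafka/ZK

maven_repo: local Maven repository

shell: scripts used in class

MySQL: username root, password 123456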
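The directory layout above can be created in one step. A minimal sketch, assuming it is run as the hadoop user; `make_layout` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the working directories listed above under a
# base directory (defaults to the current user's home).
make_layout() {
  local base="${1:-$HOME}"
  # Brace expansion creates every directory in a single mkdir call;
  # -p also creates app/ for app/tmp and ignores existing directories.
  mkdir -p "$base"/{software,data,source,lib,app/tmp,maven_repo,shell}
}
```

Usage: `make_layout /home/hadoop`. Because of `mkdir -p`, running it again is harmless.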

4) Current environment:

a) Virtual machine: VMware 10 (VM10)

b) Linux: CentOS 6.5

c) JDK: jdk-7u80-linux-x64.tar.gz

d) Maven: apache-maven-3.3.9-bin.zip

e) FindBugs: findbugs-1.3.9.zip

f) protoc: protobuf-2.5.0.tar.gz

[All of the above must be prepared in advance]

2. Compiling hadoop-2.6.0-cdh5.7.0 (I previously used the Apache release, so here I recompile and redeploy hadoop-2.6.0-cdh5.7.0, which doubles as a review)

1) Download

Download hadoop-2.6.0-cdh5.7.0-src.tar.gz

Download URL: http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.7.0-src.tar.gz

Download jdk-7u80-linux-x64.tar.gz (past failures show that hadoop-2.6.0-cdh5.7.0 should be compiled with JDK 1.7; the build fails with 1.8)

Download URL: http://ghaffarian.net/downloads/Java/

2) Upload (with rz)

Upload hadoop-2.6.0-cdh5.7.0-src.tar.gz to /home/hadoop/source

Upload jdk-7u80-linux-x64.tar.gz, apache-maven-3.3.9-bin.zip, findbugs-1.3.9.zip and protobuf-2.5.0.tar.gz to /home/hadoop/software

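3) Extract

[root@hadoop001 ~]# mkdir -p /usr/java

[root@hadoop001 ~]# tar -zxvf /home/hadoop/software/jdk-7u80-linux-x64.tar.gz -C /usr/java

[root@hadoop001 ~]# tar -zxvf /home/hadoop/software/protobuf-2.5.0.tar.gz -C /usr/local

[hadoop@hadoop001 source]$ tar -zxvf hadoop-2.6.0-cdh5.7.0-src.tar.gz

[hadoop@hadoop001 software]$ unzip apache-maven-3.3.9-bin.zip -d /home/hadoop/app

[hadoop@hadoop001 software]$ unzip findbugs-1.3.9.zip -d /home/hadoop/app

unzip extracts one archive per call (any further names are treated as files to pick out of the first archive), so the two zips are extracted separately. tar -C requires the target directory to exist, hence the mkdir for /usr/java.

After extracting, check that the owner and group of each extracted directory are correct; if not, fix them with chown -R XXX:XXX dir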
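The ownership check can be scripted instead of eyeballed with `ls -l`. A minimal sketch; `find_wrong_owner` is a hypothetical helper that lists every path under a directory whose owner or group differs from the expected ones:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print paths under DIR not owned by USER:GROUP.
# Returns 1 if any are found, 0 otherwise.
find_wrong_owner() {
  local dir="$1" user="$2" group="$3" bad
  # Match entries whose owner OR group is not the expected one
  bad="$(find "$dir" \( ! -user "$user" -o ! -group "$group" \) -print)"
  if [ -n "$bad" ]; then
    printf '%s\n' "$bad"
    return 1
  fi
  return 0
}
```

For example, `find_wrong_owner /home/hadoop/app hadoop hadoop || chown -R hadoop:hadoop /home/hadoop/app` (run as root) only calls chown when something is actually wrong.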

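4) Check the build requirements (the excerpt below is the Windows section of BUILDING.txt; on CentOS, the Unix section of the same file is the one that applies)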

[hadoop@hadoop001 ~]$ cd source/hadoop-2.6.0-cdh5.7.0

[hadoop@hadoop001 hadoop-2.6.0-cdh5.7.0]$ cat BUILDING.txt

Requirements:

* Windows System

* JDK 1.7+

* Maven 3.0 or later

* Findbugs 1.3.9 (if running findbugs)

* ProtocolBuffer 2.5.0

* CMake 2.6 or newer

* Windows SDK or Visual Studio 2010 Professional

* Unix command-line tools from GnuWin32 or Cygwin: sh, mkdir, rm, cp, tar, gzip

* zlib headers (if building native code bindings for zlib)

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)

If using Visual Studio, it must be Visual Studio 2010 Professional (not 2012).

Do not use Visual Studio Express. It does not support compiling for 64-bit,

which is problematic if running a 64-bit system. The Windows SDK is free to

download here:

http://www.microsoft.com/en-us/download/details.aspx?id=8279

5) Configure the Maven directories (settings.xml)

[hadoop@hadoop001 ~]$ cd app/apache-maven-3.3.9/conf

[hadoop@hadoop001 conf]$ vi settings.xml
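The edit itself is not shown above; given the maven_repo directory in the layout, it presumably points Maven's local repository there. A minimal sketch of that edit, assuming GNU sed (as on CentOS); `set_maven_local_repo` is a hypothetical helper that inserts a `<localRepository>` element just before `</settings>`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set Maven's local repository in settings.xml by
# inserting <localRepository> before the closing </settings> tag.
set_maven_local_repo() {
  local settings_file="$1" repo_dir="$2"
  # Already configured with this directory: nothing to do
  if grep -qF "<localRepository>${repo_dir}</localRepository>" "$settings_file"; then
    return 0
  fi
  # GNU sed: "\n" in the replacement emits a newline; "|" avoids escaping "/"
  sed -i "s|</settings>|  <localRepository>${repo_dir}</localRepository>\n</settings>|" "$settings_file"
}
```

Usage: `set_maven_local_repo ~/app/apache-maven-3.3.9/conf/settings.xml /home/hadoop/maven_repo`. The default settings.xml only mentions `<localRepository>` inside a comment, so without this element Maven keeps using ~/.m2/repository.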

6) Pre-build installation: compile and install protobuf as root

[root@hadoop001 protobuf-2.5.0]# yum install -y gcc gcc-c++ make cmake

[root@hadoop001 protobuf-2.5.0]# ./configure --prefix=/usr/local/protobuf

[root@hadoop001 protobuf-2.5.0]# make && make install

7) Configure environment variables

[hadoop@hadoop001 ~]$ vi .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export JAVA_HOME=/usr/java/jdk1.7.0_80

export MVN_HOME=/home/hadoop/app/apache-maven-3.3.9

export FINDBUGS_HOME=/home/hadoop/app/findbugs-1.3.9

export PROTOC_HOME=/usr/local/protobuf

export PATH=$PROTOC_HOME/bin:$FINDBUGS_HOME/bin:$MVN_HOME/bin:$JAVA_HOME/bin:$PATH

[hadoop@hadoop001 ~]$ source .bash_profile

[hadoop@hadoop001 ~]$ which java

/usr/java/jdk1.7.0_80/bin/java

[hadoop@hadoop001 ~]$ which mvn

~/app/apache-maven-3.3.9/bin/mvn

[hadoop@hadoop001 ~]$ which findbugs

~/app/findbugs-1.3.9/bin/findbugs

[hadoop@hadoop001 ~]$ which protoc

/usr/local/protobuf/bin/protoc
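Besides `which`, the *_HOME variables themselves can be checked. A minimal sketch; `check_homes` is a hypothetical helper that verifies each named variable is set and contains a bin/ directory (it uses bash indirect expansion, `${!var}`):

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each variable NAME given, check that $NAME is set
# and that $NAME/bin exists. Prints one line per problem; returns 1 if any.
check_homes() {
  local var value status=0
  for var in "$@"; do
    value="${!var}"   # indirect expansion: the value of the variable named $var
    if [ -z "$value" ]; then
      echo "$var is not set"
      status=1
    elif [ ! -d "$value/bin" ]; then
      echo "$var=$value has no bin/ directory"
      status=1
    fi
  done
  return "$status"
}
```

Usage after `source .bash_profile`: `check_homes JAVA_HOME MVN_HOME FINDBUGS_HOME PROTOC_HOME`. No output means every variable points at an installed tool.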

8) Check the software versions

[hadoop@hadoop001 ~]$ java -version

java version "1.7.0_80"

Java(TM) SE Runtime Environment (build 1.7.0_80-b15)

Java HotSpot(TM) 64-Bit Server VM (build 24.80-b11, mixed mode)

[hadoop@hadoop001 ~]$ mvn -version

Apache Maven 3.3.9 (bb52d8502b132ec0a5a3f4c09453c07478323dc5; 2015-11-11T00:41:47+08:00)

Maven home: /home/hadoop/app/apache-maven-3.3.9

Java version: 1.7.0_80, vendor: Oracle Corporation

Java home: /usr/java/jdk1.7.0_80/jre

Default locale: en_US, platform encoding: UTF-8

OS name: "linux", version: "2.6.32-431.el6.x86_64", arch: "amd64", family: "unix"

[hadoop@hadoop001 ~]$ findbugs -version

1.3.9

[hadoop@hadoop001 ~]$ protoc --version

libprotoc 2.5.0
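These version checks can be turned into pass/fail tests. A minimal sketch; `require_version` is a hypothetical helper that runs a command and checks that its combined output contains an expected string. stderr is captured too, because `java -version` prints to stderr:

```shell
#!/usr/bin/env bash
# Hypothetical helper: require_version EXPECTED CMD [ARGS...]
# Runs CMD, captures stdout and stderr, and succeeds only if the output
# contains EXPECTED.
require_version() {
  local expected="$1" output
  shift
  output="$("$@" 2>&1)" || true   # a missing command yields "not found" output
  case "$output" in
    *"$expected"*) return 0 ;;
    *) echo "expected '$expected' from '$*', got: $output" >&2; return 1 ;;
  esac
}
```

For the environment above the checks would be `require_version 1.7.0_80 java -version`, `require_version 'Apache Maven 3.3.9' mvn -version`, `require_version 1.3.9 findbugs -version` and `require_version 'libprotoc 2.5.0' protoc --version`.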

9) Install other components from the yum repositories

[root@hadoop001 ~]# yum install -y openssl openssl-devel svn ncurses-devel zlib-devel libtool

[root@hadoop001 ~]# yum install -y snappy snappy-devel bzip2 bzip2-devel lzo lzo-devel lzop autoconf automake

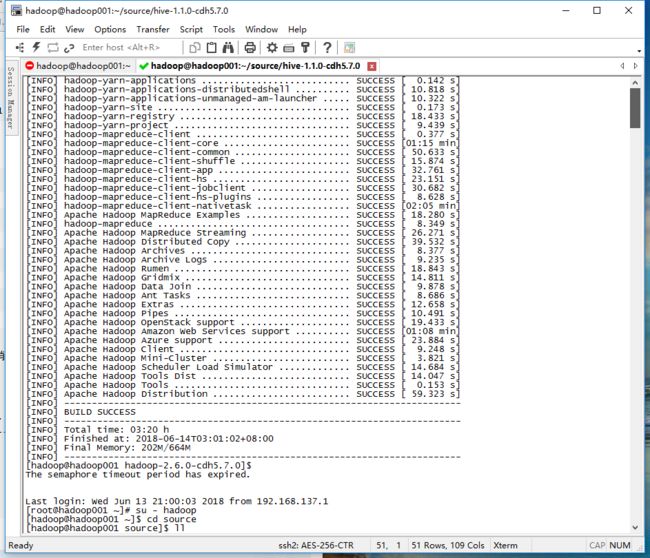
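To confirm the yum step actually installed everything, each package can be queried with `rpm -q`, which exits non-zero for packages that are not installed. A minimal sketch; `missing_rpms` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every package name that rpm reports as not
# installed, so yum can be re-run for just those packages.
missing_rpms() {
  local pkg
  for pkg in "$@"; do
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}
```

Usage: `missing_rpms openssl-devel zlib-devel snappy-devel bzip2-devel lzo-devel` prints nothing when all of them are present.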

10) Start the build

[hadoop@hadoop001 ~]$ cd source/hadoop-2.6.0-cdh5.7.0

[hadoop@hadoop001 hadoop-2.6.0-cdh5.7.0]$ pwd

/home/hadoop/source/hadoop-2.6.0-cdh5.7.0

[hadoop@hadoop001 hadoop-2.6.0-cdh5.7.0]$ mvn clean package -Pdist,native -DskipTests -Dtar
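With `-Pdist,native -Dtar`, the finished tarball lands in hadoop-dist/target of the source tree (the path used in the next section). A minimal sketch for checking that the build really produced it; `find_dist_tarball` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first hadoop-*.tar.gz in
# SRC_DIR/hadoop-dist/target, or fail if the build produced none.
find_dist_tarball() {
  local src_dir="$1" tarball
  for tarball in "$src_dir"/hadoop-dist/target/hadoop-*.tar.gz; do
    # If the glob matches nothing, bash leaves the pattern as-is and -f fails
    if [ -f "$tarball" ]; then
      echo "$tarball"
      return 0
    fi
  done
  echo "no tarball in $src_dir/hadoop-dist/target" >&2
  return 1
}
```

Usage: `find_dist_tarball ~/source/hadoop-2.6.0-cdh5.7.0`. After deployment, `hadoop checknative -a` reports whether the native libraries built by the `native` profile are actually loaded.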

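3. HDFS pseudo-distributed deployment

1) Extract the compiled Hadoop package

Copy the compiled hadoop-2.6.0-cdh5.7.0.tar.gz to /home/hadoop/software

[hadoop@hadoop001 ~]$ cd source/hadoop-2.6.0-cdh5.7.0/hadoop-dist/target

[hadoop@hadoop001 target]$ cp hadoop-2.6.0-cdh5.7.0.tar.gz /home/hadoop/software

[hadoop@hadoop001 software]$ tar -zxvf hadoop-2.6.0-cdh5.7.0.tar.gz -C ~/app/

The archive unpacks to ~/app/hadoop-2.6.0-cdh5.7.0, while the steps below use ~/app/hadoop-2.6.0, so rename the directory to match (or adjust HADOOP_HOME and the paths below):

[hadoop@hadoop001 software]$ mv ~/app/hadoop-2.6.0-cdh5.7.0 ~/app/hadoop-2.6.0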

2) Configure environment variables

[hadoop@hadoop001 ~]$ vi .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export JAVA_HOME=/usr/java/jdk1.7.0_80

export MVN_HOME=/home/hadoop/app/apache-maven-3.3.9

export FINDBUGS_HOME=/home/hadoop/app/findbugs-1.3.9

export PROTOC_HOME=/usr/local/protobuf

export HADOOP_HOME=/home/hadoop/app/hadoop-2.6.0

export PATH=$HADOOP_HOME/bin:$PROTOC_HOME/bin:$FINDBUGS_HOME/bin:$MVN_HOME/bin:$JAVA_HOME/bin:$PATH

[hadoop@hadoop001 ~]$ source .bash_profile

[hadoop@hadoop001 ~]$ which hadoop

~/app/hadoop-2.6.0/bin/hadoop

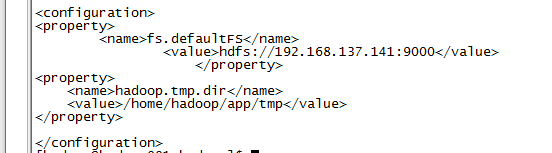

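3) Modify the configuration files

[hadoop@hadoop001 ~]$ cd /home/hadoop/app/hadoop-2.6.0/etc/hadoop

Set JAVA_HOME explicitly in hadoop-env.sh: start-dfs.sh launches the daemons over ssh, and those non-interactive sessions do not read .bash_profile.

[hadoop@hadoop001 hadoop]$ vi hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.7.0_80

[hadoop@hadoop001 hadoop]$ vi core-site.xml

[hadoop@hadoop001 hadoop]$ vi hdfs-site.xml

List the DataNode host in the slaves file (in pseudo-distributed mode, this machine's own IP):

[hadoop@hadoop001 hadoop]$ vi slaves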

192.168.137.141

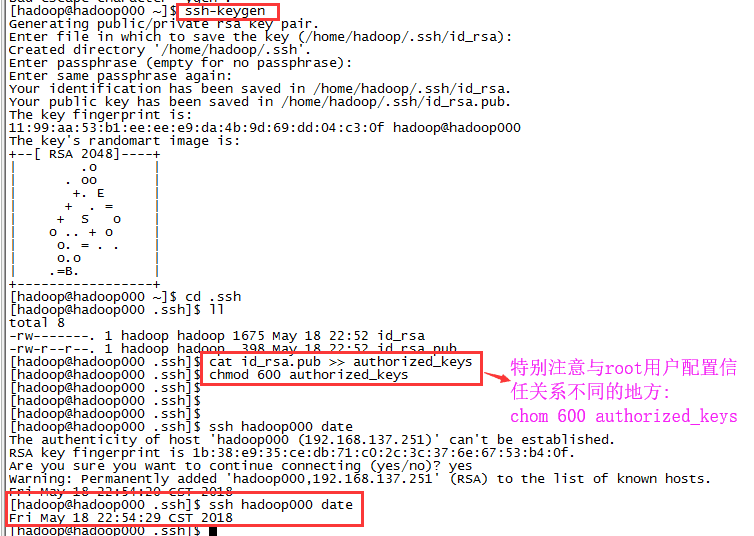
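The contents of core-site.xml and hdfs-site.xml are not spelled out in the text above. As a minimal sketch with typical single-node values: the NameNode address uses the hostname hadoop001 from the prompt and port 9000 (both assumptions; hadoop001 must resolve, e.g. via /etc/hosts), hadoop.tmp.dir points at app/tmp from the directory layout instead of the default /tmp/hadoop-${user.name}, which /tmp cleanup can wipe, and dfs.replication is 1 because there is only one DataNode. `write_pseudo_conf` is a hypothetical helper, and it overwrites both files:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write minimal pseudo-distributed core-site.xml and
# hdfs-site.xml into a Hadoop conf directory (overwrites existing files).
# ASSUMED defaults: NameNode at hdfs://hadoop001:9000, data under app/tmp.
write_pseudo_conf() {
  local conf_dir="$1"
  local namenode_uri="${2:-hdfs://hadoop001:9000}"
  local tmp_dir="${3:-/home/hadoop/app/tmp}"

  # core-site.xml: default filesystem URI and base directory for HDFS data
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>${namenode_uri}</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF

  # hdfs-site.xml: a single DataNode can hold only one replica per block
  cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_pseudo_conf ~/app/hadoop-2.6.0/etc/hadoop`, or pass a different NameNode URI and data directory as the second and third arguments.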

4) Configure SSH trust (passwordless login)
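The SSH trust commands are not shown above. A minimal sketch of the usual approach: generate a key pair with an empty passphrase and append the public key to the user's own authorized_keys. `setup_ssh_trust` is a hypothetical helper; it takes the .ssh directory as an optional argument, defaulting to ~/.ssh:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set up passwordless SSH to this host for the current
# user. Safe to run more than once.
setup_ssh_trust() {
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  # Generate an RSA key pair with an empty passphrase, only if none exists
  # (ssh-keygen would otherwise prompt before overwriting)
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -t rsa -N '' -q -f "$ssh_dir/id_rsa" || return 1
  fi
  # Append our public key to authorized_keys unless it is already there
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys" 2>/dev/null; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  # sshd's StrictModes ignores keys in files that others can write to
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Run it as the hadoop user, then connect once with `ssh 192.168.137.141 date` to accept the host key, so start-dfs.sh does not stop at the host-key prompt.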

5) Format the NameNode

[hadoop@hadoop001 ~]$ hdfs namenode -format
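Formatting should happen only once: it assigns a new clusterID, and DataNodes that already hold data from the old one then refuse to register ("Incompatible clusterIDs"). A minimal guard sketch; `namenode_needs_format` is a hypothetical helper that treats a storage directory containing current/VERSION as already formatted:

```shell
#!/usr/bin/env bash
# Hypothetical guard: succeed (exit 0) only if the NameNode storage
# directory has not been formatted yet, i.e. has no current/VERSION file.
namenode_needs_format() {
  local name_dir="$1"
  [ ! -f "$name_dir/current/VERSION" ]
}
```

Assuming hadoop.tmp.dir is /home/hadoop/app/tmp (so the NameNode directory defaults to its dfs/name subdirectory), the guarded command would be `namenode_needs_format /home/hadoop/app/tmp/dfs/name && hdfs namenode -format`.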

6) Start HDFS

[hadoop@hadoop001 ~]$ cd app/hadoop-2.6.0/sbin

[hadoop@hadoop001 sbin]$ ./start-dfs.sh

[hadoop@hadoop001 sbin]$ jps

4066 DataNode

4376 Jps

4201 SecondaryNameNode

3976 NameNode
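The jps output above shows all three HDFS daemons. A minimal sketch that checks this automatically; `check_hdfs_daemons` is a hypothetical helper that reads jps output on stdin and compares the second column exactly, so SecondaryNameNode is not mistaken for NameNode:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and report any of the
# three HDFS daemons that is not running. Returns 1 if one is missing.
check_hdfs_daemons() {
  local out daemon missing=0
  out="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode; do
    # Exact match on the process-name column (column 2 of jps output)
    if ! printf '%s\n' "$out" | awk -v d="$daemon" '$2 == d {found=1} END {exit !found}'; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `jps | check_hdfs_daemons`. If a daemon is missing, its log under $HADOOP_HOME/logs usually says why; in Hadoop 2.x the NameNode web UI listens on port 50070 by default.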