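This document describes how to convert the video data delivered by the iOS camera callback into the textures needed for subsequent OpenGL rendering, and covers how to create textures from several common pixel storage formats.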

1. Camera callback video data

The camera delivers frames through this callback:

- (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection;

Extract the pixel buffer from the sampleBuffer passed to the callback:

CVPixelBufferRef pixelBuffer = (CVPixelBufferRef)CMSampleBufferGetImageBuffer(sampleBuffer);

OSType result = CVPixelBufferGetPixelFormatType(pixelBuffer);

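CVPixelBufferGetPixelFormatType reports the pixel format of the captured video data. The format is actually chosen when the camera is configured, through the videoSettings property of the video data output: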

@property(nonatomic, copy) NSDictionary *videoSettings;

The three commonly used formats are:

kCVPixelFormatType_32BGRA = 'BGRA', /* 32 bit BGRA */

kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange = '420v', /* Bi-Planar Component Y'CbCr 8-bit 4:2:0, video-range (luma=[16,235] chroma=[16,240]). baseAddr points to a big-endian CVPlanarPixelBufferInfo_YCbCrBiPlanar struct */

kCVPixelFormatType_420YpCbCr8BiPlanarFullRange = '420f', /* Bi-Planar Component Y'CbCr 8-bit 4:2:0, full-range (luma=[0,255] chroma=[1,255]). baseAddr points to a big-endian CVPlanarPixelBufferInfo_YCbCrBiPlanar struct */
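The constants above are four-character codes packed into a 32-bit OSType, so the value returned by CVPixelBufferGetPixelFormatType can be turned back into readable text when logging. The following is a minimal sketch in plain C; the helper name fourcc_to_string is an assumption for illustration and is not part of Core Video.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Decode a four-character code (such as the OSType returned by
   CVPixelBufferGetPixelFormatType) into a printable string.
   Hypothetical helper: `out` must have room for 5 bytes. */
void fourcc_to_string(uint32_t code, char out[5])
{
    assert(out != NULL);
    out[0] = (char)((code >> 24) & 0xFF);  /* most significant byte is the first character */
    out[1] = (char)((code >> 16) & 0xFF);
    out[2] = (char)((code >> 8) & 0xFF);
    out[3] = (char)(code & 0xFF);
    out[4] = '\0';
}
```

For example, passing 0x34323076 yields "420v", which makes it easy to confirm in a log which of the formats the camera is actually delivering.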

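The latter two are the 420sp layout known from video processing; on iOS both are arranged as NV12. The sections below create textures for these three formats and for yuv420p.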
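The difference between NV12 (420sp) and yuv420p is only in how chroma is stored: NV12 keeps one interleaved CbCr plane, while yuv420p keeps separate U and V planes. The sketch below shows the relationship by splitting an NV12 chroma plane on the CPU. The function name and parameters are assumptions for illustration, and even frame dimensions are assumed.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Split the interleaved CbCr plane of an NV12 frame into the separate
   U and V planes used by planar yuv420p.
   uv_stride is the byte length of one source row, which may exceed
   `width` because of row padding. width/height are luma dimensions. */
void nv12_split_chroma(const uint8_t *uv, size_t uv_stride,
                       size_t width, size_t height,
                       uint8_t *u_out, uint8_t *v_out)
{
    assert(uv != NULL && u_out != NULL && v_out != NULL);
    assert(width % 2 == 0 && height % 2 == 0);
    size_t cw = width / 2;   /* chroma plane width in samples  */
    size_t ch = height / 2;  /* chroma plane height in samples */
    assert(uv_stride >= cw * 2);

    for (size_t row = 0; row < ch; ++row) {
        const uint8_t *src = uv + row * uv_stride;
        for (size_t col = 0; col < cw; ++col) {
            u_out[row * cw + col] = src[2 * col];      /* Cb comes first in NV12 */
            v_out[row * cw + col] = src[2 * col + 1];  /* Cr follows             */
        }
    }
}
```

On the GPU path this split is unnecessary, because a two-channel texture lets the shader read Cb and Cr from a single sample.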

2. Binding BGRA8888 data

- (BOOL)setupOriginTextureWithPixelBuffer:(CVPixelBufferRef)pixelBuffer

{

CVReturn cvRet = CVOpenGLESTextureCacheCreateTextureFromImage(kCFAllocatorDefault,

_cvTextureCache,

pixelBuffer,

NULL,

GL_TEXTURE_2D,

GL_RGBA,

self.imageWidth,

self.imageHeight,

GL_BGRA,

GL_UNSIGNED_BYTE,

0,

&_cvTextureOrigin);

if (!_cvTextureOrigin || kCVReturnSuccess != cvRet) {

NSLog(@"CVOpenGLESTextureCacheCreateTextureFromImage %d" , cvRet);

return NO;

}

_textureOriginInput = CVOpenGLESTextureGetName(_cvTextureOrigin);

glBindTexture(GL_TEXTURE_2D , _textureOriginInput);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glBindTexture(GL_TEXTURE_2D, 0);

return YES;

}

CVOpenGLESTextureCacheCreateTextureFromImage maps the BGRA pixel data onto an RGBA texture.
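To make the role of the GL_BGRA format argument concrete, here is a small CPU model of what the sampler returns for one pixel: the bytes sit in memory in B, G, R, A order, and the shader still sees them as normalized r, g, b, a components. The type and function names are assumptions for illustration only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { float r, g, b, a; } rgba_f;

/* Model of sampling one 32BGRA pixel uploaded with format GL_BGRA and
   type GL_UNSIGNED_BYTE: memory order is B, G, R, A, and each channel
   is normalized to [0, 1]. */
rgba_f sample_bgra_pixel(const uint8_t px[4])
{
    assert(px != NULL);
    rgba_f c;
    c.b = px[0] / 255.0f;
    c.g = px[1] / 255.0f;
    c.r = px[2] / 255.0f;
    c.a = px[3] / 255.0f;
    return c;
}
```

If the format argument were GL_RGBA instead, the red and blue channels of the rendered image would be swapped.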

3. Binding 420f or 420v data

Use CVOpenGLESTextureCacheCreateTextureFromImage to create two textures, one for the Y plane and one for the UV plane, then convert to RGBA in the fragment shader.

- (BOOL)setupLumaTextureWithPixelBuffer:(CVPixelBufferRef)pixelBuffer

{

//Y-plane

glActiveTexture(GL_TEXTURE0);

CVReturn cvRet = CVOpenGLESTextureCacheCreateTextureFromImage(kCFAllocatorDefault,

_cvTextureCache,

pixelBuffer,

NULL,

GL_TEXTURE_2D,

GL_LUMINANCE,

self.imageWidth,

self.imageHeight,

GL_LUMINANCE,

GL_UNSIGNED_BYTE,

0,

&_cvlumaTexture);

if (!_cvlumaTexture || kCVReturnSuccess != cvRet) {

NSLog(@"CVOpenGLESTextureCacheCreateTextureFromImage %d" , cvRet);

return NO;

}

_textureLuma = CVOpenGLESTextureGetName(_cvlumaTexture);

glBindTexture(CVOpenGLESTextureGetTarget(_cvlumaTexture), _textureLuma);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

//glBindTexture(GL_TEXTURE_2D, 0);

// UV-plane.

glActiveTexture(GL_TEXTURE1); // use unit 1 so the chroma binding does not replace the luma binding on unit 0

cvRet = CVOpenGLESTextureCacheCreateTextureFromImage(kCFAllocatorDefault,

_cvTextureCache,

pixelBuffer,

NULL,

GL_TEXTURE_2D,

GL_LUMINANCE_ALPHA,

self.imageWidth / 2,

self.imageHeight / 2,

GL_LUMINANCE_ALPHA,

GL_UNSIGNED_BYTE,

1,

&_cvchromaTexture);

if (!_cvchromaTexture || kCVReturnSuccess != cvRet) {

NSLog(@"CVOpenGLESTextureCacheCreateTextureFromImage %d" , cvRet);

return NO;

}

_textureChroma = CVOpenGLESTextureGetName(_cvchromaTexture);

glBindTexture(CVOpenGLESTextureGetTarget(_cvchromaTexture), _textureChroma);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

//glBindTexture(GL_TEXTURE_2D, 0);

return YES;

}

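The internalFormat and format parameters are set to GL_LUMINANCE for the Y plane and GL_LUMINANCE_ALPHA for the UV plane.

GL_RED_EXT and GL_RG_EXT can be used instead, but the colors will come out wrong unless the shader is adjusted: with GL_RG_EXT the two chroma bytes arrive in .rg rather than .ra.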
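The reason the swizzle matters can be seen from how OpenGL ES expands each format into the four components a sampler returns. The sketch below models that expansion on the CPU; the enum and function names are assumptions for illustration, while the component mapping follows the OpenGL ES texture format rules.

```c
#include <assert.h>

typedef struct { float r, g, b, a; } vec4f;

typedef enum {
    FMT_LUMINANCE,        /* GL_LUMINANCE       */
    FMT_LUMINANCE_ALPHA,  /* GL_LUMINANCE_ALPHA */
    FMT_RED,              /* GL_RED_EXT         */
    FMT_RG                /* GL_RG_EXT          */
} tex_format;

/* c0 and c1 are the first and second normalized bytes of a texel
   (c1 is ignored for single-channel formats). */
vec4f sample_texel(tex_format fmt, float c0, float c1)
{
    assert(c0 >= 0.0f && c0 <= 1.0f && c1 >= 0.0f && c1 <= 1.0f);
    vec4f s = {0.0f, 0.0f, 0.0f, 1.0f};
    switch (fmt) {
    case FMT_LUMINANCE:       s.r = s.g = s.b = c0;           break;
    case FMT_LUMINANCE_ALPHA: s.r = s.g = s.b = c0; s.a = c1; break;
    case FMT_RED:             s.r = c0;                       break;
    case FMT_RG:              s.r = c0; s.g = c1;             break;
    }
    return s;
}
```

With FMT_LUMINANCE_ALPHA, Cb lands in r and Cr in a, so the shader reads .ra. With FMT_RG, a is always 1.0, so reading .ra would treat Cr as saturated; the shader has to read .rg instead.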

The corresponding fragment shaders

kCVPixelFormatType_420YpCbCr8BiPlanarFullRange:

// yuv420f (full range)

char fsh1[] = "varying highp vec2 textureCoordinate;\

precision mediump float;\

uniform sampler2D SamplerY;\

uniform sampler2D SamplerUV;\

uniform mat3 colorConversionMatrix;\

void main()\

{\

mediump vec3 yuv;\

lowp vec3 rgb;\

yuv.x = (texture2D(SamplerY, textureCoordinate).r);\

yuv.yz = (texture2D(SamplerUV, textureCoordinate).ra - vec2(0.5, 0.5));\

rgb = colorConversionMatrix * yuv;\

gl_FragColor = vec4(rgb,1);\

}";
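The shader relies on a colorConversionMatrix uniform whose values are not listed here. As a sketch, the block below assumes the standard BT.601 full-range coefficients and stores them column-major, which is the order glUniformMatrix3fv expects when transpose is GL_FALSE. The constant and function names are assumptions for illustration; the multiply is a CPU equivalent of the shader's "rgb = colorConversionMatrix * yuv".

```c
#include <assert.h>
#include <stddef.h>

/* BT.601 full-range YCbCr -> RGB, column-major.
   Assumed values: the article does not state which matrix it uses. */
const float kBT601FullRange[9] = {
    1.0f,    1.0f,       1.0f,    /* column 0: contribution of Y  */
    0.0f,   -0.344136f,  1.772f,  /* column 1: contribution of Cb */
    1.402f, -0.714136f,  0.0f     /* column 2: contribution of Cr */
};

/* Column-major 3x3 matrix times vector, as GLSL evaluates mat3 * vec3. */
void mat3_mul_vec3(const float m[9], const float v[3], float out[3])
{
    assert(m != NULL && v != NULL && out != NULL);
    for (int row = 0; row < 3; ++row)
        out[row] = m[row] * v[0] + m[3 + row] * v[1] + m[6 + row] * v[2];
}

/* Mirrors the full-range shader: luma is used as-is and chroma is
   centered by subtracting 0.5. Inputs are normalized to [0, 1]. */
void yuv_full_to_rgb(float y, float cb, float cr, float rgb[3])
{
    const float yuv[3] = { y, cb - 0.5f, cr - 0.5f };
    mat3_mul_vec3(kBT601FullRange, yuv, rgb);
}
```

A CPU reference like this is handy for checking a few known pixels against what the shader renders. Content tagged as BT.709 would need different coefficients.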

kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange:

// yuv420v (video range)

char fsh4[] = "varying highp vec2 textureCoordinate;\

precision mediump float;\

uniform sampler2D SamplerY;\

uniform sampler2D SamplerUV;\

uniform mat3 colorConversionMatrix;\

void main()\

{\

mediump vec3 yuv;\

lowp vec3 rgb;\

yuv.x = (texture2D(SamplerY, textureCoordinate).r - (16.0 / 255.0));\

yuv.yz = (texture2D(SamplerUV, textureCoordinate).ra - vec2(0.5, 0.5));\

rgb = colorConversionMatrix * yuv;\

gl_FragColor = vec4(rgb,1);\

}";
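The video-range shader only removes the luma offset of 16/255; stretching luma from [16,235] and chroma from [16,240] back to the full range has to come from the matrix itself. The sketch below derives such a matrix from a full-range one by scaling the Y column by 255/219 and the chroma columns by 255/224. The function name is an assumption for illustration, and the input is assumed to be column-major with columns ordered Y, Cb, Cr.

```c
#include <assert.h>
#include <stddef.h>

/* Turn a full-range YCbCr -> RGB matrix (column-major, columns Y, Cb, Cr)
   into its video-range counterpart. */
void full_to_video_range_matrix(const float full[9], float video[9])
{
    assert(full != NULL && video != NULL);
    const float y_scale = 255.0f / 219.0f;  /* luma spans 235 - 16 = 219 codes   */
    const float c_scale = 255.0f / 224.0f;  /* chroma spans 240 - 16 = 224 codes */
    for (int i = 0; i < 9; ++i)
        video[i] = full[i] * (i < 3 ? y_scale : c_scale);  /* first 3 entries are the Y column */
}
```

Applied to the BT.601 full-range coefficients (1.402, -0.344136, -0.714136, 1.772), this gives approximately 1.164 for the Y column and 1.596, -0.392, -0.813, 2.017 for the chroma terms, which are the familiar video-range values.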

4. yuv420p

//glGenTextures(1, &(_textureY));

glBindTexture(GL_TEXTURE_2D, _textureY);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, self.imageWidth, self.imageHeight, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, yuv_Y);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

//glGenTextures(1, &(_textureU));

glBindTexture(GL_TEXTURE_2D, _textureU);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, self.imageWidth/2, self.imageHeight/2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, yuv_U);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

//glGenTextures(1, &(_textureV));

glBindTexture(GL_TEXTURE_2D, _textureV);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, self.imageWidth/2, self.imageHeight/2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, yuv_V);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
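The uploads above take three plane pointers (yuv_Y, yuv_U, yuv_V). When the frame arrives as one tightly packed yuv420p buffer, those pointers are simple offsets into it. The sketch below computes them and also reports a suitable GL_UNPACK_ALIGNMENT, since glTexImage2D by default assumes each row starts on a 4-byte boundary, which tightly packed one-byte-per-sample rows only satisfy when the row width is a multiple of 4. The struct and function names are assumptions for illustration, as is the tightly packed layout.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    size_t y_offset, u_offset, v_offset;  /* byte offsets into the frame buffer   */
    size_t chroma_width, chroma_height;   /* dimensions of the U and V planes     */
    int unpack_alignment;                 /* value to pass for GL_UNPACK_ALIGNMENT */
} i420_layout;

/* Describe a tightly packed yuv420p frame with even dimensions. */
i420_layout i420_describe(size_t width, size_t height)
{
    assert(width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);

    i420_layout l;
    l.chroma_width = width / 2;
    l.chroma_height = height / 2;
    l.y_offset = 0;
    l.u_offset = width * height;                                  /* U follows Y */
    l.v_offset = l.u_offset + l.chroma_width * l.chroma_height;   /* V follows U */
    /* If the chroma width is a multiple of 4, so is the luma width, and the
       default alignment of 4 is safe; otherwise fall back to 1. */
    l.unpack_alignment = (l.chroma_width % 4 == 0) ? 4 : 1;
    return l;
}
```

With this layout, yuv_U would be the buffer base plus u_offset, and glPixelStorei(GL_UNPACK_ALIGNMENT, unpack_alignment) would be called before the glTexImage2D uploads. A 180x100 frame, for instance, has 90-byte chroma rows and needs an alignment of 1 to avoid skewed chroma.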

The corresponding fragment shader:

char fsh3[] = "varying highp vec2 textureCoordinate;\

precision mediump float;\

uniform sampler2D SamplerY;\

uniform sampler2D SamplerU;\

uniform sampler2D SamplerV;\

uniform mat3 colorConversionMatrix;\

void main()\

{\

mediump vec3 yuv;\

lowp vec3 rgb;\

yuv.x = (texture2D(SamplerY, textureCoordinate).r - (16.0 / 255.0));\

yuv.y = (texture2D(SamplerU, textureCoordinate).r - 0.5);\

yuv.z = (texture2D(SamplerV, textureCoordinate).r - 0.5);\

rgb = colorConversionMatrix * yuv;\

gl_FragColor = vec4(rgb,1);\

}";

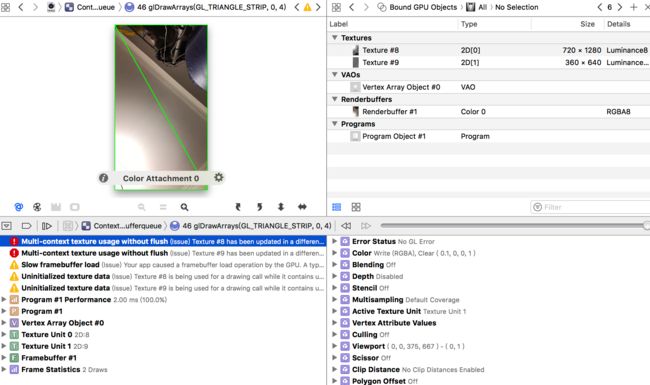

5. Debugging

First, capture a single frame with Debug > Capture GPU Frame in Xcode.

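The capture shows whether the Y and UV textures were created successfully, and also shows the rendering state of objects such as the renderbuffer.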

The textures created for a frame must be released, and the texture cache flushed, on every frame:

if (_cvchromaTexture) {

CFRelease(_cvchromaTexture);

_cvchromaTexture = NULL;

}

if (_cvlumaTexture) {

CFRelease(_cvlumaTexture);

_cvlumaTexture = NULL;

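}

// Let the cache recycle the textures released above.
CVOpenGLESTextureCacheFlush(_cvTextureCache, 0);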