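If you need a test environment for an Oracle 10g RAC installation but have no shared storage, you can use virtual machines instead. This article shows in detail how to install Oracle 10g RAC on VMware virtual machines, so you can learn Oracle 10g RAC more quickly.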

1. Pre-installation preparation

Required software:

10201_clusterware_linux_x86_64.cpio.gz

10201_database_linux_x86_64.cpio.gz

binutils-2.17.50.0.6-6.0.1.el5.x86_64.rpm

oracleasm-2.6.18-164.el5-2.0.5-1.el5.x86_64.rpm

oracleasmlib-2.0.4-1.el5.x86_64.rpm

oracleasm-support-2.1.7-1.el5.x86_64.rpm

First database node (node-rac1):

[root@node-rac1 ~]# cat /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

172.19.0.81 node-rac1

172.19.0.82 node-rac2

172.19.0.83 node-vip1

172.19.0.84 node-vip2

172.19.0.91 node-priv1

172.19.0.92 node-priv2

Second database node (node-rac2):

[root@node-rac2 ~]# cat /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

172.19.0.81 node-rac1

172.19.0.82 node-rac2

172.19.0.83 node-vip1

172.19.0.84 node-vip2

172.19.0.91 node-priv1

172.19.0.92 node-priv2
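Both nodes must resolve the same six names. The shell function below is a quick consistency check. It reports which names from the listing above are missing from a hosts file. It is only a sketch: `check_rac_hosts` and `RAC_NAMES` are illustrative names, not part of any Oracle tool. It also does not verify that the IP addresses themselves are correct.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which RAC host names are missing from a hosts file.
# The name list is copied from the /etc/hosts entries above; adjust it for your cluster.
RAC_NAMES="node-rac1 node-rac2 node-vip1 node-vip2 node-priv1 node-priv2"

# check_rac_hosts [FILE]: print "missing: NAME" for each absent name; return 1 if any are absent
check_rac_hosts() {
    local file=${1:-/etc/hosts} name missing=0
    for name in $RAC_NAMES; do
        # Look for NAME as a whole field (after the IP address) on a non-comment line
        if ! awk -v n="$name" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit (found ? 0 : 1) }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_rac_hosts /etc/hosts` on each node should print nothing and return 0.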

2. Install the dependency packages (both nodes)

yum -y install make glibc libaio compat-libstdc++-33 compat-gcc-34 compat-gcc-34-c++ gcc libXp openmotif compat-db setarch kernel-headers glibc-headers glibc-devel libgomp binutils compat-gcc compat-gcc-c++ compat-libstdc++ compat-libstdc++-devel libaio-devel elfutils-libelf-devel libgcc gcc-c++ sysstat libstdc++ libstdc++-devel unixODBC-devel unixODBC

3. Configure the kernel parameters (both nodes)

Append the following to the end of /etc/sysctl.conf:

[root@node-rac1 ~]# vi /etc/sysctl.conf

kernel.shmall = 2097152

kernel.shmmax = 4294967295

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

fs.file-max = 165536

net.ipv4.ip_local_port_range = 1024 65000

net.core.rmem_default = 1048576

net.core.rmem_max = 1048576

net.core.wmem_default = 262144

net.core.wmem_max = 262144

Apply the new settings:

[root@node-rac1 ~]# /sbin/sysctl -p
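This step is repeated on both nodes and is sometimes rerun. The append can therefore be scripted so that running it twice does not duplicate entries. This is a minimal sketch, assuming bash and write access to the target file. `append_once`, `apply_oracle_sysctl` and `ORACLE_SYSCTL` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the kernel settings from step 3 without
# duplicating them when the step is repeated.

# The ten settings listed above
ORACLE_SYSCTL=(
    "kernel.shmall = 2097152"
    "kernel.shmmax = 4294967295"
    "kernel.shmmni = 4096"
    "kernel.sem = 250 32000 100 128"
    "fs.file-max = 165536"
    "net.ipv4.ip_local_port_range = 1024 65000"
    "net.core.rmem_default = 1048576"
    "net.core.rmem_max = 1048576"
    "net.core.wmem_default = 262144"
    "net.core.wmem_max = 262144"
)

# append_once FILE LINE: append LINE unless FILE already contains exactly that line
append_once() {
    grep -qxF -- "$2" "$1" 2> /dev/null || printf '%s\n' "$2" >> "$1"
}

# apply_oracle_sysctl [FILE]: append every setting once (default /etc/sysctl.conf)
apply_oracle_sysctl() {
    local file=${1:-/etc/sysctl.conf} line
    for line in "${ORACLE_SYSCTL[@]}"; do
        append_once "$file" "$line"
    done
}
```

As root, run `apply_oracle_sysctl` and then `/sbin/sysctl -p` to apply the settings. Note that `append_once` only skips identical lines. If a key is already present with a different value, both lines remain and `sysctl -p` applies the later one, so check existing entries by hand. The same helper can also be reused for the limits.conf, /etc/pam.d/login and /etc/rc.local additions in steps 4 and 5.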

4. Configure the open-file and process limits for the oracle user (both nodes)

Append the following to the end of /etc/security/limits.conf:

[root@node-rac1 ~]# vi /etc/security/limits.conf

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

Append the following line to the end of /etc/pam.d/login:

[root@node-rac1 ~]# vi /etc/pam.d/login

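session required pam_limits.so

(On x86_64 the 64-bit module lives in /lib64/security, so the 32-bit /lib/security/pam_limits.so path may fail to load. Naming only the module lets PAM load it from its default module directory.)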

Append the following to the end of /etc/profile:

if [ "$USER" = "oracle" ]; then

if [ "$SHELL" = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

fi

Reboot Linux.

5. Configure the hangcheck-timer kernel module (both nodes)

Append the following to the end of /etc/modprobe.conf:

[root@node-rac1 ~]# vi /etc/modprobe.conf

options hangcheck-timer hangcheck_tick=30 hangcheck_margin=180

Load the hangcheck-timer module:

[root@node-rac1 ~]# /sbin/modprobe hangcheck_timer

Add /sbin/modprobe hangcheck_timer to /etc/rc.local so the module is loaded automatically at boot.

Check that it loaded:

[root@node-rac1 ~]# grep hangcheck /var/log/messages | tail -2

Jul 29 20:39:28 node-rac1 kernel: Hangcheck: starting hangcheck timer 0.9.0 (tick is 30 seconds, margin is 180 seconds).

The message above shows that the module loaded successfully.
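Instead of reading the log line by eye, you can compare the reported values with the ones set in /etc/modprobe.conf. This small sketch assumes the message format shown above. `hangcheck_values` and `check_hangcheck` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm that the running hangcheck-timer reports the values
# configured in /etc/modprobe.conf, based on the kernel message shown above.

# hangcheck_values LINE: print "TICK MARGIN" from a "Hangcheck: starting ..." message
hangcheck_values() {
    sed -n 's/.*tick is \([0-9][0-9]*\) seconds, margin is \([0-9][0-9]*\) seconds.*/\1 \2/p' <<< "$1"
}

# check_hangcheck LINE TICK MARGIN: succeed only if the message reports exactly these values
check_hangcheck() {
    local got
    got=$(hangcheck_values "$1")
    if [ "$got" = "$2 $3" ]; then
        echo "hangcheck OK (tick=$2, margin=$3)"
    else
        echo "hangcheck mismatch: expected '$2 $3', got '${got:-nothing}'"
        return 1
    fi
}
```

For example: `check_hangcheck "$(grep Hangcheck /var/log/messages | tail -1)" 30 180`.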

6. Disable the firewall (both nodes)

[root@node-rac1 ~]# /etc/init.d/iptables stop

[root@node-rac1 ~]# chkconfig iptables off

7. Time synchronization (both nodes): not covered here, but the clocks on both nodes should be kept in sync.

8. Create the oracle user and groups (both nodes)

[root@node-rac1 ~]# groupadd -g 1001 dba

[root@node-rac1 ~]# groupadd -g 1002 oinstall

[root@node-rac1 ~]# useradd -u 1001 -g oinstall -G dba oracle

Set the oracle user's password:

[root@node-rac1 ~]# passwd oracle

9. Set the oracle user's environment variables (both nodes)

[root@node-rac1 ~]# su - oracle

[oracle@node-rac1 ~]$ vi .bash_profile

On node-rac1:

export ORACLE_BASE=/u01/oracle

export ORACLE_HOME=$ORACLE_BASE/product/10201/rac_db

export ORA_CRS_HOME=/app/crs/product/10201/crs

export ORACLE_PATH=$ORACLE_BASE/common/oracle/sql:.:$ORACLE_HOME/rdbms/admin

export ORACLE_SID=racdb1

export NLS_LANG=AMERICAN_AMERICA.ZHS16GBK

export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"

export PATH=.:${PATH}:$HOME/bin:$ORACLE_HOME/bin:$ORA_CRS_HOME/bin

export PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

export PATH=${PATH}:$ORACLE_BASE/common/oracle/bin

export ORACLE_TERM=xterm

export TNS_ADMIN=$ORACLE_HOME/network/admin

export ORA_NLS10=$ORACLE_HOME/nls/data

export LD_LIBRARY_PATH=$ORACLE_HOME/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib/:/usr/lib:/usr/local/lib

export CLASSPATH=$ORACLE_HOME/JRE

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export THREADS_FLAG=native

export TEMP=/tmp

export TMPDIR=/tmp

On node-rac2 (identical except for ORACLE_SID):

export ORACLE_BASE=/u01/oracle

export ORACLE_HOME=$ORACLE_BASE/product/10201/rac_db

export ORA_CRS_HOME=/app/crs/product/10201/crs

export ORACLE_PATH=$ORACLE_BASE/common/oracle/sql:.:$ORACLE_HOME/rdbms/admin

export ORACLE_SID=racdb2

export NLS_LANG=AMERICAN_AMERICA.ZHS16GBK

export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"

export PATH=.:${PATH}:$HOME/bin:$ORACLE_HOME/bin:$ORA_CRS_HOME/bin

export PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

export PATH=${PATH}:$ORACLE_BASE/common/oracle/bin

export ORACLE_TERM=xterm

export TNS_ADMIN=$ORACLE_HOME/network/admin

export ORA_NLS10=$ORACLE_HOME/nls/data

export LD_LIBRARY_PATH=$ORACLE_HOME/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib/:/usr/lib:/usr/local/lib

export CLASSPATH=$ORACLE_HOME/JRE

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export THREADS_FLAG=native

export TEMP=/tmp

export TMPDIR=/tmp

10. Create the directories on each node (both nodes)

[root@node-rac1 ~]# mkdir -p /u01/oracle/product/10201/rac_db

[root@node-rac1 ~]# mkdir -p /app/crs/product/10201/crs

[root@node-rac1 ~]# chown -R oracle:oinstall /app

[root@node-rac1 ~]# chown -R oracle:oinstall /u01

[root@node-rac1 ~]# chmod -R 755 /app

[root@node-rac1 ~]# chmod -R 755 /u01

11. Configure SSH user equivalence between the nodes (both nodes)

On each node, as the oracle user, generate an RSA key pair:

[oracle@node-rac1 ~]$ mkdir ~/.ssh

[oracle@node-rac1 ~]$ chmod 700 ~/.ssh

[oracle@node-rac1 ~]$ cd .ssh/

[oracle@node-rac1 .ssh]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

95:f6:99:00:c9:d1:4b:9b:1d:d5:ff:0f:b9:3f:0f:f9 oracle@node-rac1

After both nodes have generated their keys, build the authorized_keys file on node-rac1 and copy it to node-rac2:

[oracle@node-rac1 .ssh]$ ssh node-rac1 cat /home/oracle/.ssh/id_rsa.pub >> authorized_keys

[oracle@node-rac1 .ssh]$ ssh node-rac2 cat /home/oracle/.ssh/id_rsa.pub >> authorized_keys

[oracle@node-rac1 .ssh]$ chmod 600 ~/.ssh/authorized_keys

[oracle@node-rac1 .ssh]$ scp authorized_keys node-rac2:/home/oracle/.ssh/
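You could also assemble the same authorized_keys file with a helper that adds each key only once. This avoids duplicate lines if the step is repeated. It is a sketch under stated assumptions: `merge_keys` is a hypothetical name, and the public keys are assumed to have already been copied to local files.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add public keys to authorized_keys once each and set the
# permissions sshd expects.

# merge_keys AUTH_FILE PUBKEY_FILE...: append each key line not already present
merge_keys() {
    local auth=$1 key
    shift
    touch "$auth"
    cat "$@" | while IFS= read -r key; do
        [ -n "$key" ] || continue                      # skip blank lines
        grep -qxF -- "$key" "$auth" || printf '%s\n' "$key" >> "$auth"
    done
    chmod 600 "$auth"                                  # owner read/write only
}
```

Here is a typical sequence on node-rac1:

1. Fetch node-rac2's key: `ssh node-rac2 cat .ssh/id_rsa.pub > /tmp/node-rac2.pub`
2. Merge both keys: `merge_keys ~/.ssh/authorized_keys ~/.ssh/id_rsa.pub /tmp/node-rac2.pub`
3. Copy the file to node-rac2 with scp, as above.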

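Test SSH equivalence from both nodes. The first connection to each host asks you to accept its host key. Answer yes so that later connections run without any prompt: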

[oracle@node-rac1 ~]$ ssh node-rac1 date

[oracle@node-rac1 ~]$ ssh node-rac2 date

[oracle@node-rac2 .ssh]$ ssh node-rac1 date

[oracle@node-rac2 .ssh]$ ssh node-rac2 date

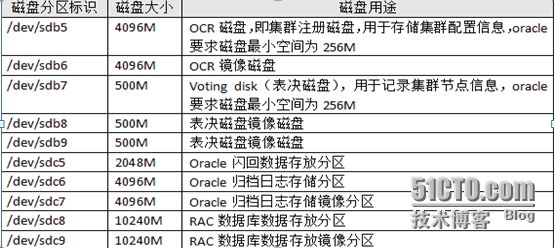
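To check every name the cluster software will use in one go, you can run a loop like the following on each node. It is a sketch: `check_ssh_equivalence` and `RAC_SSH_HOSTS` are hypothetical names. It also tests the private names (node-priv1, node-priv2), which goes beyond the four tests above, so that their host keys are accepted once as well.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run "date" over ssh against every cluster name and report failures.
# Private-interconnect names are included so their host keys get accepted too; run it
# interactively once on each node and answer "yes" to any host-key prompt.
RAC_SSH_HOSTS="node-rac1 node-rac2 node-priv1 node-priv2"

# check_ssh_equivalence [HOST...]: defaults to RAC_SSH_HOSTS when no hosts are given
check_ssh_equivalence() {
    local h failed=0
    for h in ${*:-$RAC_SSH_HOSTS}; do
        if ssh "$h" date > /dev/null; then
            echo "ok: $h"
        else
            echo "FAILED: $h"
            failed=1
        fi
    done
    return "$failed"
}
```

If any host asks for a password, user equivalence is not yet working for that host.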

12. Create the shared disks

Since no storage array is available, we simulate shared storage with VMware virtual disks. The following three files are needed:

vmware-vdiskmanager.exe ssleay32.dll libeay32.dll

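Find these three files in your VMware installation directory and copy them to F:\vm. The drive must have more than 45 GB of free space (15 GB + 30 GB for the two shared disks).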

Create the shared disk files:

vmware-vdiskmanager.exe -c -s 15Gb -a lsilogic -t 2 sdb.vmdk

vmware-vdiskmanager.exe -c -s 30Gb -a lsilogic -t 2 sdc.vmdk

Create a create.bat file in F:\vm containing the two commands above, then run it to create the shared disks.

Shut down both Linux virtual machines, node-rac1 and node-rac2.

For each of the two Linux virtual machines, open its .vmx file and append the following:

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1.sharedBus = "VIRTUAL"

scsi1:1.present = "TRUE"

scsi1:1.mode = "independent-persistent"

scsi1:1.fileName = "F:\vm\sdb.vmdk"

scsi1:1.deviceType = "disk"

scsi1:2.present = "TRUE"

scsi1:2.mode = "independent-persistent"

scsi1:2.fileName = "F:\vm\sdc.vmdk"

scsi1:2.deviceType = "disk"

disk.locking = "FALSE"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.dataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"

13. Start Linux and partition the shared disks (on node-rac1)

[root@node-rac1 ~]# fdisk /dev/sdb

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel. Changes will remain in memory only,

until you decide to write them. After that, of course, the previous

content won't be recoverable.

The number of cylinders for this disk is set to 1958.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Partition number (1-4): 1

First cylinder (1-1958, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-1958, default 1958):

Using default value 1958

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (1-1958, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-1958, default 1958): +4096

Value out of range.

Last cylinder or +size or +sizeM or +sizeK (1-1958, default 1958): +4096M

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (500-1958, default 500):

Using default value 500

Last cylinder or +size or +sizeM or +sizeK (500-1958, default 1958): +4096M

Command (m for help): N

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (999-1958, default 999):

Using default value 999

Last cylinder or +size or +sizeM or +sizeK (999-1958, default 1958): +500M

Command (m for help): N

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (1061-1958, default 1061):

Using default value 1061

Last cylinder or +size or +sizeM or +sizeK (1061-1958, default 1958): +500M

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (1123-1958, default 1123):

Using default value 1123

Last cylinder or +size or +sizeM or +sizeK (1123-1958, default 1958): +500M

Command (m for help): P

Disk /dev/sdb: 16.1 GB, 16106127360 bytes

255 heads, 63 sectors/track, 1958 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 1958 15727603+ 5 Extended

/dev/sdb5 1 499 4008154+ 83 Linux

/dev/sdb6 500 998 4008186 83 Linux

/dev/sdb7 999 1060 497983+ 83 Linux

/dev/sdb8 1061 1122 497983+ 83 Linux

/dev/sdb9 1123 1184 497983+ 83 Linux

Command (m for help): W

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@node-rac1 ~]# fdisk /dev/sdc

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel. Changes will remain in memory only,

until you decide to write them. After that, of course, the previous

content won't be recoverable.

The number of cylinders for this disk is set to 3916.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Partition number (1-4): 1

First cylinder (1-3916, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-3916, default 3916):

Using default value 3916

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (1-3916, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-3916, default 3916): +2048M

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (251-3916, default 251):

Using default value 251

Last cylinder or +size or +sizeM or +sizeK (251-3916, default 3916): +4096M

Command (m for help): N

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (750-3916, default 750):

Using default value 750

Last cylinder or +size or +sizeM or +sizeK (750-3916, default 3916): +4096M

Command (m for help): N

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (1249-3916, default 1249):

Using default value 1249

Last cylinder or +size or +sizeM or +sizeK (1249-3916, default 3916): +10240M

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (2495-3916, default 2495):

Using default value 2495

Last cylinder or +size or +sizeM or +sizeK (2495-3916, default 3916): +10240M

Command (m for help): P

Disk /dev/sdc: 32.2 GB, 32212254720 bytes

255 heads, 63 sectors/track, 3916 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 3916 31455238+ 5 Extended

/dev/sdc5 1 250 2008062 83 Linux

/dev/sdc6 251 749 4008186 83 Linux

/dev/sdc7 750 1248 4008186 83 Linux

/dev/sdc8 1249 2494 10008463+ 83 Linux

/dev/sdc9 2495 3740 10008463+ 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
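The partitions were created on node-rac1 only. node-rac2 sees the same disks, but it may not pick up the new partition tables until it re-reads them, for example with partprobe or by rebooting. Below is a sketch of a check you can run on each node. `missing_partitions` and `RAC_PARTS` are hypothetical names, and the list assumes the sdb5-sdb9 and sdc5-sdc9 layout above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list which of the shared partitions created above are not yet
# visible in /proc/partitions on the current node.
RAC_PARTS="sdb5 sdb6 sdb7 sdb8 sdb9 sdc5 sdc6 sdc7 sdc8 sdc9"

# missing_partitions [FILE]: print each absent partition name; return 1 if any are absent
missing_partitions() {
    local file=${1:-/proc/partitions} p rc=0
    for p in $RAC_PARTS; do
        # /proc/partitions columns: major minor #blocks name
        if ! awk -v p="$p" '$4 == p { f = 1 } END { exit (f ? 0 : 1) }' "$file"; then
            echo "$p"
            rc=1
        fi
    done
    return "$rc"
}
```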

14. Create and configure the raw devices (on both nodes). Append the following to the end of /etc/udev/rules.d/60-raw.rules:

[root@node-rac1 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add",KERNEL=="sdb5",RUN+="/bin/raw /dev/raw/raw1 %N"

ACTION=="add",KERNEL=="sdb6",RUN+="/bin/raw /dev/raw/raw2 %N"

ACTION=="add",KERNEL=="sdb7",RUN+="/bin/raw /dev/raw/raw3 %N"

ACTION=="add",KERNEL=="sdb8",RUN+="/bin/raw /dev/raw/raw4 %N"

ACTION=="add",KERNEL=="sdb9",RUN+="/bin/raw /dev/raw/raw5 %N"

ACTION=="add",KERNEL=="sdc5",RUN+="/bin/raw /dev/raw/raw6 %N"

ACTION=="add",KERNEL=="sdc6",RUN+="/bin/raw /dev/raw/raw7 %N"

ACTION=="add",KERNEL=="sdc7",RUN+="/bin/raw /dev/raw/raw8 %N"

ACTION=="add",KERNEL=="sdc8",RUN+="/bin/raw /dev/raw/raw9 %N"

ACTION=="add",KERNEL=="sdc9",RUN+="/bin/raw /dev/raw/raw10 %N"

KERNEL=="raw1", OWNER="oracle", GROUP="oinstall", MODE="660"

KERNEL=="raw2", OWNER="oracle", GROUP="oinstall", MODE="660"

KERNEL=="raw3", OWNER="oracle", GROUP="oinstall", MODE="644"

KERNEL=="raw4", OWNER="oracle", GROUP="oinstall", MODE="644"

KERNEL=="raw5", OWNER="oracle", GROUP="oinstall", MODE="644"

KERNEL=="raw6", OWNER="oracle", GROUP="oinstall", MODE="660"

KERNEL=="raw7", OWNER="oracle", GROUP="oinstall", MODE="660"

KERNEL=="raw8", OWNER="oracle", GROUP="oinstall", MODE="660"

KERNEL=="raw9", OWNER="oracle", GROUP="oinstall", MODE="660"

KERNEL=="raw10", OWNER="oracle", GROUP="oinstall", MODE="660"
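The twenty rules above follow a regular pattern, so they could also be generated. This reduces typing errors when the file is written on both nodes. It is a sketch; `gen_raw_rules`, `RAW_PARTS` and `RAW_644` are hypothetical names. It reproduces the mapping above:

- sdb5 to sdc9 are bound to raw1 to raw10 in order.
- raw3 to raw5 get mode 644. These are the three voting disks that root.sh formats in step 17.
- All other devices get mode 660.

```bash
#!/usr/bin/env bash
# Hypothetical generator for the 60-raw.rules entries above.
# Partition order defines the raw numbering (sdb5 -> raw1 ... sdc9 -> raw10).
RAW_PARTS="sdb5 sdb6 sdb7 sdb8 sdb9 sdc5 sdc6 sdc7 sdc8 sdc9"
# Devices given mode 644 in this guide (the voting disks); all others get 660
RAW_644="raw3 raw4 raw5"

gen_raw_rules() {
    local n=0 i part mode
    # Binding rules: bind each partition to the next raw device when it appears
    for part in $RAW_PARTS; do
        n=$((n + 1))
        printf 'ACTION=="add",KERNEL=="%s",RUN+="/bin/raw /dev/raw/raw%d %%N"\n' "$part" "$n"
    done
    # Permission rules: oracle:oinstall ownership on every raw device
    for ((i = 1; i <= n; i++)); do
        mode=660
        case " $RAW_644 " in *" raw$i "*) mode=644 ;; esac
        printf 'KERNEL=="raw%d", OWNER="oracle", GROUP="oinstall", MODE="%s"\n' "$i" "$mode"
    done
}
```

For example, run `gen_raw_rules >> /etc/udev/rules.d/60-raw.rules` as root on each node.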

When the configuration is complete, start udev on each node:

[root@node-rac1 ~]# start_udev

Starting udev: [ OK ]

Check that the rules took effect:

[root@node-rac1 ~]# ll /dev/raw/raw*

crw-rw---- 1 oracle oinstall 162, 1 Jul 29 23:48 /dev/raw/raw1

crw-rw---- 1 oracle oinstall 162, 10 Jul 29 23:48 /dev/raw/raw10

crw-rw---- 1 oracle oinstall 162, 2 Jul 29 23:48 /dev/raw/raw2

crw-r--r-- 1 oracle oinstall 162, 3 Jul 29 23:48 /dev/raw/raw3

crw-r--r-- 1 oracle oinstall 162, 4 Jul 29 23:48 /dev/raw/raw4

crw-r--r-- 1 oracle oinstall 162, 5 Jul 29 23:48 /dev/raw/raw5

crw-rw---- 1 oracle oinstall 162, 6 Jul 29 23:48 /dev/raw/raw6

crw-rw---- 1 oracle oinstall 162, 7 Jul 29 23:48 /dev/raw/raw7

crw-rw---- 1 oracle oinstall 162, 8 Jul 29 23:48 /dev/raw/raw8

crw-rw---- 1 oracle oinstall 162, 9 Jul 29 23:48 /dev/raw/raw9

If they did not take effect, reboot Linux.
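With ten devices it is easy to miss one wrong owner. The sketch below reads the ls -l output and flags any entry that is not a character device owned by oracle:oinstall. `check_raw_listing` is a hypothetical name, and it does not check the mode bits.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ls -l` output for raw devices on stdin and report any
# entry that is not a character device owned by oracle:oinstall.
check_raw_listing() {
    awk '
        { total++ }
        # Field 1 = type/permissions, field 3 = owner, field 4 = group
        substr($1, 1, 1) != "c" || $3 != "oracle" || $4 != "oinstall" {
            print "bad: " $NF
            bad++
        }
        END {
            printf "%d devices checked, %d bad\n", total, bad
            exit (bad > 0 || total == 0)
        }
    '
}
```

Usage: `ls -l /dev/raw/raw[0-9]* | check_raw_listing`. The `[0-9]` pattern limits the check to the numbered raw devices.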

15. Unpack the software and install the additional (ASMLib) packages

Install the packages:

[root@node-rac1 ~]# rpm -Uvh oracleasm-support-2.1.7-1.el5.x86_64.rpm

[root@node-rac1 ~]# rpm -Uvh oracleasm-2.6.18-164.el5-2.0.5-1.el5.x86_64.rpm

[root@node-rac1 ~]# rpm -Uvh oracleasmlib-2.0.4-1.el5.x86_64.rpm

Unpack the software:

[root@node-rac1 ~]# cd /home/oracle/

[root@node-rac1 oracle]# ls

10201_clusterware_linux_x86_64.cpio.gz 10201_database_linux_x86_64.cpio.gz

[root@node-rac1 oracle]# chown -R oracle:oinstall 10201_*

[root@node-rac1 oracle]# chmod +x 10201_*

[root@node-rac1 oracle]# su - oracle

[oracle@node-rac1 ~]$ gunzip 10201_clusterware_linux_x86_64.cpio.gz

[oracle@node-rac1 ~]$ cpio -idmv < 10201_clusterware_linux_x86_64.cpio

[oracle@node-rac1 ~]$ gunzip 10201_database_linux_x86_64.cpio.gz

[oracle@node-rac1 ~]$ cpio -idmv < 10201_database_linux_x86_64.cpio

16. Verify the environment

[oracle@node-rac1 ~]$ cd clusterware/cluvfy/

[oracle@node-rac1 cluvfy]$ ./runcluvfy.sh stage -pre crsinst -n node-rac1,node-rac2 -verbose

The check reports:

ERROR:

Could not find a suitable set of interfaces for VIPs.

This is a known issue in 10.2 RAC and is resolved later, when the VIPs are configured manually in step 18.

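The RAC installers are graphical, so before installing RAC you need remote desktop software. Xmanager is used here for remote desktop login; its setup will be covered in a later article.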

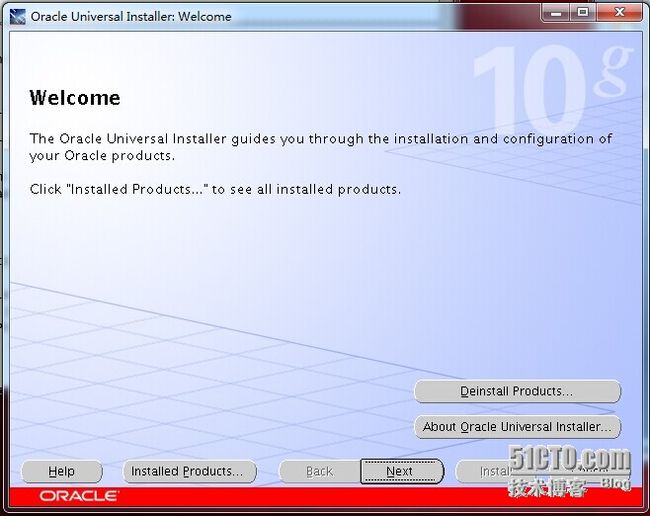

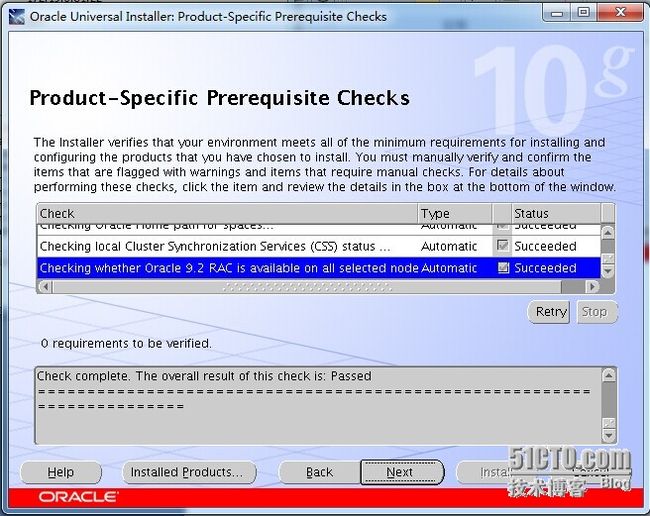

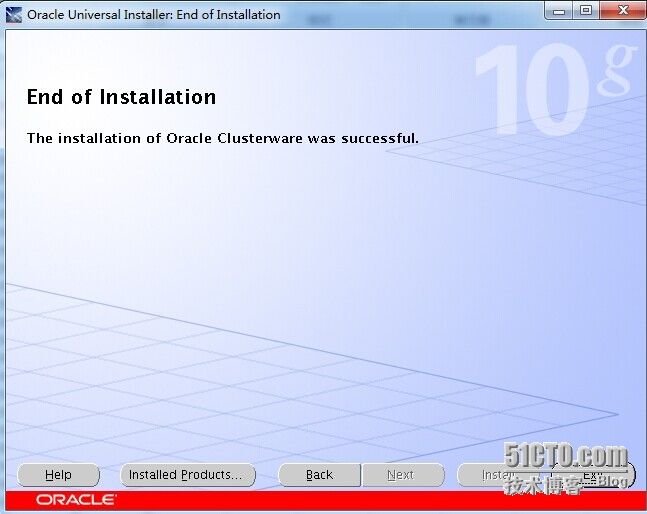

17. Install Oracle Clusterware

[oracle@node-rac1 ~]$ cd clusterware/

[oracle@node-rac1 clusterware]$ ./runInstaller

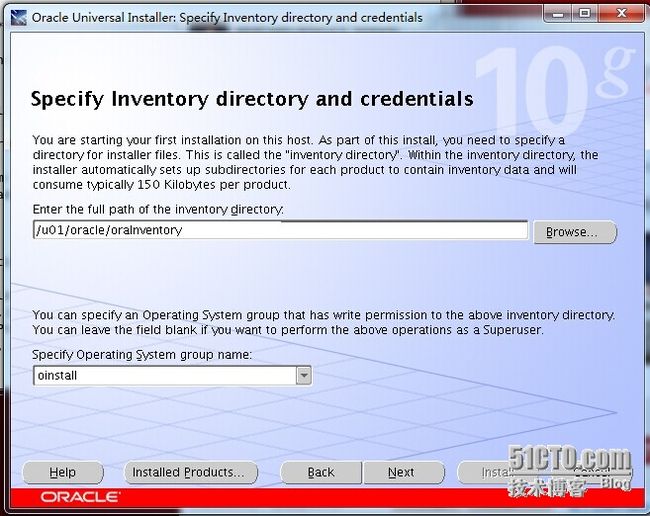

Next

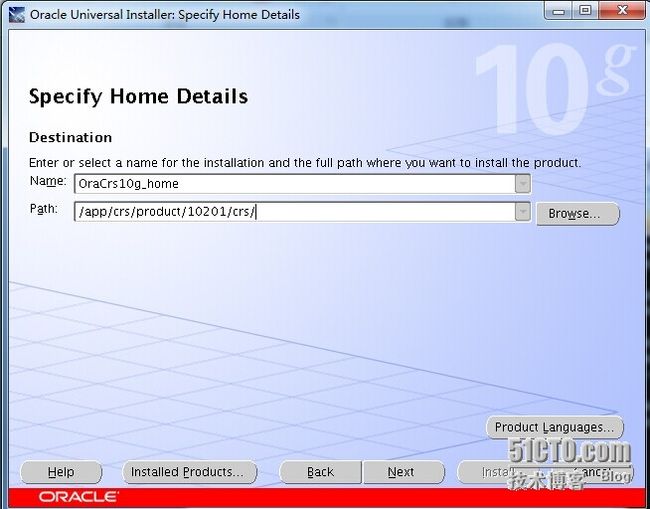

Click Next. Set the directory as shown in the figure (/app/crs/product/10201/crs), then click Next.

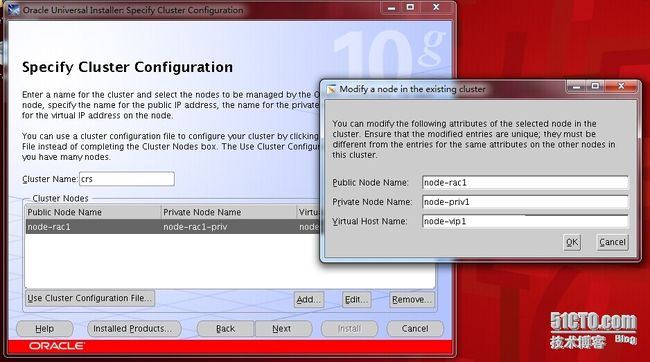

Next

Click Edit, change the entries as shown in the figure, then click OK.

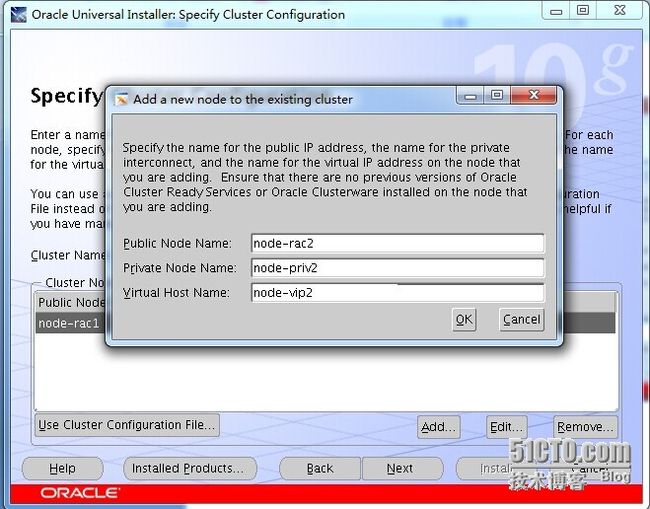

Click Add to add the second node, fill it in as shown in the figure, then click OK.

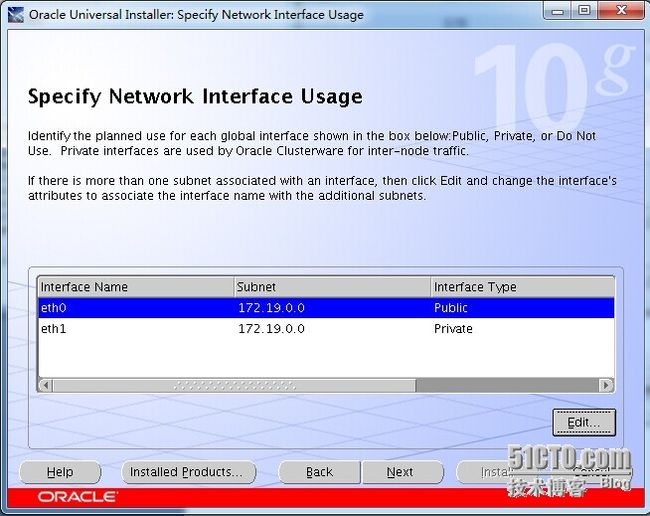

Next

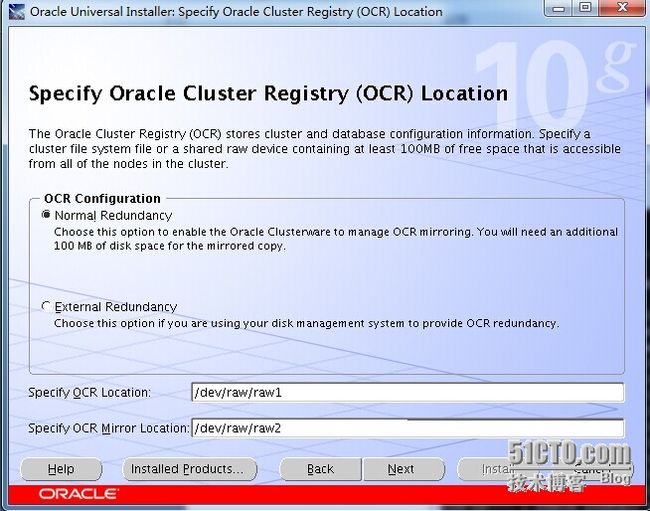

Change the settings to match the figure, then click Next.

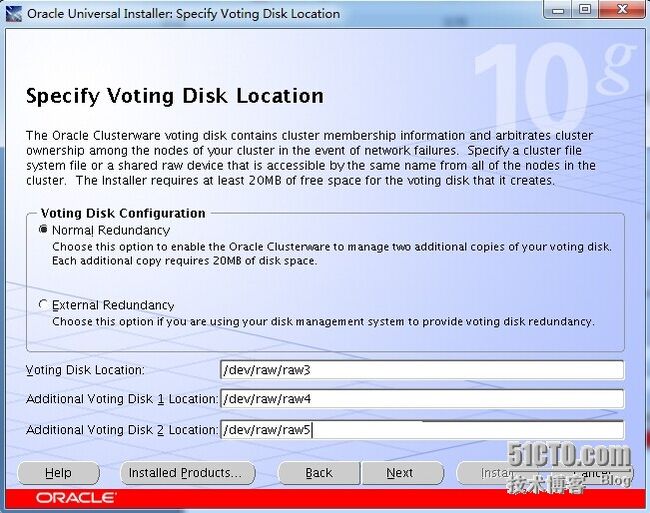

As shown in the figure, click Next.

Next

Install

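As shown in the figure, run the listed scripts as root on each node. Use the order shown below: first orainstRoot.sh on both nodes, then root.sh on node-rac1, then root.sh on node-rac2.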

[root@node-rac1 ~]# /u01/oracle/oraInventory/orainstRoot.sh

Changing permissions of /u01/oracle/oraInventory to 770.

Changing groupname of /u01/oracle/oraInventory to oinstall.

The execution of the script is complete

[root@node-rac2 ~]# /u01/oracle/oraInventory/orainstRoot.sh

Changing permissions of /u01/oracle/oraInventory to 770.

Changing groupname of /u01/oracle/oraInventory to oinstall.

[root@node-rac1 ~]# /app/crs/product/10201/crs/root.sh

WARNING: directory '/app/crs/product/10201' is not owned by root

WARNING: directory '/app/crs/product' is not owned by root

WARNING: directory '/app/crs' is not owned by root

WARNING: directory '/app' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/app/crs/product/10201' is not owned by root

WARNING: directory '/app/crs/product' is not owned by root

WARNING: directory '/app/crs' is not owned by root

WARNING: directory '/app' is not owned by root

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: node-rac1 node-priv1 node-rac1

node 2: node-rac2 node-priv2 node-rac2

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Now formatting voting device: /dev/raw/raw3

Now formatting voting device: /dev/raw/raw4

Now formatting voting device: /dev/raw/raw5

Format of 3 voting devices complete.

Startup will be queued to init within 90 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

CSS is active on these nodes.

node-rac1

CSS is inactive on these nodes.

node-rac2

Local node checking complete.

Run root.sh on remaining nodes to start CRS daemons.

[root@node-rac2 ~]# /app/crs/product/10201/crs/root.sh

WARNING: directory '/app/crs/product/10201' is not owned by root

WARNING: directory '/app/crs/product' is not owned by root

WARNING: directory '/app/crs' is not owned by root

WARNING: directory '/app' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/app/crs/product/10201' is not owned by root

WARNING: directory '/app/crs/product' is not owned by root

WARNING: directory '/app/crs' is not owned by root

WARNING: directory '/app' is not owned by root

clscfg: EXISTING configuration version 3 detected.

clscfg: version 3 is 10G Release 2.

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: node-rac1 node-priv1 node-rac1

node 2: node-rac2 node-priv2 node-rac2

clscfg: Arguments check out successfully.

NO KEYS WERE WRITTEN. Supply -force parameter to override.

-force is destructive and will destroy any previous cluster

configuration.

Oracle Cluster Registry for cluster has already been initialized

Startup will be queued to init within 90 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

CSS is active on these nodes.

node-rac1

node-rac2

CSS is active on all nodes.

Waiting for the Oracle CRSD and EVMD to start

Waiting for the Oracle CRSD and EVMD to start

Waiting for the Oracle CRSD and EVMD to start

Oracle CRS stack installed and running under init(1M)

Running vipca(silent) for configuring nodeapps

/app/crs/product/10201/crs/jdk/jre//bin/java: error while loading shared libraries: libpthread.so.0: cannot open shared object file: No such file or directory

To fix the error above, edit vipca and srvctl as follows:

[root@node-rac2 ~]# vi /app/crs/product/10201/crs/bin/vipca

Find the following lines and add the unset line after them:

if [ "$arch" = "i686" -o "$arch" = "ia64" -o "$arch" = "x86_64" ]

then

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

fi

#End workaround

unset LD_ASSUME_KERNEL    # add this line

[root@node-rac2 ~]# vi /app/crs/product/10201/crs/bin/srvctl

#Remove this workaround when the bug 3937317 is fixed

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

unset LD_ASSUME_KERNEL    # add this line
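The same edit can be applied with GNU sed. This sketch inserts `unset LD_ASSUME_KERNEL` directly after each `export LD_ASSUME_KERNEL` line, rather than after `#End workaround`. In vipca that places it inside the if block. This should have the same effect, because the variable is removed again before anything uses it. `add_unset_ld_assume` is a hypothetical name. It keeps a .orig backup and skips files that already contain an unset line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: insert "unset LD_ASSUME_KERNEL" after every
# "export LD_ASSUME_KERNEL" line (requires GNU sed for -i and one-line "a").
add_unset_ld_assume() {
    local f=$1
    if grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$f"; then
        echo "already patched: $f"
        return 0
    fi
    cp -p "$f" "$f.orig"                               # keep a backup of the original script
    sed -i '/^[[:space:]]*export LD_ASSUME_KERNEL[[:space:]]*$/a unset LD_ASSUME_KERNEL' "$f"
}
```

For example, run `add_unset_ld_assume /app/crs/product/10201/crs/bin/vipca` and `add_unset_ld_assume /app/crs/product/10201/crs/bin/srvctl` as root on both nodes.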

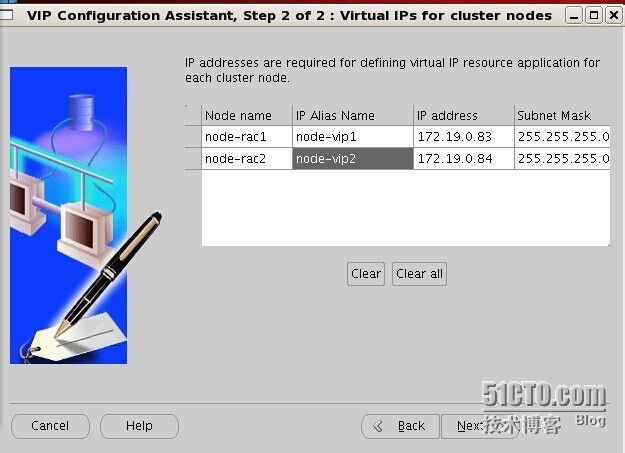

18. As root, run vipca (/app/crs/product/10201/crs/bin/vipca) to configure the VIPs

Next

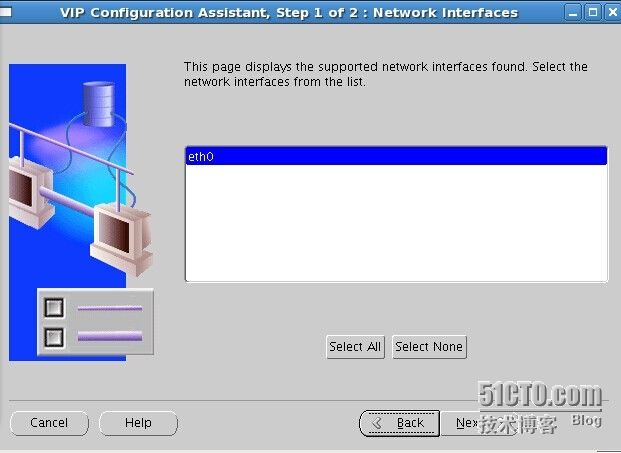

Next

Change the IP Alias Name fields to node-vip1 and node-vip2, then click Next.

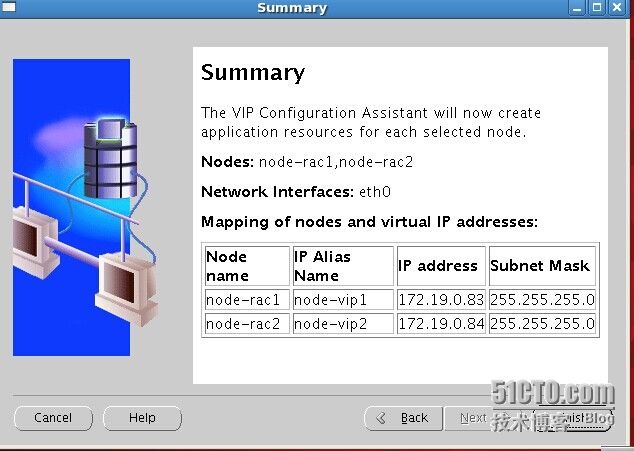

Finish

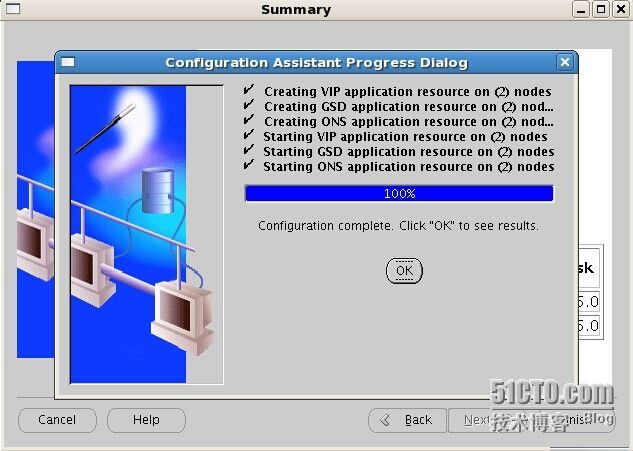

This shows that the VIPs were configured successfully. Click OK.

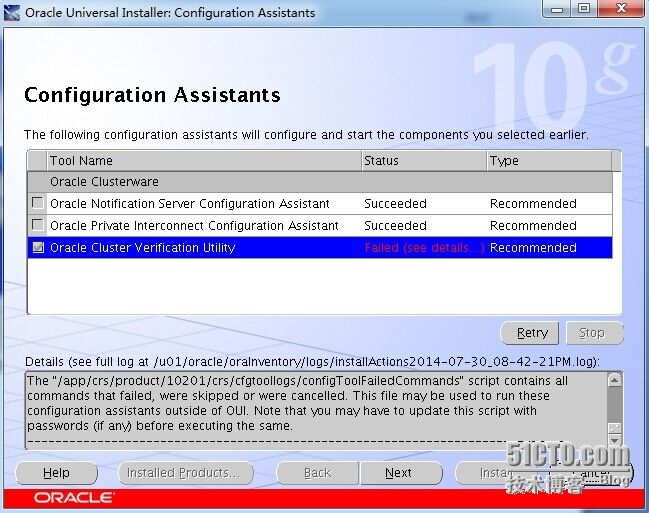

Click Retry.

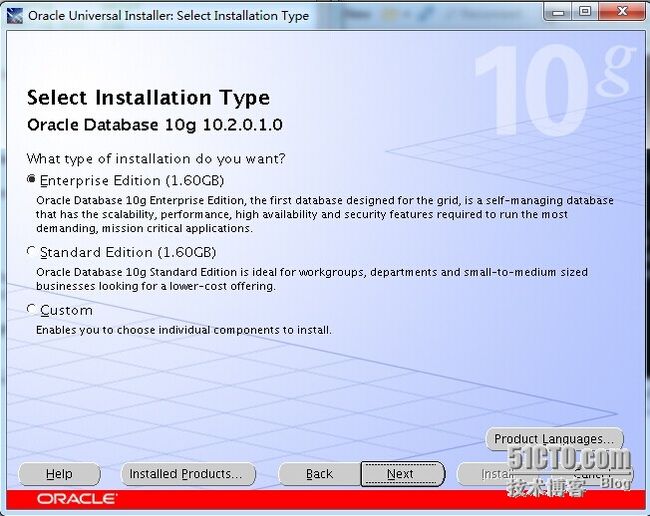

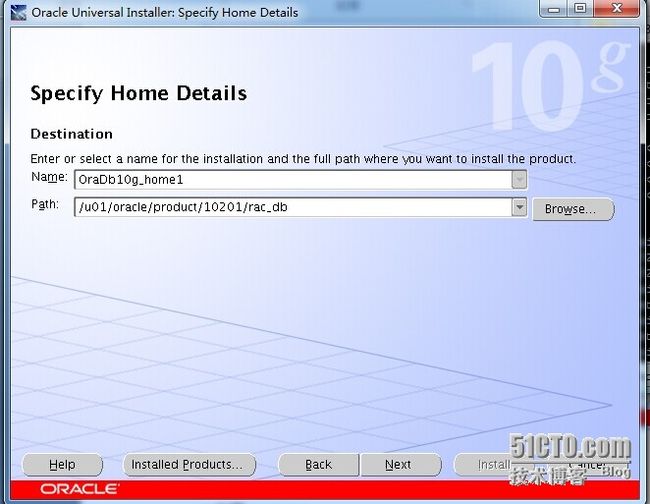

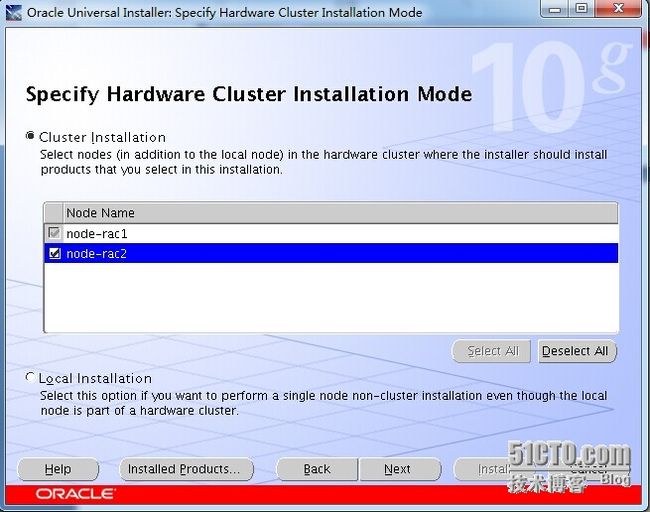

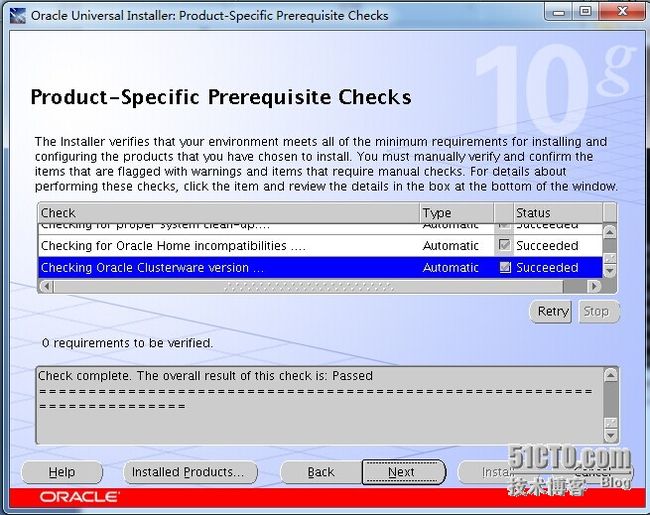

19. Install the Oracle Database software

[oracle@node-rac1 database]$ ./runInstaller

Next

Change the settings to match the figure, then click Next.

Select node-rac2 as well, then click Next.

Next

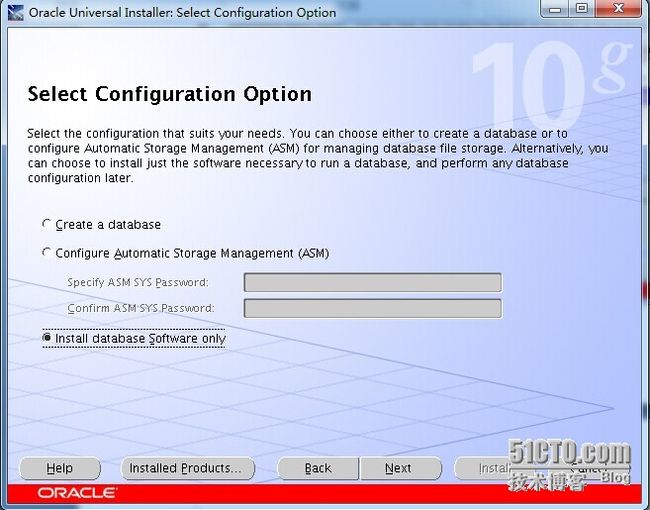

Select Install database software only, then click Next.

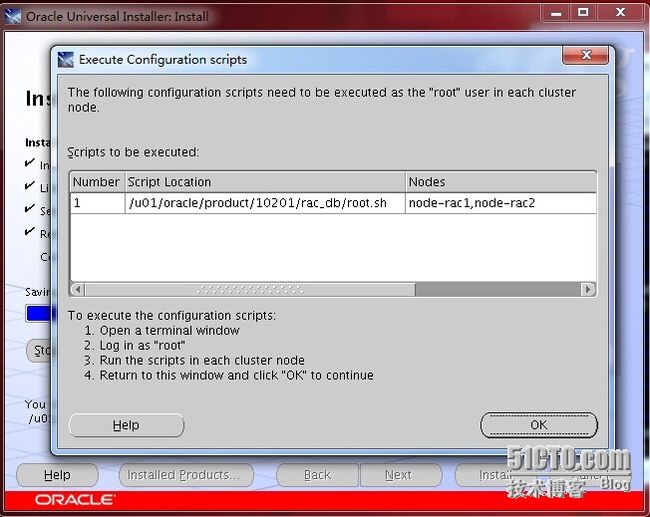

Run the following as root on each node:

[root@node-rac1 bin]# /u01/oracle/product/10201/rac_db/root.sh

[root@node-rac2 rac_db]# /u01/oracle/product/10201/rac_db/root.sh

Click OK.

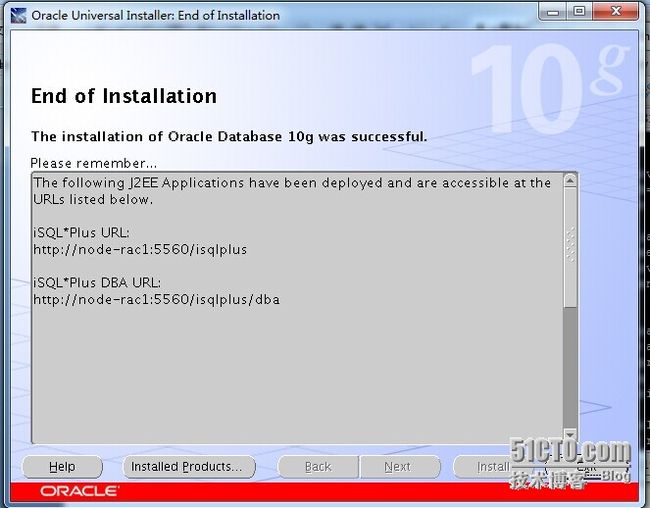

The figure above shows that the installation succeeded. Click Exit.

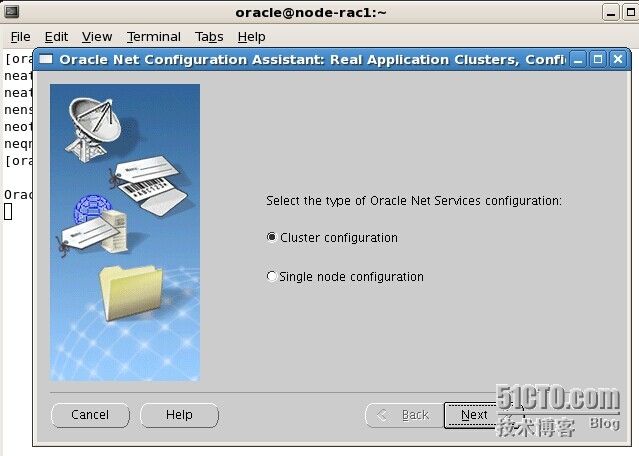

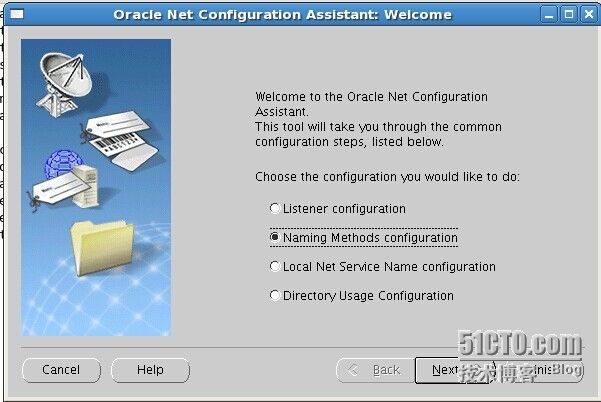

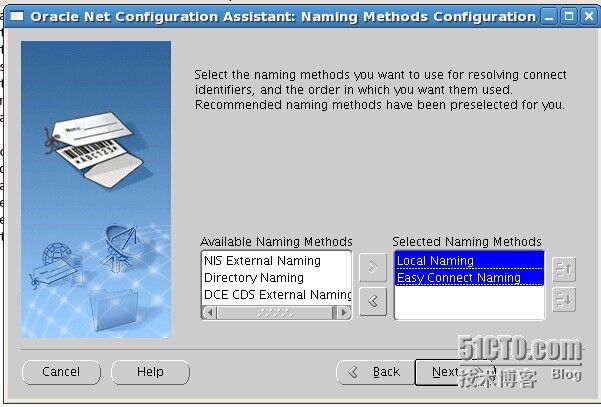

20. Configure Oracle Net

[oracle@node-rac1 database]$ cd /u01/oracle/product/10201/rac_db/bin/

[oracle@node-rac1 bin]$ ./netca

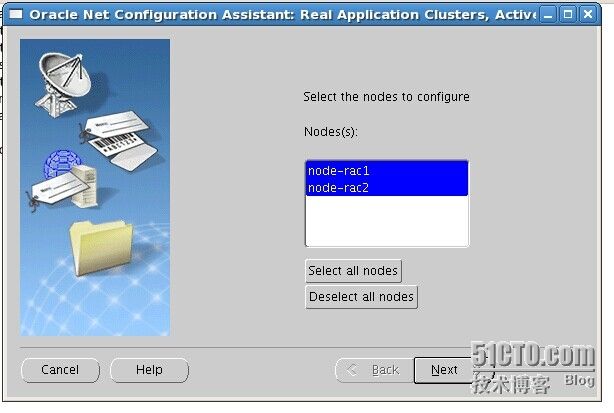

Next

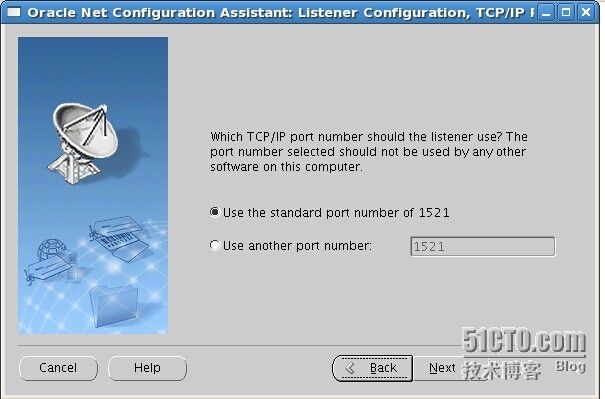

Keep clicking Next.

Next

Next Next Finish

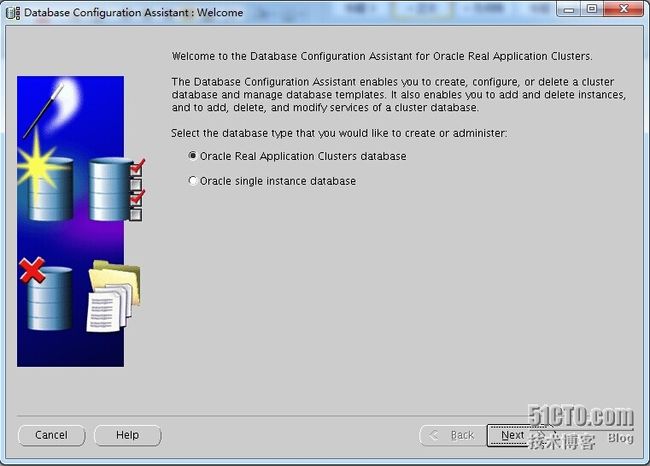

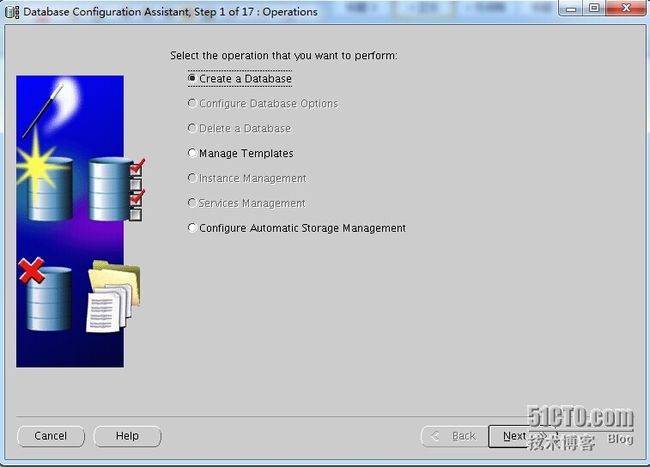

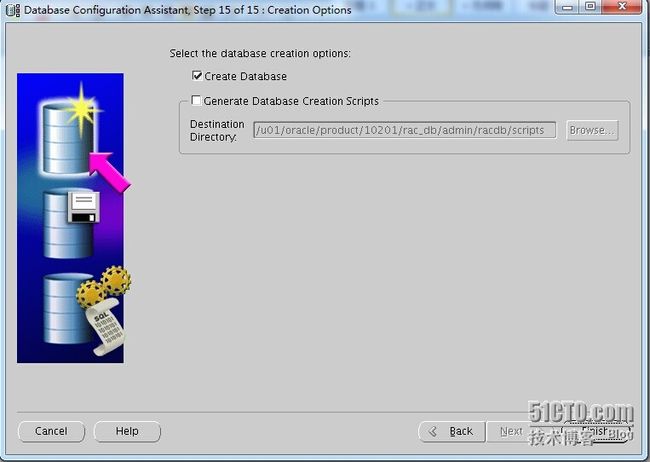

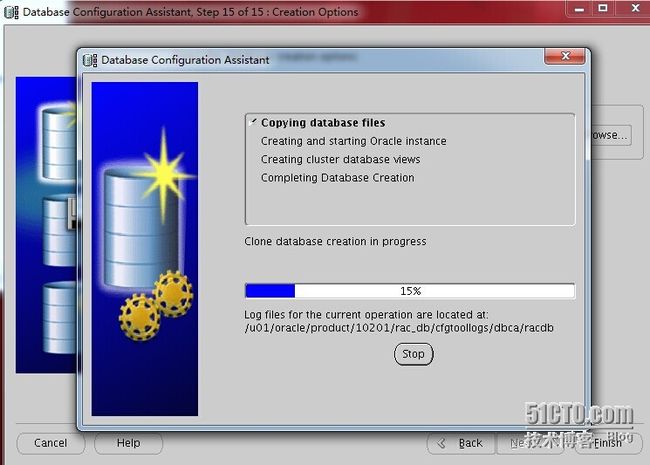

21. Create the RAC database

[oracle@node-rac1 ~]$ /u01/oracle/product/10201/rac_db/bin/dbca

Next

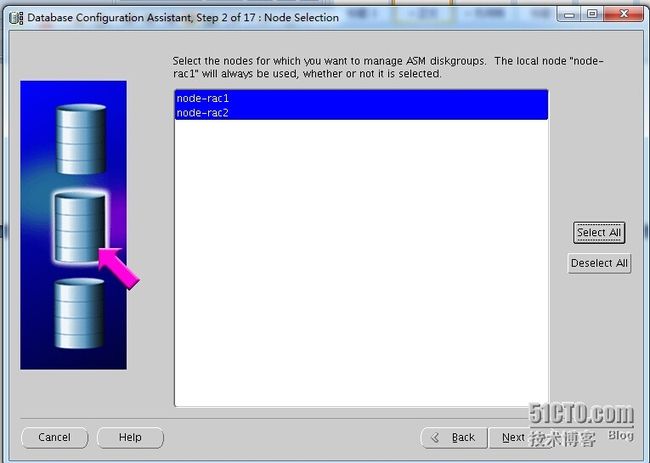

Select Configure Automatic Storage Management, then click Next.

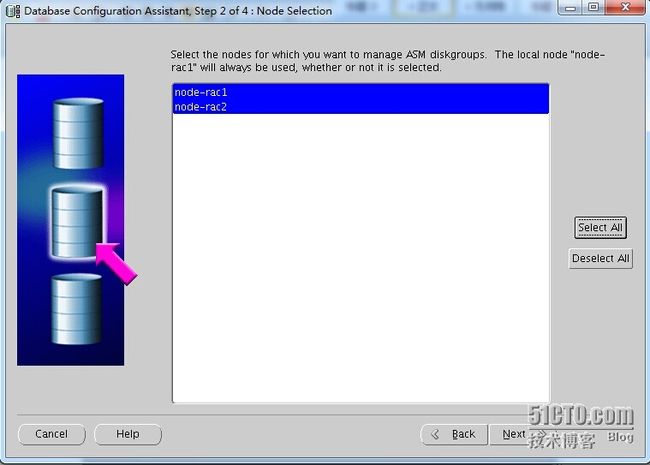

Click Select All, then click Next.

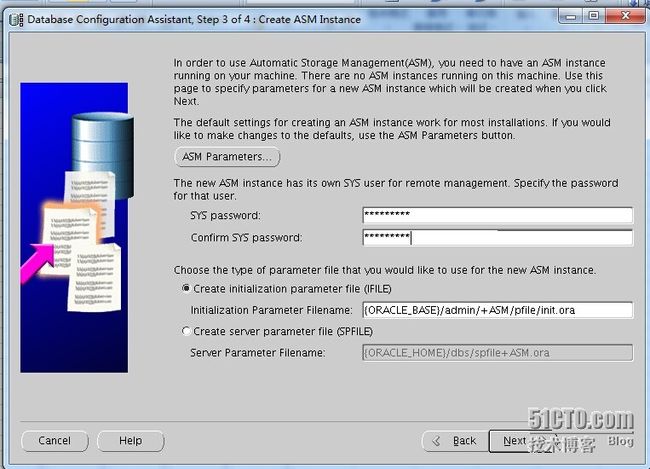

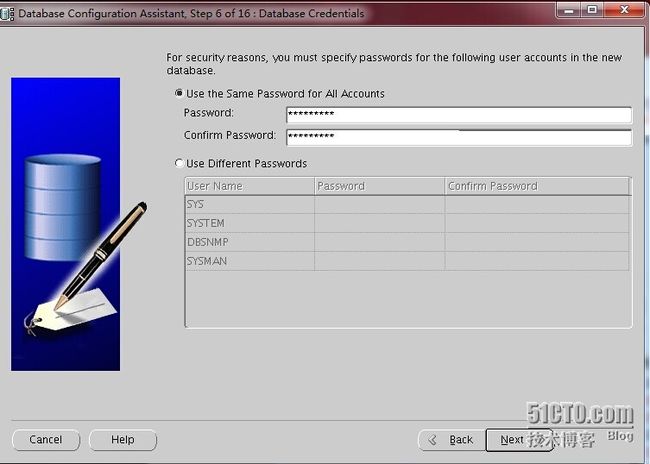

Set the password for the SYS user of the ASM instance, then click Next.

Click Create New to create an ASM disk group.

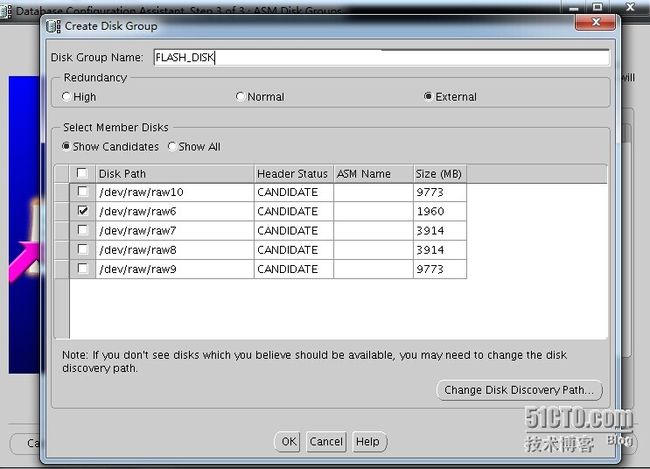

Enter FLASH_DISK as the disk group name, choose External redundancy, select the disk device /dev/raw/raw6, then click OK.

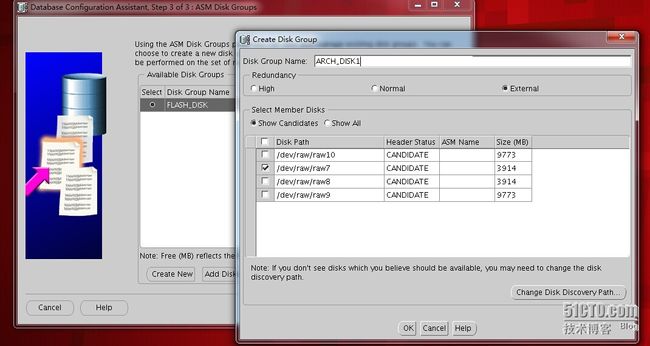

Click Create New again, configure the disk group as shown in the figure, then click OK.

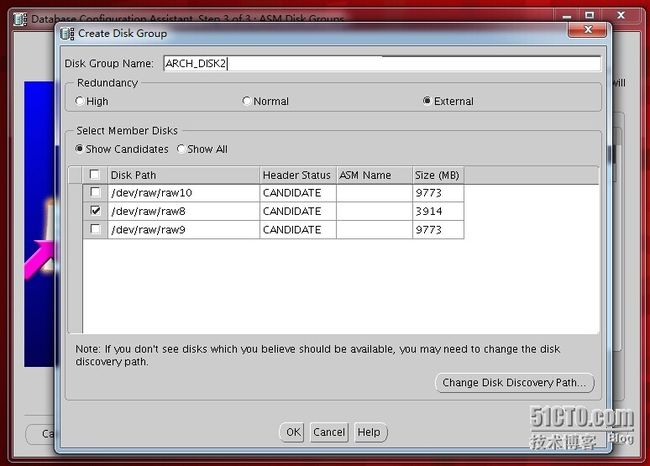

Click Create New again, configure the disk group as shown in the figure, then click OK.

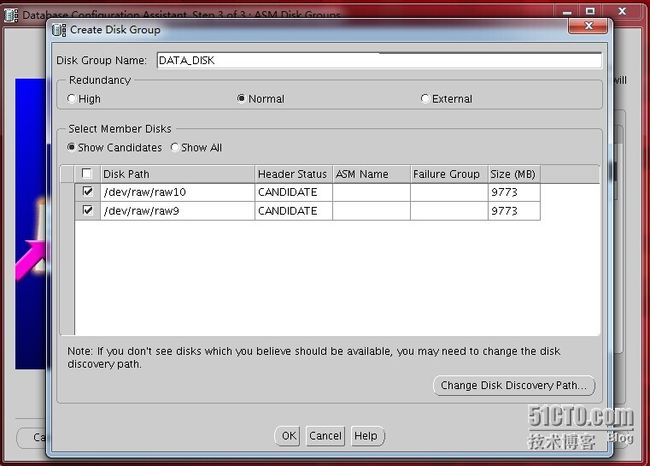

Click OK, as shown in the figure.

Then click Finish.

Select Create a Database, then click Next.

Click Select All, then click Next.

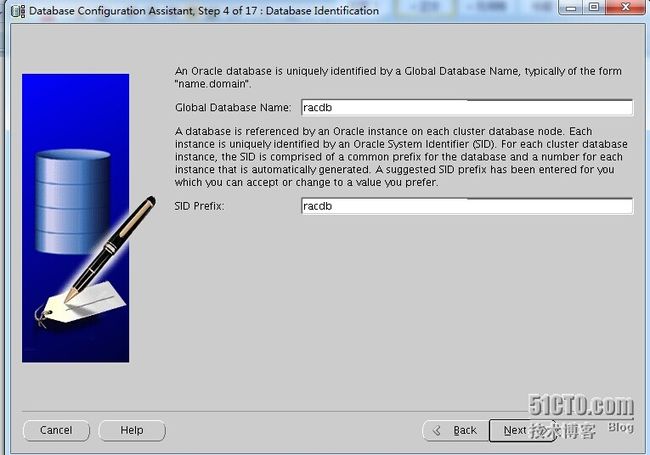

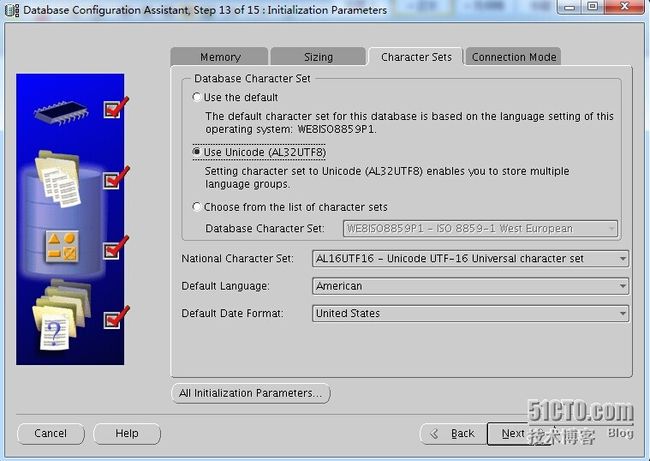

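Enter racdb as the SID, then click Next. The instances become racdb1 and racdb2, matching the ORACLE_SID values set earlier.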

Enter the passwords, then click Next.

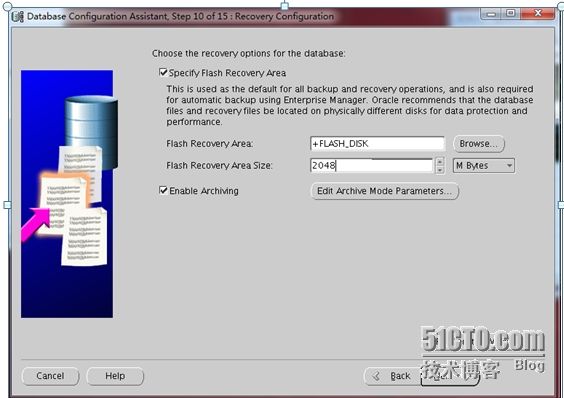

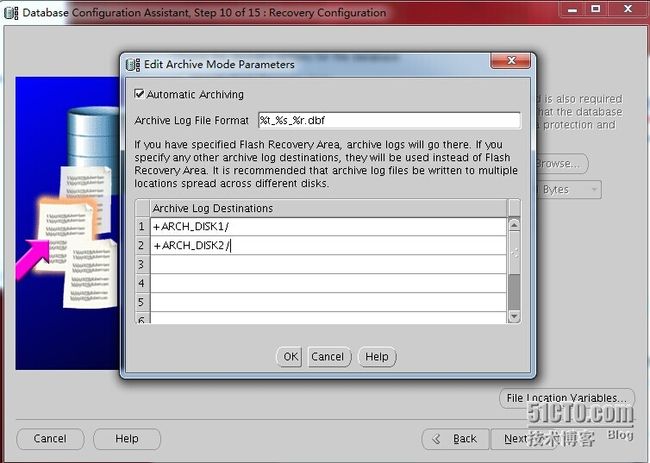

Select Automatic Storage Management (ASM), then click Next.

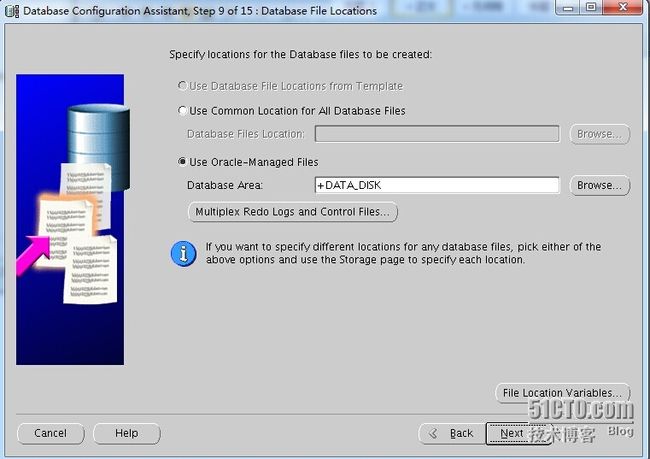

Change the settings to match the figure, then click Next.

Finish

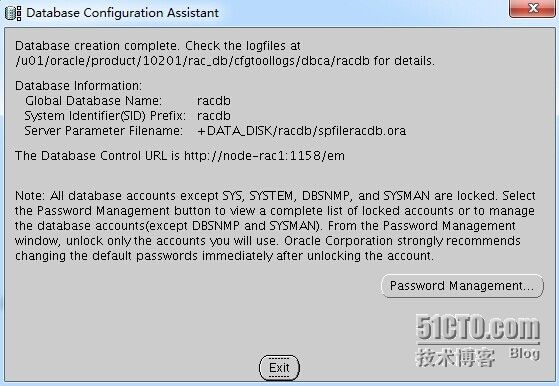

You can change account passwords here if needed.

Click Exit to finish the installation.

[oracle@node-rac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM1.asm application ONLINE ONLINE node-rac1

ora....C1.lsnr application ONLINE ONLINE node-rac1

ora....ac1.gsd application ONLINE ONLINE node-rac1

ora....ac1.ons application ONLINE ONLINE node-rac1

ora....ac1.vip application ONLINE ONLINE node-rac1

ora....SM2.asm application ONLINE ONLINE node-rac2

ora....C2.lsnr application ONLINE ONLINE node-rac2

ora....ac2.gsd application ONLINE ONLINE node-rac2

ora....ac2.ons application ONLINE ONLINE node-rac2

ora....ac2.vip application ONLINE ONLINE node-rac2

ora.racdb.db application ONLINE ONLINE node-rac2

ora....b1.inst application ONLINE ONLINE node-rac1

ora....b2.inst application ONLINE ONLINE node-rac2

The output above shows that the installation succeeded: every resource is ONLINE.
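A script can do this check for you instead of scanning the table by eye. The sketch below reads `crs_stat -t` output and reports anything whose Target or State is not ONLINE. `check_crs_online` is a hypothetical name. Note that `crs_stat -t` truncates long resource names, as seen above, so the reported names are truncated too.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `crs_stat -t` output on stdin; print resources whose
# Target or State is not ONLINE and return non-zero if any are found.
check_crs_online() {
    awk '
        # Resource rows have "application" in the Type column; headers are skipped
        $2 == "application" {
            total++
            if ($3 != "ONLINE" || $4 != "ONLINE") {
                print "not online: " $1 " (" $3 "/" $4 ")"
                bad++
            }
        }
        END {
            printf "%d resources, %d not online\n", total, bad
            exit (bad > 0 || total == 0)
        }
    '
}
```

Usage: `crs_stat -t | check_crs_online`.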