RHCS

Basic configuration:

172.25.44.250 physical host [rhel7.2]

172.25.44.1 server1.example.com(node1)[rhel6.5]

172.25.44.2 server2.example.com(node2)[rhel6.5]

172.25.44.3 server3.example.com(luci)[rhel6.5]

1. Install the required base services:

node1 node2:

# yum install -y ricci

# /etc/init.d/ricci start  # start ricci

# chkconfig ricci on  # enable at boot

Luci:

# yum install -y luci

# /etc/init.d/luci start  # start luci

# chkconfig luci on  # enable at boot

2. Open luci in a browser on port 8084:

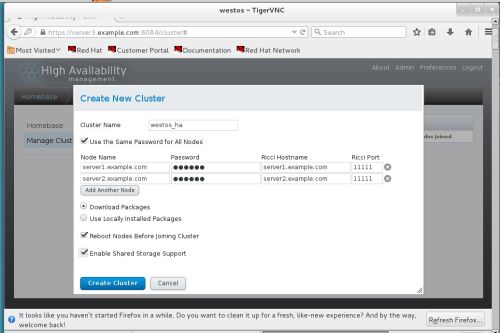

3. Perform the basic configuration on the clients:

4. Set up external fencing (on the physical host)

# yum install -y fence-virtd-multicast fence-virtd-libvirt

# systemctl start fence_virtd  # start the fence daemon

# mkdir /etc/cluster/

# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1  # create the key file
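The key only needs to be a small block of random bytes. A minimal sketch of generating it with a bounded size follows; the 128-byte size and the helper name `make_fence_key` are assumptions, and the path is a parameter so the function can be tried on a scratch directory first.

```shell
# Hypothetical helper: write a fixed-size random key to the given path.
# The 128-byte size is an assumption chosen for this sketch.
make_fence_key() {
    key_path=$1
    mkdir -p "$(dirname "$key_path")" || return 1
    # bs/count bound the read; without them dd keeps reading /dev/urandom
    # until the disk fills up.
    dd if=/dev/urandom of="$key_path" bs=128 count=1 2>/dev/null || return 1
    # The key authenticates fencing requests, so restrict it to the owner.
    chmod 600 "$key_path"
}
```

On the physical host this would be called as `make_fence_key /etc/cluster/fence_xvm.key`.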

# fence_virtd -c

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Available listeners:

multicast 1.2

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [virbr0]:

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

fence_virtd {

    listener = "multicast";

    backend = "libvirt";

    module_path = "/usr/lib64/fence-virt";

}

listeners {

    multicast {

        key_file = "/etc/cluster/fence_xvm.key";

        address = "225.0.0.12";

        interface = "virbr0";

        family = "ipv4";

        port = "1229";

    }

}

backends {

    libvirt {

        uri = "qemu:///system";

    }

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? Y
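The key has to sit at exactly the path named by key_file, so it can help to read that path back from the written configuration instead of retyping it. A small sketch, assuming the file format printed above; `fence_key_path` is a hypothetical helper name.

```shell
# Hypothetical helper: print the key_file path from a fence_virt.conf-style file.
fence_key_path() {
    # Matches lines such as:  key_file = "/etc/cluster/fence_xvm.key";
    sed -n 's/^[[:space:]]*key_file[[:space:]]*=[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}
```

For example, `ls -l "$(fence_key_path /etc/fence_virt.conf)"` shows whether the key really exists where fence_virtd will look for it.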

# scp fence_xvm.key [email protected]:/etc/cluster/  # send the key to node1 and node2

# scp fence_xvm.key [email protected]:/etc/cluster/

Add the UUIDs of node1 and node2 to the fence device.

5. Add Apache:

1) Configure the cluster:

2) Configure the resources the cluster needs:

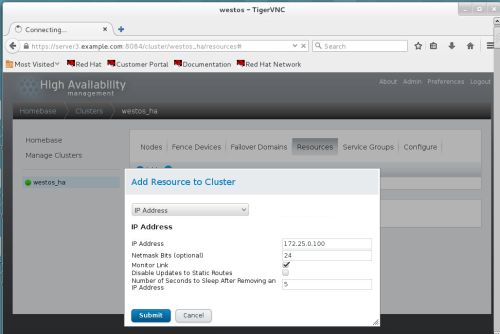

【1】VIP

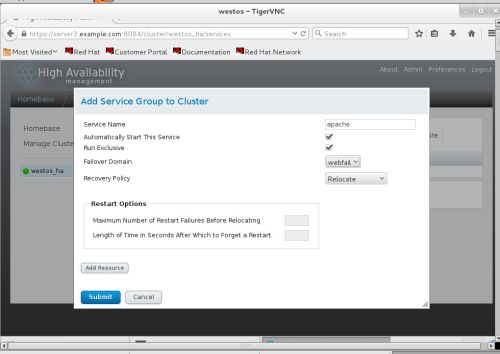

【2】HTTPD

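3) Create the service group

Clients (node1 and node2)

# yum install -y httpd  # install the test service

# vim /var/www/html/index.html  # test page; each node's page holds its own hostname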

『server1.example.com』

『server2.example.com』

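6. Testing

# /etc/init.d/httpd stop  # on server1 or server2: stop the service

Observed result:

The web page served at the VIP switches to the other node.

# echo c > /proc/sysrq-trigger  # crash the kernel

Observed result:

The web page served at the VIP switches to the other node, and the hung virtual machine is restarted by the fence device.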
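Since each node's test page holds its own hostname, the failover can be watched from any machine by polling the VIP and printing a line whenever the page body changes. A minimal sketch; `report_switches` is a hypothetical helper, and it assumes each page is a single line of text.

```shell
# Hypothetical helper: read one page body per line on stdin and print a line
# each time the body differs from the previous one (the service has moved).
report_switches() {
    prev=
    while IFS= read -r body; do
        if [ "$body" != "$prev" ]; then
            echo "now served by: $body"
            prev=$body
        fi
    done
}
```

It could be fed with something like `while sleep 1; do curl -s http://VIP/; done | report_switches`, where VIP stands for the address configured in luci (it is not given in these notes).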

7. Server side (server3)

Add a virtual disk

### yum install -y scsi-*  # install the required packages

### vim /etc/tgt/targets.conf  # edit the configuration file

<target iqn.2017-o2.com.example:server.target1>

    backing-store /dev/vdb

    initiator-address 172.25.17.10

    initiator-address 172.25.17.11

</target>
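A target definition in targets.conf has a regular shape: a target block named by its IQN, one backing-store line, and one initiator-address line per allowed client. The sketch below prints such a stanza from arguments; `tgt_target_stanza` is a hypothetical helper, not part of the tgt tools.

```shell
# Hypothetical helper: print a tgt <target> stanza.
# usage: tgt_target_stanza IQN BACKING_STORE INITIATOR_IP...
tgt_target_stanza() {
    iqn=$1
    store=$2
    shift 2
    printf '<target %s>\n' "$iqn"
    printf '    backing-store %s\n' "$store"
    # One ACL line per client that is allowed to log in.
    for ip in "$@"; do
        printf '    initiator-address %s\n' "$ip"
    done
    printf '</target>\n'
}
```

With the values used in these notes the call would be `tgt_target_stanza iqn.2017-o2.com.example:server.target1 /dev/vdb 172.25.17.10 172.25.17.11`, and the output can be reviewed before it is pasted into /etc/tgt/targets.conf.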

### /etc/init.d/tgtd start  # start the service

[root@server3 ~]# tgt-admin -s  # show all targets (as offered to remote clients)

Target 1: iqn.2017-o2.com.example:server.target1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 8590 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/vdb

Backing store flags:

Account information:

ACL information:

172.25.17.10

172.25.17.11

Clients (server1 and server2)

### yum install -y iscsi-*  # install the required packages

### iscsiadm -m discovery -t st -p 172.25.17.20  # discover the remote storage from the client

Starting iscsid: [ OK ]

172.25.17.20:3260,1 iqn.2017-o2.com.example:server.target1

### iscsiadm -m node -l  # log in to all discovered targets

Logging in to [iface: default, target: iqn.2017-o2.com.example:server.target1, portal: 172.25.17.20,3260] (multiple)

Login to [iface: default, target: iqn.2017-o2.com.example:server.target1, portal: 172.25.17.20,3260] successful.
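Each discovery record has the form PORTAL:PORT,TPGT followed by the target IQN, so the target names can be pulled out of the discovery output and used to log in to one target explicitly rather than to every known node. A small sketch; `discovered_targets` is a hypothetical helper name.

```shell
# Hypothetical helper: print the target IQN from each discovery record on stdin.
# Each record looks like:  PORTAL:PORT,TPGT IQN
discovered_targets() {
    # Status lines such as "Starting iscsid: [ OK ]" have no IQN in field 2.
    awk '$2 ~ /^iqn\./ { print $2 }'
}
```

Typical use would be `iscsiadm -m discovery -t st -p 172.25.17.20 | discovered_targets`, followed by `iscsiadm -m node -T <iqn> -p 172.25.17.20 -l` for the target of interest.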

[root@server1 ~]# fdisk -l

Disk /dev/vda: 21.5 GB, 21474836480 bytes

16 heads, 63 sectors/track, 41610 cylinders

Units = cylinders of 1008 * 512 = 516096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0008e924

Device Boot Start End Blocks Id System

/dev/vda1 * 3 1018 512000 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/vda2 1018 41611 20458496 8e Linux LVM

Partition 2 does not end on cylinder boundary.

Disk /dev/mapper/VolGroup-lv_root: 19.9 GB, 19906166784 bytes

255 heads, 63 sectors/track, 2420 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/mapper/VolGroup-lv_swap: 1040 MB, 1040187392 bytes

255 heads, 63 sectors/track, 126 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sda: 8589 MB, 8589934592 bytes

64 heads, 32 sectors/track, 8192 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

* [server1 only]

### fdisk -cu /dev/sda  # partition the disk

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0x76bc3334.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First sector (2048-16777215, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-16777215, default 16777215):

Using default value 16777215

Command (m for help): t

Selected partition 1

Hex code (type L to list codes): 8e

Changed system type of partition 1 to 8e (Linux LVM)

Command (m for help): p

Disk /dev/sda: 8589 MB, 8589934592 bytes

64 heads, 32 sectors/track, 8192 cylinders, total 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x76bc3334

Device Boot Start End Blocks Id System

/dev/sda1 2048 16777215 8387584 8e Linux LVM

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

Clients (server1 and server2)

### fdisk -l  # check that both nodes see the same partition table

Device Boot Start End Blocks Id System

/dev/sda1 2 8192 8387584 8e Linux LVM

[root@server1 ~]# pvcreate /dev/sda1  # [server1] create the PV

Physical volume "/dev/sda1" successfully created

[root@server1 ~]# pvs  # verify

PV VG Fmt Attr PSize PFree

/dev/sda1 lvm2 a-- 8.00g 8.00g

/dev/vda2 VolGroup lvm2 a-- 19.51g 0

[root@server1 ~]# vgcreate clustervg /dev/sda1  # [server1] create the VG

Clustered volume group "clustervg" successfully created

[root@server1 ~]# vgs  # verify

VG #PV #LV #SN Attr VSize VFree

VolGroup 1 2 0 wz--n- 19.51g 0

clustervg 1 0 0 wz--nc 8.00g 8.00g
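In the vgs listing the two groups differ only in the last attribute character: the sixth position of Attr is "c" when the volume group is clustered. A sketch of checking that in a script; `vg_attr_is_clustered` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if a vg_attr string marks the VG as clustered.
# The sixth attribute character is "c" for a clustered volume group.
vg_attr_is_clustered() {
    case $1 in
        ?????c*) return 0 ;;
        *)       return 1 ;;
    esac
}
```

It could be combined with LVM's own reporting, for example `vg_attr_is_clustered "$(vgs --noheadings -o vg_attr clustervg | tr -d ' ')" && echo clustered`.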

[root@server1 ~]# lvcreate -L 4G -n demo clustervg  # [server1] create the LV

Logical volume "demo" created

[root@server1 ~]# lvs  # verify

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 18.54g

lv_swap VolGroup -wi-ao---- 992.00m

demo clustervg -wi-a----- 4.00g

[root@server1 ~]# mkfs.ext4 /dev/clustervg/demo  # [server1] format the volume

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

262144 inodes, 1048576 blocks

52428 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=1073741824

32 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

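### mount /dev/clustervg/demo /mnt  # [server1] mount

[root@server1 ~]# cd /mnt/

[root@server1 mnt]# vim index.html  # [server1] create a test file

[root@server1 ~]# umount /mnt/  # [server1] unmount

### clusvcadm -d httpd  # [server1] disable the service

### clusvcadm -e httpd  # [server1] enable the service

### clusvcadm -r httpd -m server2.example.com  # relocate the service from server1 to server2

### lvextend -L +2G /dev/clustervg/demo  # [server2] extend the logical volume

### resize2fs /dev/clustervg/demo  # [server2] grow the file system

### clusvcadm -d httpd  # [server2] disable the service

### lvremove /dev/clustervg/demo  # [server2] remove the LV

### lvcreate -L 2G -n demo clustervg  # [server2] recreate the LV

### mkfs.gfs2 -p lock_dlm -j 3 -t haha:mygfs2 /dev/clustervg/demo  # [server2] reformat as gfs2

### mount /dev/clustervg/demo /mnt  # [server1, server2] mount

### vim /mnt/index.html  # [server1 or server2] write the test page

### umount /mnt/  # [server1, server2] unmount

### vim /etc/fstab  # [server1, server2] mount automatically at boot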

/dev/clustervg/demo /var/www/html gfs2 _netdev 0 0
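The same fstab line has to be added on both nodes, and adding it twice by accident leaves a duplicate entry. A minimal sketch of an append that is safe to repeat; `ensure_fstab_entry` is a hypothetical helper, and the file is a parameter so it can be tried on a copy first.

```shell
# Hypothetical helper: append an entry to an fstab-style file unless an
# identical line is already present.
ensure_fstab_entry() {
    fstab=$1
    entry=$2
    # -x: whole-line match, -F: literal string, -q: quiet
    grep -qxF -e "$entry" "$fstab" 2>/dev/null || printf '%s\n' "$entry" >> "$fstab"
}
```

For example: `ensure_fstab_entry /etc/fstab '/dev/clustervg/demo /var/www/html gfs2 _netdev 0 0'`. The `_netdev` option matters here because the device is reached over iSCSI, so the mount has to wait until the network is up.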

### mount -a  # [server1, server2] mount everything listed in fstab

### df -h  # [server1, server2] check the result

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 6% /

tmpfs 499M 32M 468M 7% /dev/shm

/dev/vda1 485M 33M 427M 8% /boot

/dev/mapper/clustervg-demo 2.0G 388M 1.7G 19% /var/www/html

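### clusvcadm -e httpd  # [server2] enable the service

### lvextend -L +5G /dev/clustervg/demo  # [server2] extend the logical volume

### gfs2_grow /dev/clustervg/demo  # [server2] grow the file system

[root@server2 ~]# gfs2_tool journals /dev/clustervg/demo  # [server2] show the number of journals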

journal2 - 128MB

journal1 - 128MB

journal0 - 128MB

3 journal(s) found.

[root@server2 ~]# gfs2_jadd -j 3 /dev/clustervg/demo  # [server2] add three journals

Filesystem: /var/www/html

Old Journals 3

New Journals 6

[root@server2 ~]# gfs2_tool journals /dev/clustervg/demo  # verify

journal2 - 128MB

journal3 - 128MB

journal1 - 128MB

journal5 - 128MB

journal4 - 128MB

journal0 - 128MB

6 journal(s) found.
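Every node that mounts a GFS2 file system at the same time needs its own journal, so the journal count is worth checking before another node is added. A sketch that counts journals in the listing above and compares the count with a node total; both helper names are hypothetical.

```shell
# Hypothetical helper: count the journals listed on stdin
# (lines such as "journal0 - 128MB").
count_journals() {
    grep -c '^journal[0-9][0-9]* - '
}

# Hypothetical helper: succeed if there are at least as many journals as nodes.
enough_journals() {
    journals=$1
    nodes=$2
    [ "$journals" -ge "$nodes" ]
}
```

For example: `n=$(gfs2_tool journals /dev/clustervg/demo | count_journals); enough_journals "$n" 2 || echo "add journals with gfs2_jadd"`.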

[root@server2 ~]# gfs2_tool sb /dev/clustervg/demo table haha:mygfs2  # [server1, server2] change the lock table name

You shouldn't change any of these values if the filesystem is mounted.

Are you sure? [y/n] y

current lock table name = "haha:mygfs2"

new lock table name = "haha:mygfs2"

Done

[root@server2 ~]# gfs2_tool sb /dev/clustervg/demo all  # [server1, server2] show all superblock fields

mh_magic = 0x01161970

mh_type = 1

mh_format = 100

sb_fs_format = 1801

sb_multihost_format = 1900

sb_bsize = 4096

sb_bsize_shift = 12

no_formal_ino = 2

no_addr = 23

no_formal_ino = 1

no_addr = 22

sb_lockproto = lock_dlm

sb_locktable = haha:mygfs2

uuid = ef017251-3b40-8c81-37ce-523de9cf40e6