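Kubernetes is currently one of the most active container orchestration tools. Working from books and online material, I recently installed it by hand in an intranet environment and wrote the process down here for future reference.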

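Downloading some of the packages below may require a way around network restrictions (for example a proxy). Please arrange that yourself.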

Thanks to the author of this article: http://blog.csdn.net/newcrane/article/details/78952987

I. Preparation

1. Prepare three hosts: one master node and two worker nodes.

    192.168.0.44 master
    192.168.0.45 node1
    192.168.0.46 node2

Add these entries to /etc/hosts on all three hosts.

2. Disable SELinux, swap and firewalld on all three nodes.
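Step 2 gives no commands. Below is a minimal sketch for CentOS 7 that assumes the standard file locations /etc/selinux/config and /etc/fstab. The two helper names are hypothetical. They take the file path as an argument so you can try the edits on a copy first. The runtime commands are left as comments.

```shell
# Minimal sketch (CentOS 7 assumed): persistently disable SELinux and swap.
# Both helpers take the file path as an argument so they can be tried on a copy.

# Set SELINUX=disabled (SELINUXTYPE= is left untouched); normally /etc/selinux/config
disable_selinux_config() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Comment out every active swap entry; normally /etc/fstab
disable_swap_in_fstab() {
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Run as root on each of the three nodes:
#   setenforce 0                      # permissive until the next reboot
#   disable_selinux_config /etc/selinux/config
#   swapoff -a                        # turn swap off now
#   disable_swap_in_fstab /etc/fstab  # keep it off after a reboot
#   systemctl stop firewalld
#   systemctl disable firewalld
```

`setenforce 0` only switches SELinux to permissive mode for the current boot. The `disabled` setting in the config file takes full effect after a reboot.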

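3. Set the kernel parameters: write them to a file and load it with sysctl -p. The net.bridge.* keys only exist once the br_netfilter module from step 4 is loaded. If sysctl reports them as missing, run modprobe br_netfilter first.

    ]# cat /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    vm.swappiness=0
    ]# sysctl -p /etc/sysctl.d/k8s.conf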

4. Load the required kernel module, and have rc.local load it again at boot:

    ]# modprobe br_netfilter
    ]# echo "modprobe br_netfilter" >> /etc/rc.local
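Step 4 persists the module through rc.local. On systemd hosts such as CentOS 7, a file in /etc/modules-load.d/ is an alternative. The sketch below writes that file together with the sysctl file from step 3 under a root prefix, so you can dry-run it in a scratch directory. The helper name `write_k8s_kernel_files` is hypothetical.

```shell
# Minimal sketch: write the files from steps 3 and 4 under a root prefix,
# "/" on a real node or a scratch directory for a dry run.
write_k8s_kernel_files() {
    root=${1:-/}
    mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
    # Kernel parameters from step 3
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
EOF
    # systemd-modules-load reads module names (one per line) from here at boot
    echo br_netfilter > "$root/etc/modules-load.d/br_netfilter.conf"
}

# On a real node, as root: write the files, load the module, then apply sysctl
#   write_k8s_kernel_files /
#   modprobe br_netfilter
#   sysctl -p /etc/sysctl.d/k8s.conf
```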

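5. Set the default policy of the iptables FORWARD chain to ACCEPT, because Docker 1.13 and later sets it to DROP. Re-apply it from rc.local at boot, after a delay so that Docker has started first:

    ]# /sbin/iptables -P FORWARD ACCEPT
    ]# echo "sleep 60 && /sbin/iptables -P FORWARD ACCEPT" >> /etc/rc.local
    ]# chmod +x /etc/rc.d/rc.local

On CentOS 7, /etc/rc.local is a link to /etc/rc.d/rc.local, which is not executable by default. Without the chmod, neither this line nor the modprobe line from step 4 runs at boot.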

6. Install the dependency packages:

    yum install -y epel-release
    yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget

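II. Create the CA certificate and key files

Certificates only need to be signed on the master node. Afterwards, copy the certificates the nodes need over to them. This guide uses cfssl for signing.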

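1. Install cfssl

1) Create a directory and cd into it

    mkdir /usr/local/cfssl/
    cd /usr/local/cfssl/

2) Download the required binaries. Save them under the names the later commands use (cfssl, cfssljson, cfssl-certinfo):

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O cfssl
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O cfssljson
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O cfssl-certinfo

3) Make them executable

    chmod +x *

4) Add the directory to PATH and apply the change

    ]# cat /etc/profile.d/cfssl.sh
    export PATH=$PATH:/usr/local/cfssl
    ]# source /etc/profile.d/cfssl.sh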

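2. Create the CA configuration file. Certificates signed with the kubernetes profile below are valid for 8760h (one year), after which they must be renewed.

    ]# mkdir -p /etc/kubernetes/cfssl/
    ]# cd /etc/kubernetes/cfssl/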

    ]# cat ca-config.json
    {
      "signing": {
        "default": {
          "expiry": "8760h"
        },
        "profiles": {
          "kubernetes": {
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ],
            "expiry": "8760h"
          }
        }
      }
    }

3. Create the CA certificate signing request

    ]# cat ca-csr.json
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Sichuan",
          "L": "Chengdu",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

4. Generate the CA certificate and private key (this writes ca.pem, ca-key.pem and ca.csr):

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca

5. Create the kubernetes certificate signing request and generate the certificate

    ]# cat kubernetes-csr.json
    {
      "CN": "kubernetes",
      "hosts": [
        "127.0.0.1",
        "192.168.0.44",
        "10.254.0.1",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Sichuan",
          "L": "Chengdu",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    ]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
        -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

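Replace the IP addresses above with those of your own hosts. 10.254.0.1 is the virtual IP of the kubernetes service. It is the first usable address of the range set by --service-cluster-ip-range in the kube-apiserver unit (10.254.0.0/16 here).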
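Two service addresses in this guide follow from --service-cluster-ip-range=10.254.0.0/16. One is 10.254.0.1 above. The other is 10.254.0.2, the second address in the range, which this guide later uses for the kubelet's --cluster-dns. If you pick a different range, a small POSIX shell sketch can derive them from the CIDR. The helper names are hypothetical.

```shell
# Minimal sketch: derive service addresses from the service CIDR.
# first_service_ip 10.254.0.0/16 prints 10.254.0.1

# Dotted quad -> integer
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# Integer -> dotted quad
int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# nth_service_ip CIDR N: the N-th address after the network address
nth_service_ip() {
    net_int=$(ip_to_int "${1%/*}")
    host_bits=$(( 32 - ${1#*/} ))
    # Clear the host bits to get the network address, then add the offset
    net_int=$(( (net_int >> host_bits) << host_bits ))
    int_to_ip $(( net_int + $2 ))
}

first_service_ip() {
    nth_service_ip "$1" 1
}
```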

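6. Create the admin certificate and key

    ]# cat admin-csr.json
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Sichuan",
          "L": "Chengdu",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    ]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
        -profile=kubernetes admin-csr.json | cfssljson -bare admin

O is set to system:masters. Kubernetes' default RBAC policy binds that group to the cluster-admin role, so kubectl authenticating with this certificate has full access to the cluster.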

7. Create the kube-proxy certificate and key

    ]# cat kube-proxy-csr.json
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Sichuan",
          "L": "Chengdu",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    ]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
        -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

The CN system:kube-proxy is the user name that the default system:node-proxier binding grants kube-proxy's permissions to.
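The CSR files above differ only in CN, O and hosts. A small helper can write them, which avoids copy-and-paste mistakes when you change the C/ST/L values or add a master IP. This is a sketch only. The helper name `write_csr` is hypothetical, and the name fields are this guide's values.

```shell
# Minimal sketch: write a cfssl CSR file like the ones above.
# C/ST/L/OU are this guide's values; change them for your site.
# Usage: write_csr FILE CN O [HOST...]
write_csr() {
    csr_file=$1
    csr_cn=$2
    csr_o=$3
    shift 3
    # Build a JSON list of quoted host entries: "a", "b", ...
    csr_hosts=""
    for h in "$@"; do
        csr_hosts="$csr_hosts${csr_hosts:+, }\"$h\""
    done
    cat > "$csr_file" <<EOF
{
  "CN": "$csr_cn",
  "hosts": [$csr_hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Sichuan", "L": "Chengdu", "O": "$csr_o", "OU": "System" }
  ]
}
EOF
}

# Example: regenerate the admin and kube-proxy requests, then sign as before
#   write_csr admin-csr.json admin system:masters
#   write_csr kube-proxy-csr.json system:kube-proxy k8s
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#       -profile=kubernetes admin-csr.json | cfssljson -bare admin
```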

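8. Copy the generated certificates and keys to the node hosts so that all three hosts have a copy. Create the target directory on the nodes first:

    ssh 192.168.0.45 mkdir -p /etc/kubernetes/cfssl
    ssh 192.168.0.46 mkdir -p /etc/kubernetes/cfssl
    scp *.pem 192.168.0.45:/etc/kubernetes/cfssl
    scp *.pem 192.168.0.46:/etc/kubernetes/cfssl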

III. Deploy etcd

etcd is the primary datastore of the Kubernetes cluster. In this architecture it only needs to be installed on the master node.

1. Download and unpack etcd

    ]# wget https://github.com/coreos/etcd/releases/download/v3.3.2/etcd-v3.3.2-linux-amd64.tar.gz
    ]# tar xzf etcd-v3.3.2-linux-amd64.tar.gz
    ]# mv etcd-v3.3.2-linux-amd64 /usr/local/etcd
    ## Add etcd to PATH
    ]# cat /etc/profile.d/etcd.sh
    export PATH=$PATH:/usr/local/etcd/
    ]# source /etc/profile.d/etcd.sh

2. Create the working directory

    mkdir /var/lib/etcd

3. Create the systemd unit

    ]# cat /usr/lib/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    ExecStart=/usr/local/etcd/etcd \
      --name master \
      --cert-file=/etc/kubernetes/cfssl/kubernetes.pem \
      --key-file=/etc/kubernetes/cfssl/kubernetes-key.pem \
      --peer-cert-file=/etc/kubernetes/cfssl/kubernetes.pem \
      --peer-key-file=/etc/kubernetes/cfssl/kubernetes-key.pem \
      --trusted-ca-file=/etc/kubernetes/cfssl/ca.pem \
      --peer-trusted-ca-file=/etc/kubernetes/cfssl/ca.pem \
      --initial-advertise-peer-urls https://192.168.0.44:2380 \
      --listen-peer-urls https://192.168.0.44:2380 \
      --listen-client-urls https://192.168.0.44:2379,http://127.0.0.1:2379 \
      --advertise-client-urls https://192.168.0.44:2379 \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

4. Reload systemd and start the service

    systemctl daemon-reload
    systemctl enable etcd
    systemctl start etcd
    systemctl status etcd

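Note: the parameters in the unit file above can also be kept in a configuration file. Reference it in the [Service] section as shown below. The leading "-" tells systemd to ignore the file if it does not exist. For the file format, see the official documentation or search online.

    EnvironmentFile=-/etc/etcd/etcd.conf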
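etcd reads each command-line flag from an environment variable named ETCD_ plus the flag name, upper-cased with "-" turned into "_". For example, --data-dir becomes ETCD_DATA_DIR. Because of this, the config file can be a plain list of variables, and ExecStart can shrink to the bare binary. Below is a sketch that writes the flags from the unit above in that form. The helper name `write_etcd_conf` is hypothetical.

```shell
# Minimal sketch: write the flags of the etcd unit above as ETCD_* variables.
# etcd maps each flag to ETCD_<FLAG NAME>, upper-cased with "-" -> "_".
# Usage: write_etcd_conf OUTPUT_FILE NODE_IP
write_etcd_conf() {
    conf_out=$1
    node_ip=$2
    ssl_dir=/etc/kubernetes/cfssl
    mkdir -p "$(dirname "$conf_out")"
    cat > "$conf_out" <<EOF
ETCD_NAME=master
ETCD_DATA_DIR=/var/lib/etcd
ETCD_CERT_FILE=$ssl_dir/kubernetes.pem
ETCD_KEY_FILE=$ssl_dir/kubernetes-key.pem
ETCD_PEER_CERT_FILE=$ssl_dir/kubernetes.pem
ETCD_PEER_KEY_FILE=$ssl_dir/kubernetes-key.pem
ETCD_TRUSTED_CA_FILE=$ssl_dir/ca.pem
ETCD_PEER_TRUSTED_CA_FILE=$ssl_dir/ca.pem
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://$node_ip:2380
ETCD_LISTEN_PEER_URLS=https://$node_ip:2380
ETCD_LISTEN_CLIENT_URLS=https://$node_ip:2379,http://127.0.0.1:2379
ETCD_ADVERTISE_CLIENT_URLS=https://$node_ip:2379
EOF
}

# Usage on the master:
#   write_etcd_conf /etc/etcd/etcd.conf 192.168.0.44
# and in etcd.service:
#   [Service]
#   EnvironmentFile=-/etc/etcd/etcd.conf
#   ExecStart=/usr/local/etcd/etcd
```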

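IV. Deploy flannel

flannel, from CoreOS, is an overlay network tool that lets Docker containers on different hosts talk to each other. Tools such as OVS can be used instead. flannel must be deployed on all three nodes.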

1. Download and install flannel

    ]# mkdir /usr/local/flannel
    ]# cd /usr/local/flannel/
    ]# wget https://github.com/coreos/flannel/releases/download/v0.9.1/flannel-v0.9.1-linux-amd64.tar.gz
    ]# tar -xzvf flannel-v0.9.1-linux-amd64.tar.gz
    ]# cat /etc/profile.d/flannel.sh
    export PATH=$PATH:/usr/local/flannel/
    ]# source /etc/profile.d/flannel.sh

The file name must end in .sh, because login shells only source /etc/profile.d/*.sh.

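2. Write the network configuration into etcd (only needed on the master node). These commands use the etcdctl v2 API. It is the default for the etcdctl shipped with etcd 3.3, and it is the API flannel 0.9 reads from.

    etcdctl --endpoints=https://192.168.0.44:2379 \
      --ca-file=/etc/kubernetes/cfssl/ca.pem \
      --cert-file=/etc/kubernetes/cfssl/kubernetes.pem \
      --key-file=/etc/kubernetes/cfssl/kubernetes-key.pem \
      mkdir /kubernetes/network

    etcdctl --endpoints=https://192.168.0.44:2379 \
      --ca-file=/etc/kubernetes/cfssl/ca.pem \
      --cert-file=/etc/kubernetes/cfssl/kubernetes.pem \
      --key-file=/etc/kubernetes/cfssl/kubernetes-key.pem \
      mk /kubernetes/network/config '{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}'

Network (172.30.0.0/16) is the pod address range, and each node leases a /24 subnet from it.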
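The same endpoint and TLS flags repeat in every etcdctl call in this guide. A small wrapper keeps them in one place. This is a sketch: `etcdctl_k8s`, `ETCD_ENDPOINT` and `K8S_SSL_DIR` are hypothetical names, and ETCDCTL_API=2 pins the v2 API that the commands above rely on.

```shell
# Minimal sketch: wrap the endpoint/TLS flags of the etcdctl v2 commands above.
ETCD_ENDPOINT=${ETCD_ENDPOINT:-https://192.168.0.44:2379}
K8S_SSL_DIR=${K8S_SSL_DIR:-/etc/kubernetes/cfssl}

etcdctl_k8s() {
    # Pass every extra argument (subcommand, key, value) straight through
    ETCDCTL_API=2 etcdctl --endpoints="$ETCD_ENDPOINT" \
        --ca-file="$K8S_SSL_DIR/ca.pem" \
        --cert-file="$K8S_SSL_DIR/kubernetes.pem" \
        --key-file="$K8S_SSL_DIR/kubernetes-key.pem" \
        "$@"
}

# Usage, equivalent to the commands above:
#   etcdctl_k8s mkdir /kubernetes/network
#   etcdctl_k8s mk /kubernetes/network/config '{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}'
#   etcdctl_k8s get /kubernetes/network/config
```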

3. Create the systemd unit file

    ~]# cat /usr/lib/systemd/system/flanneld.service
    [Unit]
    Description=Flanneld overlay address etcd agent
    After=network.target
    After=network-online.target
    Wants=network-online.target
    After=etcd.service
    Before=docker.service

    [Service]
    Type=notify
    ExecStart=/usr/local/flannel/flanneld \
      -etcd-cafile=/etc/kubernetes/cfssl/ca.pem \
      -etcd-certfile=/etc/kubernetes/cfssl/kubernetes.pem \
      -etcd-keyfile=/etc/kubernetes/cfssl/kubernetes-key.pem \
      -etcd-endpoints=https://192.168.0.44:2379 \
      -etcd-prefix=/kubernetes/network
    ExecStartPost=/usr/local/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    RequiredBy=docker.service

4. Reload systemd and start flannel

    systemctl daemon-reload
    systemctl enable flanneld
    systemctl start flanneld
    systemctl status flanneld

You can check which subnets flannel has leased (one /24 per node) with the following command:

    ~]# etcdctl --endpoints=https://192.168.0.44:2379 \
      --ca-file=/etc/kubernetes/cfssl/ca.pem \
      --cert-file=/etc/kubernetes/cfssl/kubernetes.pem \
      --key-file=/etc/kubernetes/cfssl/kubernetes-key.pem \
      ls /kubernetes/network/subnets
    /kubernetes/network/subnets/172.30.38.0-24
    /kubernetes/network/subnets/172.30.37.0-24
    /kubernetes/network/subnets/172.30.5.0-24
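mk-docker-opts.sh (the ExecStartPost line in the flanneld unit) writes a DOCKER_NETWORK_OPTIONS variable for this node's subnet into /run/flannel/docker. Docker only uses it if its own unit loads that file and passes the variable to dockerd. Below is a sketch of that wiring as a systemd drop-in. It assumes dockerd lives at /usr/bin/dockerd, as in docker-ce packages, so check your own unit's ExecStart. If your unit already references $DOCKER_NETWORK_OPTIONS, you only need the EnvironmentFile line. The helper name is hypothetical.

```shell
# Minimal sketch: make dockerd use the subnet flannel leased for this node.
# Assumption: dockerd is /usr/bin/dockerd; adjust for your Docker package.
write_docker_flannel_dropin() {
    dropin_dir=${1:-/etc/systemd/system/docker.service.d}
    mkdir -p "$dropin_dir"
    # Quoted EOF: $DOCKER_NETWORK_OPTIONS is expanded by systemd, not here.
    # The empty ExecStart= clears the packaged command before redefining it.
    cat > "$dropin_dir/flannel.conf" <<'EOF'
[Service]
EnvironmentFile=/run/flannel/docker
ExecStart=
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
EOF
}

# On each node, as root, once flanneld is running:
#   write_docker_flannel_dropin
#   systemctl daemon-reload && systemctl restart docker
#   ip addr show docker0   # docker0 should now sit inside this node's flannel subnet
```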

V. Install kubectl and create the kubeconfig files

Install the tools on all three nodes. Generate the configuration files on the master host and copy them to the nodes.

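1. Download and install kubectl. The server tarball contains kubectl as well as the kube-apiserver, kube-controller-manager, kube-scheduler, kubelet and kube-proxy binaries used later.

    ~]# wget https://dl.k8s.io/v1.8.9/kubernetes-server-linux-amd64.tar.gz
    ~]# tar xzf kubernetes-server-linux-amd64.tar.gz
    ~]# mv kubernetes /usr/local/
    ~]# cat /etc/profile.d/kubernetes.sh
    export PATH=$PATH:/usr/local/kubernetes/server/bin/
    ~]# source /etc/profile.d/kubernetes.sh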

2. Create /root/.kube/config (kubectl config writes to ~/.kube/config when --kubeconfig is not given)

    # Set the cluster parameters; --server is the master node's IP
    kubectl config set-cluster kubernetes \
      --certificate-authority=/etc/kubernetes/cfssl/ca.pem \
      --embed-certs=true \
      --server=https://192.168.0.44:6443
    # Set the client credentials
    kubectl config set-credentials admin \
      --client-certificate=/etc/kubernetes/cfssl/admin.pem \
      --embed-certs=true \
      --client-key=/etc/kubernetes/cfssl/admin-key.pem
    # Set the context
    kubectl config set-context kubernetes \
      --cluster=kubernetes \
      --user=admin
    # Use it as the default context
    kubectl config use-context kubernetes

3. Create bootstrap.kubeconfig

    # Generate the token variable
    export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
    cat > token.csv <

4. Generate kube-proxy.kubeconfig

    # Set the cluster parameters; --server is the master's IP
    kubectl config set-cluster kubernetes \
      --certificate-authority=/etc/kubernetes/cfssl/ca.pem \
      --embed-certs=true \
      --server=https://192.168.0.44:6443 \
      --kubeconfig=kube-proxy.kubeconfig
    # Set the client credentials
    kubectl config set-credentials kube-proxy \
      --client-certificate=/etc/kubernetes/cfssl/kube-proxy.pem \
      --client-key=/etc/kubernetes/cfssl/kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig
    # Set the context
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig
    # Use it as the default context
    kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
    mv kube-proxy.kubeconfig /etc/kubernetes/

5. Copy the generated config files to the node hosts

    scp /etc/kubernetes/bootstrap.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig 192.168.0.45:/etc/kubernetes/
    scp /etc/kubernetes/bootstrap.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig 192.168.0.46:/etc/kubernetes/
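Step 3 shows only how the bootstrap token is generated. Two files must exist on the master before the copy in step 5. One is /etc/kubernetes/token.csv, which the kube-apiserver unit reads via --token-auth-file. The other is bootstrap.kubeconfig, which the kubelet reads. Below is a minimal sketch modeled on the kube-proxy.kubeconfig commands in step 4.

Several names in this sketch are assumptions. The user name kubelet-bootstrap is the REQUESTOR in the CSR list in the node section. The group system:kubelet-bootstrap and uid 10001 are illustrative. The helper names are hypothetical. The token.csv line follows the static token file format, `token,user,uid,"group1,group2"`.

```shell
# Sketch: token file and bootstrap kubeconfig for kubelet TLS bootstrapping.
# Assumptions: user kubelet-bootstrap, uid 10001, group system:kubelet-bootstrap.

# token.csv line format: token,user,uid,"group1,group2"
write_token_csv() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1" > "$2"
}

# Build a kubeconfig that authenticates with the bootstrap token
make_bootstrap_kubeconfig() {
    token=$1
    out=$2
    kubectl config set-cluster kubernetes \
        --certificate-authority=/etc/kubernetes/cfssl/ca.pem \
        --embed-certs=true \
        --server=https://192.168.0.44:6443 \
        --kubeconfig="$out"
    kubectl config set-credentials kubelet-bootstrap \
        --token="$token" \
        --kubeconfig="$out"
    kubectl config set-context default \
        --cluster=kubernetes \
        --user=kubelet-bootstrap \
        --kubeconfig="$out"
    kubectl config use-context default --kubeconfig="$out"
}

# On the master, before copying the files to the nodes:
#   export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
#   write_token_csv "$BOOTSTRAP_TOKEN" /etc/kubernetes/token.csv
#   make_bootstrap_kubeconfig "$BOOTSTRAP_TOKEN" /etc/kubernetes/bootstrap.kubeconfig
# Once kube-apiserver is running, let that user submit node CSRs:
#   kubectl create clusterrolebinding kubelet-bootstrap \
#       --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
```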

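VI. Deploy the master node

Three components run on the master: kube-apiserver, kube-controller-manager and kube-scheduler.

kube-apiserver: the key service process that exposes the HTTP REST API. It is the only entry point for create, read, update and delete operations on Kubernetes resources, and the only entry point for controlling the cluster.

kube-controller-manager: the automation control center for all resource objects in Kubernetes.

kube-scheduler: the process responsible for scheduling pods onto nodes.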

1. Edit the kube-apiserver systemd unit file

    ~]# cat /usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target
    After=etcd.service

    [Service]
    ExecStart=/usr/local/kubernetes/server/bin/kube-apiserver \
      --logtostderr=true \
      --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction \
      --advertise-address=192.168.0.44 \
      --bind-address=192.168.0.44 \
      --insecure-bind-address=127.0.0.1 \
      --authorization-mode=Node,RBAC \
      --runtime-config=rbac.authorization.k8s.io/v1alpha1 \
      --kubelet-https=true \
      --enable-bootstrap-token-auth \
      --token-auth-file=/etc/kubernetes/token.csv \
      --service-cluster-ip-range=10.254.0.0/16 \
      --service-node-port-range=8400-10000 \
      --tls-cert-file=/etc/kubernetes/cfssl/kubernetes.pem \
      --tls-private-key-file=/etc/kubernetes/cfssl/kubernetes-key.pem \
      --client-ca-file=/etc/kubernetes/cfssl/ca.pem \
      --service-account-key-file=/etc/kubernetes/cfssl/ca-key.pem \
      --etcd-cafile=/etc/kubernetes/cfssl/ca.pem \
      --etcd-certfile=/etc/kubernetes/cfssl/kubernetes.pem \
      --etcd-keyfile=/etc/kubernetes/cfssl/kubernetes-key.pem \
      --etcd-servers=https://192.168.0.44:2379 \
      --enable-swagger-ui=true \
      --allow-privileged=true \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=100 \
      --audit-log-path=/var/lib/audit.log \
      --event-ttl=1h \
      --v=2
    Restart=on-failure
    RestartSec=5
    Type=notify
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

The same startup parameters can also be supplied through environment files:

    ~]# cat /usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target
    After=etcd.service

    [Service]
    EnvironmentFile=-/etc/kubernetes/config
    EnvironmentFile=-/etc/kubernetes/apiserver
    User=kube
    ExecStart=/usr/bin/kube-apiserver \
      $KUBE_LOGTOSTDERR \
      $KUBE_LOG_LEVEL \
      $KUBE_ETCD_SERVERS \
      $KUBE_API_ADDRESS \
      $KUBE_API_PORT \
      $KUBELET_PORT \
      $KUBE_ALLOW_PRIV \
      $KUBE_SERVICE_ADDRESSES \
      $KUBE_ADMISSION_CONTROL \
      $KUBE_API_ARGS
    Restart=on-failure
    Type=notify
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

    ~]# cat /etc/kubernetes/apiserver
    ###
    # kubernetes system config
    #
    # The following values are used to configure the kube-apiserver
    #

    # The address on the local server to listen to.
    KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"

    # The port on the local server to listen on.
    # KUBE_API_PORT="--port=8080"

    # Port minions listen on
    # KUBELET_PORT="--kubelet-port=10250"

    # Comma separated list of nodes in the etcd cluster
    KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"

    # Address range to use for services
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

    # default admission control policies
    KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

    # Add your own!
    KUBE_API_ARGS=""

Either form works, so choose whichever you prefer. Note that the sample values in this second form appear to be the defaults shipped with the CentOS kubernetes package, and they do not match the TLS setup above. They use plain-HTTP etcd at 127.0.0.1, a different admission-control list and no certificate flags, and the unit runs /usr/bin/kube-apiserver as user kube. Adapt them before switching to this form.

2. Reload systemd and start kube-apiserver

    systemctl daemon-reload
    systemctl enable kube-apiserver
    systemctl start kube-apiserver
    systemctl status kube-apiserver

3. Edit the kube-controller-manager systemd unit file

    ~]# cat /usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    ExecStart=/usr/local/kubernetes/server/bin/kube-controller-manager \
      --logtostderr=true \
      --address=127.0.0.1 \
      --master=http://127.0.0.1:8080 \
      --allocate-node-cidrs=true \
      --service-cluster-ip-range=10.254.0.0/16 \
      --cluster-cidr=172.30.0.0/16 \
      --cluster-name=kubernetes \
      --cluster-signing-cert-file=/etc/kubernetes/cfssl/ca.pem \
      --cluster-signing-key-file=/etc/kubernetes/cfssl/ca-key.pem \
      --service-account-private-key-file=/etc/kubernetes/cfssl/ca-key.pem \
      --root-ca-file=/etc/kubernetes/cfssl/ca.pem \
      --leader-elect=true \
      --v=2
    Restart=on-failure
    LimitNOFILE=65536
    RestartSec=5

    [Install]
    WantedBy=multi-user.target

--cluster-cidr must match the flannel Network written to etcd (172.30.0.0/16). The --cluster-signing-* files are the CA that signs the kubelet certificates approved later.

4. Reload systemd and start kube-controller-manager

    systemctl daemon-reload
    systemctl enable kube-controller-manager
    systemctl start kube-controller-manager
    systemctl status kube-controller-manager

5. Edit the kube-scheduler systemd unit file

    ~]# cat /usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    ExecStart=/usr/local/kubernetes/server/bin/kube-scheduler \
      --logtostderr=true \
      --address=127.0.0.1 \
      --master=http://127.0.0.1:8080 \
      --leader-elect=true \
      --v=2
    Restart=on-failure
    LimitNOFILE=65536
    RestartSec=5

    [Install]
    WantedBy=multi-user.target

6. Reload systemd and start kube-scheduler

    systemctl daemon-reload
    systemctl enable kube-scheduler
    systemctl start kube-scheduler
    systemctl status kube-scheduler

7. Verify the master components

    ~]# kubectl get componentstatuses
    NAME                 STATUS    MESSAGE              ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-0               Healthy   {"health":"true"}
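To script the check above, for example after a reboot, a small filter can fail unless every row reports Healthy. It is a sketch. The helper name is hypothetical, and it reads the table on stdin so you can test it against saved output.

```shell
# Minimal sketch: succeed only if every component row has STATUS "Healthy".
# Expects the `kubectl get componentstatuses` table (with header) on stdin.
all_components_healthy() {
    awk 'NR > 1 && NF > 0 { rows++; if ($2 != "Healthy") bad++ }
         END { if (rows == 0 || bad > 0) exit 1 }'
}

# Usage on the master:
#   kubectl get componentstatuses | all_components_healthy && echo "master OK"
```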

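VII. Deploy the node hosts

Each node mainly runs two components, kubelet and kube-proxy. Installing Docker is not covered here. Look it up yourself, or simply install it with yum.

kubelet: creates, starts and stops the containers of each pod, and communicates and cooperates with the master node.

kube-proxy: implements communication and load balancing for Kubernetes Services.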

1. Create the kubelet systemd unit file. The unit below is for node1. On node2, use 192.168.0.46 for --address and --hostname-override. --cgroup-driver must match the cgroup driver that docker info reports. /etc/kubernetes/kubelet.kubeconfig does not need to exist beforehand, because the kubelet writes it once its certificate request is approved (step 3).

    ### Create the working directory
    ~]# mkdir -p /var/lib/kubelet
    ~]# cat /usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=docker.service
    Requires=docker.service

    [Service]
    WorkingDirectory=/var/lib/kubelet
    ExecStart=/usr/local/kubernetes/server/bin/kubelet \
      --address=192.168.0.45 \
      --hostname-override=192.168.0.45 \
      --pod-infra-container-image=docker.io/w564791/pod-infrastructure:latest \
      --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
      --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
      --require-kubeconfig \
      --cert-dir=/etc/kubernetes/cfssl \
      --container-runtime=docker \
      --cluster-dns=10.254.0.2 \
      --cluster-domain=cluster.local \
      --hairpin-mode promiscuous-bridge \
      --allow-privileged=true \
      --serialize-image-pulls=false \
      --register-node=true \
      --logtostderr=true \
      --cgroup-driver=systemd \
      --v=2
    Restart=on-failure
    KillMode=process
    LimitNOFILE=65536
    RestartSec=5

    [Install]
    WantedBy=multi-user.target

2. Reload systemd and start kubelet

    systemctl daemon-reload
    systemctl enable kubelet
    systemctl start kubelet
    systemctl status kubelet

3. Approve the nodes (run on the master node)

    ~]# kubectl get csr
    NAME                                                   AGE       REQUESTOR           CONDITION
    node-csr-485JsNfjSZ0GCiXVCGpo5AmcrIOtwG2OpwJG2OpbQug   3d        kubelet-bootstrap   Pending
    node-csr-Vlaiy1ANgeptqU5OA77Xe8Fs-O6adY8891YVbTjYlpI   3d        kubelet-bootstrap   Pending
    ~]# kubectl certificate approve node-csr-485JsNfjSZ0GCiXVCGpo5AmcrIOtwG2OpwJG2OpbQug
    certificatesigningrequest "node-csr-485JsNfjSZ0GCiXVCGpo5AmcrIOtwG2OpwJG2OpbQug" approved
    ~]# kubectl get nodes
    NAME           STATUS    ROLES     AGE       VERSION
    192.168.0.45   Ready     <none>    3d        v1.8.9
    192.168.0.46   Ready     <none>    3d        v1.8.9

Approve every pending request (one per node). Only the first approval is shown above.

4. Create the kube-proxy systemd unit file

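    ### Create the working directory
    ~]# mkdir -p /var/lib/kube-proxy
    ~]# cat /usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Kube-Proxy Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target

    [Service]
    WorkingDirectory=/var/lib/kube-proxy
    ExecStart=/usr/local/kubernetes/server/bin/kube-proxy \
      --bind-address=192.168.0.45 \
      --hostname-override=192.168.0.45 \
      --cluster-cidr=172.30.0.0/16 \
      --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
      --logtostderr=true \
      --v=2
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

For kube-proxy, --cluster-cidr is the pod address range: the flannel network 172.30.0.0/16, as in kube-controller-manager. It is not the service range 10.254.0.0/16. Traffic to service IPs from outside this range is masqueraded. As with the kubelet, use 192.168.0.46 on node2.

5. Reload systemd and start kube-proxy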

    systemctl daemon-reload
    systemctl enable kube-proxy
    systemctl start kube-proxy
    systemctl status kube-proxy
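The kubelet and kube-proxy units above hard-code node1's address (192.168.0.45). Rather than editing the copies for node2 by hand, you can render them by substituting the IP. Below is a minimal sketch with a hypothetical helper name. It assumes node1's units are the templates and that 192.168.0.45 appears in them only as the node's own address.

```shell
# Minimal sketch: render a per-node copy of a unit file by swapping the node IP.
# Usage: render_node_unit TEMPLATE NEW_IP OUTPUT
render_node_unit() {
    # Dots escaped so only the literal address 192.168.0.45 is replaced
    sed "s/192\.168\.0\.45/$2/g" "$1" > "$3"
}

# Example for node2, run on node1 where the originals live:
#   render_node_unit /usr/lib/systemd/system/kubelet.service 192.168.0.46 /tmp/kubelet.service
#   render_node_unit /usr/lib/systemd/system/kube-proxy.service 192.168.0.46 /tmp/kube-proxy.service
#   scp /tmp/kubelet.service /tmp/kube-proxy.service 192.168.0.46:/usr/lib/systemd/system/
```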

VIII. Deploy a Tomcat + MySQL application

1. Create mysql.yaml

    ~]# cat mysql.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: mysql
    spec:
      replicas: 1
      selector:
        app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          containers:
          - name: mysql
            image: docker.io/mysql:5.6
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"

The MYSQL_ROOT_PASSWORD variable must be set, otherwise the container fails to start. The password is 123456 because the Tomcat application used here expects that MySQL password by default, and I did not want to build a custom Docker image.

2. Create the RC

    kubectl create -f mysql.yaml

You can check the RC's status with the following commands:

    kubectl get rc
    kubectl get pods
    kubectl describe pod POD-NAME   # POD-NAME is the mysql pod name shown by kubectl get pods

3. Create the corresponding Kubernetes Service

    ~]# cat mysqlsvc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
    spec:
      ports:
      - name: mysql-svc
        port: 3306
        targetPort: 3306
        nodePort: 8606
      selector:
        app: mysql
      type: NodePort

type: NodePort makes the service reachable from outside the cluster on port 8606 of any node's IP address.
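A nodePort must fall inside kube-apiserver's --service-node-port-range, otherwise the API server rejects the Service. This guide sets 8400-10000 instead of the default 30000-32767, which is why ports such as 8606 and 8500 work here. Below is a small sketch of that check. The helper name is hypothetical, and the range is passed in the same "low-high" form as the flag.

```shell
# Minimal sketch: is PORT inside a "low-high" range such as --service-node-port-range?
# Usage: nodeport_in_range PORT LOW-HIGH
nodeport_in_range() {
    port=$1
    lo=${2%-*}   # text before the dash
    hi=${2#*-}   # text after the dash
    [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

# Usage:
#   nodeport_in_range 8606 8400-10000 && echo "8606 is allowed"
```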

4. Create the Service and check its status

    kubectl create -f mysqlsvc.yaml
    kubectl get svc

5. Write the tomcat YAML files and start the application

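    ~]# cat tomcat.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: myweb
    spec:
      replicas: 2
      selector:
        app: myweb
      template:
        metadata:
          labels:
            app: myweb
        spec:
          containers:
          - name: myweb
            image: docker.io/kubeguide/tomcat-app:v1
            ports:
            - containerPort: 8080
            env:
            - name: MYSQL_SERVICE_HOST
              value: '192.168.0.45'
            - name: MYSQL_SERVICE_PORT
              value: '8606'

The MYSQL_SERVICE_HOST value can be seen in the pod details. I had not fully worked out how to reach MySQL from inside the cluster (I tried both the cluster IP and the name mysql), so the node's external address and nodePort are used for now.

For reference, Kubernetes injects MYSQL_SERVICE_HOST and MYSQL_SERVICE_PORT (the mysql Service's cluster IP and port 3306) into pods created after that Service exists. Explicit env entries like the ones above override those injected values. The name mysql only resolves if a cluster DNS add-on is running at the kubelet's --cluster-dns address (10.254.0.2), which this guide does not deploy.

The tomcat Service file:

    ~]# cat tomcatsvc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: myweb
    spec:
      type: NodePort
      ports:
      - port: 8080
        nodePort: 8500
      selector:
        app: myweb

Create the application and its Service: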

    kubectl create -f tomcat.yaml
    kubectl create -f tomcatsvc.yaml

As before, check their status with kubectl get pods.

6.访问

目前作者是通过访问node IP地址加上配置文件中的端口进行访问,其他访问形式后续再补充吧

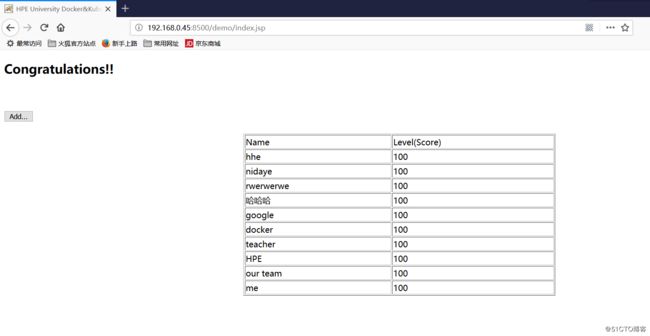

比如访问 http://192.168.0.45:8500/demo/ 结果如下图

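Finally: download the images used in this article yourself and import them into Docker on each host, as follows:

    docker save docker.io/kubeguide/tomcat-app:v1 > tomcat_app.tar
    #### Copy the resulting tar file to each host that needs the image, then:
    docker load < tomcat_app.tar

Then reference the image in the YAML file as usual.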
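In an intranet install, every image must reach every node. That means tomcat-app, mysql:5.6, and also the pod-infrastructure image named in the kubelet's --pod-infra-container-image. Below is a sketch that streams each image from a machine that already has it straight into the nodes' Docker over SSH, which avoids the intermediate tar file. docker save writes the archive to stdout when no -o is given. The sketch assumes passwordless root SSH to the nodes, and the helper name `push_images` is hypothetical.

```shell
# Minimal sketch: copy images into each node's Docker without a tar file on disk.
# Usage: push_images "NODE1 NODE2 ..." IMAGE...
push_images() {
    nodes=$1
    shift
    for img in "$@"; do
        for node in $nodes; do
            echo "==> $img -> $node"
            # docker save streams the image tarball; the remote docker load reads it
            docker save "$img" | ssh "$node" docker load || return 1
        done
    done
}

# Usage, including the pause image the kubelet unit points at:
#   push_images "192.168.0.45 192.168.0.46" \
#       docker.io/kubeguide/tomcat-app:v1 docker.io/mysql:5.6 \
#       docker.io/w564791/pod-infrastructure:latest
```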