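1. Log collection approach

Option 1 is more widely used in the industry and is also the approach the official documentation recommends, so we build our Kubernetes log collection on option 1.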

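2. Architecture selection

ELK

Filebeat (collection), Logstash (filtering), Kafka (buffering), Elasticsearch (storage), Kibana (visualization)

EFK

Fluent Bit (collection), Fluentd (filtering), Elasticsearch (storage), Kibana (visualization)

This article uses Fluentd -> Elasticsearch -> Kibana, with Fluentd handling both collection and filtering.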

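3. Deploy the Elasticsearch cluster

We run three Elasticsearch Pods to avoid the "split-brain" problem that can occur in a highly available multi-node cluster. With three master-eligible nodes, electing a master requires a majority of two, so at most one side of a network partition can elect a master.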
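The quorum arithmetic behind the three-Pod choice can be sketched in Python. This is a minimal illustration that assumes the majority rule floor(N/2) + 1, which the discovery.zen.minimum_master_nodes setting in the StatefulSet follows. The helper names are hypothetical. With two nodes the quorum is also two, so losing either node stops master election. With three nodes, a 2 | 1 partition leaves exactly one side able to elect a master.

```python
# Hypothetical helpers illustrating the majority rule used by
# discovery.zen.minimum_master_nodes in Elasticsearch 6.x.
from typing import List


def minimum_master_nodes(n: int) -> int:
    """Majority quorum for n master-eligible nodes: floor(n / 2) + 1."""
    return n // 2 + 1


def sides_with_quorum(n: int, left: int) -> List[bool]:
    """Partition n nodes into (left, n - left); report which side can elect a master."""
    quorum = minimum_master_nodes(n)
    return [left >= quorum, n - left >= quorum]


if __name__ == "__main__":
    for n in (2, 3, 5):
        print(f"{n} nodes -> minimum_master_nodes = {minimum_master_nodes(n)}")
    # 3 nodes split 2 | 1: only the two-node side keeps a master, so no split-brain.
    print(sides_with_quorum(3, 2))  # [True, False]
    # 2 nodes split 1 | 1: neither side reaches quorum, so no master can be elected.
    print(sides_with_quorum(2, 1))  # [False, False]
```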

Create a namespace named logging:

$ kubectl create namespace logging

Create a headless Service named elasticsearch:

elasticsearch-svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node

Create the Service:

$ kubectl create -f elasticsearch-svc.yaml
$ kubectl get svc -n logging
NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE
elasticsearch   ClusterIP   None         <none>        9200/TCP,9300/TCP   13d

Create persistent storage for Elasticsearch

Define a StorageClass in elasticsearch-storageclass.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: es-data-db
provisioner: fuseim.pri/ifs

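Create the StorageClass. Its provisioner, fuseim.pri/ifs, is the provisioner name used by the example deployment of the NFS client provisioner (nfs-client-provisioner). That provisioner must already be running in the cluster for volumes to be provisioned dynamically.

$ kubectl create -f elasticsearch-storageclass.yaml
$ kubectl get storageclass
NAME         PROVISIONER      AGE
es-data-db   fuseim.pri/ifs   13d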

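Create the Elasticsearch Pods with a StatefulSet

A Kubernetes StatefulSet gives each Pod a stable identity and stable persistent storage. Elasticsearch needs its data to stay intact when a Pod is rescheduled or restarted, so we manage its Pods with a StatefulSet.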

elasticsearch-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet   # a StatefulSet named es-cluster
metadata:
  name: es-cluster
  namespace: logging
spec:
  # Ties the StatefulSet to the headless Service created earlier, so each Pod is reachable at
  # es-cluster-[0,1,2].elasticsearch.logging.svc.cluster.local, where [0,1,2] is the Pod's ordinal.
  serviceName: elasticsearch
  replicas: 3   # three replicas
  selector:
    matchLabels:
      app: elasticsearch   # match Pods labelled app=elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:   # Pod template
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.3
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:   # data directory to persist
            - name: data
              mountPath: /usr/share/elasticsearch/data
          env:   # Elasticsearch settings passed as environment variables
            - name: cluster.name   # name of the Elasticsearch cluster
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            # How nodes in the cluster discover each other; the short DNS names work
            # because all Pods live in the same namespace
            - name: discovery.zen.ping.unicast.hosts
              value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
            # Set to (N/2) + 1, where N is the number of master-eligible nodes.
            # With 3 nodes: 3/2 + 1 = 2.5, rounded down to 2.
            - name: discovery.zen.minimum_master_nodes
              value: "2"
            - name: ES_JAVA_OPTS   # JVM heap limits
              value: "-Xms512m -Xmx512m"
      initContainers:   # init containers that prepare the environment
        - name: fix-permissions
          image: busybox
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: increase-fd-ulimit
          image: busybox
          # Note: ulimit only changes the limit of this init container's own shell;
          # it does not carry over to the elasticsearch container.
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:   # template for the persistent volume claims
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: [ "ReadWriteOnce" ]   # can be mounted read-write by a single node only
        storageClassName: es-data-db   # let the StorageClass provision PVs automatically
        resources:
          requests:
            storage: 100Gi   # requested capacity
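The stable names that the StatefulSet comments describe can be reproduced with a small Python sketch. It assumes the default cluster domain cluster.local and the standard StatefulSet conventions:

- Pods are named {statefulset}-{ordinal}.
- Each Pod gets a DNS record {pod}.{service}.{namespace}.svc.{domain} through the headless Service.
- Each volumeClaimTemplate yields a PVC named {template}-{pod}.

The function name is hypothetical.

```python
# Sketch: stable names derived from the StatefulSet and headless Service above.
# Assumes the default cluster domain "cluster.local"; statefulset_names is a hypothetical helper.
from typing import Dict, List


def statefulset_names(sts: str, service: str, namespace: str, claim: str,
                      replicas: int, domain: str = "cluster.local") -> List[Dict[str, str]]:
    entries = []
    for ordinal in range(replicas):
        pod = f"{sts}-{ordinal}"  # Pods are numbered 0..replicas-1
        entries.append({
            "pod": pod,
            # Per-Pod record published by the headless Service (clusterIP: None)
            "fqdn": f"{pod}.{service}.{namespace}.svc.{domain}",
            # Short form, resolvable from Pods in the same namespace
            "short": f"{pod}.{service}",
            # PVC created from the volumeClaimTemplate named `claim`
            "pvc": f"{claim}-{pod}",
        })
    return entries


if __name__ == "__main__":
    entries = statefulset_names("es-cluster", "elasticsearch", "logging", "data", 3)
    for e in entries:
        print(e["pod"], e["fqdn"], e["pvc"])
    # Reproduces the value of discovery.zen.ping.unicast.hosts in the manifest
    print(",".join(e["short"] for e in entries))
```

Because these names never change across restarts, a hard-coded discovery host list is workable here.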

Create the StatefulSet:

$ kubectl create -f elasticsearch-statefulset.yaml
statefulset.apps/es-cluster created
$ kubectl get pods -n logging
NAME           READY   STATUS    RESTARTS   AGE
es-cluster-0   1/1     Running   0          20h
es-cluster-1   1/1     Running   0          20h
es-cluster-2   1/1     Running   0          20h

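Verify that Elasticsearch is working

Forward local port 9200 to the same port on an Elasticsearch node (for example es-cluster-0):

$ kubectl port-forward es-cluster-0 9200:9200 --namespace=logging
Forwarding from 127.0.0.1:9200 -> 9200
Forwarding from [::1]:9200 -> 9200

In another terminal, query the cluster state. The cluster_name should be k8s-logs, and the nodes section should list all three es-cluster Pods (the output below is truncated):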

$ curl 'http://localhost:9200/_cluster/state?pretty'
{
  "cluster_name" : "k8s-logs",
  "compressed_size_in_bytes" : 348,
  "cluster_uuid" : "QD06dK7CQgids-GQZooNVw",
  "version" : 3,
  "state_uuid" : "mjNIWXAzQVuxNNOQ7xR-qg",
  "master_node" : "IdM5B7cUQWqFgIHXBp0JDg",
  "blocks" : { },
  "nodes" : {
    "u7DoTpMmSCixOoictzHItA" : {
      "name" : "es-cluster-1",
      "ephemeral_id" : "ZlBflnXKRMC4RvEACHIVdg",
      "transport_address" : "10.244.4.191:9300",
      "attributes" : { }
    }
    ...
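Instead of reading the JSON by eye, you can script the same check. The sketch below is a Python illustration that assumes the port-forward above is still running on localhost:9200. fetch_json and check_cluster_state are hypothetical helpers. The expected values (k8s-logs, three nodes) come from the StatefulSet manifest. The check relies only on the cluster_name, master_node and nodes fields shown in the response.

```python
# Sketch: sanity-check a /_cluster/state response (hypothetical helpers).
import json
import urllib.request
from typing import List


def fetch_json(url: str) -> dict:
    """GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def check_cluster_state(state: dict, expected_name: str, expected_nodes: int) -> List[str]:
    """Return a list of problems; an empty list means the state looks as expected."""
    problems = []
    if state.get("cluster_name") != expected_name:
        problems.append(f"cluster_name is {state.get('cluster_name')!r}, expected {expected_name!r}")
    nodes = state.get("nodes", {})  # keyed by node id
    if len(nodes) != expected_nodes:
        problems.append(f"{len(nodes)} nodes joined, expected {expected_nodes}")
    if state.get("master_node") not in nodes:
        problems.append("no elected master among the listed nodes")
    return problems
```

With the port-forward active, run `print(check_cluster_state(fetch_json("http://localhost:9200/_cluster/state"), "k8s-logs", 3))`. It should print an empty list once all three nodes have joined and a master has been elected.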

4. Deploy Kibana

kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  ports:
    - port: 5601
  type: NodePort
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana-oss:6.4.3
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: ELASTICSEARCH_URL
              value: http://elasticsearch:9200
          ports:
            - containerPort: 5601

Create it with kubectl:

$ kubectl create -f kibana.yaml
service/kibana created
deployment.apps/kibana created
$ kubectl get pods --namespace=logging
NAME                      READY   STATUS    RESTARTS   AGE
es-cluster-0              1/1     Running   1          13d
es-cluster-1              1/1     Running   1          13d
es-cluster-2              1/1     Running   1          13d
kibana-7fc9f8c964-nnzx4   1/1     Running   1          13d
$ kubectl get svc --namespace=logging
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
elasticsearch   ClusterIP   None            <none>        9200/TCP,9300/TCP   13d
kibana          NodePort    10.111.51.138   <none>        5601:31284/TCP      13d

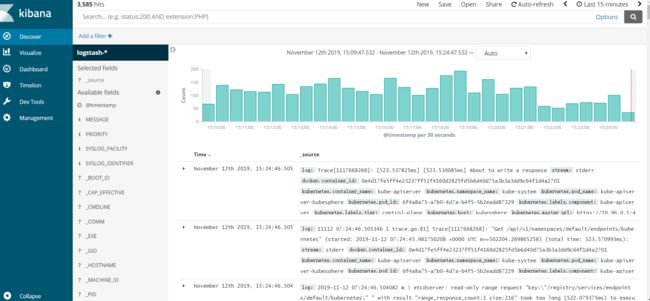

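Open http://NODE_IP:31284 in a browser, where NODE_IP is the address of any node. 31284 is the NodePort that Kubernetes assigned to the kibana Service in the output above; on your cluster it will most likely differ.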
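Because Kubernetes allocates the NodePort, a script can look it up instead of hard-coding 31284. This is a minimal Python sketch that assumes kubectl is installed and configured for this cluster. node_port and kibana_url are hypothetical helpers. The lookup reads spec.ports[].nodePort from the Service's JSON representation.

```python
# Sketch: find the NodePort of the kibana Service (hypothetical helpers).
import json
import subprocess


def node_port(service_json: str, port: int = 5601) -> int:
    """Return spec.ports[].nodePort for the given Service port."""
    svc = json.loads(service_json)
    for p in svc["spec"]["ports"]:
        if p.get("port") == port and "nodePort" in p:
            return p["nodePort"]
    raise LookupError(f"no nodePort for port {port}")


def kibana_url(node_ip: str) -> str:
    """Build the Kibana URL for a given node address using kubectl's JSON output."""
    out = subprocess.run(
        ["kubectl", "get", "svc", "kibana", "-n", "logging", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return f"http://{node_ip}:{node_port(out)}"
```

For example, `kibana_url("192.0.2.10")` returns the URL to open, where 192.0.2.10 stands in for a real node address.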

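5. Deploy Fluentd

Fluentd is deployed with a DaemonSet controller, which keeps exactly one Fluentd Pod running on each node of the cluster. Below, a nodeSelector restricts this to nodes that carry a specific label.

Create fluentd-configmap.yaml: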

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  namespace: logging
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  containers.input.conf: |-
    <source>
      # Unique identifier of this log source; it can be referenced for further filtering and routing
      @id fluentd-containers.log
      # Built-in input plugin: tail keeps reading files from the last position it reached
      # (in_http, by contrast, accepts events sent to Fluentd in HTTP requests)
      @type tail
      # Collect every file under /var/log/containers. kubelet keeps a symlink here for each
      # container's stdout/stderr log, which Docker writes under /var/lib/docker/containers
      path /var/log/containers/*.log
      # Checkpoint file: after a restart, Fluentd resumes collection from the positions stored here
      pos_file /var/log/es-containers.log.pos
      time_format %Y-%m-%dT%H:%M:%S.%NZ
      localtime
      # Custom tag; Fluentd routes records by matching tags against filters and outputs
      tag raw.kubernetes.*
      format json
      read_from_head true
    </source>
    # Merge multi-line exception stack traces into a single record, then drop the "raw" tag prefix
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
  system.input.conf: |-
    <source>
      @id journald-docker
      @type systemd
      filters [{ "_SYSTEMD_UNIT": "docker.service" }]
      <storage>
        @type local
        persistent true
      </storage>
      read_from_head true
      tag docker
    </source>
    <source>
      @id journald-kubelet
      @type systemd
      filters [{ "_SYSTEMD_UNIT": "kubelet.service" }]
      <storage>
        @type local
        persistent true
      </storage>
      read_from_head true
      tag kubelet
    </source>
  forward.input.conf: |-
    <source>
      @type forward
    </source>
  output.conf: |-
    <filter kubernetes.**>
      @type kubernetes_metadata
    </filter>
    # Target tag pattern (a glob, not a regex): we want every log sent to Elasticsearch, so use **
    <match **>
      @id elasticsearch
      # Output to Elasticsearch
      @type elasticsearch
      # Verbosity of this plugin's own log messages (info and above); it does not filter the collected logs
      @log_level info
      include_tag_key true
      host elasticsearch
      port 9200
      # Elasticsearch builds an inverted index over the records for search; with logstash_format true,
      # Fluentd sends the structured records in Logstash format, i.e. to daily logstash-YYYY.MM.DD indices
      logstash_format true
      request_timeout 30s
      # Buffer records on disk while the target is unavailable (for example a network failure or
      # Elasticsearch being down); buffering also reduces disk I/O
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>
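A little arithmetic makes the retry and buffer settings above concrete. This Python sketch assumes Fluentd's default retry_wait of 1 second and exponential base of 2. It leaves out the small random jitter that Fluentd adds by default. It also assumes the total buffer limit is chunk_limit_size times queue_limit_length. The helper names are hypothetical.

```python
# Sketch of the retry schedule and buffer bound implied by the <buffer> settings.
# Assumptions: retry_wait = 1s, exponential base = 2 (Fluentd defaults), no jitter.
from typing import List


def backoff_schedule(retries: int, wait: float = 1.0, base: float = 2.0,
                     max_interval: float = 30.0) -> List[float]:
    """Wait before each retry: wait * base**k, capped at retry_max_interval."""
    return [min(wait * base ** k, max_interval) for k in range(retries)]


def max_buffered_bytes(chunk_limit: int, queue_limit: int) -> int:
    """Rough upper bound on queued data before overflow_action applies."""
    return chunk_limit * queue_limit


if __name__ == "__main__":
    print(backoff_schedule(8))  # [1.0, 2.0, 4.0, 8.0, 16.0, 30.0, 30.0, 30.0]
    print(max_buffered_bytes(2 * 1024 * 1024, 8) // (1024 * 1024), "MiB")  # 16 MiB
```

Because retry_forever is set, Fluentd keeps retrying at the 30-second ceiling instead of giving up. Once the buffer is full, overflow_action block makes the input side wait rather than drop records.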

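This configuration collects the Docker container logs under /var/log/containers and the docker and kubelet service logs from the systemd journal. It processes the collected data and sends it to the elasticsearch:9200 Service.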
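To see what the tail source actually works with, here is a Python sketch of one container log file name and one line from that file. It rests on three assumptions:

- Docker's json-file logging driver writes each line as a JSON object with log, stream and time fields.
- kubelet names the files under /var/log/containers as {pod}_{namespace}_{container}-{container id}.log.
- in_tail replaces the * in tag raw.kubernetes.* with the file path, using dots in place of slashes.

The helper names and sample values are hypothetical.

```python
# Sketch of what in_tail + "format json" sees for a container log (hypothetical helpers).
import json
import re
from datetime import datetime, timezone

# {pod}_{namespace}_{container}-{64-hex container id}.log
FILE_RE = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def parse_file_name(name: str) -> dict:
    """Split a /var/log/containers file name into pod, namespace, container and id."""
    m = FILE_RE.match(name)
    if m is None:
        raise ValueError(f"unexpected log file name: {name}")
    return m.groupdict()


def tail_tag(path: str, pattern: str = "raw.kubernetes.*") -> str:
    """Expand '*' in the tag with the file path, slashes turned into dots."""
    return pattern.replace("*", path.lstrip("/").replace("/", "."))


def parse_line(line: str) -> dict:
    """Parse one json-file record; the time field ends in Z (UTC)."""
    record = json.loads(line)
    base, _, frac = record["time"].rstrip("Z").partition(".")
    # Docker writes up to 9 fractional digits; datetime keeps only 6 (microseconds).
    frac = (frac + "000000")[:6]
    record["time"] = datetime.strptime(f"{base}.{frac}", "%Y-%m-%dT%H:%M:%S.%f").replace(
        tzinfo=timezone.utc)
    return record


if __name__ == "__main__":
    name = "kibana-7fc9f8c964-nnzx4_logging_kibana-" + "0" * 64 + ".log"  # hypothetical id
    print(parse_file_name(name))
    print(tail_tag("/var/log/containers/" + name))
    print(parse_line('{"log":"hello\\n","stream":"stdout","time":"2019-10-01T08:00:00.123456789Z"}'))
```

Once detect_exceptions removes the raw prefix, the tag starts with kubernetes., so the kubernetes_metadata filter and the final match both pick it up.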

Create fluentd-daemonset.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: logging
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups:
      - ""
    resources:
      - "namespaces"
      - "pods"
    verbs:
      - "get"
      - "watch"
      - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
  - kind: ServiceAccount
    name: fluentd-es
    namespace: logging
    apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es
  namespace: logging
  labels:
    k8s-app: fluentd-es
    version: v2.0.4
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-es
      version: v2.0.4
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        version: v2.0.4
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: fluentd-es
      containers:
        - name: fluentd-es
          image: cnych/fluentd-elasticsearch:v2.0.4
          env:
            - name: FLUENTD_ARGS
              value: --no-supervisor -q
          resources:
            limits:
              memory: 500Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: config-volume
              mountPath: /etc/fluent/config.d
      nodeSelector:   # node selection
        beta.kubernetes.io/fluentd-ds-ready: "true"   # Fluentd only runs on nodes that carry this label
      tolerations:   # tolerate the master taint so the master node is covered too
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: config-volume
          configMap:
            name: fluentd-config

Label the nodes whose logs should be collected. Run the same command for every such node; in the listing below, the master node kubesphere carries the label as well.

$ kubectl label nodes k8s-node01 beta.kubernetes.io/fluentd-ds-ready=true
$ kubectl get nodes --show-labels
NAME         STATUS   ROLES    AGE   VERSION   LABELS
k8s-node01   Ready    <none>   18d   v1.15.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01,kubernetes.io/os=linux
kubesphere   Ready    master   20d   v1.15.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=kubesphere,kubernetes.io/os=linux,node-role.kubernetes.io/master=
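The nodeSelector and tolerations in the DaemonSet decide which nodes end up running a fluentd-es Pod. The Python sketch below is a deliberately simplified model of that rule. It ignores resources, affinity and the tolerations that the DaemonSet controller adds on its own. The node data mirrors the listing above. The master taint on kubesphere is an assumption, since the output does not show taints. k8s-node02 is a hypothetical unlabelled node.

```python
# Simplified model of DaemonSet placement: nodeSelector labels must match and every
# NoSchedule/NoExecute taint must be tolerated. Helper names are hypothetical.
from typing import Dict, List

Taint = Dict[str, str]
Toleration = Dict[str, str]


def tolerates(taint: Taint, tol: Toleration) -> bool:
    """Kubernetes toleration matching for a single taint."""
    if tol.get("effect") and tol["effect"] != taint["effect"]:
        return False  # an empty effect would match every effect
    operator = tol.get("operator", "Equal")
    if not tol.get("key"):
        return operator == "Exists"  # empty key + Exists tolerates every taint
    if tol["key"] != taint["key"]:
        return False
    return operator == "Exists" or tol.get("value", "") == taint.get("value", "")


def runs_on(node: dict, node_selector: Dict[str, str], tolerations: List[Toleration]) -> bool:
    if any(node["labels"].get(k) != v for k, v in node_selector.items()):
        return False
    blocking = [t for t in node["taints"] if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(t, tol) for tol in tolerations) for t in blocking)


SELECTOR = {"beta.kubernetes.io/fluentd-ds-ready": "true"}
TOLERATIONS = [{"key": "node-role.kubernetes.io/master", "operator": "Exists", "effect": "NoSchedule"}]
NODES = [
    {"name": "k8s-node01", "labels": {"beta.kubernetes.io/fluentd-ds-ready": "true"}, "taints": []},
    {"name": "kubesphere", "labels": {"beta.kubernetes.io/fluentd-ds-ready": "true"},
     "taints": [{"key": "node-role.kubernetes.io/master", "effect": "NoSchedule"}]},  # assumed taint
    {"name": "k8s-node02", "labels": {}, "taints": []},  # hypothetical unlabelled node
]

if __name__ == "__main__":
    for node in NODES:
        print(node["name"], runs_on(node, SELECTOR, TOLERATIONS))
```

Under these assumptions, two nodes qualify. That matches the two fluentd-es Pods in the Pod listing further down.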

Create the resources:

$ kubectl create -f fluentd-configmap.yaml
configmap "fluentd-config" created
$ kubectl create -f fluentd-daemonset.yaml
serviceaccount "fluentd-es" created
clusterrole.rbac.authorization.k8s.io "fluentd-es" created
clusterrolebinding.rbac.authorization.k8s.io "fluentd-es" created
daemonset.apps "fluentd-es" created
$ kubectl get pods -n logging
NAME                      READY   STATUS    RESTARTS   AGE
es-cluster-0              1/1     Running   1          18d
es-cluster-1              1/1     Running   1          18d
es-cluster-2              1/1     Running   1          18d
fluentd-es-442jk          1/1     Running   0          4d22h
fluentd-es-knp2k          1/1     Running   0          4d22h
kibana-7fc9f8c964-nnzx4   1/1     Running   1          18d
$ kubectl get svc -n logging
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
elasticsearch   ClusterIP   None            <none>        9200/TCP,9300/TCP   18d
kibana          NodePort    10.111.51.138   <none>        5601:31284/TCP      18d

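Open the Kibana page and add an index pattern that matches the indices Fluentd writes (logstash-* when logstash_format is true).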
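With logstash_format true, fluent-plugin-elasticsearch writes each record to a daily index, which is why Kibana needs a wildcard index pattern. The Python sketch below assumes the plugin's defaults: logstash_prefix logstash, logstash_dateformat %Y.%m.%d, and index dates computed in UTC. It approximates Kibana's wildcard matching with fnmatch. logstash_index and matching_indices are hypothetical helpers.

```python
# Sketch of daily index naming under logstash_format true (assumed plugin defaults)
# and of matching those indices with a wildcard pattern (fnmatch approximation).
from datetime import datetime, timedelta, timezone
from fnmatch import fnmatchcase
from typing import Iterable, List


def logstash_index(ts: datetime, prefix: str = "logstash", fmt: str = "%Y.%m.%d") -> str:
    """Index that a record with timestamp ts lands in (dates taken in UTC)."""
    return f"{prefix}-{ts.astimezone(timezone.utc).strftime(fmt)}"


def matching_indices(indices: Iterable[str], pattern: str = "logstash-*") -> List[str]:
    return [name for name in indices if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    utc8 = timezone(timedelta(hours=8))  # UTC+8, for illustration
    # 07:30 local time on 2 Oct is still 1 Oct in UTC, so it goes into the 1 Oct index.
    print(logstash_index(datetime(2019, 10, 2, 7, 30, tzinfo=utc8)))  # logstash-2019.10.01
    print(matching_indices(["logstash-2019.10.01", "logstash-2019.10.02", ".kibana"]))
```

Because index dates follow UTC, early-morning records in a UTC+8 cluster land in the previous day's index. When searching by date in Kibana, rely on the timestamp field rather than the index name.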

Reference:

https://www.qikqiak.com/k8s-book/docs/62.%E6%90%AD%E5%BB%BA%20EFK%20%E6%97%A5%E5%BF%97%E7%B3%BB%E7%BB%9F.html