1: Starting the Hadoop cluster

cd $HADOOP_HOME ## go to the Hadoop installation directory

sbin/start-dfs.sh ## start HDFS

sbin/start-yarn.sh ## start YARN

(or sbin/start-all.sh to start both)

Stopping the cluster:

cd $HADOOP_HOME ## go to the Hadoop installation directory

sbin/stop-yarn.sh ## stop YARN

sbin/stop-dfs.sh ## stop HDFS

(or sbin/stop-all.sh to stop both)
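
The start and stop sequences above can be wrapped in a small script. This is a minimal sketch, not part of Hadoop: the function names `run`, `start_cluster`, and `stop_cluster`, the `DRY_RUN` switch, and the `/opt/hadoop` fallback path are all assumptions made for illustration.

```shell
# Hypothetical wrapper around the sbin scripts used above.
# Set DRY_RUN=1 to print the commands instead of running them.
HADOOP_HOME="${HADOOP_HOME:-/opt/hadoop}"   # assumed fallback; adjust to your install

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

start_cluster() {
    # Same order as the notes: HDFS first, then YARN.
    run "$HADOOP_HOME/sbin/start-dfs.sh" && run "$HADOOP_HOME/sbin/start-yarn.sh"
}

stop_cluster() {
    # Reverse order: YARN first, then HDFS.
    run "$HADOOP_HOME/sbin/stop-yarn.sh" && run "$HADOOP_HOME/sbin/stop-dfs.sh"
}
```

Setting `DRY_RUN=1` before calling `start_cluster` prints the two script paths in order, which is a cheap way to check that `HADOOP_HOME` points where you expect before anything is started.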

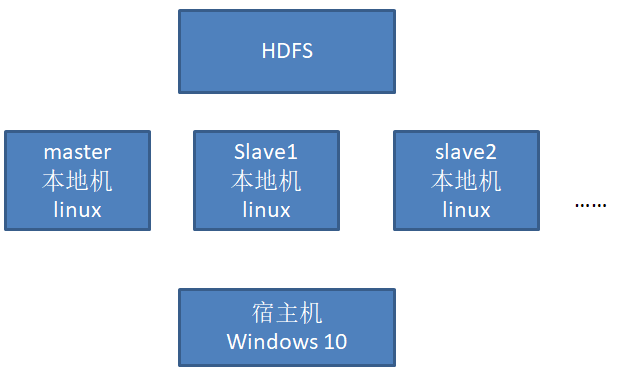

Cluster architecture:

The master node's IP address is 192.168.56.110

Check the cluster's running status:

http://192.168.56.110:50070/

Browse the cluster's directory tree:

http://192.168.56.110:50070/explorer.html
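
The two addresses above differ only in their path, so they can be built from the master's host name or IP. The sketch below assumes `curl` is installed; `namenode_url` and `namenode_is_up` are hypothetical helper names. Port 50070 is the one used in these notes; Hadoop 3.x uses 9870 by default, so pass the port explicitly if your version differs.

```shell
# Hypothetical helper: build the NameNode web UI URL.
# Arguments: host, optional port (default 50070 as in these notes), optional path.
namenode_url() {
    host="$1"
    port="${2:-50070}"
    path="${3:-}"
    echo "http://${host}:${port}/${path}"
}

# Return 0 if the web UI answers within 5 seconds.
# Needs curl and network access to the master node.
namenode_is_up() {
    curl --silent --fail --max-time 5 --output /dev/null "$(namenode_url "$1" "${2:-50070}")"
}
```

For example, `namenode_url 192.168.56.110 50070 explorer.html` prints the directory browser address, and `namenode_is_up 192.168.56.110 && echo up` gives a quick check from a script.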

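2: Listing and creating directories:

[root@master conf]# hadoop fs -ls / ## list the root directory

hadoop fs -mkdir /test ## create a directory

hadoop fs -mkdir -p /test/a/b ## create nested directories, including missing parents

3: Deleting files and directories

hadoop fs -rm /hello.txt ## delete a file

hadoop fs -rm -R /test ## recursively delete a directory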
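
In a script it helps to check whether a directory exists before creating or deleting it. The sketch below uses `hadoop fs -test -d`, which exits with status 0 when the path is an existing directory. The helper names `ensure_dir` and `remove_dir` are hypothetical, and the refusal to delete `/` is an added safety check, not Hadoop behaviour.

```shell
# Hypothetical helpers built on the hadoop fs commands above.

# Create a directory (and any missing parents) only if it is not there yet.
ensure_dir() {
    if hadoop fs -test -d "$1"; then
        echo "exists: $1"
    else
        hadoop fs -mkdir -p "$1" && echo "created: $1"
    fi
}

# Recursively delete a directory, refusing an empty path or the root.
remove_dir() {
    case "$1" in
        ""|"/") echo "refusing to delete '$1'" >&2; return 1 ;;
    esac
    hadoop fs -rm -R "$1"
}
```

Usage would look like `ensure_dir /test/a/b` followed later by `remove_dir /test`.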

4: Uploading files

Method 1: hadoop fs -put localfile /user/hadoop/hadoopfile

Example: hadoop fs -put word.txt /user/root/word1.txt

Method 2: Usage: hadoop fs -copyFromLocal <localsrc> URI

Example: hadoop fs -copyFromLocal word.txt /user/root

Method 3: Usage: hadoop fs -moveFromLocal <localsrc> <dst>

Example: hadoop fs -moveFromLocal word.txt /user/root/word2.txt ## the local copy is deleted after the upload
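
The practical difference between the methods is whether the local file survives: `-put` and `-copyFromLocal` keep it, `-moveFromLocal` removes it. A minimal sketch of a wrapper that makes this choice explicit follows; the function name `upload` and its `move` argument are assumptions for illustration.

```shell
# Hypothetical helper: upload a local file to HDFS after checking it exists.
# Pass "move" as the third argument to remove the local copy after upload
# (-moveFromLocal); the default keeps it (-put).
upload() {
    src="$1"
    dst="$2"
    mode="${3:-copy}"
    if [ ! -f "$src" ]; then
        echo "no such local file: $src" >&2
        return 1
    fi
    if [ "$mode" = "move" ]; then
        hadoop fs -moveFromLocal "$src" "$dst"
    else
        hadoop fs -put "$src" "$dst"
    fi
}
```

For example, `upload word.txt /user/root/word1.txt` keeps `word.txt` locally, while `upload word.txt /user/root/word2.txt move` does not.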

5: Downloading files

Method 1: hadoop fs -get /user/hadoop/file localfile

Example: hadoop fs -get /user/root/word1.txt .

Method 2: Usage: hadoop fs -copyToLocal [-ignorecrc] [-crc] URI <localdst>

Example: hadoop fs -copyToLocal /user/root/word.txt . ## "." downloads into the current directory

Method 3: Usage: hadoop fs -moveToLocal [-crc] <src> <dst>

Example: hadoop fs -moveToLocal /user/root/word2.txt .

Note: -moveToLocal is not implemented; the command only displays a “Not implemented yet” message.
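
Since `-moveToLocal` only prints that message, a move can be approximated with `-get` followed by `-rm`. This is a sketch of a workaround, not a Hadoop feature; the function name `move_to_local` is hypothetical.

```shell
# Hypothetical helper: emulate -moveToLocal with -get followed by -rm.
# The HDFS copy is removed only if the download succeeded.
# Arguments: HDFS source path, optional local destination (default ".").
move_to_local() {
    src="$1"
    dst="${2:-.}"
    hadoop fs -get "$src" "$dst" && hadoop fs -rm "$src"
}
```

For example, `move_to_local /user/root/word2.txt .` downloads the file into the current directory and then deletes it from HDFS. Chaining with `&&` means a failed download leaves the HDFS file untouched.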

6: Viewing file contents

hadoop fs -cat hdfs://nn1.example.com/file1

Example: hadoop fs -cat /user/root/word.txt

hadoop fs -tail pathname ## prints the last kilobyte of the file

Example: hadoop fs -tail /user/root/word2.txt
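
`-cat` prints the whole file and `-tail` prints its end, so a peek at the beginning of a large file needs a pipe through the local `head` command. A minimal sketch, where `hdfs_head` is a hypothetical helper name:

```shell
# Hypothetical helper: print the first N lines of an HDFS file (default 10).
# Arguments: HDFS path, optional line count.
hdfs_head() {
    hadoop fs -cat "$1" | head -n "${2:-10}"
}
```

For example, `hdfs_head /user/root/word.txt 5` shows the first five lines of the file.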

7: Deleting files

hadoop fs -rm hdfs://nn.example.com/file /user/hadoop/emptydir

Example: hadoop fs -rm /user/root/word2.txt