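Practical data analysis that anyone can pick up. This article covers web crawling, data analysis, data visualization, saving data to csv and Excel files, and passing command-line arguments. The project is small, but it has all the essential parts.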

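1. Preparation

1.1 Tools used

- python3

- requests: fetches the JSON data over HTTP

- pandas: analyzes and saves the data

- matplotlib: visualizes the data

1.2 Installation

Skip this step if the packages are already installed.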

pip install requests

pip install pandas

pip install matplotlib

1.3 Imports

import requests

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

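2. Analyzing the URL

2.1 Copy the request URL of the JSON API

Go to your Juejin profile page, open the browser's developer tools, and click the "专栏" (Column) tab. Then, in the developer tools, copy the URL from "Network->XHR->Name->get_entry_by_self->Headers->Request URL".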

For example, mine is: 'https://timeline-merger-ms.juejin.im/v1/get_entry_by_self?src=web&uid=5bd2b8b25188252a784d19d7&device_id=1567748420039&token=eyJhY2Nlc3NfdG9rZW4iOiJTTHVPcVRGQ1BseWdTZHF4IiwicmVmcmVzaF90b2tlbiI6ImJHZkJDVDlWQkZiQUNMdTYiLCJ0b2tlbl90eXBlIjoibWFjIiwiZXhwaXJlX2luIjoyNTkyMDAwfQ%3D%3D&targetUid=5bd2b8b25188252a784d19d7&type=post&limit=20&order=createdAt'

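If you can't wait to see the result, copy this URL and jump straight to section 6.

Either way, it is worth reading the whole article: knowing the details makes it easier to adapt the method to your own needs.

2.2 Break down the URL

Assign the URL copied above to the variable juejin_zhuanlan_api_full_url in the code below.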

juejin_zhuanlan_api_full_url = 'https://timeline-merger-ms.juejin.im/v1/get_entry_by_self?src=web&uid=5bd2b8b25188252a784d19d7&device_id=1567748420039&token=eyJhY2Nlc3NfdG9rZW4iOiJTTHVPcVRGQ1BseWdTZHF4IiwicmVmcmVzaF90b2tlbiI6ImJHZkJDVDlWQkZiQUNMdTYiLCJ0b2tlbl90eXBlIjoibWFjIiwiZXhwaXJlX2luIjoyNTkyMDAwfQ%3D%3D&targetUid=5bd2b8b25188252a784d19d7&type=post&limit=20&order=createdAt'

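def decode_url(url):
    # Split the address from the query string at the first "?"
    adr, query = url.split("?", 1)
    # Split each pair at the first "=" only, so values containing "=" stay intact
    params = dict(kv.split("=", 1) for kv in query.split("&"))
    return adr, params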

decode_url(juejin_zhuanlan_api_full_url)

('https://timeline-merger-ms.juejin.im/v1/get_entry_by_self',
 {'src': 'web',
  'uid': '5bd2b8b25188252a784d19d7',
  'device_id': '1567748420039',
  'token': 'eyJhY2Nlc3NfdG9rZW4iOiJTTHVPcVRGQ1BseWdTZHF4IiwicmVmcmVzaF90b2tlbiI6ImJHZkJDVDlWQkZiQUNMdTYiLCJ0b2tlbl90eXBlIjoibWFjIiwiZXhwaXJlX2luIjoyNTkyMDAwfQ%3D%3D',
  'targetUid': '5bd2b8b25188252a784d19d7',
  'type': 'post',
  'limit': '20',
  'order': 'createdAt'})
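
As a cross-check on the hand-written `decode_url`, the same split can be done with the standard library's `urllib.parse`. The sketch below is an alternative, not the method used in this article. The URL is a shortened stand-in with a placeholder token (`abc%3D%3D`), not a real credential, and `decode_url_stdlib` is a name made up for this example. Note that `parse_qsl` also percent-decodes values, so `%3D%3D` comes back as `==`.

```python
from urllib.parse import urlsplit, parse_qsl

def decode_url_stdlib(url):
    """Split a URL into (address, params) using urllib.parse."""
    parts = urlsplit(url)
    address = "{}://{}{}".format(parts.scheme, parts.netloc, parts.path)
    # parse_qsl percent-decodes values, unlike a plain str.split
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    return address, params

# Shortened example URL; the token value is a placeholder
sample_url = ("https://timeline-merger-ms.juejin.im/v1/get_entry_by_self"
              "?src=web&uid=5bd2b8b25188252a784d19d7&token=abc%3D%3D&limit=20")
address, params = decode_url_stdlib(sample_url)
print(address)
print(params)
```

Because the values are decoded here, they need to be encoded again before being placed back into a URL.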

3. Fetching the data

def encode_url(url, params):
    query = "&".join(["{}={}".format(k, v) for k, v in params.items()])
    return "{}?{}".format(url, query)

def get_juejin_url(uid, device_id, token):
    url = "https://timeline-merger-ms.juejin.im/v1/get_entry_by_self"
    params = {'src': 'web',
              'uid': uid,
              'device_id': device_id,
              'token': token,
              'targetUid': uid,
              'type': 'post',
              'limit': 20,
              'order': 'createdAt'}
    return encode_url(url, params)

uid='5bd2b8b25188252a784d19d7'

device_id='1567748420039'

token='eyJhY2Nlc3NfdG9rZW4iOiJTTHVPcVRGQ1BseWdTZHF4IiwicmVmcmVzaF90b2tlbiI6ImJHZkJDVDlWQkZiQUNMdTYiLCJ0b2tlbl90eXBlIjoibWFjIiwiZXhwaXJlX2luIjoyNTkyMDAwfQ%3D%3D'

url = get_juejin_url(uid, device_id, token)

headers = {
    'Origin': 'https://juejin.im',
    'Referer': 'https://juejin.im/user/5bd2b8b25188252a784d19d7/posts',
    'Sec-Fetch-Mode': 'cors',
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
}

res = requests.get(url, headers=headers)

if res.status_code == 200:
    json_data = res.json()
else:
    print('Failed to fetch data; check whether the token has expired')
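
`encode_url` above joins keys and values as they are, which works here only because the token copied from the browser is already percent-encoded (`%3D%3D`). Below is a minimal sketch of a variant that does the encoding itself with `urllib.parse.urlencode`. `build_url` is a hypothetical helper and the token is a placeholder. Values are unquoted first so that an already-encoded token is not encoded twice.

```python
from urllib.parse import urlencode, unquote

def build_url(base, params):
    """Join base and params, percent-encoding each value exactly once."""
    # unquote first: values copied from the browser may already be encoded
    clean = {k: unquote(str(v)) for k, v in params.items()}
    return "{}?{}".format(base, urlencode(clean))

base = "https://timeline-merger-ms.juejin.im/v1/get_entry_by_self"
params = {"src": "web", "token": "abc%3D%3D", "limit": 20}  # placeholder token
print(build_url(base, params))
```

With this variant, a raw token (`abc==`) and an encoded one (`abc%3D%3D`) produce the same URL.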

4. Analyzing the data

4.1 Inspect the JSON to find the article list field

for k, v in json_data.items():
    print(k, ':', v)

s : 1

m : ok

d : {'total': 11, 'entrylist': [{'collectionCount': 1, 'userRankIndex': 1.3086902928294, 'buildTime': 1568185450.1271, 'commentsCount': 0, 'gfw': False, 'objectId': '5d7832be6fb9a06ad4516f67', 'checkStatus': True, 'isEvent': False, ...
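
The response wraps the article list in a small envelope: `s`, `m` and `d`. Below is a hedged sketch of a guard that checks the envelope before indexing into it. It assumes, based only on the single successful response shown above, that `s == 1` signals success; what other values mean is not documented here. `extract_entries` is a hypothetical helper and the sample payload is made up.

```python
def extract_entries(payload):
    """Return the article list from a get_entry_by_self response dict."""
    # Assumption: s == 1 means success, as in the sample response above
    if payload.get("s") != 1:
        raise ValueError("API reported failure: {!r}".format(payload.get("m")))
    data = payload.get("d") or {}
    entries = data.get("entrylist")
    if entries is None:
        raise ValueError("response has no 'entrylist' field")
    return entries

# Made-up payload with the same shape as the real response
sample = {"s": 1, "m": "ok",
          "d": {"total": 1, "entrylist": [{"title": "demo", "viewsCount": 3}]}}
print(extract_entries(sample))
```

Raising an exception keeps a failed request from surfacing later as a confusing `KeyError`.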

4.2 Inspect a single article to find the fields of interest

article_list = json_data['d']['entrylist']

article = article_list[0]

article['title'], article['viewsCount'], article['rankIndex'], article['createdAt']

('python 科学计算的基石 numpy(一)', 57, 0.49695221772782, '2019-09-10T23:33:19.013Z')

interest_list = [(article['title'], article['viewsCount'], article['rankIndex'], article['createdAt']) for article in article_list]

print('{}\t{}\t{}\t{}'.format('createdAt', 'rankIndex', 'viewsCount', 'title'))

for title, viewsCount, rankIndex, createdAt in interest_list:
    print('{}\t{}\t{}\t{}'.format(createdAt.split('T')[0], round(rankIndex, 10), viewsCount, title))

createdAt rankIndex viewsCount title

2019-09-10 0.4969522177 57 python 科学计算的基石 numpy(一)

2019-09-10 0.064785953 115 python 数据分析工具包 pandas(一)

2019-09-09 0.0265007422 108 OpenCv-Python 开源计算机视觉库 (一)

2019-09-08 0.0063690043 31 python 数据可视化工具包 matplotlib

2019-09-07 0.0078304184 104 python 图像处理类库 PIL (二)

2019-09-06 0.0021220196 10 python 标准库:os

2019-09-06 0.0025458508 26 python 图像处理类库 PIL (一)

2019-09-06 0.0073875365 144 python 标准库:random

2019-09-06 0.0013268195 6 jpeg 图像存储数据格式

2019-09-06 0.0079665762 6 python 内置函数

2019-09-06 0.0015289955 8 jupyter notebook 安装 C/C++ kernel
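
`createdAt.split('T')[0]` keeps the date part of a UTC timestamp (the trailing `Z` marks UTC), so for readers in another time zone the calendar date can differ. Below is a minimal sketch, using only the standard library, that parses the timestamp and converts it to a fixed offset. The default of UTC+8 is an assumption for illustration, and `to_local_date` is a name made up for this example.

```python
from datetime import datetime, timedelta, timezone

def to_local_date(created_at, utc_offset_hours=8):
    """Parse an ISO timestamp ending in 'Z' and return the local date."""
    utc_time = datetime.strptime(created_at, "%Y-%m-%dT%H:%M:%S.%fZ")
    utc_time = utc_time.replace(tzinfo=timezone.utc)
    local_zone = timezone(timedelta(hours=utc_offset_hours))
    return utc_time.astimezone(local_zone).date()

print(to_local_date("2019-09-10T23:33:19.013Z"))  # 2019-09-11
print(to_local_date("2019-09-06T06:09:08.408Z"))  # 2019-09-06
```

The first article was posted at 23:33 UTC, which is already the next morning at UTC+8.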

4.3 Data analysis with pandas

4.3.1 Create a DataFrame object

df = pd.DataFrame(interest_list, columns=['title', 'viewsCount', 'rankIndex', 'createdAt'])

df_sort_desc = df.sort_values('viewsCount', ascending=False).reset_index(drop=True)

df_sort_desc

| | title | viewsCount | rankIndex | createdAt |

|---|---|---|---|---|

| 0 | python 标准库:random | 144 | 0.007388 | 2019-09-06T06:09:08.408Z |

| 1 | python 数据分析工具包 pandas(一) | 115 | 0.064786 | 2019-09-10T07:37:53.507Z |

| 2 | OpenCv-Python 开源计算机视觉库 (一) | 108 | 0.026501 | 2019-09-09T06:18:14.059Z |

| 3 | python 图像处理类库 PIL (二) | 104 | 0.007830 | 2019-09-07T11:25:48.391Z |

| 4 | python 科学计算的基石 numpy(一) | 57 | 0.496952 | 2019-09-10T23:33:19.013Z |

| 5 | python 数据可视化工具包 matplotlib | 31 | 0.006369 | 2019-09-08T09:29:00.172Z |

| 6 | python 图像处理类库 PIL (一) | 26 | 0.002546 | 2019-09-06T06:10:48.300Z |

| 7 | python 标准库:os | 10 | 0.002122 | 2019-09-06T11:06:17.881Z |

| 8 | jupyter notebook 安装 C/C++ kernel | 8 | 0.001529 | 2019-09-06T06:00:19.673Z |

| 9 | jpeg 图像存储数据格式 | 6 | 0.001327 | 2019-09-06T06:07:25.442Z |

| 10 | python 内置函数 | 6 | 0.007967 | 2019-09-06T06:04:26.794Z |

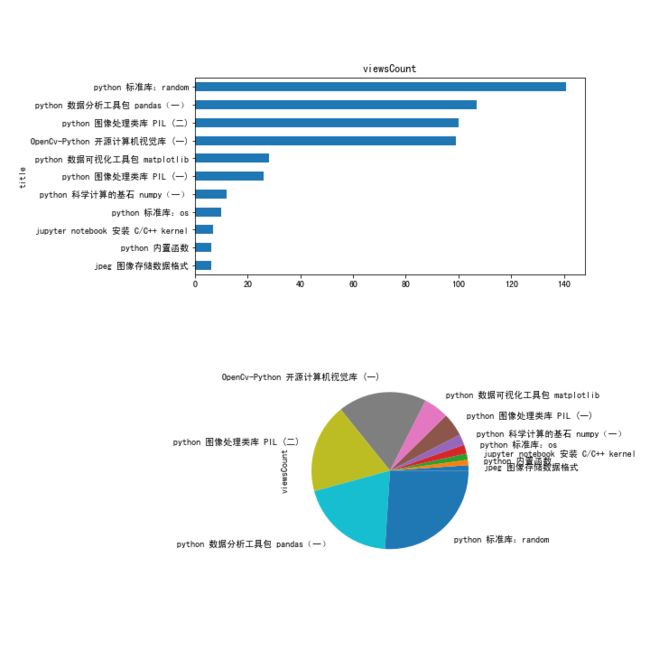
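
With the data in a DataFrame, derived columns are cheap to add. The sketch below computes each article's share of the total views. It uses three rows copied from the table above, so the total is not the real one, and the names `df_demo` and `viewsShare` are made up for this example.

```python
import pandas as pd

# Three rows copied from the table above
rows = [("python 标准库:random", 144),
        ("python 数据分析工具包 pandas(一)", 115),
        ("python 标准库:os", 10)]
df_demo = pd.DataFrame(rows, columns=["title", "viewsCount"])

# Share of views per article, as a fraction of the total
df_demo["viewsShare"] = df_demo["viewsCount"] / df_demo["viewsCount"].sum()
print(df_demo.sort_values("viewsCount", ascending=False).reset_index(drop=True))
print("total views:", df_demo["viewsCount"].sum())
```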

4.3.2 Visual analysis

fig, axes = plt.subplots(2, 1, figsize=(10, 10))

plt.subplots_adjust(wspace=0.5, hspace=0.5)

df = df.sort_values('viewsCount')

df.plot(subplots=True, ax=axes[0], x='title', y='viewsCount', kind='barh', legend=False)

df.plot(subplots=True, ax=axes[1], labels=df['title'], y='viewsCount', kind='pie', legend=False, labeldistance=1.2)

plt.subplots_adjust(left=0.3)

plt.show()

5. Saving the data

5.1 Save to a csv file

import time

filename = time.strftime("%Y-%m-%d_%H-%M-%S", time.localtime())

# Save the csv file

csv_file = filename + '.csv'

df.to_csv(csv_file, index=False)
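
The titles contain Chinese characters, and some spreadsheet programs misread a UTF-8 csv file that lacks a byte-order mark. One common workaround is the `utf-8-sig` encoding. The sketch below shows the effect with the standard `csv` module and a temporary file; `DataFrame.to_csv` accepts the same `encoding` argument. The data row is copied from the table in 4.3.1 and the file name is made up.

```python
import csv
import os
import tempfile

rows = [("title", "viewsCount"), ("python 标准库:random", 144)]

path = os.path.join(tempfile.mkdtemp(), "demo.csv")
# utf-8-sig writes a byte-order mark so spreadsheet apps detect the encoding
with open(path, "w", newline="", encoding="utf-8-sig") as f:
    csv.writer(f).writerows(rows)

with open(path, "rb") as f:
    raw = f.read()
print(raw[:3])  # the UTF-8 byte-order mark

# Reading with utf-8-sig strips the mark again
with open(path, newline="", encoding="utf-8-sig") as f:
    read_back = list(csv.reader(f))
print(read_back)
```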

5.2 Save to an Excel file

# Save the Excel file

# pandas 2.0+ can no longer write .xls; .xlsx requires openpyxl (pip install openpyxl)

excel_file = filename + '.xlsx'

sheet_name = time.strftime("%Y-%m-%d", time.localtime())

df.to_excel(excel_file, sheet_name=sheet_name, index=False)

6. Running from the command line

6.1 Packaging the code

Save the following code to the file crawl_juejin.py:

#!/usr/bin/env python3

import requests
import pandas as pd
import matplotlib.pyplot as plt
import time
import argparse

headers = {
    'Origin': 'https://juejin.im',
    'Referer': 'https://juejin.im/user/5bd2b8b25188252a784d19d7/posts',
    'Sec-Fetch-Mode': 'cors',
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
}

def analysis_by_url(url):
    print("\ncrawl posts data on juejin.\n-----------\nget posts from {} ...\n".format(url))
    res = requests.get(url, headers=headers)
    if res.status_code != 200:
        print('Failed to fetch data; check whether the token has expired')
        return

    # Pull the article list and the fields of interest out of the response
    json_data = res.json()
    article_list = json_data['d']['entrylist']
    total = json_data['d']['total']
    print('got {} posts.\n'.format(total))

    interest_list = [(article['title'], article['viewsCount'], article['rankIndex'], article['createdAt'])
                     for article in article_list]
    df = pd.DataFrame(interest_list, columns=['title', 'viewsCount', 'rankIndex', 'createdAt'])
    df_sort_desc = df.sort_values('viewsCount', ascending=False).reset_index(drop=True)

    # Plot views per article as a horizontal bar chart and a pie chart
    print('data visualization ...\n')
    fig, axes = plt.subplots(2, 1, figsize=(10, 10))
    plt.subplots_adjust(wspace=0.5, hspace=0.5)
    df = df.sort_values('viewsCount')
    df.plot(subplots=True, ax=axes[0], x='title', y='viewsCount', kind='barh', legend=False)
    df.plot(subplots=True, ax=axes[1], labels=df['title'], y='viewsCount', kind='pie', legend=False, labeldistance=1.2)
    plt.subplots_adjust(left=0.3)
    plt.show()

    filename = time.strftime("%Y-%m-%d_%H-%M-%S", time.localtime())

    # Save the csv file
    csv_file = filename + '.csv'
    df_sort_desc.to_csv(csv_file, index=False)
    print("saved to {}.\n".format(csv_file))

    # Save the Excel file (.xlsx requires openpyxl; pandas 2.0+ can no longer write .xls)
    excel_file = filename + '.xlsx'
    sheet_name = time.strftime("%Y-%m-%d", time.localtime())
    df_sort_desc.to_excel(excel_file, sheet_name=sheet_name, index=False)
    print("saved to {}.\n".format(excel_file))

    print("\n-----------\npersonal website: https://kenblog.top\njuejin: https://juejin.im/user/5bd2b8b25188252a784d19d7")

def get_cli_args():
    juejin_zhuanlan_api_full_url = 'https://timeline-merger-ms.juejin.im/v1/get_entry_by_self?src=web&uid=5bd2b8b25188252a784d19d7&device_id=1567748420039&token=eyJhY2Nlc3NfdG9rZW4iOiJTTHVPcVRGQ1BseWdTZHF4IiwicmVmcmVzaF90b2tlbiI6ImJHZkJDVDlWQkZiQUNMdTYiLCJ0b2tlbl90eXBlIjoibWFjIiwiZXhwaXJlX2luIjoyNTkyMDAwfQ%3D%3D&targetUid=5bd2b8b25188252a784d19d7&type=post&limit=20&order=createdAt'
    parser = argparse.ArgumentParser()
    parser.add_argument('-u', '--url', type=str, default=juejin_zhuanlan_api_full_url, help='URL')
    args = parser.parse_args()
    return args

if __name__ == '__main__':
    args = get_cli_args()
    analysis_by_url(args.url)

6.2 Run from the command line

Find the API URL of your own column as described in 2.1. It looks like this:

https://timeline-merger-ms.juejin.im/v1/get_entry_by_self?src=web&uid=5bd2b8b25188252a784d19d7&device_id=1567748420039&token=eyJhY2Nlc3NfdG9rZW4iOiJTTHVPcVRGQ1BseWdTZHF4IiwicmVmcmVzaF90b2tlbiI6ImJHZkJDVDlWQkZiQUNMdTYiLCJ0b2tlbl90eXBlIjoibWFjIiwiZXhwaXJlX2luIjoyNTkyMDAwfQ%3D%3D&targetUid=5bd2b8b25188252a784d19d7&type=post&limit=20&order=createdAt

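The URL above is the author's own. If you omit the url argument, the script falls back to this address, which is only meant for checking that the script works. For real use, pass your own URL.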

python crawl_juejin.py --url "https://timeline-merger-ms.juejin.im..."

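When run, the script crawls the articles in your column and displays the charts. After you close the chart window, it saves a csv file and an Excel file in the current directory, named after the current date and time.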
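
`parse_args` also accepts an explicit argument list, which makes the command-line handling easy to try without a shell. Below is a minimal sketch. `build_parser` and the `--no-show` flag are hypothetical additions, not part of crawl_juejin.py; the flag only illustrates how one might skip the chart window on a machine without a display. The URL is a placeholder.

```python
import argparse

def build_parser():
    """Build a parser similar to get_cli_args, plus a hypothetical flag."""
    parser = argparse.ArgumentParser(description="crawl posts data on juejin")
    parser.add_argument("-u", "--url", type=str, required=True, help="API URL")
    # Hypothetical flag, not in the original script
    parser.add_argument("--no-show", action="store_true",
                        help="skip the matplotlib window")
    return parser

# Passing a list instead of reading sys.argv
args = build_parser().parse_args(["--url", "https://example.com/api", "--no-show"])
print(args.url, args.no_show)
```

Making `--url` required, rather than defaulting to someone else's address, is a design choice of this sketch.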
