Use the information provided by a face tracking AR session to place and animate 3D content.

Overview

This sample app presents a simple interface allowing you to choose between four augmented reality (AR) visualizations on devices with a TrueDepth front-facing camera (see iOS Device Compatibility Reference).

1. The camera view alone, without any AR content.

2. The face mesh provided by ARKit, with automatic estimation of the real-world directional lighting environment.

3. Virtual 3D content that appears to attach to (and be obscured by parts of) the user’s real face.

4. A simple robot character whose facial expression is animated to match that of the user.

Use the “+” button in the sample app to switch between these modes, as shown below.

Start a Face Tracking Session in a SceneKit View

Like other uses of ARKit, face tracking requires configuring and running a session (an ARSession object) and rendering the camera image together with virtual content in a view. For more detailed explanations of session and view setup, see About Augmented Reality and ARKit and Building Your First AR Experience. This sample uses SceneKit to display an AR experience, but you can also use SpriteKit or build your own renderer using Metal (see ARSKView and Displaying an AR Experience with Metal).

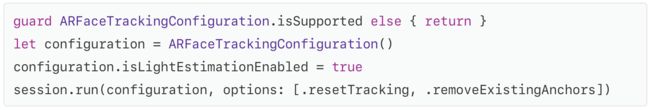

Face tracking differs from other uses of ARKit in the class you use to configure the session. To enable face tracking, create an instance of ARFaceTrackingConfiguration, configure its properties, and pass it to the runWithConfiguration:options: method of the AR session associated with your view, as shown below.
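
The listing for this step is not reproduced here, so the following is a minimal sketch of it rather than the sample's exact code. It assumes a view controller that owns an `ARSCNView` outlet named `sceneView`; the class name, the outlet name, and the choice of run options are illustrative. In Swift, runWithConfiguration:options: is spelled `run(_:options:)`.

```swift
import ARKit
import UIKit

// Hypothetical view controller; only the session setup is shown.
final class FaceTrackingViewController: UIViewController {
    // Assumed to be connected in a storyboard; the name is illustrative.
    @IBOutlet var sceneView: ARSCNView!

    override func viewDidAppear(_ animated: Bool) {
        super.viewDidAppear(animated)

        // Face tracking uses its own configuration class.
        let configuration = ARFaceTrackingConfiguration()
        // Ask ARKit to estimate the real-world lighting environment.
        configuration.isLightEstimationEnabled = true

        // Start (or restart) the session with a clean tracking state.
        sceneView.session.run(configuration,
                              options: [.resetTracking, .removeExistingAnchors])
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera capture and tracking while the view is offscreen.
        sceneView.session.pause()
    }
}
```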

Before offering your user features that require a face tracking AR session, check the isSupported property on the ARFaceTrackingConfiguration class to determine whether the current device supports ARKit face tracking.
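
One way to apply this check is to guard the session start behind it. The helper below is a sketch; the function name `startFaceTrackingIfSupported` is hypothetical, and how an app reacts to an unsupported device (hiding the feature, showing a message) is left to the caller.

```swift
import ARKit

/// Hypothetical helper: runs a face tracking session only on supported devices.
/// Returns `true` if the session was started, `false` otherwise.
@discardableResult
func startFaceTrackingIfSupported(on session: ARSession) -> Bool {
    // `isSupported` is a class property, so no instance is needed for the check.
    guard ARFaceTrackingConfiguration.isSupported else {
        return false
    }
    session.run(ARFaceTrackingConfiguration(),
                options: [.resetTracking, .removeExistingAnchors])
    return true
}
```

A caller might use the return value to decide whether to show the face-based features at all.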

Track the Position and Orientation of a Face

When face tracking is active, ARKit automatically adds ARFaceAnchor objects to the running AR session, containing information about the user’s face, including its position and orientation.

Note

ARKit detects and provides information about only one user’s face. If multiple faces are present in the camera image, ARKit chooses the largest or most clearly recognizable face.

In a SceneKit-based AR experience, you can add 3D content corresponding to a face anchor in the renderer:didAddNode:forAnchor: method (from the ARSCNViewDelegate protocol). ARKit adds a SceneKit node for the anchor, and updates that node’s position and orientation on each frame, so any SceneKit content you add to that node automatically follows the position and orientation of the user’s face.

In this example, the renderer:didAddNode:forAnchor: method calls the setupFaceNodeContent method to add SceneKit content to the faceNode. For example, if you change the showsCoordinateOrigin variable in the sample code, the app adds a visualization of the x/y/z axes to the node, indicating the origin of the face anchor’s coordinate system.
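
The delegate callback described above can be sketched as follows. This is not the sample's implementation: the class name `FaceNodeDelegate` is hypothetical, and the body of `setupFaceNodeContent` here is a stand-in that only attaches a small sphere at the anchor's origin so the effect is visible.

```swift
import ARKit
import SceneKit

// Hypothetical delegate object; assign it to an ARSCNView's `delegate`.
final class FaceNodeDelegate: NSObject, ARSCNViewDelegate {
    // The node ARKit keeps aligned with the face anchor.
    private(set) var faceNode: SCNNode?

    // Swift spelling of renderer:didAddNode:forAnchor:.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        // Ignore anchors that are not face anchors.
        guard anchor is ARFaceAnchor else { return }
        faceNode = node
        setupFaceNodeContent()
    }

    // Stand-in for the sample's method of the same name.
    private func setupFaceNodeContent() {
        guard let faceNode = faceNode else { return }
        // A 5 mm sphere at the origin of the face anchor's coordinate system.
        let marker = SCNNode(geometry: SCNSphere(radius: 0.005))
        faceNode.addChildNode(marker)
    }
}
```

Because the marker is a child of the anchor's node, it follows the face without any per-frame code.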

Use Face Geometry to Model the User’s Face

ARKit provides a coarse 3D mesh geometry matching the size, shape, topology, and current facial expression of the user’s face. ARKit also provides the ARSCNFaceGeometry class, offering an easy way to visualize this mesh in SceneKit.

Your AR experience can use this mesh to place or draw content that appears to attach to the face. For example, by applying a semitransparent texture to this geometry you could paint virtual tattoos or makeup onto the user’s skin.

To create a SceneKit face geometry, initialize an ARSCNFaceGeometry object with the Metal device your SceneKit view uses for rendering:
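
A sketch of that initialization is shown below, assuming an existing `ARSCNView`. The function name `makeFaceMeshNode` and the wireframe material are illustrative choices. Both the view's `device` and the `ARSCNFaceGeometry` initializer are optional, so the sketch returns `nil` rather than force-unwrapping.

```swift
import ARKit
import SceneKit

/// Hypothetical helper: builds a node that displays the ARKit face mesh.
func makeFaceMeshNode(for sceneView: ARSCNView) -> SCNNode? {
    // The geometry must be created with the Metal device the view renders with.
    guard let device = sceneView.device,
          let faceGeometry = ARSCNFaceGeometry(device: device) else {
        return nil
    }
    // Draw the mesh as lines so its topology is visible (an arbitrary choice).
    faceGeometry.firstMaterial?.fillMode = .lines
    return SCNNode(geometry: faceGeometry)
}
```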

The sample code’s setupFaceNodeContent method (mentioned above) adds a node containing the face geometry to the scene. By making that node a child of the node provided by the face anchor, the face model automatically tracks the position and orientation of the user’s face.

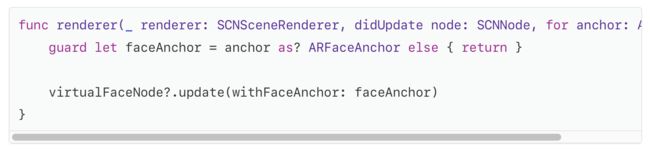

To also make the face model onscreen conform to the shape of the user’s face, even as the user blinks, talks, and makes various facial expressions, you need to retrieve an updated face mesh in the renderer:didUpdateNode:forAnchor: delegate callback.

Then, update the ARSCNFaceGeometry object in your scene to match by passing the new face mesh to its updateFromFaceGeometry: method:
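
The two steps (retrieving the updated mesh in the delegate callback, then passing it to the geometry) might be combined as in the sketch below. The class name `FaceMeshUpdater` and its `faceMeshNode` property are hypothetical; the node is assumed to hold an `ARSCNFaceGeometry`. In Swift, updateFromFaceGeometry: is spelled `update(from:)`.

```swift
import ARKit
import SceneKit

// Hypothetical delegate that keeps a face mesh node in sync with the user's face.
final class FaceMeshUpdater: NSObject, ARSCNViewDelegate {
    // Assumed to carry an ARSCNFaceGeometry and to be a child of the anchor's node.
    let faceMeshNode: SCNNode

    init(faceMeshNode: SCNNode) {
        self.faceMeshNode = faceMeshNode
        super.init()
    }

    // Swift spelling of renderer:didUpdateNode:forAnchor:.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor,
              let faceGeometry = faceMeshNode.geometry as? ARSCNFaceGeometry else {
            return
        }
        // Copy the latest mesh (current expression) into the SceneKit geometry.
        faceGeometry.update(from: faceAnchor.geometry)
    }
}
```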

Place 3D Content on the User’s Face

Another use of the face mesh that ARKit provides is to create occlusion geometry in your scene. An occlusion geometry is a 3D model that doesn’t render any visible content (allowing the camera image to show through), but obstructs the camera’s view of other virtual content in the scene.

This technique creates the illusion that the real face interacts with virtual objects, even though the face is a 2D camera image and the virtual content is a rendered 3D object. For example, if you place an occlusion geometry and virtual glasses on the user’s face, the face can obscure the frame of the glasses.

To create an occlusion geometry for the face, start by creating an ARSCNFaceGeometry object as in the previous example. However, instead of configuring that object’s SceneKit material with a visible appearance, set the material to render depth but not color during rendering:
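
A minimal sketch of such a material setup follows. The function name `makeOcclusionNode` is hypothetical. An empty `colorBufferWriteMask` disables color writes while leaving depth writes on, and the negative `renderingOrder` is one way to make sure the occluder is drawn before the content it should hide.

```swift
import ARKit
import SceneKit

/// Hypothetical helper: builds an invisible face-shaped occluder.
func makeOcclusionNode(for sceneView: ARSCNView) -> SCNNode? {
    guard let device = sceneView.device,
          let geometry = ARSCNFaceGeometry(device: device) else {
        return nil
    }
    // Write depth only: no color channels are written for this material.
    geometry.firstMaterial?.colorBufferWriteMask = []

    let occlusionNode = SCNNode(geometry: geometry)
    // Render before other nodes (default order is 0) so its depth is in place first.
    occlusionNode.renderingOrder = -1
    return occlusionNode
}
```

As with the visible mesh, the occluder's geometry still needs to be updated from each new face anchor to follow the user's expression.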

Because the material renders depth, other objects rendered by SceneKit correctly appear in front of it or behind it. But because the material doesn’t render color, the camera image appears in its place. The sample app combines this technique with a SceneKit object positioned in front of the user’s eyes, creating an effect where the object is realistically obscured by the user’s nose.

Animate a Character with Blend Shapes

In addition to the face mesh shown in the above examples, ARKit also provides a more abstract model of the user’s facial expressions in the form of a blendShapes dictionary. You can use the named coefficient values in this dictionary to control the animation parameters of your own 2D or 3D assets, creating a character (such as an avatar or puppet) that follows the user’s real facial movements and expressions.

As a basic demonstration of blend shape animation, this sample includes a simple model of a robot character’s head, created using SceneKit primitive shapes. (See the robotHead.scn file in the source code.)

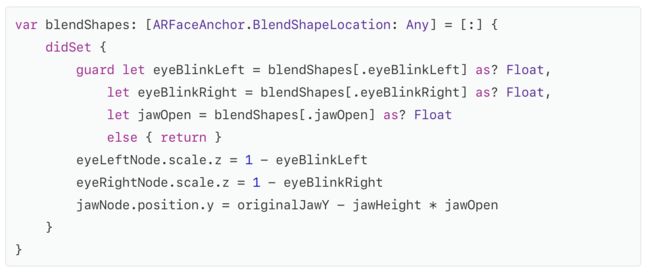

To get the user’s current facial expression, read the blendShapes dictionary from the face anchor in the renderer:didUpdateNode:forAnchor: delegate callback:
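
Reading the dictionary can be sketched as below. The class name `BlendShapeReader` and its `onJawOpen` closure are hypothetical and exist only to show where the values become available. The dictionary's values are `NSNumber` objects, so the sketch converts them with `floatValue`.

```swift
import ARKit
import SceneKit

// Hypothetical delegate that forwards one blend shape coefficient to a closure.
final class BlendShapeReader: NSObject, ARSCNViewDelegate {
    // Called with the current jawOpen coefficient.
    var onJawOpen: ((Float) -> Void)?

    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor else { return }

        // Keys are ARFaceAnchor.BlendShapeLocation values; values are NSNumber.
        let blendShapes = faceAnchor.blendShapes
        // Treat a missing coefficient as a neutral expression.
        let jawOpen = blendShapes[.jawOpen]?.floatValue ?? 0
        onJawOpen?(jawOpen)
    }
}
```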

Then, examine the key-value pairs in that dictionary to calculate animation parameters for your model. There are 52 unique ARBlendShapeLocation coefficients. Your app can use as few or as many of them as necessary to create the artistic effect you want. In this sample, the RobotHead class performs this calculation, mapping the ARBlendShapeLocationEyeBlinkLeft and ARBlendShapeLocationEyeBlinkRight parameters to one axis of the scale factor of the robot’s eyes, and the ARBlendShapeLocationJawOpen parameter to offset the position of the robot’s jaw.
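
The mapping itself is plain arithmetic, so it can be sketched without ARKit. The code below is an assumption about how such a mapping could work, not the RobotHead class itself: the `RobotPose` type, the `robotPose` function, the `jawHeight` parameter, and the exact formulas (eye scale of `1 - blink`, jaw offset of `-jawHeight * jawOpen`) are all illustrative.

```swift
/// Hypothetical container for the robot's animation parameters.
struct RobotPose: Equatable {
    var leftEyeScale: Float   // scale along one axis of the left eye
    var rightEyeScale: Float  // scale along one axis of the right eye
    var jawOffsetY: Float     // vertical offset of the jaw from its rest position
}

/// Maps three blend shape coefficients to robot animation parameters.
/// `jawHeight` is the assumed maximum distance the jaw can drop.
func robotPose(eyeBlinkLeft: Float,
               eyeBlinkRight: Float,
               jawOpen: Float,
               jawHeight: Float) -> RobotPose {
    // Keep inputs in the 0...1 range before using them.
    func clamp(_ value: Float) -> Float { min(max(value, 0), 1) }

    return RobotPose(
        // A fully closed eye (coefficient 1) collapses the eye along one axis.
        leftEyeScale: 1 - clamp(eyeBlinkLeft),
        rightEyeScale: 1 - clamp(eyeBlinkRight),
        // A fully open jaw (coefficient 1) moves the jaw down by jawHeight.
        jawOffsetY: -jawHeight * clamp(jawOpen)
    )
}
```

In an app, the resulting values would be assigned to the corresponding SceneKit node properties (for example, one component of an eye node's `scale` and the jaw node's `position.y`) each time the face anchor updates.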
