Error due to Bias - Accuracy and Underfitting

Bias occurs when a model has enough data but is not complex enough to capture the underlying relationships. As a result, the model consistently and systematically misrepresents the data, leading to low accuracy in prediction. This is known as underfitting.

Simply put, bias occurs when we have an inadequate model. An example might be when we have objects that are classified by color and shape, for example easter eggs, but our model can only partition and classify objects by color. It would therefore consistently mislabel future objects--for example labeling rainbows as easter eggs because they are colorful.

Another example would be continuous data that is polynomial in nature, with a model that can only represent linear relationships. In this case it does not matter how much data we feed the model because it cannot represent the underlying relationship. To overcome error from bias, we need a more complex model.1

Error due to Variance - Precision and Overfitting

When training a model, we typically use a limited number of samples from a larger population. If we repeatedly train a model with randomly selected subsets of data, we would expect its predictons to be different based on the specific examples given to it. Here variance is a measure of how much the predictions vary for any given test sample.

Some variance is normal, but too much variance indicates that the model is unable to generalize its predictions to the larger population. High sensitivity to the training set is also known as overfitting, and generally occurs when either the model is too complex or when we do not have enough data to support it.

We can typically reduce the variability of a model's predictions and increase precision by training on more data. If more data is unavailable, we can also control variance by limiting our model's complexity.

发生overfitting 的主要原因是:

(1)使用过于复杂的模型;

(2)数据噪音;

(3)有限的训练数据。

噪音与数据规模

我们可以理解地简单些:有噪音时,更复杂的模型会尽量去覆盖噪音点,即对数据过拟合!

这样,即使训练误差很小(接近于零),由于没有描绘真实的数据趋势,测试误差反而会更大。

即噪音严重误导了我们的假设。

还有一种情况,如果数据是由我们不知道的某个非常非常复杂的模型产生的,实际上有限的数据很难去“代表”这个复杂模型曲线。我们采用不恰当的假设去尽量拟合这些数据,效果一样会很差,因为部分数据对于我们不恰当的复杂假设就像是“噪音”,误导我们进行过拟合。

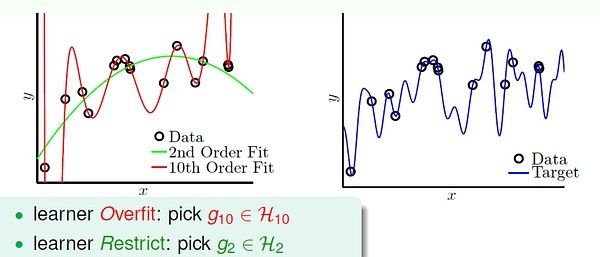

如下面的例子,假设数据是由50次幂的曲线产生的(下图右边),与其通过10次幂的假设曲线去拟合它们,还不如采用简单的2次幂曲线来描绘它的趋势。

随机噪音与确定性噪音 (Deterministic Noise)

之前说的噪音一般指随机噪音(stochastic noise),服从高斯分布;还有另一种“噪音”,就是前面提到的由未知的复杂函数f(X) 产生的数据,对于我们的假设也是噪音,这种是确定性噪音。

数据规模一定时,随机噪音越大,或者确定性噪音越大(即目标函数越复杂),越容易发生overfitting。总之,容易导致overfitting 的因素是:数据过少;随机噪音过多;确定性噪音过多;假设过于复杂(excessive power)。

解决过拟合问题

对应导致过拟合发生的几种条件,我们可以想办法来避免过拟合。

(1) 随机噪音 => 数据清洗

(2) 假设过于复杂(excessive dvc) => start from simple model

or

(3) 数据规模太小 => 收集更多数据,或根据某种规律“伪造”更多数据 正规化(regularization) 也是限制模型复杂度的(加惩罚项,对复杂模型进行惩罚)。

数据清洗(data ckeaning/Pruning)

将错误的label 纠正或者删除错误的数据。

Data Hinting: “伪造”更多数据, add "virtual examples"

例如,在数字识别的学习中,将已有的数字通过平移、旋转等,变换出更多的数据。

Underfitting vs. Overfitting

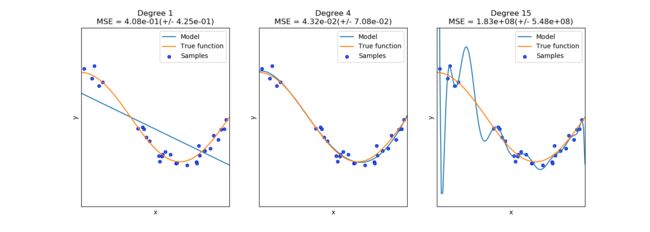

This example demonstrates the problems of underfitting and overfitting and how we can use linear regression with polynomial features to approximate nonlinear functions. The plot shows the function that we want to approximate, which is a part of the cosine function. In addition, the samples from the real function and the approximations of different models are displayed. The models have polynomial features of different degrees. We can see that a linear function (polynomial with degree 1) is not sufficient to fit the training samples. This is called underfitting. A polynomial of degree 4 approximates the true function almost perfectly. However, for higher degrees the model will overfit the training data, i.e. it learns the noise of the training data. We evaluate quantitatively overfitting / underfitting by using cross-validation. We calculate the mean squared error (MSE) on the validation set, the higher, the less likely the model generalizes correctly from the training data.

underfiting vs. fitting vs. overfittingLearning Curves

Learning Curves

A learning curve in machine learning is a graph that compares the performance of a model on training and testing data over a varying number of training instances.

When we look at the relationship between the amount of training data and performance, we should generally see performance improve as the number of training points increases.

By separating training and testing sets and graphing performance on each separately, we can get a better idea of how well the model can generalize to unseen data.

A learning curve allows us to verify when a model has learned as much as it can about the data. When this occurs, the performance on both training and testing sets plateau and there is a consistent gap between the two error rates.

Bias

When the training and testing errors converge and are quite high this usually means the model is biased. No matter how much data we feed it, the model cannot represent the underlying relationship and therefore has systematic high errors.

Variance

When there is a large gap between the training and testing error this generally means the model suffers from high variance. Unlike a biased model, models that suffer from variance generally require more data to improve. We can also limit variance by simplifying the model to represent only the most important features of the data.

Ideal Learning Curve

The ultimate goal for a model is one that has good performance that generalizes well to unseen data. In this case, both the testing and training curves converge at similar values. The smaller the gap between the training and testing sets, the better our model generalizes. The better the performance on the testing set, the better our model performs.

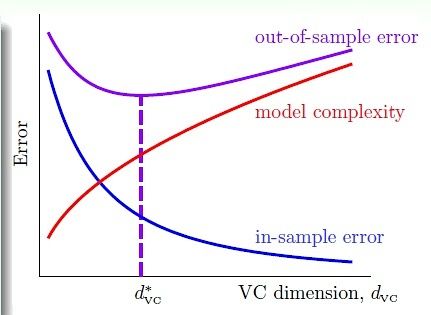

Model Complexity

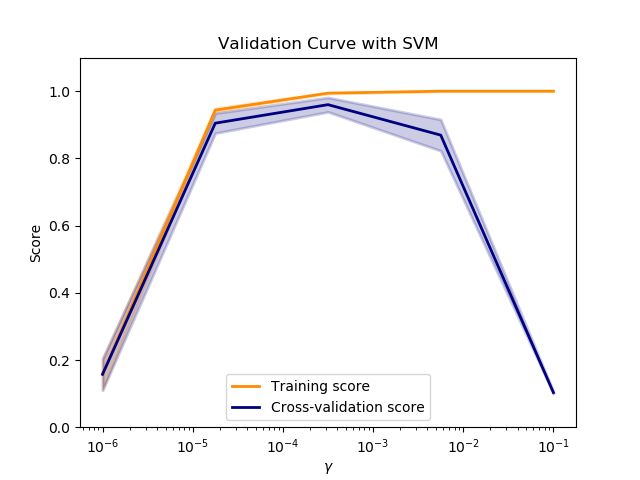

The visual technique of graphing performance is not limited to learning. With most models, we can change the complexity by changing the inputs or parameters.

A model complexity graph looks at training and testing curves as the model's complexity varies. The most common trend is that as a model's complexity increases, bias will fall off and variance will rise

Scikit-learn provides a tool for validation curves which can be used to monitor model complexity by varying the parameters of a model. We'll explore the specifics of how these parameters affect complexity in the next course on supervised learning.

随着模型复杂的上升,模型对数据的表征能力增强。但模型过于复杂会导致对training data overfitting,对数据的泛化能力下降。

Learning Curves and Model Complexity

So what is the relationship between learning curves and model complexity?

If we were to take the learning curves of the same machine learning algorithm with the same fixed set of data, but create several graphs at different levels of model complexity, all the learning curve graphs would fit together into a 3D model complexity graph.

If we took the final testing and training errors for each model complexity and visualized them along the complexity of the model we would be able to see how well the model performs as the model complexity increases.

Learning curve of overfitting