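I. ELK Stack Overview

Elasticsearch stores and searches the data, Logstash collects it, and Kibana, a display platform designed specifically for ES, visualizes it.

II. Collecting Logs with ELK Through a Message Queue

1. Environment preparation

| IP | Hostname | Operating system |
| --- | --- | --- |
| 192.168.56.11 | linux-node1 | CentOS 7 |
| 192.168.56.12 | linux-node2 | CentOS 7 |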

2、流程分析

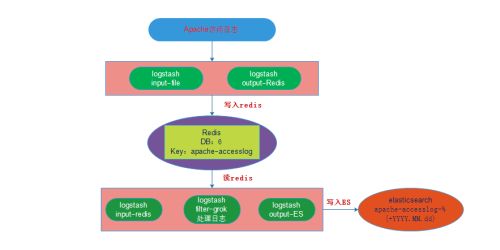

日志-->logstash(flume)-->MQ(redis,rabbitmq)-->logstash(python scripts)-->es

官网参考链接 https://www.elastic.co/guide/en/logstash/current/input-plugins.html

流程图如下:

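III. Installing and Configuring Redis

1. Install Redis

Either compiling from source or installing with yum works; here we use yum.

Run the following command on 192.168.56.12:

yum -y install redis

2. Configure Redis

Edit the Redis configuration file; two settings need to be changed:

vim /etc/redis.conf

daemonize yes #run in the background as a daemon

bind 192.168.56.12 #address to bind to

Save and exit.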

3. Start Redis and test the connection

Start Redis:

systemctl start redis

Test the connection:

[root@linux-node2 ~]# redis-cli -h 192.168.56.12 -p 6379

192.168.56.12:6379>
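The same connectivity check can be scripted. The sketch below is a minimal example using only the Python standard library: it encodes a PING command in the Redis wire format (RESP) and reports whether the server answers +PONG. The function names `encode_command` and `ping` are made up for this illustration, and the host and port defaults simply repeat the values used above.

```python
import socket


def encode_command(*parts: str) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    chunks = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode()
        chunks.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(chunks)


def ping(host: str = "192.168.56.12", port: int = 6379, timeout: float = 3.0) -> bool:
    """Return True if the server replies to PING with +PONG, False otherwise."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(encode_command("PING"))
            return sock.recv(64).startswith(b"+PONG")
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

Calling `ping()` from linux-node1 is a quick way to confirm that the `bind` setting took effect and the port is reachable from the other node.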

IV. How Logs Are Collected Through Redis

1. Writing data to Redis

On 192.168.56.12, write the redis.conf configuration file so that data from standard input is written to Redis.

[root@linux-node2 ~]# cat /etc/logstash/conf.d/redis.conf

input{

stdin{}

}

output{

redis{

host => "192.168.56.12" #host address

port => "6379" #Redis listens on port 6379 by default

db => "6" #the default Redis db is 0; here we write to db 6

data_type => "list" #use a list as the data type

key => "demo" #key used when writing the data to Redis

}

}

Start Logstash and type some input to verify:

[root@linux-node2 conf.d]# /opt/logstash/bin/logstash -f redis.conf

Settings: Default pipeline workers: 1

Pipeline main started

papapapa

Connect to Redis, select the db, and check whether the data was written:

[root@linux-node2 ~]# redis-cli -h 192.168.56.12 -p 6379

192.168.56.12:6379> select 6

OK

192.168.56.12:6379[6]> keys *

1) "demo"

192.168.56.12:6379[6]> llen demo

(integer) 1

192.168.56.12:6379[6]> lindex demo -1

"{\"message\":\"papapapa\",\"@version\":\"1\",\"@timestamp\":\"2016-09-04T08:08:11.998Z\",\"host\":\"linux-node2\"}"

192.168.56.12:6379[6]>

As shown, the data was written to Redis.
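The list element returned by lindex is the whole Logstash event serialized as JSON; the backslashes in the output are only redis-cli's escaping. As a small illustration, the Python snippet below parses that same string (with the escaping removed) and reads its fields back.

```python
import json

# The value returned by `lindex demo -1` above, without redis-cli's escaping.
raw = (
    '{"message":"papapapa","@version":"1",'
    '"@timestamp":"2016-09-04T08:08:11.998Z","host":"linux-node2"}'
)

event = json.loads(raw)

# Logstash adds @version, @timestamp and host around the typed-in message.
print(event["message"])
print(event["@timestamp"], event["host"])
```

Any consumer that pops from the list, whether Logstash or a custom script, gets the event in this form.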

2. Reading logs from files and writing them to Redis

On 192.168.56.11, write the /etc/logstash/conf.d/apache.conf configuration file:

[root@linux-node1 /var/log/httpd]# vim /etc/logstash/conf.d/apache.conf

input {

file {

path => "/var/log/httpd/access_log"

start_position => "beginning"

type => "apache-accesslog"

}

file{

path => "/var/log/elasticsearch/myes.log"

type => "es-log"

start_position => "beginning"

codec => multiline{

pattern => "^\["

negate => true

what => "previous"

}

}

}

output {

if [type] == "apache-accesslog" {

redis {

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "apache-accesslog"

}

}

if [type] == "es-log"{

redis {

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "es-log"

}

}

}
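The multiline codec on the es-log input above is worth a closer look: with pattern "^\[", negate => true and what => "previous", every line that does not start with "[" is appended to the previous event, so a stack trace stays attached to the log line that produced it. The Python function below is only a rough sketch of that grouping rule, not the codec's actual implementation; `merge_multiline` is a name invented here, and the second event in the sample is hypothetical.

```python
import re
from typing import Iterable, List


def merge_multiline(lines: Iterable[str], pattern: str = r"^\[") -> List[str]:
    """Group lines into events: a line that does NOT match `pattern`
    is joined to the previous event (negate => true, what => "previous")."""
    starts_event = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        if starts_event.search(line) or not events:
            events.append(line)          # a new event begins here
        else:
            events[-1] += "\n" + line    # continuation of the previous event
    return events


sample = [
    "[2016-09-05 03:39:50,356][INFO ][node ] [linux-node1] stopping ...",
    "[2016-09-05 03:40:01,000][WARN ][example ] something failed",  # hypothetical
    "java.lang.IllegalStateException: example",                     # hypothetical
    "    at com.example.Foo.bar(Foo.java:1)",                       # hypothetical
]

events = merge_multiline(sample)
print(len(events))
```

Four input lines become two events here, the second one carrying the exception and its stack frame.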

Start Logstash:

[root@linux-node1 /etc/logstash/conf.d]# /opt/logstash/bin/logstash -f apache.conf

Settings: Default pipeline workers: 4

Pipeline main started

On the Redis side, check whether the data has been written: connect to Redis, select db 6, and run keys *

192.168.56.12:6379[6]> keys *

1) "es-log"

2) "demo"

3) "apache-accesslog"

192.168.56.12:6379[6]> llen es-log

(integer) 44

192.168.56.12:6379[6]> lindex es-log -1

"{\"@timestamp\":\"2016-09-04T20:34:23.717Z\",\"message\":\"[2016-09-05 03:52:18,878][INFO ][cluster.routing.allocation] [linux-node1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[system-log-2016.09][3]] ...]).\",\"@version\":\"1\",\"path\":\"/var/log/elasticsearch/myes.log\",\"host\":\"linux-node1\",\"type\":\"es-log\"}"

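192.168.56.12:6379[6]> llen apache-acccesslog

(integer) 0

Note that the key in the command above is misspelled (apache-acccesslog), so Redis reports a length of 0 for a key that does not exist. The correctly spelled key, apache-accesslog, is queried next.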

192.168.56.12:6379[6]> lindex apache-accesslog -1

"{\"message\":\"papapapa\",\"@version\":\"1\",\"@timestamp\":\"2016-09-04T20:46:03.164Z\",\"path\":\"/var/log/httpd/access_log\",\"host\":\"linux-node1\",\"type\":\"apache-accesslog\"}"

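As shown, both apache-accesslog and es-log are being collected.

3. Reading data from Redis

On 192.168.56.12, write the /etc/logstash/conf.d/input_redis.conf configuration file, which prints the events to standard output: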

[root@linux-node2 conf.d]# cat input_redis.conf

input{

redis {

type => "apache-accesslog"

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "apache-accesslog"

}

redis {

type => "es-log"

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "es-log"

}

}

output{

stdout {

codec => rubydebug

}

}

Start Logstash. Once it is running, the log entries read from Redis are printed to standard output immediately:

[root@linux-node2 conf.d]# /opt/logstash/bin/logstash -f input_redis.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "::1 - - [05/Sep/2016:03:28:31 +0800] \"OPTIONS * HTTP/1.0\" 200 - \"-\" \"Apache/2.4.6 (CentOS) OpenSSL/1.0.1e-fips PHP/5.4.16 mod_wsgi/3.4 Python/2.7.5 (internal dummy connection)\"",

"@version" => "1",

"@timestamp" => "2016-09-04T20:34:22.928Z",

"path" => "/var/log/httpd/access_log",

"host" => "linux-node1",

"type" => "apache-accesslog"

}

{

"message" => "::1 - - [05/Sep/2016:03:28:31 +0800] \"OPTIONS * HTTP/1.0\" 200 - \"-\" \"Apache/2.4.6 (CentOS) OpenSSL/1.0.1e-fips PHP/5.4.16 mod_wsgi/3.4 Python/2.7.5 (internal dummy connection)\"",

"@version" => "1",

"@timestamp" => "2016-09-04T20:34:23.266Z",

"path" => "/var/log/httpd/access_log",

"host" => "linux-node1",

"type" => "apache-accesslog"

}

{

"@timestamp" => "2016-09-04T20:34:22.942Z",

"message" => "[2016-09-05 01:06:18,066][WARN ][monitor.jvm ] [linux-node1] [gc][young][10361][1114] duration [8.4s], collections [1]/[8.5s], total [8.4s]/[27.8s], memory [172.1mb]->[108.5mb]/[990.7mb], all_pools {[young] [66.4mb]->[1.5mb]/[266.2mb]}{[survivor] [3.8mb]->[4.9mb]/[33.2mb]}{[old] [101.8mb]->[102.2mb]/[691.2mb]}",

"@version" => "1",

"path" => "/var/log/elasticsearch/myes.log",

"host" => "linux-node1",

"type" => "es-log"

}

{

"@timestamp" => "2016-09-04T20:34:23.277Z",

"message" => "[2016-09-05 03:39:50,356][INFO ][node ] [linux-node1] stopping ...",

"@version" => "1",

"path" => "/var/log/elasticsearch/myes.log",

"host" => "linux-node1",

"type" => "es-log"

}
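With data_type => "list", each Redis key behaves like a queue between the two Logstash instances. The sketch below models that with an in-memory deque instead of a real Redis server. It assumes the shipper appends to the tail of the list (RPUSH) and the reader removes from the head (a blocking LPOP), which matches my understanding of the redis output and input plugins but is not something this article verifies. The helper names and the two sample messages are made up for the illustration.

```python
import json
from collections import deque

# In-memory stand-in for Redis db 6: one FIFO list per key.
lists = {"apache-accesslog": deque(), "es-log": deque()}


def rpush(key, event):
    """Shipper side: serialize the event and append it to the tail."""
    lists[key].append(json.dumps(event))


def lpop(key):
    """Reader side: remove and return the oldest event, or None if empty."""
    return json.loads(lists[key].popleft()) if lists[key] else None


def llen(key):
    """Number of events still waiting under the key."""
    return len(lists[key])


rpush("apache-accesslog", {"message": "first request", "type": "apache-accesslog"})
rpush("apache-accesslog", {"message": "second request", "type": "apache-accesslog"})

oldest = lpop("apache-accesslog")
print(oldest["message"], llen("apache-accesslog"))
```

The practical consequence is that reading consumes the data: once Logstash on linux-node2 has read the events, llen on the key drops back toward 0.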

4. Formatting the Apache logs

Use the grok plugin, one of the filter plugins, to parse the Apache logs:

[root@linux-node2 conf.d]# cat input_redis.conf

input{

redis {

type => "apache-accesslog"

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "apache-accesslog"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

output{

stdout {

codec => rubydebug

}

}

Restart Logstash and check standard output; each log line is now parsed into structured fields (clientip, verb, response, and so on):

[root@linux-node2 conf.d]# /opt/logstash/bin/logstash -f input_redis.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "192.168.56.1 - - [05/Sep/2016:05:31:39 +0800] \"GET / HTTP/1.1\" 200 67 \"-\" \"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.86 Safari/537.36\"",

"@version" => "1",

"@timestamp" => "2016-09-04T21:31:40.819Z",

"path" => "/var/log/httpd/access_log",

"host" => "linux-node1",

"type" => "apache-accesslog",

"clientip" => "192.168.56.1",

"ident" => "-",

"auth" => "-",

"timestamp" => "05/Sep/2016:05:31:39 +0800",

"verb" => "GET",

"request" => "/",

"httpversion" => "1.1",

"response" => "200",

"bytes" => "67",

"referrer" => "\"-\"",

"agent" => "\"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.86 Safari/537.36\""

}

{

"message" => "192.168.56.1 - - [05/Sep/2016:05:31:40 +0800] \"GET /favicon.ico HTTP/1.1\" 404 209 \"http://192.168.56.11:81/\" \"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.86 Safari/537.36\"",

"@version" => "1",

"@timestamp" => "2016-09-04T21:31:40.825Z",

"path" => "/var/log/httpd/access_log",

"host" => "linux-node1",

"type" => "apache-accesslog",

"clientip" => "192.168.56.1",

"ident" => "-",

"auth" => "-",

"timestamp" => "05/Sep/2016:05:31:40 +0800",

"verb" => "GET",

"request" => "/favicon.ico",

"httpversion" => "1.1",

"response" => "404",

"bytes" => "209",

"referrer" => "\"http://192.168.56.11:81/\"",

"agent" => "\"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.86 Safari/537.36\""

}
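To make it clearer what %{COMBINEDAPACHELOG} does, here is a simplified Python regular expression that extracts the same field names from the second log line above. It is only an approximation written for this illustration, not the real grok pattern: for example, it strips the surrounding quotes from referrer and agent, whereas the Logstash output above keeps them.

```python
import re

# Simplified stand-in for the COMBINEDAPACHELOG grok pattern (an approximation).
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

line = (
    '192.168.56.1 - - [05/Sep/2016:05:31:40 +0800] "GET /favicon.ico HTTP/1.1" 404 209 '
    '"http://192.168.56.11:81/" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/46.0.2490.86 Safari/537.36"'
)

match = COMBINED.match(line)
fields = match.groupdict() if match else {}

for name in ("clientip", "verb", "request", "response", "bytes"):
    print(name, "=>", fields.get(name))
```

As in the grok output, every captured value is a string, so response and bytes would need converting before any numeric aggregation.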

5. Collecting logs through Redis

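Start Logstash on both 192.168.56.11 and 192.168.56.12. On 192.168.56.11, Logstash reads the Apache log and writes it to Redis; on 192.168.56.12, Logstash reads the data from Redis, parses it into structured fields, and writes it to ES.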

Configuration file on 192.168.56.11:

[root@linux-node1 /var/log/httpd]# cat /etc/logstash/conf.d/apache.conf

input {

file {

path => "/var/log/httpd/access_log"

start_position => "beginning"

type => "apache-accesslog"

}

}

output {

if [type] == "apache-accesslog" {

redis {

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "apache-accesslog"

}

}

}

Configuration file on 192.168.56.12:

[root@linux-node2 conf.d]# cat in_redis_out.conf

input{

redis {

type => "apache-accesslog"

host => "192.168.56.12"

port => "6379"

db => "6"

data_type => "list"

key => "apache-accesslog"

}

}

filter {

if [type] == "apache-accesslog"{

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

}

output{

if [type] == "apache-accesslog"{

elasticsearch {

hosts => ["192.168.56.11:9200"]

index => "apache-accesslog-%{+YYYY.MM.dd}"

}

}

}
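The index setting "apache-accesslog-%{+YYYY.MM.dd}" builds the index name from each event's @timestamp, which Logstash keeps in UTC. The short Python sketch below reproduces that naming for one of the timestamps shown earlier; `index_for` is a hypothetical helper written for this illustration.

```python
from datetime import datetime, timezone


def index_for(timestamp: str, prefix: str = "apache-accesslog-") -> str:
    """Build the daily index name from an ISO 8601 @timestamp in UTC."""
    ts = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    return prefix + ts.strftime("%Y.%m.%d")


# @timestamp of the request logged locally at 05/Sep/2016:05:31:39 +0800
print(index_for("2016-09-04T21:31:40.819Z"))  # apache-accesslog-2016.09.04
```

This is why a request that Apache logged on 5 September local time (+0800) ends up in an index dated 2016.09.04.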

Start Logstash on both nodes:

[root@linux-node1 /etc/logstash/conf.d]# /opt/logstash/bin/logstash -f apache.conf

Settings: Default pipeline workers: 4

Pipeline main started

[root@linux-node2 conf.d]# /opt/logstash/bin/logstash -f in_redis_out.conf

Settings: Default pipeline workers: 1

Pipeline main started

Note: to make testing easier, I start Logstash in the foreground throughout. Running /etc/init.d/logstash start would load every configuration file under /etc/logstash/conf.d/, which would interfere with the tests.

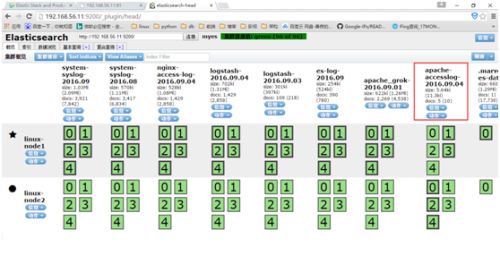

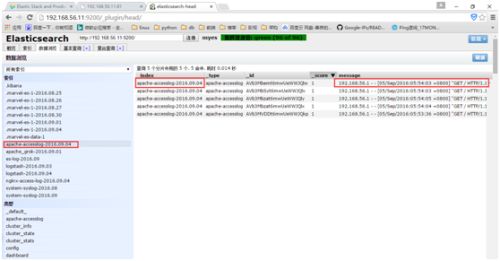

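6. Viewing the logs through ES

Access Apache on 192.168.56.11 to generate access log entries.

Then open http://192.168.56.11:9200/_plugin/head

The apache-accesslog-2016.09.04 index has been created, as shown in the figure:

Click the data browser tab to view the indexed log entries.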