0x00 Tutorial Contents

- Writing the Dockerfile

- Hadoop configuration files

- Build scripts

- Startup scripts

0x01 Writing the Dockerfile

1. The Dockerfile

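```dockerfile
FROM ubuntu

MAINTAINER shaonaiyi

ENV BUILD_ON 2017-10-12

# Refresh the package index and install tools in the same layer,
# so the install never runs against a stale cached index
RUN apt-get update -qqy && \
    apt-get -qqy install vim wget net-tools iputils-ping openssh-server

# ADD unpacks local tar archives into the target directory
ADD ./jdk-8u161-linux-x64.tar.gz /usr/local/
ADD ./hadoop-2.7.5.tar.gz /usr/local

# Add the JAVA_HOME and HADOOP_HOME environment variables
ENV JAVA_HOME /usr/local/jdk1.8.0_161
ENV HADOOP_HOME /usr/local/hadoop-2.7.5
# PATH only takes directories (not .jar files); sbin holds start-dfs.sh and start-yarn.sh
ENV PATH $HADOOP_HOME/bin:$HADOOP_HOME/sbin:$JAVA_HOME/bin:$PATH

# Passwordless SSH: every container built from this image shares this key pair
RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys && \
    chmod 600 ~/.ssh/authorized_keys

# Copy the contents of the local config/ directory into /tmp, then move each file into place
COPY config /tmp

RUN mv /tmp/ssh_config ~/.ssh/config && \
    mv /tmp/hadoop-env.sh $HADOOP_HOME/etc/hadoop/hadoop-env.sh && \
    mv /tmp/hdfs-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && \
    mv /tmp/core-site.xml $HADOOP_HOME/etc/hadoop/core-site.xml && \
    mv /tmp/yarn-site.xml $HADOOP_HOME/etc/hadoop/yarn-site.xml && \
    mv /tmp/mapred-site.xml $HADOOP_HOME/etc/hadoop/mapred-site.xml && \
    mv /tmp/master $HADOOP_HOME/etc/hadoop/master && \
    mv /tmp/slaves $HADOOP_HOME/etc/hadoop/slaves && \
    mv /tmp/start-hadoop.sh ~/start-hadoop.sh && \
    mkdir -p /usr/local/hadoop2.7/dfs/data && \
    mkdir -p /usr/local/hadoop2.7/dfs/name

# Print JAVA_HOME as a build-time sanity check
RUN echo $JAVA_HOME

WORKDIR /root

# This only starts sshd during this build step; it is not running in the
# containers later, which is why start_containers.sh starts sshd with docker exec
RUN /etc/init.d/ssh start

CMD ["/bin/bash"]
```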
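Because both `ADD` and the chained `mv` commands fail the build when a file is missing, it can help to check the build context first. The following is a minimal bash sketch; `check_build_context` is a hypothetical helper, and its file list is simply every file the Dockerfile above adds or moves out of `/tmp`.

```bash
# Hypothetical pre-build check: verify that every file the Dockerfile ADDs
# or moves out of /tmp exists in the build context.
check_build_context() {
  local dir="${1:-.}" missing=0 f
  for f in \
    jdk-8u161-linux-x64.tar.gz \
    hadoop-2.7.5.tar.gz \
    config/ssh_config \
    config/hadoop-env.sh \
    config/core-site.xml \
    config/hdfs-site.xml \
    config/mapred-site.xml \
    config/yarn-site.xml \
    config/master \
    config/slaves \
    config/start-hadoop.sh; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f" >&2   # report every missing file, not just the first
      missing=1
    fi
  done
  return "$missing"
}
```

Run it from the directory that holds the Dockerfile, for example `check_build_context . && echo ready`, before running the image build script.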

0x02 Hadoop Configuration Files

1. ssh_config

```
Host localhost
  StrictHostKeyChecking no

Host 0.0.0.0
  StrictHostKeyChecking no

Host hadoop-*
  StrictHostKeyChecking no
```
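The `Host` patterns above use `*` wildcards, so `hadoop-*` covers `hadoop-maste` as well as both workers. The bash sketch below models that matching with shell globs; `matches_ssh_host_pattern` is a hypothetical name, and it simplifies OpenSSH's rules (no `!` negation, no comma-separated pattern lists).

```bash
# Simplified model of ssh_config Host matching (only * and ? wildcards):
# succeeds if the hostname falls under one of the Host blocks above that
# disable StrictHostKeyChecking.
matches_ssh_host_pattern() {
  local host="$1" pattern
  for pattern in localhost 0.0.0.0 'hadoop-*'; do
    # The unquoted right-hand side of == is treated as a glob pattern.
    if [[ "$host" == $pattern ]]; then
      return 0
    fi
  done
  return 1
}
```

For instance, `matches_ssh_host_pattern 172.20.0.3` fails: ssh matches `Host` against the name given on the command line, so connecting to a worker by IP address would still trigger the host-key confirmation prompt.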

2. profile

```bash
# /etc/profile: system-wide .profile file for the Bourne shell (sh(1))
# and Bourne compatible shells (bash(1), ksh(1), ash(1), ...).

if [ "$PS1" ]; then
  if [ "$BASH" ] && [ "$BASH" != "/bin/sh" ]; then
    # The file bash.bashrc already sets the default PS1.
    # PS1='\h:\w\$ '
    if [ -f /etc/bash.bashrc ]; then
      . /etc/bash.bashrc
    fi
  else
    if [ "`id -u`" -eq 0 ]; then
      PS1='# '
    else
      PS1='$ '
    fi
  fi
fi

if [ -d /etc/profile.d ]; then
  for i in /etc/profile.d/*.sh; do
    if [ -r $i ]; then
      . $i
    fi
  done
  unset i
fi

export JAVA_HOME=/usr/local/jdk1.8.0_161
export SCALA_HOME=/usr/local/scala-2.11.8
export HADOOP_HOME=/usr/local/hadoop-2.7.5
export SPARK_HOME=/usr/local/spark-2.2.0-bin-hadoop2.7
export PATH=$JAVA_HOME/bin:$SCALA_HOME/bin:$HADOOP_HOME/bin:$SPARK_HOME/bin:$PATH
```
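After `source /etc/profile` inside a container, one way to confirm these exports is to check that each `*_HOME` directory exists and that its `bin` directory is on `PATH`. A bash sketch, with `check_homes` as a hypothetical helper:

```bash
# Hypothetical sanity check: for each variable name given, report whether the
# directory it points to exists and whether its bin/ directory is on PATH.
check_homes() {
  local name dir status=0
  for name in "$@"; do
    dir="${!name:-}"   # indirect expansion: the value of the variable named $name
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "$name: directory '$dir' not found"
      status=1
    elif [[ ":$PATH:" != *":$dir/bin:"* ]]; then
      echo "$name: $dir/bin is not on PATH"
      status=1
    else
      echo "$name: ok"
    fi
  done
  return "$status"
}
```

Usage: `check_homes JAVA_HOME SCALA_HOME HADOOP_HOME SPARK_HOME`. The Dockerfile above only unpacks the JDK and Hadoop, so SCALA_HOME and SPARK_HOME would be reported as missing unless Scala and Spark are installed separately.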

3. hadoop-env.sh

```bash
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are
# optional. When running a distributed configuration it is best to
# set JAVA_HOME in this file, so that it is correctly defined on
# remote nodes.

# The java implementation to use.
export JAVA_HOME=/usr/local/jdk1.8.0_161

# The jsvc implementation to use. Jsvc is required to run secure datanodes
# that bind to privileged ports to provide authentication of data transfer
# protocol. Jsvc is not required if SASL is configured for authentication of
# data transfer protocol using non-privileged ports.
#export JSVC_HOME=${JSVC_HOME}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Extra Java CLASSPATH elements. Automatically insert capacity-scheduler.
for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do
  if [ "$HADOOP_CLASSPATH" ]; then
    export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f
  else
    export HADOOP_CLASSPATH=$f
  fi
done

# The maximum amount of heap to use, in MB. Default is 1000.
#export HADOOP_HEAPSIZE=
#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Extra Java runtime options. Empty by default.
export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Command specific options appended to HADOOP_OPTS when specified
export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"
export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"
export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# The following applies to multiple commands (fs, dfs, fsck, distcp etc)
export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"
#HADOOP_JAVA_PLATFORM_OPTS="-XX:-UsePerfData $HADOOP_JAVA_PLATFORM_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.
# This **MUST** be uncommented to enable secure HDFS if using privileged ports
# to provide authentication of data transfer protocol. This **MUST NOT** be
# defined if SASL is configured for authentication of data transfer protocol
# using non-privileged ports.
export HADOOP_SECURE_DN_USER=${HADOOP_SECURE_DN_USER}

# Where log files are stored. $HADOOP_HOME/logs by default.
#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER

# Where log files are stored in the secure data environment.
export HADOOP_SECURE_DN_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

###
# HDFS Mover specific parameters
###
# Specify the JVM options to be used when starting the HDFS Mover.
# These options will be appended to the options specified as HADOOP_OPTS
# and therefore may override any similar flags set in HADOOP_OPTS
#
# export HADOOP_MOVER_OPTS=""

###
# Advanced Users Only!
###

# The directory where pid files are stored. /tmp by default.
# NOTE: this should be set to a directory that can only be written to by
#       the user that will run the hadoop daemons. Otherwise there is the
#       potential for a symlink attack.
export HADOOP_PID_DIR=${HADOOP_PID_DIR}
export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# A string representing this instance of hadoop. $USER by default.
export HADOOP_IDENT_STRING=$USER
```
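The capacity-scheduler loop in this file joins jar paths with `:`. The same idea as a reusable bash function is sketched below; `build_classpath` is a hypothetical name. One difference: when the directory has no jars (or does not exist), bash leaves the glob unexpanded, so the original loop appends the literal `.../*.jar` string, while this version skips it.

```bash
# Join every *.jar in a directory into a colon-separated classpath.
build_classpath() {
  local dir="$1" cp="" f
  for f in "$dir"/*.jar; do
    [ -e "$f" ] || continue   # skip the unexpanded pattern when nothing matches
    cp="${cp:+$cp:}$f"        # insert ':' only between entries
  done
  printf '%s\n' "$cp"
}
```

For example, `export HADOOP_CLASSPATH=$(build_classpath "$HADOOP_HOME/contrib/capacity-scheduler")` would replace the loop, leaving the variable empty rather than holding a bogus pattern.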

4. core-site.xml

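```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop-maste:9000/</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/local/hadoop/tmp</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.oozie.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.oozie.groups</name>
    <value>*</value>
  </property>
</configuration>
```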
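To read a single value back out of these files, `hdfs getconf -confKey fs.defaultFS` on a running node is the reliable route. For a quick look at a file without Hadoop installed, the bash/awk sketch below works on the one-tag-per-line layout used here; `get_property` is a hypothetical helper, not a real XML parser.

```bash
# Print the <value> that follows <name>NAME</name> in a Hadoop *-site.xml file.
# Assumes one tag per line, as in the files above.
get_property() {
  local file="$1" name="$2"
  awk -v want="<name>$name</name>" '
    index($0, want) { found = 1; next }   # found the property we are after
    found && /<value>/ {
      sub(/.*<value>/, "")                # drop everything up to <value>
      sub(/<\/value>.*/, "")              # drop </value> and anything after it
      print
      exit
    }
  ' "$file"
}
```

For example, `get_property core-site.xml fs.defaultFS` prints `hdfs://hadoop-maste:9000/`, and an unknown key prints nothing.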

5. hdfs-site.xml

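```xml
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/local/hadoop2.7/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/local/hadoop2.7/dfs/data</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
</configuration>
```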

6. master

```
hadoop-maste
```

7. slaves

```
hadoop-node1
hadoop-node2
```
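`dfs.replication` is 2 in hdfs-site.xml and the slaves file lists two DataNodes, which fits: HDFS cannot place more replicas of a block than there are DataNodes, so a higher value would leave blocks under-replicated. A bash sketch of that check, with `count_datanodes` and `check_replication` as hypothetical helpers; it counts one hostname per line and ignores blank lines and `#` comments.

```bash
# Count hostnames in a slaves file (skipping blank and comment lines).
count_datanodes() {
  # grep -c prints 0 but exits 1 when nothing matches; keep the status at 0.
  grep -cvE '^[[:space:]]*(#|$)' "$1" || true
}

# Fail if the replication factor exceeds the number of DataNodes.
check_replication() {
  local slaves_file="$1" replication="$2" nodes
  nodes=$(count_datanodes "$slaves_file")
  if [ "$replication" -gt "$nodes" ]; then
    echo "dfs.replication=$replication exceeds $nodes DataNode(s)"
    return 1
  fi
  echo "ok: $nodes DataNode(s), dfs.replication=$replication"
}
```

Usage: `check_replication slaves 2` succeeds with the file above, while `check_replication slaves 3` fails.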

8. mapred-site.xml

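```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop-maste:10020</value>
  </property>
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>4096</value>
  </property>
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.staging-dir</name>
    <value>/stage</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.done-dir</name>
    <value>/mr-history/done</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.intermediate-done-dir</name>
    <value>/mr-history/tmp</value>
  </property>
</configuration>
```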

9. yarn-site.xml

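```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop-maste</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>hadoop-maste:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>hadoop-maste:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>hadoop-maste:8035</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>hadoop-maste:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>hadoop-maste:8088</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>5</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>22528</value>
    <description>Memory available to containers on each node, in MB</description>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>4096</value>
    <description>Minimum memory a single container can request; default 1024 MB</description>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>16384</value>
    <description>Maximum memory a single container can request; default 8192 MB</description>
  </property>
</configuration>
```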
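These limits interact with the map and reduce sizes in mapred-site.xml. Assuming the default CapacityScheduler with its memory-only resource calculator, a container request is rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb`, and a request above `yarn.scheduler.maximum-allocation-mb` is treated as invalid. The bash sketch below applies that arithmetic to the values above; the function names are hypothetical.

```bash
# Round a request up to a multiple of the minimum allocation; refuse
# requests above the maximum allocation.
normalize_mb() {
  local request="$1" min="$2" max="$3"
  if (( request > max )); then
    echo "request ${request}MB exceeds maximum ${max}MB" >&2
    return 1
  fi
  echo $(( (request + min - 1) / min * min ))
}

# How many containers of one size fit into a NodeManager's memory.
containers_per_node() {
  echo $(( $1 / $2 ))
}

NM_MB=22528; MIN_MB=4096; MAX_MB=16384; VMEM_RATIO=5
map_mb=$(normalize_mb 4096 "$MIN_MB" "$MAX_MB")     # mapreduce.map.memory.mb
reduce_mb=$(normalize_mb 8192 "$MIN_MB" "$MAX_MB")  # mapreduce.reduce.memory.mb
echo "map containers per node: $(containers_per_node "$NM_MB" "$map_mb")"
echo "reduce containers per node: $(containers_per_node "$NM_MB" "$reduce_mb")"
# Virtual memory limit = allocated physical memory x yarn.nodemanager.vmem-pmem-ratio
echo "virtual memory limit per map container: $(( map_mb * VMEM_RATIO ))MB"
```

With these values it reports 5 map containers or 2 reduce containers per NodeManager and a 20480 MB virtual-memory limit for a map container. The MapReduce ApplicationMaster's default request of 1536 MB (`yarn.app.mapreduce.am.resource.mb`) would likewise be rounded up to 4096 MB.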

0x03 Build Scripts

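1. Script that creates the container network (build_network.sh)

```bash
#!/bin/bash
echo "create network"
docker network create --subnet=172.20.0.0/16 bigdata
echo "create success"
docker network ls
```

2. Script that builds the image (build.sh)

```bash
#!/bin/bash
echo "build hadoop images"
# Use the same image name that start_containers.sh runs
docker build -t shaonaiyi/hadoop .
```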
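The containers are later started with fixed addresses (172.20.0.2 to 172.20.0.4), and Docker only accepts `--ip` values inside the network's subnet. A bash sketch that checks an address against a CIDR range; `ip_to_int` and `ip_in_subnet` are hypothetical helpers using plain shell arithmetic.

```bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IP lies inside NET/BITS, e.g. ip_in_subnet 172.20.0.2 172.20.0.0/16
ip_in_subnet() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))   # e.g. /16 -> 0xFFFF0000
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `ip_in_subnet 172.20.0.4 172.20.0.0/16` succeeds, while `ip_in_subnet 172.21.0.2 172.20.0.0/16` fails.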

0x04 Startup Scripts

1. Start the containers (start_containers.sh)

```bash
#!/bin/bash
echo "start hadoop-maste container..."
# Remove any previous master container (the name must match --name below)
docker rm -f hadoop-maste &> /dev/null
docker run -itd --restart=always \
  --net bigdata --ip 172.20.0.2 --privileged \
  -p 18032:8032 -p 28080:18080 -p 29888:19888 -p 17077:7077 \
  -p 51070:50070 -p 18088:8088 -p 19000:9000 -p 11100:11000 \
  -p 51030:50030 -p 18050:8050 -p 18081:8081 -p 18900:8900 \
  --name hadoop-maste --hostname hadoop-maste \
  --add-host hadoop-node1:172.20.0.3 --add-host hadoop-node2:172.20.0.4 \
  shaonaiyi/hadoop /bin/bash

echo "start hadoop-node1 container..."
docker run -itd --restart=always \
  --net bigdata --ip 172.20.0.3 --privileged \
  -p 18042:8042 -p 51010:50010 -p 51020:50020 \
  --name hadoop-node1 --hostname hadoop-node1 \
  --add-host hadoop-maste:172.20.0.2 --add-host hadoop-node2:172.20.0.4 \
  shaonaiyi/hadoop /bin/bash

echo "start hadoop-node2 container..."
# Host ports differ from node1's; inside the container the DataNode still uses 50010/50020
docker run -itd --restart=always \
  --net bigdata --ip 172.20.0.4 --privileged \
  -p 18043:8042 -p 51011:50010 -p 51021:50020 \
  --name hadoop-node2 --hostname hadoop-node2 \
  --add-host hadoop-maste:172.20.0.2 --add-host hadoop-node1:172.20.0.3 \
  shaonaiyi/hadoop /bin/bash

echo "start sshd..."
docker exec -it hadoop-maste /etc/init.d/ssh start
docker exec -it hadoop-node1 /etc/init.d/ssh start
docker exec -it hadoop-node2 /etc/init.d/ssh start

echo "finished"
docker ps
```
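Hadoop's start scripts reach the workers over SSH. Because every container comes from the same image, they all share the key pair generated in the Dockerfile, so the master's key is already in each worker's `authorized_keys`. The bash sketch below checks that from the master container once sshd is running; `check_ssh_from_master` is a hypothetical helper, and `-o BatchMode=yes` makes ssh fail instead of prompting for a password.

```bash
# From the master container, ssh to each worker by hostname and confirm it
# answers with its own hostname, without any password prompt.
check_ssh_from_master() {
  local master="$1" host out status=0
  shift
  for host in "$@"; do
    if out=$(docker exec "$master" ssh -o BatchMode=yes "$host" hostname 2>/dev/null) && [ "$out" = "$host" ]; then
      echo "$host: ok"
    else
      echo "$host: ssh failed"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_ssh_from_master hadoop-maste hadoop-node1 hadoop-node2`.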

2. Stop the containers (stop_containers.sh)

```bash
#!/bin/bash
docker stop hadoop-maste
docker stop hadoop-node1
docker stop hadoop-node2
echo "stop containers"

docker rm hadoop-maste
docker rm hadoop-node1
docker rm hadoop-node2
echo "rm containers"

docker ps
```
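`docker rm -f` stops and removes a running container in one step. The bash sketch below uses it in a loop so the stop script also works when some containers are already gone; `remove_cluster` is a hypothetical helper.

```bash
# Stop and remove each named container; report names that do not exist.
remove_cluster() {
  local name
  for name in "$@"; do
    if docker rm -f "$name" >/dev/null 2>&1; then
      echo "removed $name"
    else
      echo "skipped $name (not found)"
    fi
  done
}
```

Usage: `remove_cluster hadoop-maste hadoop-node1 hadoop-node2`.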

3. Start the Hadoop cluster (start_hadoop.sh)

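The contents of the `start-hadoop.sh` that the Dockerfile moves into `/root` are not shown in this post. A minimal bash sketch, assuming `start_hadoop.sh` simply runs that script on the master, plus a hypothetical `expect_daemons` check built on `jps`, which ships with the JDK and is on `PATH` through the image's `ENV PATH`.

```bash
# Assumption: start_hadoop.sh only needs to run the script baked into the image.
start_hadoop() {
  docker exec hadoop-maste bash /root/start-hadoop.sh
}

# Check that `jps` inside a container lists every expected Java daemon.
expect_daemons() {
  local container="$1" daemon procs status=0
  shift
  procs=$(docker exec "$container" jps) || return 1
  for daemon in "$@"; do
    # -w keeps "NameNode" from matching inside "SecondaryNameNode"
    if grep -qw "$daemon" <<< "$procs"; then
      echo "$container: $daemon running"
    else
      echo "$container: $daemon missing"
      status=1
    fi
  done
  return "$status"
}
```

For example, `expect_daemons hadoop-maste NameNode ResourceManager` and `expect_daemons hadoop-node1 DataNode NodeManager`, assuming start-hadoop.sh starts both HDFS and YARN.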

0xFF Summary

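- This post is the resources part of building a Hadoop big-data cluster with Docker. It suits readers with some background, though beginners can follow along too. Continue with: D001.7 Building a Hadoop Cluster with Docker (Practice)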

-

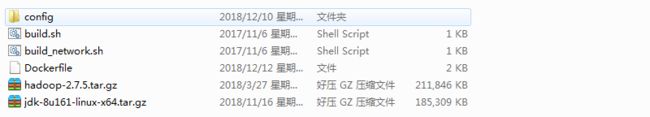

目录结构如下:

-

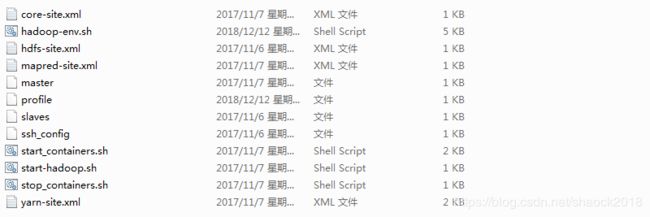

config文件夹如下:

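- For a conventional (non-Docker) installation, see the tutorial: Hadoop Core Components: Installing and Configuring HDFS

- To get the resources, besides following this post, you can also add the author on WeChat: shaonaiyi888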

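**About the author: 邵奈一 (Shao Naiyi)
University big-data lecturer, university market observer, column editor**

WeChat Official Account, Weibo, CSDN: 邵奈一

All lessons in this series are original work by 邵奈一. Please credit the source when reposting.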