private MediaFormat mediaFormat;

private MediaCodec mediaCodec;

private MediaCodec.BufferInfo info;

private Surface surface;//这个是OpenGL渲染的Surface

/**

* 初始化MediaCodec

*

* @param codecName

* @param width

* @param height

* @param csd_0

* @param csd_1

*/

public void initMediaCodec(String codecName, int width, int height, byte[] csd_0, byte[] csd_1) {

try {

if (surface != null) {

String mime = VideoSupportUtil.findVideoCodecName(codecName);

mediaFormat = MediaFormat.createVideoFormat(mime, width, height);

mediaFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, width * height);

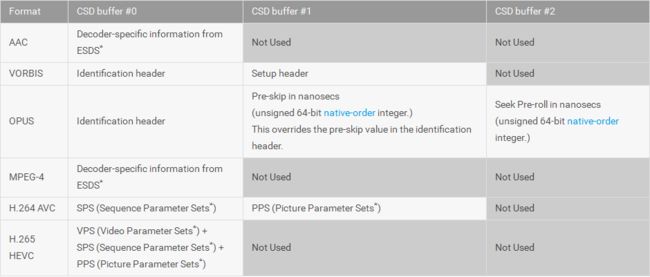

mediaFormat.setByteBuffer("csd-0", ByteBuffer.wrap(csd_0));

mediaFormat.setByteBuffer("csd-1", ByteBuffer.wrap(csd_1));

MyLog.d(mediaFormat.toString());

mediaCodec = MediaCodec.createDecoderByType(mime);

info = new MediaCodec.BufferInfo();

if(mediaCodec == null) {

MyLog.d("craete mediaCodec wrong");

return;

}

mediaCodec.configure(mediaFormat, surface, null, 0);

mediaCodec.start();

}

} catch (Exception e) {

e.printStackTrace();

}

}

}

VideoSupportUtil.java

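```java
import android.media.MediaCodecInfo;
import android.media.MediaCodecList;

import java.util.HashMap;
import java.util.Map;

public class VideoSupportUtil {

    // Maps FFmpeg codec names to MediaCodec MIME types
    private static final Map<String, String> codecMap = new HashMap<>();

    static {
        codecMap.put("h264", "video/avc");
    }

    // Returns the MIME type for an FFmpeg codec name, or "" if the name is not mapped
    public static String findVideoCodecName(String ffcodecname) {
        if (codecMap.containsKey(ffcodecname)) {
            return codecMap.get(ffcodecname);
        }
        return "";
    }

    // Returns true if some decoder on this device supports the codec's MIME type.
    // getCodecCount()/getCodecInfoAt() are deprecated since API 21; the newer equivalent is
    // new MediaCodecList(MediaCodecList.REGULAR_CODECS).getCodecInfos().
    public static boolean isSupportCodec(String ffcodecname) {
        String mime = findVideoCodecName(ffcodecname);
        if (mime.isEmpty()) {
            return false;
        }
        int count = MediaCodecList.getCodecCount();
        for (int i = 0; i < count; i++) {
            MediaCodecInfo codecInfo = MediaCodecList.getCodecInfoAt(i);
            if (codecInfo.isEncoder()) {
                continue; // playback needs a decoder; encoders also list "video/avc"
            }
            for (String type : codecInfo.getSupportedTypes()) {
                if (type.equalsIgnoreCase(mime)) {
                    return true;
                }
            }
        }
        return false;
    }
}
```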
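`codecMap` above only knows `"h264"`. A sketch of how the same lookup could cover a few more codecs, assuming FFmpeg's native decoder names (`hevc`, `mpeg4`, `vp8`, `vp9`) and the MIME strings that match Android's `MediaFormat.MIMETYPE_VIDEO_*` constants; the class `CodecMimeTable` is hypothetical. Adding an entry only changes the lookup: each codec also needs its own codec-specific data handling, and external decoders such as `libvpx` report different names.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical extension of VideoSupportUtil's table (plain Java, no Android dependency)
public class CodecMimeTable {

    // FFmpeg decoder name -> MediaCodec MIME type
    private static final Map<String, String> CODEC_MAP = new HashMap<>();

    static {
        CODEC_MAP.put("h264", "video/avc");
        CODEC_MAP.put("hevc", "video/hevc");
        CODEC_MAP.put("mpeg4", "video/mp4v-es");
        CODEC_MAP.put("vp8", "video/x-vnd.on2.vp8");
        CODEC_MAP.put("vp9", "video/x-vnd.on2.vp9");
    }

    // Same contract as findVideoCodecName: "" when the name is unknown
    public static String findVideoCodecName(String ffCodecName) {
        return CODEC_MAP.getOrDefault(ffCodecName, "");
    }

    public static void main(String[] args) {
        System.out.println(findVideoCodecName("hevc")); // prints video/hevc
    }
}
```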

C++ layer:

```cpp
// codec->name is the FFmpeg codec name, e.g. "h264"
const char *codecName = avCodecContext->codec->name;

// The whole extradata is passed as both csd-0 and csd-1
onCallInitMediacodec(
        codecName,
        avCodecContext->width,
        avCodecContext->height,
        avCodecContext->extradata_size,
        avCodecContext->extradata_size,
        avCodecContext->extradata,
        avCodecContext->extradata);
```
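The call above passes the entire extradata as both csd-0 and csd-1. Android's MediaCodec documentation describes H.264 codec-specific data as csd-0 = SPS and csd-1 = PPS. When the stream is demuxed from MP4 or FLV, FFmpeg's extradata is usually an avcC record (AVCDecoderConfigurationRecord, first byte `1`) rather than raw NAL units. A minimal sketch of splitting it on the Java side, assuming avcC input; the class `AvcConfigSplitter` is hypothetical, and it prefixes each parameter set with a `00 00 00 01` start code:

```java
import java.io.ByteArrayOutputStream;

// Hypothetical helper: splits an avcC record into Annex-B SPS (csd-0) and PPS (csd-1) buffers.
// avcC layout: version(1) profile(1) compat(1) level(1) lengthSize(1)
//              numSps(low 5 bits) {len(2) sps}... numPps(1) {len(2) pps}...
public class AvcConfigSplitter {

    private static final byte[] START_CODE = {0, 0, 0, 1};

    /** Returns {csd0, csd1}; throws IllegalArgumentException if the input is not avcC. */
    public static byte[][] split(byte[] avcc) {
        if (avcc == null || avcc.length < 7 || avcc[0] != 1) {
            throw new IllegalArgumentException("not an avcC record");
        }
        int pos = 5;
        int numSps = avcc[pos++] & 0x1F; // lower 5 bits hold the SPS count
        ByteArrayOutputStream sps = new ByteArrayOutputStream();
        pos = copyNalUnits(avcc, pos, numSps, sps);

        if (pos >= avcc.length) {
            throw new IllegalArgumentException("truncated avcC record");
        }
        int numPps = avcc[pos++] & 0xFF;
        ByteArrayOutputStream pps = new ByteArrayOutputStream();
        copyNalUnits(avcc, pos, numPps, pps);

        return new byte[][] {sps.toByteArray(), pps.toByteArray()};
    }

    // Copies `count` NAL units with 16-bit big-endian length prefixes starting at `pos`,
    // writing each one behind a start code; returns the position after the last unit.
    private static int copyNalUnits(byte[] src, int pos, int count, ByteArrayOutputStream out) {
        for (int i = 0; i < count; i++) {
            if (pos + 2 > src.length) {
                throw new IllegalArgumentException("truncated avcC record");
            }
            int len = ((src[pos] & 0xFF) << 8) | (src[pos + 1] & 0xFF);
            pos += 2;
            if (pos + len > src.length) {
                throw new IllegalArgumentException("truncated avcC record");
            }
            out.write(START_CODE, 0, START_CODE.length);
            out.write(src, pos, len);
            pos += len;
        }
        return pos;
    }
}
```

One way to use it, assuming the extradata reaches `initMediaCodec` unchanged as `csd_0`: `byte[][] csd = AvcConfigSplitter.split(csd_0);`, then hand `csd[0]` and `csd[1]` to the two `setByteBuffer` calls. Extradata that already begins with a `00 00 00 01` or `00 00 01` start code is Annex-B; the helper rejects it because its first byte is not `1`.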

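```cpp
// Look up jmid_initmediacodec once (e.g. in the constructor, where jniEnv is valid) and keep
// it as a member; a jmethodID stays valid as long as its class is loaded
jclass jlz = jniEnv->GetObjectClass(jobj);
jmid_initmediacodec = jniEnv->GetMethodID(jlz, "initMediaCodec", "(Ljava/lang/String;II[B[B)V");
```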
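The descriptor `(Ljava/lang/String;II[B[B)V` encodes the Java signature of `initMediaCodec`: `String` becomes `Ljava/lang/String;`, each `int` becomes `I`, each `byte[]` becomes `[B`, and the `void` return becomes `V`. A small sketch that derives such descriptors with reflection, so a hand-written string can be checked against the real method; the class `JniDescriptor` is hypothetical (the JDK's `javap -s` prints the same descriptors for compiled classes):

```java
import java.lang.reflect.Method;

// Hypothetical helper that builds JNI type/method descriptors from Java types
public class JniDescriptor {

    // Maps one Java type to its JNI type signature
    public static String typeSig(Class<?> c) {
        if (c.isArray()) return "[" + typeSig(c.getComponentType());
        if (c == void.class) return "V";
        if (c == boolean.class) return "Z";
        if (c == byte.class) return "B";
        if (c == char.class) return "C";
        if (c == short.class) return "S";
        if (c == int.class) return "I";
        if (c == long.class) return "J";
        if (c == float.class) return "F";
        if (c == double.class) return "D";
        // Reference types: "L" + fully qualified name with '/' separators + ";"
        return "L" + c.getName().replace('.', '/') + ";";
    }

    // Builds "(parameters)return" from explicit types
    public static String methodSig(Class<?> ret, Class<?>... params) {
        StringBuilder sb = new StringBuilder("(");
        for (Class<?> p : params) sb.append(typeSig(p));
        return sb.append(')').append(typeSig(ret)).toString();
    }

    // Same, but read from a reflected method
    public static String methodSig(Method m) {
        return methodSig(m.getReturnType(), m.getParameterTypes());
    }

    // Stand-in with the same parameter list as the article's initMediaCodec
    public void initMediaCodec(String codecName, int width, int height, byte[] csd_0, byte[] csd_1) {
    }

    public static void main(String[] args) throws NoSuchMethodException {
        Method m = JniDescriptor.class.getMethod("initMediaCodec",
                String.class, int.class, int.class, byte[].class, byte[].class);
        System.out.println(methodSig(m)); // prints (Ljava/lang/String;II[B[B)V
    }
}
```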

```cpp
// Called on a worker thread, so the thread must be attached to the JVM before using JNI
void onCallInitMediacodec(const char *codecName, int width, int height,
                          int csd0_size, int csd1_size, uint8_t *csd_0, uint8_t *csd_1) {
    JNIEnv *jniEnv;
    if (javaVM->AttachCurrentThread(&jniEnv, 0) != JNI_OK) {
        if (LOG_DEBUG) {
            LOGE("call onCallInitMediacodec wrong");
        }
        return;
    }

    // Copy the native string and buffers into Java objects
    jstring type = jniEnv->NewStringUTF(codecName);
    jbyteArray csd0 = jniEnv->NewByteArray(csd0_size);
    jniEnv->SetByteArrayRegion(csd0, 0, csd0_size, reinterpret_cast<const jbyte *>(csd_0));
    jbyteArray csd1 = jniEnv->NewByteArray(csd1_size);
    jniEnv->SetByteArrayRegion(csd1, 0, csd1_size, reinterpret_cast<const jbyte *>(csd_1));

    // jobj must be a global reference (NewGlobalRef) to be usable from this thread
    jniEnv->CallVoidMethod(jobj, jmid_initmediacodec, type, width, height, csd0, csd1);

    jniEnv->DeleteLocalRef(csd0);
    jniEnv->DeleteLocalRef(csd1);
    jniEnv->DeleteLocalRef(type);
    javaVM->DetachCurrentThread();
}
```

Decoding AVPacket data

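```java
// Feeds one compressed packet to the decoder and renders any decoded frames to the Surface
public void decodeAVPacket(int datasize, byte[] data) {
    if (surface != null && mediaCodec != null && datasize > 0 && data != null) {
        // Wait up to 10 microseconds for a free input buffer; the packet is dropped if none is free
        int inputBufferIndex = mediaCodec.dequeueInputBuffer(10);
        if (inputBufferIndex >= 0) {
            // Compressed data goes into an INPUT buffer (on API 21+, getInputBuffer(index) is preferred)
            ByteBuffer byteBuffer = mediaCodec.getInputBuffers()[inputBufferIndex];
            byteBuffer.clear();
            byteBuffer.put(data, 0, datasize);
            mediaCodec.queueInputBuffer(inputBufferIndex, 0, datasize, 0, 0);
        }

        // Each outputBufferIndex >= 0 is a decoded frame; releasing it with render == true
        // draws it onto the Surface
        int outputBufferIndex = mediaCodec.dequeueOutputBuffer(info, 10);
        while (outputBufferIndex >= 0) {
            mediaCodec.releaseOutputBuffer(outputBufferIndex, true);
            outputBufferIndex = mediaCodec.dequeueOutputBuffer(info, 10);
        }
    }
}
```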

The C++ layer calls back decodeAVPacket with:

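```cpp
int datasize = avPacket->size;
uint8_t *data = avPacket->data;
// JNI needs a jbyteArray: copy the uint8_t* buffer as in initialization, and DeleteLocalRef it after the call
jbyteArray jdata = jniEnv->NewByteArray(datasize);
jniEnv->SetByteArrayRegion(jdata, 0, datasize, reinterpret_cast<const jbyte *>(data));
```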
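`avPacket->data` is handed to Java unchanged. For H.264 demuxed from MP4 or FLV, FFmpeg's packets are usually length-prefixed (AVCC) rather than start-code delimited (Annex-B). The article does not show a conversion step; on the native side FFmpeg's `h264_mp4toannexb` bitstream filter is the usual tool (it also inserts SPS/PPS before keyframes). If the conversion is done in Java instead, a minimal sketch, assuming 4-byte NAL length fields (lengthSizeMinusOne = 3 in the avcC record, the common case); the class `AvccToAnnexB` is hypothetical:

```java
// Hypothetical helper: rewrites an AVCC (length-prefixed) H.264 packet into Annex-B in place.
// Assumes 4-byte big-endian NAL length fields, so each length can be overwritten by a
// 4-byte start code without changing the packet size.
public class AvccToAnnexB {

    private static final byte[] START_CODE = {0, 0, 0, 1};

    // Throws IllegalArgumentException on a malformed packet; earlier NAL units
    // may already have been rewritten when that happens.
    public static void convertInPlace(byte[] data, int size) {
        int pos = 0;
        while (pos + 4 <= size) {
            int nalLen = ((data[pos] & 0xFF) << 24) | ((data[pos + 1] & 0xFF) << 16)
                    | ((data[pos + 2] & 0xFF) << 8) | (data[pos + 3] & 0xFF);
            if (nalLen < 0 || nalLen > size - pos - 4) {
                throw new IllegalArgumentException("bad NAL length at offset " + pos);
            }
            System.arraycopy(START_CODE, 0, data, pos, 4); // length field -> start code
            pos += 4 + nalLen;
        }
        if (pos != size) {
            throw new IllegalArgumentException("trailing bytes in packet");
        }
    }
}
```

In `decodeAVPacket`, this would run as `AvccToAnnexB.convertInPlace(data, datasize);` before `byteBuffer.put(...)`. Converting in place avoids an extra copy per packet, which is why the sketch is limited to 4-byte length fields.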