Version History

| Version | Date |
|---|---|
| V1.0 | 2018.08.18 |

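Preface

AVFoundation is one of the most important frameworks on iOS. Nearly all software and hardware control related to audio and video lives in it, and the next few articles introduce and explain it. If you are interested, see my earlier articles: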

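1. AVFoundation Framework Analysis (1): Basic Overview
2. AVFoundation Framework Analysis (2): Implementing Video Preview, Recording, and Saving to the Photo Album
3. AVFoundation Framework Analysis (3): Key Questions, a Deep Overview of the Framework
4. AVFoundation Framework Analysis (4): Key Questions, Exploring AVFoundation (1)
5. AVFoundation Framework Analysis (5): Key Questions, Exploring AVFoundation (2)
6. AVFoundation Framework Analysis (6): Video and Audio Composition (1)
7. AVFoundation Framework Analysis (7): Debugging Video Composition and Audio Mixing
8. AVFoundation Framework Analysis (8): Optimizing the User's Playback Experience
9. AVFoundation Framework Analysis (9): Changes in AVFoundation (1)
10. AVFoundation Framework Analysis (10): Changes in AVFoundation (2)
11. AVFoundation Framework Analysis (11): Changes in AVFoundation (3)
12. AVFoundation Framework Analysis (12): Changes in AVFoundation (4)
13. AVFoundation Framework Analysis (13): Building a Basic Playback App
14. AVFoundation Framework Analysis (14): Timecode Support in AVAssetWriter and AVAssetReader (1)
15. AVFoundation Framework Analysis (15): Timecode Support in AVAssetWriter and AVAssetReader (2)
16. AVFoundation Framework Analysis (16): A Simple Example of Playing, Recording, and Merging Videos (1)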

源码

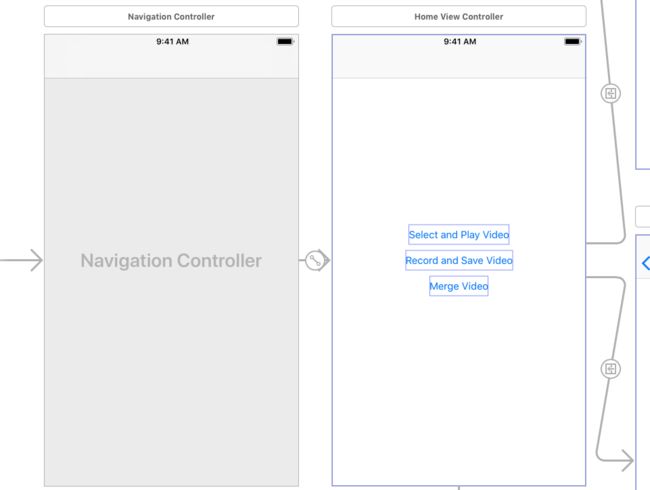

首先我们看一下工程组织结构。

下面就一起看一下源码。

1. HomeViewController.swift

```swift
import UIKit

class HomeViewController: UIViewController {
}
```

2. MergeVideoViewController.swift

```swift
import UIKit
import AVFoundation
import MobileCoreServices
import MediaPlayer
import Photos

class MergeVideoViewController: UIViewController {
  var firstAsset: AVAsset?
  var secondAsset: AVAsset?
  var audioAsset: AVAsset?
  // Tells the image-picker callback which slot (first or second video) is being filled.
  var loadingAssetOne = false

  @IBOutlet var activityMonitor: UIActivityIndicatorView!

  func savedPhotosAvailable() -> Bool {
    guard !UIImagePickerController.isSourceTypeAvailable(.savedPhotosAlbum) else { return true }

    let alert = UIAlertController(title: "Not Available", message: "No Saved Album found", preferredStyle: .alert)
    alert.addAction(UIAlertAction(title: "OK", style: UIAlertActionStyle.cancel, handler: nil))
    present(alert, animated: true, completion: nil)
    return false
  }

  func exportDidFinish(_ session: AVAssetExportSession) {
    // Cleanup assets
    activityMonitor.stopAnimating()
    firstAsset = nil
    secondAsset = nil
    audioAsset = nil

    guard session.status == AVAssetExportSessionStatus.completed,
      let outputURL = session.outputURL else { return }

    let saveVideoToPhotos = {
      PHPhotoLibrary.shared().performChanges({
        PHAssetChangeRequest.creationRequestForAssetFromVideo(atFileURL: outputURL)
      }) { saved, error in
        let success = saved && (error == nil)
        let title = success ? "Success" : "Error"
        let message = success ? "Video saved" : "Failed to save video"

        // Photos calls this handler on an arbitrary queue, so hop to the main queue for UI work.
        DispatchQueue.main.async {
          let alert = UIAlertController(title: title, message: message, preferredStyle: .alert)
          alert.addAction(UIAlertAction(title: "OK", style: UIAlertActionStyle.cancel, handler: nil))
          self.present(alert, animated: true, completion: nil)
        }
      }
    }

    // Ensure permission to access Photo Library
    if PHPhotoLibrary.authorizationStatus() != .authorized {
      PHPhotoLibrary.requestAuthorization { status in
        if status == .authorized {
          saveVideoToPhotos()
        }
      }
    } else {
      saveVideoToPhotos()
    }
  }

  @IBAction func loadAssetOne(_ sender: AnyObject) {
    if savedPhotosAvailable() {
      loadingAssetOne = true
      VideoHelper.startMediaBrowser(delegate: self, sourceType: .savedPhotosAlbum)
    }
  }

  @IBAction func loadAssetTwo(_ sender: AnyObject) {
    if savedPhotosAvailable() {
      loadingAssetOne = false
      VideoHelper.startMediaBrowser(delegate: self, sourceType: .savedPhotosAlbum)
    }
  }

  @IBAction func loadAudio(_ sender: AnyObject) {
    let mediaPickerController = MPMediaPickerController(mediaTypes: .any)
    mediaPickerController.delegate = self
    mediaPickerController.prompt = "Select Audio"
    present(mediaPickerController, animated: true, completion: nil)
  }

  @IBAction func merge(_ sender: AnyObject) {
    guard let firstAsset = firstAsset, let secondAsset = secondAsset else { return }

    activityMonitor.startAnimating()

    // 1 - Create the AVMutableComposition that will hold the AVMutableCompositionTrack instances.
    let mixComposition = AVMutableComposition()

    // 2 - Create two video tracks: the second clip is inserted right where the first one ends.
    guard let firstTrack = mixComposition.addMutableTrack(withMediaType: .video,
                                                          preferredTrackID: Int32(kCMPersistentTrackID_Invalid)) else { return }
    do {
      try firstTrack.insertTimeRange(CMTimeRangeMake(kCMTimeZero, firstAsset.duration),
                                     of: firstAsset.tracks(withMediaType: .video)[0],
                                     at: kCMTimeZero)
    } catch {
      print("Failed to load first track")
      return
    }

    guard let secondTrack = mixComposition.addMutableTrack(withMediaType: .video,
                                                           preferredTrackID: Int32(kCMPersistentTrackID_Invalid)) else { return }
    do {
      try secondTrack.insertTimeRange(CMTimeRangeMake(kCMTimeZero, secondAsset.duration),
                                      of: secondAsset.tracks(withMediaType: .video)[0],
                                      at: firstAsset.duration)
    } catch {
      print("Failed to load second track")
      return
    }

    // 2.1 - One instruction covering the full length of the merged video
    let mainInstruction = AVMutableVideoCompositionInstruction()
    mainInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, CMTimeAdd(firstAsset.duration, secondAsset.duration))

    // 2.2 - Per-track layer instructions; make the first track transparent once its clip ends
    let firstInstruction = VideoHelper.videoCompositionInstruction(firstTrack, asset: firstAsset)
    firstInstruction.setOpacity(0.0, at: firstAsset.duration)
    let secondInstruction = VideoHelper.videoCompositionInstruction(secondTrack, asset: secondAsset)

    // 2.3 - Attach the layer instructions (the first entry is the top layer) and build the video composition
    mainInstruction.layerInstructions = [firstInstruction, secondInstruction]
    let mainComposition = AVMutableVideoComposition()
    mainComposition.instructions = [mainInstruction]
    mainComposition.frameDuration = CMTimeMake(1, 30) // 30 fps
    mainComposition.renderSize = CGSize(width: UIScreen.main.bounds.width, height: UIScreen.main.bounds.height)

    // 3 - Optional background audio spanning both clips
    if let loadedAudioAsset = audioAsset {
      let audioTrack = mixComposition.addMutableTrack(withMediaType: .audio,
                                                      preferredTrackID: Int32(kCMPersistentTrackID_Invalid))
      do {
        try audioTrack?.insertTimeRange(CMTimeRangeMake(kCMTimeZero, CMTimeAdd(firstAsset.duration, secondAsset.duration)),
                                        of: loadedAudioAsset.tracks(withMediaType: .audio)[0],
                                        at: kCMTimeZero)
      } catch {
        print("Failed to load Audio track")
      }
    }

    // 4 - Get path
    guard let documentDirectory = FileManager.default.urls(for: .documentDirectory, in: .userDomainMask).first else { return }
    let dateFormatter = DateFormatter()
    dateFormatter.dateStyle = .long
    dateFormatter.timeStyle = .short
    let date = dateFormatter.string(from: Date())
    let url = documentDirectory.appendingPathComponent("mergeVideo-\(date).mov")

    // 5 - Create Exporter
    guard let exporter = AVAssetExportSession(asset: mixComposition, presetName: AVAssetExportPresetHighestQuality) else { return }
    exporter.outputURL = url
    exporter.outputFileType = AVFileType.mov
    exporter.shouldOptimizeForNetworkUse = true
    exporter.videoComposition = mainComposition

    // 6 - Perform the Export; the completion handler is not guaranteed to run on the main queue
    exporter.exportAsynchronously {
      DispatchQueue.main.async {
        self.exportDidFinish(exporter)
      }
    }
  }
}

extension MergeVideoViewController: UIImagePickerControllerDelegate {
  func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : Any]) {
    guard let mediaType = info[UIImagePickerControllerMediaType] as? String,
      mediaType == (kUTTypeMovie as String),
      let url = info[UIImagePickerControllerMediaURL] as? URL
      else {
        dismiss(animated: true, completion: nil)
        return
    }

    let avAsset = AVAsset(url: url)
    let message: String
    if loadingAssetOne {
      message = "Video one loaded"
      firstAsset = avAsset
    } else {
      message = "Video two loaded"
      secondAsset = avAsset
    }

    // Show the confirmation only after the picker has finished dismissing;
    // presenting during the dismissal animation is not reliable.
    dismiss(animated: true) {
      let alert = UIAlertController(title: "Asset Loaded", message: message, preferredStyle: .alert)
      alert.addAction(UIAlertAction(title: "OK", style: UIAlertActionStyle.cancel, handler: nil))
      self.present(alert, animated: true, completion: nil)
    }
  }
}

extension MergeVideoViewController: UINavigationControllerDelegate {
}

extension MergeVideoViewController: MPMediaPickerControllerDelegate {
  func mediaPicker(_ mediaPicker: MPMediaPickerController, didPickMediaItems mediaItemCollection: MPMediaItemCollection) {
    dismiss(animated: true) {
      guard let song = mediaItemCollection.items.first else { return }

      // The asset URL is nil for items that cannot be opened as an AVAsset
      let url = song.value(forProperty: MPMediaItemPropertyAssetURL) as? URL
      self.audioAsset = url.map { AVAsset(url: $0) }

      let title = (url == nil) ? "Asset Not Available" : "Asset Loaded"
      let message = (url == nil) ? "Audio Not Loaded" : "Audio Loaded"
      let alert = UIAlertController(title: title, message: message, preferredStyle: .alert)
      alert.addAction(UIAlertAction(title: "OK", style: .cancel, handler: nil))
      self.present(alert, animated: true, completion: nil)
    }
  }

  func mediaPickerDidCancel(_ mediaPicker: MPMediaPickerController) {
    dismiss(animated: true, completion: nil)
  }
}
```
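The timing logic in `merge(_:)` is easy to lose among the AVFoundation calls. The sketch below models it in plain Swift, using seconds as `Double` instead of `CMTime`; `TimeSpan`, `MergePlan` and `planMerge` are hypothetical names for illustration only, not AVFoundation API. One deliberate difference from the controller code: it assumes the audio range is clamped to the audio's own duration, so a short song is never asked for more audio than it contains.

```swift
import Foundation

// Hypothetical stand-in for CMTimeRange, measured in seconds.
struct TimeSpan: Equatable {
    let start: Double
    let duration: Double
    var end: Double { return start + duration }
}

// Hypothetical summary of the ranges that merge(_:) sets up.
struct MergePlan {
    let firstVideo: TimeSpan      // inserted at time zero
    let secondVideo: TimeSpan     // inserted at firstAsset.duration
    let instruction: TimeSpan     // mainInstruction.timeRange
    let hideFirstLayerAt: Double  // firstInstruction.setOpacity(0.0, at:)
    let audio: TimeSpan?          // background track, if one was picked
}

func planMerge(firstDuration: Double, secondDuration: Double, audioDuration: Double?) -> MergePlan {
    let first = TimeSpan(start: 0, duration: firstDuration)
    // The second clip starts exactly where the first one ends.
    let second = TimeSpan(start: first.end, duration: secondDuration)
    let total = TimeSpan(start: 0, duration: firstDuration + secondDuration)
    // Assumption: never request more audio than the source contains.
    let audio = audioDuration.map { TimeSpan(start: 0, duration: min($0, total.duration)) }
    return MergePlan(firstVideo: first, secondVideo: second, instruction: total,
                     hideFirstLayerAt: first.end, audio: audio)
}

let plan = planMerge(firstDuration: 5, secondDuration: 3, audioDuration: 20)
print(plan.secondVideo.start, plan.instruction.duration, plan.audio?.duration ?? 0)
// prints "5.0 8.0 8.0"
```

The opacity change lands at the same instant the second clip begins: `layerInstructions` are stacked with the first entry on top, so the first layer is made transparent once its clip is over and the second clip shows through.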

3. PlayVideoViewController.swift

```swift
import UIKit
import AVKit
import MobileCoreServices

class PlayVideoViewController: UIViewController {
  @IBAction func playVideo(_ sender: AnyObject) {
    VideoHelper.startMediaBrowser(delegate: self, sourceType: .savedPhotosAlbum)
  }
}

// MARK: - UIImagePickerControllerDelegate
extension PlayVideoViewController: UIImagePickerControllerDelegate {
  func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : Any]) {
    guard let mediaType = info[UIImagePickerControllerMediaType] as? String,
      mediaType == (kUTTypeMovie as String),
      let url = info[UIImagePickerControllerMediaURL] as? URL
      else { return }

    // Present the system player only once the picker has been dismissed
    dismiss(animated: true) {
      let player = AVPlayer(url: url)
      let vcPlayer = AVPlayerViewController()
      vcPlayer.player = player
      self.present(vcPlayer, animated: true, completion: nil)
    }
  }
}

// MARK: - UINavigationControllerDelegate
extension PlayVideoViewController: UINavigationControllerDelegate {
}
```

4. RecordVideoViewController.swift

```swift
import UIKit
import MobileCoreServices

class RecordVideoViewController: UIViewController {
  @IBAction func record(_ sender: AnyObject) {
    VideoHelper.startMediaBrowser(delegate: self, sourceType: .camera)
  }

  // Completion callback for UISaveVideoAtPathToSavedPhotosAlbum. It must match the Objective-C
  // selector video:didFinishSavingWithError:contextInfo:, whose contextInfo is a (possibly NULL) void *.
  @objc func video(_ videoPath: String, didFinishSavingWithError error: Error?, contextInfo info: UnsafeMutableRawPointer?) {
    let title = (error == nil) ? "Success" : "Error"
    let message = (error == nil) ? "Video was saved" : "Video failed to save"

    let alert = UIAlertController(title: title, message: message, preferredStyle: .alert)
    alert.addAction(UIAlertAction(title: "OK", style: UIAlertActionStyle.cancel, handler: nil))
    present(alert, animated: true, completion: nil)
  }
}

// MARK: - UIImagePickerControllerDelegate
extension RecordVideoViewController: UIImagePickerControllerDelegate {
  func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : Any]) {
    dismiss(animated: true, completion: nil)

    guard let mediaType = info[UIImagePickerControllerMediaType] as? String,
      mediaType == (kUTTypeMovie as String),
      let url = info[UIImagePickerControllerMediaURL] as? URL,
      UIVideoAtPathIsCompatibleWithSavedPhotosAlbum(url.path)
      else { return }

    // Handle a movie capture: save it to the Saved Photos album; the result is reported via the selector above
    UISaveVideoAtPathToSavedPhotosAlbum(url.path, self, #selector(video(_:didFinishSavingWithError:contextInfo:)), nil)
  }
}

// MARK: - UINavigationControllerDelegate
extension RecordVideoViewController: UINavigationControllerDelegate {
}
```

5. VideoHelper.swift

```swift
import UIKit
import MobileCoreServices
import AVFoundation

class VideoHelper {
  // Presents a UIImagePickerController restricted to movies for the given source type.
  static func startMediaBrowser(delegate: UIViewController & UINavigationControllerDelegate & UIImagePickerControllerDelegate, sourceType: UIImagePickerControllerSourceType) {
    guard UIImagePickerController.isSourceTypeAvailable(sourceType) else { return }

    let mediaUI = UIImagePickerController()
    mediaUI.sourceType = sourceType
    mediaUI.mediaTypes = [kUTTypeMovie as String]
    mediaUI.allowsEditing = true
    mediaUI.delegate = delegate
    delegate.present(mediaUI, animated: true, completion: nil)
  }

  // Derives a track's orientation from the rotation encoded in its preferredTransform.
  static func orientationFromTransform(_ transform: CGAffineTransform) -> (orientation: UIImageOrientation, isPortrait: Bool) {
    var assetOrientation = UIImageOrientation.up
    var isPortrait = false
    if transform.a == 0 && transform.b == 1.0 && transform.c == -1.0 && transform.d == 0 {
      assetOrientation = .right
      isPortrait = true
    } else if transform.a == 0 && transform.b == -1.0 && transform.c == 1.0 && transform.d == 0 {
      assetOrientation = .left
      isPortrait = true
    } else if transform.a == 1.0 && transform.b == 0 && transform.c == 0 && transform.d == 1.0 {
      assetOrientation = .up
    } else if transform.a == -1.0 && transform.b == 0 && transform.c == 0 && transform.d == -1.0 {
      assetOrientation = .down
    }
    return (assetOrientation, isPortrait)
  }

  // Builds a layer instruction that rotates the track upright and scales it to the screen width.
  static func videoCompositionInstruction(_ track: AVCompositionTrack, asset: AVAsset) -> AVMutableVideoCompositionLayerInstruction {
    let instruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track)
    let assetTrack = asset.tracks(withMediaType: AVMediaType.video)[0]

    let transform = assetTrack.preferredTransform
    let assetInfo = orientationFromTransform(transform)

    var scaleToFitRatio = UIScreen.main.bounds.width / assetTrack.naturalSize.width
    if assetInfo.isPortrait {
      // A portrait track is stored landscape and rotated, so its displayed width is naturalSize.height
      scaleToFitRatio = UIScreen.main.bounds.width / assetTrack.naturalSize.height
      let scaleFactor = CGAffineTransform(scaleX: scaleToFitRatio, y: scaleToFitRatio)
      instruction.setTransform(assetTrack.preferredTransform.concatenating(scaleFactor), at: kCMTimeZero)
    } else {
      let scaleFactor = CGAffineTransform(scaleX: scaleToFitRatio, y: scaleToFitRatio)
      var concat = assetTrack.preferredTransform.concatenating(scaleFactor)
        .concatenating(CGAffineTransform(translationX: 0, y: UIScreen.main.bounds.width / 2))
      if assetInfo.orientation == .down {
        // Upside-down landscape: rotate 180° and shift back into the visible area
        let fixUpsideDown = CGAffineTransform(rotationAngle: CGFloat(Double.pi))
        let windowBounds = UIScreen.main.bounds
        let yFix = assetTrack.naturalSize.height + windowBounds.height
        let centerFix = CGAffineTransform(translationX: assetTrack.naturalSize.width, y: yFix)
        concat = fixUpsideDown.concatenating(centerFix).concatenating(scaleFactor)
      }
      instruction.setTransform(concat, at: kCMTimeZero)
    }

    return instruction
  }
}
```
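The decision table in `orientationFromTransform(_:)` and the `scaleToFitRatio` arithmetic can be checked without a device. The sketch below restates them in plain Swift; `TrackOrientation`, `Rotation`, `orientation(of:)` and `scaleToFit` are hypothetical names standing in for `UIImageOrientation`, the `a`/`b`/`c`/`d` fields of `CGAffineTransform`, and the helper's inline math. It assumes the transform is a pure rotation by a multiple of 90°, which is the only case the helper recognizes.

```swift
import Foundation

// Hypothetical stand-in for the four UIImageOrientation cases VideoHelper uses.
enum TrackOrientation { case up, down, left, right }

// Hypothetical stand-in for the a, b, c, d fields of CGAffineTransform.
// A rotation by angle t has a = cos t, b = sin t, c = -sin t, d = cos t.
struct Rotation { let a, b, c, d: Double }

// Same decision table as orientationFromTransform(_:).
func orientation(of t: Rotation) -> (orientation: TrackOrientation, isPortrait: Bool) {
    switch (t.a, t.b, t.c, t.d) {
    case (0, 1, -1, 0):  return (.right, true)   // rotated 90°: portrait
    case (0, -1, 1, 0):  return (.left, true)    // rotated -90°: portrait
    case (-1, 0, 0, -1): return (.down, false)   // rotated 180°: upside-down landscape
    default:             return (.up, false)     // identity or unrecognized transform
    }
}

// Same idea as scaleToFitRatio: a portrait track is stored landscape and rotated,
// so its on-screen width is its natural height rather than its natural width.
func scaleToFit(targetWidth: Double, naturalWidth: Double, naturalHeight: Double, isPortrait: Bool) -> Double {
    return targetWidth / (isPortrait ? naturalHeight : naturalWidth)
}

let portrait = orientation(of: Rotation(a: 0, b: 1, c: -1, d: 0))
print(portrait.orientation, portrait.isPortrait)
print(scaleToFit(targetWidth: 375, naturalWidth: 1920, naturalHeight: 1080, isPortrait: false))
```

Matching the exact coefficients works here because rotations of 0°, 90°, 180° and 270° produce exact 0 and ±1 values; a transform with any other angle, or with scaling baked in, falls through to `.up`.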

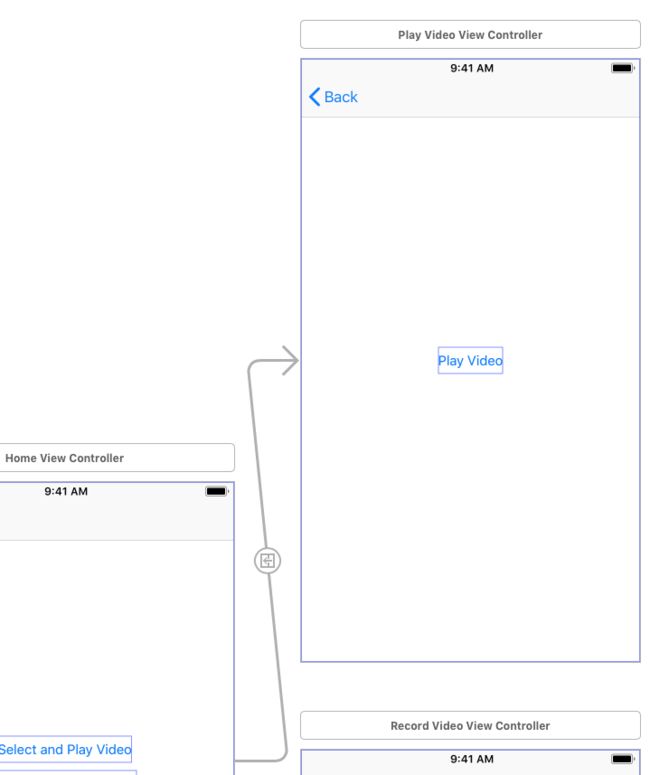

下面看一下sb文件中的内容。

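Results

1. Select and Play Video

The result is shown below:

2. Record and Save Video

The result is shown below:

I recorded twice here. On the first recording, the app requests several permissions, including microphone access; since this was not the first recording, no permission prompts appear.

3. Merge Video

The result is shown below:

As you can see, the two videos and the background music have been merged into a single video.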

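Afterword

This article presented the source code and results of a simple example that plays, records, and merges videos. If you found it useful, please give it a like or follow.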