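When building something like WeChat's short-video feature, the system picker (UIImagePickerController) alone won't do. It is a navigation controller, whereas we need a plain view that can sit behind a table view.

So we need to build a custom camera view.

The article below is excerpted from http://course.gdou.com/blog/Blog.pzs/archive/2011/12/14/10882.html

You should find it very helpful once you've read it through.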

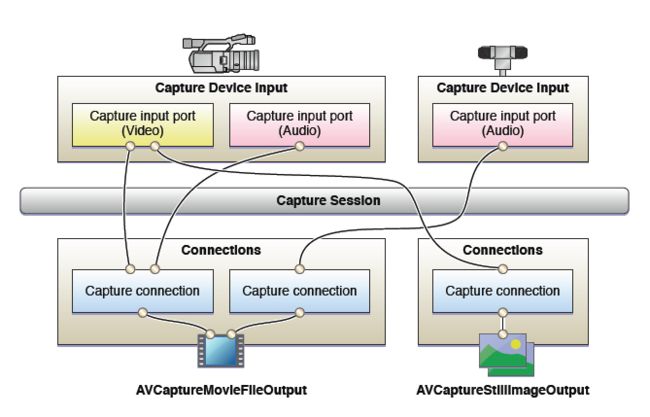

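During video capture there are input devices and output devices, and the program uses an AVCaptureSession instance to coordinate and organize the flow of data between them.

At a minimum, the program needs: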

● An instance of AVCaptureDevice to represent the input device, such as a camera or microphone

● An instance of a concrete subclass of AVCaptureInput to configure the ports from the input device

● An instance of a concrete subclass of AVCaptureOutput to manage the output to a movie file or still image

● An instance of AVCaptureSession to coordinate the data flow from the input to the output

As the figure above shows, a single AVCaptureSession can coordinate multiple inputs and outputs. Inputs and outputs are added to the session with its addInput: and addOutput: methods.
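A minimal sketch of that wiring, written for ARC (unlike the manual retain/release code later in this article). `MakeMovieCaptureSession` is a hypothetical helper, not part of AVFoundation. It checks `canAddInput:` and `canAddOutput:` before adding, because the session only accepts inputs and outputs for which those checks return YES.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: builds a session with the default camera as input and a
// movie-file output. Returns nil (and fills *error) if there is no camera or the
// session rejects the input or output.
static AVCaptureSession *MakeMovieCaptureSession(NSError **error) {
    AVCaptureDevice *camera = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    if (camera == nil) {
        if (error) {
            *error = [NSError errorWithDomain:@"CaptureSketch" code:1
                                     userInfo:@{NSLocalizedDescriptionKey: @"No video device"}];
        }
        return nil;
    }
    AVCaptureDeviceInput *input = [AVCaptureDeviceInput deviceInputWithDevice:camera error:error];
    if (input == nil) {
        return nil; // AVFoundation filled in *error
    }
    AVCaptureMovieFileOutput *output = [[AVCaptureMovieFileOutput alloc] init];
    AVCaptureSession *session = [[AVCaptureSession alloc] init];
    // Only add what the session reports it can accept.
    if (![session canAddInput:input] || ![session canAddOutput:output]) {
        if (error) {
            *error = [NSError errorWithDomain:@"CaptureSketch" code:2
                                     userInfo:@{NSLocalizedDescriptionKey: @"Session rejected input or output"}];
        }
        return nil;
    }
    [session addInput:input];
    [session addOutput:output];
    return session;
}
```

Passing an NSError pointer instead of `nil` (as the snippets below do) makes a missing camera or a denied permission visible instead of silently producing a session with no input.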

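The link between a capture input and a capture output is represented by an AVCaptureConnection object. A capture input (AVCaptureInput) has one or more input ports (AVCaptureInputPort instances), and a capture output (an AVCaptureOutput instance) can accept data from one or more input sources.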

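When an input or an output is added to an AVCaptureSession, the session "greedily" forms connections (AVCaptureConnection) between all compatible input ports and outputs, so you generally don't need to connect inputs and outputs by hand.

Creating an input device: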

AVCaptureDeviceInput *captureInput = [AVCaptureDeviceInput deviceInputWithDevice:[AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo] error:nil];

Media types include:

AVMediaTypeVideo

AVMediaTypeAudio

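Output types include:

AVCaptureMovieFileOutput: writes output to a movie file

AVCaptureVideoDataOutput: lets you process captured video frames

AVCaptureAudioDataOutput: lets you process captured audio data

AVCaptureStillImageOutput: captures still images along with their metadata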

Creating an output object:

AVCaptureMovieFileOutput *captureOutput = [[AVCaptureMovieFileOutput alloc] init];

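I. Capturing to a video file

Here the output object is an AVCaptureMovieFileOutput. Two methods of its superclass, AVCaptureFileOutput, start and stop writing the encoded output:

- (void)startRecordingToOutputFileURL:(NSURL *)outputFileURL recordingDelegate:(id<AVCaptureFileOutputRecordingDelegate>)delegate

- (void)stopRecording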
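A hedged sketch of how the two methods are typically paired, assuming ARC. `ToggleRecording` is a hypothetical helper. It looks for an active video connection first, since asking an output with no active connection to start recording raises an exception.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: stops the recording if one is in progress, otherwise starts one.
// Returns NO if recording could not start because there is no usable video connection.
static BOOL ToggleRecording(AVCaptureMovieFileOutput *output,
                            NSURL *fileURL,
                            id<AVCaptureFileOutputRecordingDelegate> delegate) {
    if ([output isRecording]) {
        // The delegate's didFinishRecording... callback arrives later, once the file is closed.
        [output stopRecording];
        return YES;
    }
    AVCaptureConnection *video = [output connectionWithMediaType:AVMediaTypeVideo];
    if (video == nil || ![video isActive]) {
        return NO; // output not attached to a configured, running session
    }
    [output startRecordingToOutputFileURL:fileURL recordingDelegate:delegate];
    return YES;
}
```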

To start writing output, first start the AVCaptureSession, then call the method above to begin recording. The full sequence is:

Create the inputs, the output, and the AVCaptureSession object:

AVCaptureDeviceInput *captureInput = [AVCaptureDeviceInput deviceInputWithDevice:[AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo] error:nil];

AVCaptureDeviceInput *microphone = [AVCaptureDeviceInput deviceInputWithDevice:[AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio] error:nil];

/* We set up the output */

captureOutput = [[AVCaptureMovieFileOutput alloc] init];

self.captureSession = [[AVCaptureSession alloc] init];

Add the inputs and the output:

[self.captureSession addInput:captureInput];

[self.captureSession addInput:microphone];

[self.captureSession addOutput:self.captureOutput];

Set the session properties:

/* We use medium quality: on the iPhone 4 this demo would lag too much, because converting to UIImage and CGImage demands too many resources at 720p resolution. */

[self.captureSession setSessionPreset:AVCaptureSessionPresetMedium];
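Not every preset is available on every device, so a cautious variant checks before applying one. A sketch assuming ARC; `SetPresetIfSupported` is a hypothetical helper built on `canSetSessionPreset:`, with `beginConfiguration`/`commitConfiguration` so the change is applied as one batch even if the session is already running.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: applies `preset` only if the session supports it with its
// current inputs and outputs. Returns whether the preset was applied.
static BOOL SetPresetIfSupported(AVCaptureSession *session, NSString *preset) {
    if (![session canSetSessionPreset:preset]) {
        return NO;
    }
    [session beginConfiguration];   // group changes on a possibly running session
    session.sessionPreset = preset;
    [session commitConfiguration];  // changes take effect together here
    return YES;
}
```

For example, a caller could try `AVCaptureSessionPreset1280x720` first and fall back to `AVCaptureSessionPresetMedium` when the first call returns NO.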

The other available presets are:

How they vary by device:

Start encoding; video is encoded as H.264 and audio as AAC:

[self performSelector:@selector(startRecording) withObject:nil afterDelay:10.0];

[self.captureSession startRunning];

- (void)startRecording

{

[captureOutput startRecordingToOutputFileURL:[self tempFileURL] recordingDelegate:self];

}
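The `-tempFileURL` method called above is not shown in the article. One possible implementation, written as a plain C function with the hypothetical name `MakeTempMovieURL` so it stands alone; inside the controller it would be the body of `-tempFileURL`. It uses a fresh UUID as the file name because recording fails if a file already exists at the target URL.

```objc
#import <Foundation/Foundation.h>

// Hypothetical stand-in for -tempFileURL: a unique .mov file URL in the app's
// temporary directory. A new UUID per call keeps recordings from colliding
// with files left behind by earlier runs.
static NSURL *MakeTempMovieURL(void) {
    NSString *fileName = [[[NSUUID UUID] UUIDString] stringByAppendingPathExtension:@"mov"];
    NSString *path = [NSTemporaryDirectory() stringByAppendingPathComponent:fileName];
    return [NSURL fileURLWithPath:path];
}
```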

The class that handles recording events must conform to the AVCaptureFileOutputRecordingDelegate protocol and handle them in these two methods:

- (void)captureOutput:(AVCaptureFileOutput *)captureOutput didStartRecordingToOutputFileAtURL:(NSURL *)fileURL fromConnections:(NSArray *)connections

{

NSLog(@"start record video");

}

- (void)captureOutput:(AVCaptureFileOutput *)captureOutput didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL fromConnections:(NSArray *)connections error:(NSError *)error

{

ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];

// Copy the video from the temporary folder into the Saved Photos album so it can be accessed

[library writeVideoAtPathToSavedPhotosAlbum:outputFileURL

completionBlock:^(NSURL *assetURL, NSError *writeError) {

if (writeError) {

_myLabel.text = @"Error";

}

else

_myLabel.text = [assetURL path];

}];

[library release];

}
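A non-nil `error` in the finish callback does not always mean the file is unusable: recording can stop because of a condition such as reaching a maximum duration and still leave a valid movie. A sketch of the check, assuming ARC; `RecordingSucceeded` is a hypothetical helper that reads AVFoundation's `AVErrorRecordingSuccessfullyFinishedKey` from the error's userInfo.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper for -captureOutput:didFinishRecordingToOutputFileAtURL:...:
// returns YES when the movie file can be used, even if `error` is non-nil.
static BOOL RecordingSucceeded(NSError *error) {
    if (error == nil) {
        return YES;
    }
    // AVFoundation sets this key to @YES when the file was finalized despite the error.
    NSNumber *finished = error.userInfo[AVErrorRecordingSuccessfullyFinishedKey];
    return [finished boolValue]; // messaging nil yields NO
}
```

In the delegate method above, the ALAssetsLibrary call could be wrapped in `if (RecordingSucceeded(error)) { ... }`.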

Stop writing with AVCaptureFileOutput's stopRecording method.

II. Capturing video frames for processing
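In outline, an AVCaptureVideoDataOutput hands each captured frame, as a CMSampleBuffer, to a delegate conforming to AVCaptureVideoDataOutputSampleBufferDelegate on a dispatch queue you supply. A hedged sketch assuming ARC; `FrameCounter` and `AddFrameOutput` are hypothetical names, and the handler only counts frames where real code would process the pixels.

```objc
#import <AVFoundation/AVFoundation.h>
#import <CoreMedia/CoreMedia.h>

// Hypothetical frame handler: receives every captured frame on the delegate queue.
@interface FrameCounter : NSObject <AVCaptureVideoDataOutputSampleBufferDelegate>
@property (atomic) NSUInteger frameCount;
@end

@implementation FrameCounter
- (void)captureOutput:(AVCaptureOutput *)output
didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer
       fromConnection:(AVCaptureConnection *)connection {
    CVImageBufferRef pixels = CMSampleBufferGetImageBuffer(sampleBuffer);
    if (pixels != NULL) {
        // Real processing (e.g. reading the pixel buffer) would go here.
        self.frameCount += 1; // only touched from the serial delegate queue
    }
}
@end

// Hypothetical helper: attaches a video data output that delivers frames to `handler`.
static AVCaptureVideoDataOutput *AddFrameOutput(AVCaptureSession *session, FrameCounter *handler) {
    AVCaptureVideoDataOutput *output = [[AVCaptureVideoDataOutput alloc] init];
    output.alwaysDiscardsLateVideoFrames = YES; // drop late frames instead of queueing them
    dispatch_queue_t queue = dispatch_queue_create("video.frames", DISPATCH_QUEUE_SERIAL);
    [output setSampleBufferDelegate:handler queue:queue];
    if (![session canAddOutput:output]) {
        return nil;
    }
    [session addOutput:output];
    return output;
}
```

Using a serial queue keeps frames in order; discarding late frames keeps slow per-frame work from building up a backlog.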

III. Capturing still images

Here the output object is an AVCaptureStillImageOutput, and the session's preset determines the image resolution:

Image formats:

Example:

AVCaptureStillImageOutput *stillImageOutput = [[AVCaptureStillImageOutput alloc] init];

NSDictionary *outputSettings = [[NSDictionary alloc] initWithObjectsAndKeys: AVVideoCodecJPEG, AVVideoCodecKey, nil];

[stillImageOutput setOutputSettings:outputSettings];
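The same configuration can be written with an Objective-C dictionary literal, adding a `canAddOutput:` check before the output joins the session. A sketch assuming ARC; `AddJPEGStillOutput` is a hypothetical helper.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: adds a still-image output that produces JPEG data.
// Returns nil if the session will not accept the output.
static AVCaptureStillImageOutput *AddJPEGStillOutput(AVCaptureSession *session) {
    AVCaptureStillImageOutput *output = [[AVCaptureStillImageOutput alloc] init];
    output.outputSettings = @{AVVideoCodecKey: AVVideoCodecJPEG};
    if (![session canAddOutput:output]) {
        return nil;
    }
    [session addOutput:output];
    return output;
}
```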

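To capture a still image, send the output object a captureStillImageAsynchronouslyFromConnection:completionHandler: message. The first argument is the connection (AVCaptureConnection) to capture from; you must find the connection whose input port carries video: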

AVCaptureConnection *videoConnection = nil;

for (AVCaptureConnection *connection in stillImageOutput.connections) {

for (AVCaptureInputPort *port in [connection inputPorts]) {

if ([[port mediaType] isEqual:AVMediaTypeVideo] ) {

videoConnection = connection;

break;

}

}

if (videoConnection) { break; }

}
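The nested loop can be wrapped in a reusable function, where returning from inside the inner loop replaces the two `break` statements. A sketch assuming ARC; `FindConnection` is a hypothetical name. AVCaptureOutput also provides `connectionWithMediaType:`, which returns the first connection with an input port of the given media type.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns the first connection of `output` that has an
// input port of `mediaType`, or nil if there is none.
static AVCaptureConnection *FindConnection(AVCaptureOutput *output, NSString *mediaType) {
    for (AVCaptureConnection *connection in output.connections) {
        for (AVCaptureInputPort *port in connection.inputPorts) {
            if ([port.mediaType isEqualToString:mediaType]) {
                return connection; // leaves both loops at once
            }
        }
    }
    return nil;
}
```

Usage: `AVCaptureConnection *videoConnection = FindConnection(stillImageOutput, AVMediaTypeVideo);`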

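The second argument is a block that takes two parameters. The first is a CMSampleBuffer containing the image data, which you can use to process the image; the second is an NSError: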

[[self stillImageOutput] captureStillImageAsynchronouslyFromConnection:videoConnection

completionHandler:^(CMSampleBufferRef imageDataSampleBuffer, NSError *error) {

ALAssetsLibraryWriteImageCompletionBlock completionBlock = ^(NSURL *assetURL, NSError *error) {

if (error) {

// error handling

}

};

if (imageDataSampleBuffer != NULL) {

NSData *imageData = [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:imageDataSampleBuffer];

ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];

UIImage *image = [[UIImage alloc] initWithData:imageData];

// Save the image to the Photos album

[library writeImageToSavedPhotosAlbum:[image CGImage] orientation:(ALAssetOrientation)[image imageOrientation]

completionBlock:completionBlock];

[image release];

[library release];

}

else

completionBlock(nil, error);

if ([[self delegate] respondsToSelector:@selector(captureManagerStillImageCaptured:)]) {

[[self delegate] captureManagerStillImageCaptured:self];

}

}];