Install and deploy the GUI add-on:

Prepare the image:

    [root@hdss7-200 ~]# docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3
    v1.8.3: Pulling from k8scn/kubernetes-dashboard-amd64
    a4026007c47e: Pull complete
    Digest: sha256:ebc993303f8a42c301592639770bd1944d80c88be8036e2d4d0aa116148264ff
    Status: Downloaded newer image for k8scn/kubernetes-dashboard-amd64:v1.8.3
    docker.io/k8scn/kubernetes-dashboard-amd64:v1.8.3
    [root@hdss7-200 ~]# docker images |grep dash
    [root@hdss7-200 ~]# docker push harbor.od.com/public/dashboard:v1.8.3
    The push refers to repository [harbor.od.com/public/dashboard]
    23ddb8cbb75a: Mounted from public/dashboard.od.com
    v1.8.3: digest: sha256:ebc993303f8a42c301592639770bd1944d80c88be8036e2d4d0aa116148264ff size: 529
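The transcript goes from `docker pull` straight to `docker push`, so the step that tags the pulled image under the Harbor name is presumably not shown. Below is a minimal sketch of the whole pull, tag, push sequence, assuming the tag was created from the upstream image name. The `mirror_image` helper and the `DOCKER` override variable are hypothetical names used only for illustration.

```shell
# Assumption: the docker CLI is on PATH; DOCKER can be overridden for dry runs.
DOCKER="${DOCKER:-docker}"

# mirror_image SRC DST: pull SRC, tag it as DST, then push DST.
mirror_image() {
  src="$1"
  dst="$2"
  "$DOCKER" pull "$src" &&
  "$DOCKER" tag "$src" "$dst" &&
  "$DOCKER" push "$dst"
}

# Usage with the image names from this section:
# mirror_image k8scn/kubernetes-dashboard-amd64:v1.8.3 harbor.od.com/public/dashboard:v1.8.3
```

Tagging by image name rather than by image ID avoids having to look the ID up with `docker images` first.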

Prepare the resource manifests:

mkdir -p /data/k8s-yaml/dashboard

    # cat rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        addonmanager.kubernetes.io/mode: Reconcile
      name: kubernetes-dashboard-admin
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard-admin
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard-admin
      namespace: kube-system

    # cat dp.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          containers:
          - name: kubernetes-dashboard
            image: harbor.od.com/public/dashboard:v1.8.3
            resources:
              limits:
                cpu: 100m
                memory: 300Mi
              requests:
                cpu: 50m
                memory: 100Mi
            ports:
            - containerPort: 8443
              protocol: TCP
            args:
              # PLATFORM-SPECIFIC ARGS HERE
              - --auto-generate-certificates
            volumeMounts:
            - name: tmp-volume
              mountPath: /tmp
            livenessProbe:
              httpGet:
                scheme: HTTPS
                path: /
                port: 8443
              initialDelaySeconds: 30
              timeoutSeconds: 30
          volumes:
          - name: tmp-volume
            emptyDir: {}
          serviceAccountName: kubernetes-dashboard-admin
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"

    # cat svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        k8s-app: kubernetes-dashboard
      ports:
      - port: 443
        targetPort: 8443

    # cat ingress.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      annotations:
        kubernetes.io/ingress.class: traefik
    spec:
      rules:
      - host: dashboard.od.com
        http:
          paths:
          - backend:
              serviceName: kubernetes-dashboard
              servicePort: 443
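The `extensions/v1beta1` Ingress API used here works on the cluster version shown in this walkthrough, but it was removed in Kubernetes 1.22. For readers on a newer cluster, the following is a sketch of what the same rule might look like under `networking.k8s.io/v1`. The `ingress_v1_manifest` function name is hypothetical, the `path: /` and `pathType: Prefix` values are assumptions that the original manifest did not need to state, and whether the Traefik version in use honors this form is not verified here.

```shell
# Print a networking.k8s.io/v1 version of the dashboard Ingress to stdout.
ingress_v1_manifest() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.od.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: kubernetes-dashboard
            port:
              number: 443
EOF
}

# Usage (not run here):
# ingress_v1_manifest | kubectl apply -f -
```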

Apply the resource manifests:

    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/rbac.yaml
    serviceaccount/kubernetes-dashboard-admin created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dp.yaml
    deployment.apps/kubernetes-dashboard created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/svc.yaml
    service/kubernetes-dashboard created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/ingress.yaml
    ingress.extensions/kubernetes-dashboard created
    [root@hdss7-22 ~]# kubectl get pod -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE
    coredns-6b6c4f9648-j7cv9                1/1     Running   0          5h8m
    kubernetes-dashboard-76dcdb4677-vhf5p   1/1     Running   0          8m24s
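Instead of polling `kubectl get pod` by hand, the rollout can be waited on directly. This is a small sketch using `kubectl rollout status`; the `wait_for_dashboard` helper, the `KUBECTL` override and the 120-second default timeout are assumptions, not part of the original procedure.

```shell
# Assumption: kubectl is on PATH and already points at this cluster.
KUBECTL="${KUBECTL:-kubectl}"

# wait_for_dashboard [TIMEOUT]: block until the Deployment has rolled out,
# or fail once TIMEOUT (default 120s) has elapsed.
wait_for_dashboard() {
  "$KUBECTL" -n kube-system rollout status deployment/kubernetes-dashboard \
    --timeout="${1:-120s}"
}

# Usage:
# wait_for_dashboard 300s
```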

Configure name resolution for the domain on our self-hosted DNS server:

    [root@hdss7-11 named]# cat od.com.zone
    $ORIGIN od.com.
    $TTL 600    ; 10 minutes
    @           IN SOA  dns.od.com. dnsadmin.od.com. (
                        2019111005 ; serial
                        10800      ; refresh (3 hours)
                        900        ; retry (15 minutes)
                        604800     ; expire (1 week)
                        86400      ; minimum (1 day)
                        )
                        NS   dns.od.com.
    $TTL 60     ; 1 minute
    dns         A    10.4.7.11
    harbor      A    10.4.7.200
    k8s-yaml    A    10.4.7.200
    traefik     A    10.4.7.11
    dashboard   A    10.4.7.11
    [root@hdss7-11 named]# systemctl restart named
    [root@hdss7-11 named]# dig -t A dashboard.od.com +short
    10.4.7.11
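When a record is added to a BIND zone file, the SOA serial should be incremented so that secondaries and caches notice the change. The sketch below shows that step plus a syntax check before restarting `named`. The helper names are hypothetical, and the zone file path in the usage comment is an assumption based on the `named` directory shown in the prompt.

```shell
# next_serial SERIAL: print the serial incremented by one.
next_serial() {
  echo $(( $1 + 1 ))
}

# check_zone ZONE FILE: validate a zone file with BIND's named-checkzone.
check_zone() {
  named-checkzone "$1" "$2"
}

# Usage (path is an assumption):
# next_serial 2019111005
# check_zone od.com /var/named/od.com.zone && systemctl restart named
```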

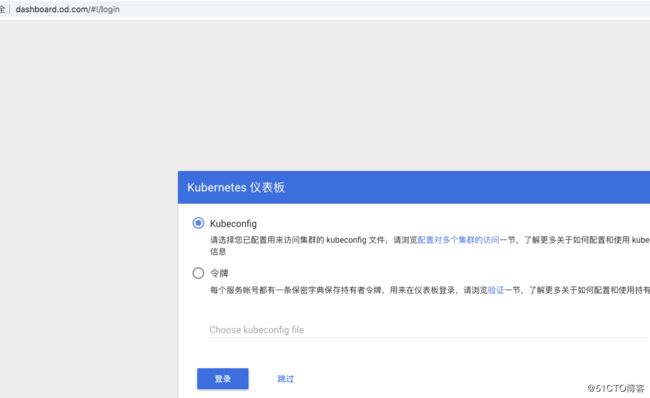

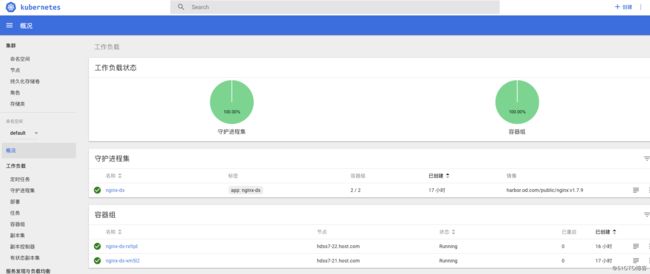

Open it in a browser:

For now, click Skip:

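Since version 1.6, Kubernetes has used role-based access control (RBAC) by default. Compared with other authorization mechanisms such as ABAC (attribute-based access control) and Webhook, RBAC covers permissions for every resource in the cluster and lets permissions be adjusted dynamically, without restarting the apiserver.

You can use kubectl to list the cluster-wide roles (ClusterRoles) defined in the cluster: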

    [root@hdss7-22 ~]# kubectl get clusterrole
    NAME                                                                   AGE
    admin                                                                  2d14h
    cluster-admin                                                          2d14h
    edit                                                                   2d14h
    system:aggregate-to-admin                                              2d14h
    system:aggregate-to-edit                                               2d14h
    system:aggregate-to-view                                               2d14h
    system:auth-delegator                                                  2d14h
    system:basic-user                                                      2d14h
    system:certificates.k8s.io:certificatesigningrequests:nodeclient       2d14h
    system:certificates.k8s.io:certificatesigningrequests:selfnodeclient   2d14h
    system:controller:attachdetach-controller                              2d14h
    system:controller:certificate-controller                               2d14h
    system:controller:clusterrole-aggregation-controller                   2d14h
    system:controller:cronjob-controller                                   2d14h
    system:controller:daemon-set-controller                                2d14h
    system:controller:deployment-controller                                2d14h
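The `rbac.yaml` manifest in this section binds the `kubernetes-dashboard-admin` service account to `cluster-admin`. One way to see what that binding actually grants is `kubectl auth can-i` with impersonation. This is a sketch only; the `sa_can_i` helper and the `KUBECTL` override are hypothetical, and it assumes the calling user is allowed to impersonate service accounts.

```shell
# Assumption: kubectl is on PATH and the caller may impersonate service accounts.
KUBECTL="${KUBECTL:-kubectl}"

# sa_can_i VERB RESOURCE: ask the apiserver whether the dashboard service
# account may perform VERB on RESOURCE (kubectl prints "yes" or "no").
sa_can_i() {
  "$KUBECTL" auth can-i "$1" "$2" \
    --as=system:serviceaccount:kube-system:kubernetes-dashboard-admin
}

# Usage:
# sa_can_i list pods
# sa_can_i delete nodes
```

Because the account is bound to `cluster-admin`, both checks would be expected to answer yes, which is a reminder of how powerful the login token for this account is.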

We use openssl to issue an HTTPS certificate for the dashboard domain, signed by our own CA, so that the dashboard can be served over HTTPS:

    [root@hdss7-200 certs]# (umask 077; openssl genrsa -out dashboard.od.com.key 2048)
    Generating RSA private key, 2048 bit long modulus
    ................................................+++
    ..........................................+++
    e is 65537 (0x10001)
    [root@hdss7-200 certs]# ll
    total 104
    -rw-r--r-- 1 root root 1257 Mar 26 22:54 apiserver.csr
    -rw-r--r-- 1 root root 587 Mar 26 22:52 apiserver-csr.json
    -rw------- 1 root root 1679 Mar 26 22:54 apiserver-key.pem
    -rw-r--r-- 1 root root 1602 Mar 26 22:54 apiserver.pem
    -rw-r--r-- 1 root root 836 Mar 25 21:37 ca-config.json
    -rw-r--r-- 1 root root 993 Mar 25 21:19 ca.csr
    -rw-r--r-- 1 root root 326 Mar 25 21:18 ca-csr.json
    -rw------- 1 root root 1675 Mar 25 21:19 ca-key.pem
    -rw-r--r-- 1 root root 1338 Mar 25 21:19 ca.pem
    -rw-r--r-- 1 root root 993 Mar 25 23:12 client.csr
    -rw-r--r-- 1 root root 280 Mar 25 23:07 client-csr.json
    -rw------- 1 root root 1679 Mar 25 23:12 client-key.pem
    -rw-r--r-- 1 root root 1363 Mar 25 23:12 client.pem
    -rw------- 1 root root 1675 Mar 28 14:39 dashboard.od.com.key
    -rw-r--r-- 1 root root 1062 Mar 25 21:41 etcd-peer.csr
    -rw-r--r-- 1 root root 363 Mar 25 21:38 etcd-peer-csr.json
    -rw------- 1 root root 1679 Mar 25 21:41 etcd-peer-key.pem
    -rw-r--r-- 1 root root 1424 Mar 25 21:41 etcd-peer.pem
    -rw-r--r-- 1 root root 1123 Mar 26 22:55 kubelet.csr
    -rw-r--r-- 1 root root 469 Mar 26 22:52 kubelet-csr.json
    -rw------- 1 root root 1675 Mar 26 22:55 kubelet-key.pem
    -rw-r--r-- 1 root root 1472 Mar 26 22:55 kubelet.pem
    -rw-r--r-- 1 root root 1005 Mar 26 22:36 kube-proxy-client.csr
    -rw------- 1 root root 1679 Mar 26 22:36 kube-proxy-client-key.pem
    -rw-r--r-- 1 root root 1375 Mar 26 22:36 kube-proxy-client.pem
    -rw-r--r-- 1 root root 267 Mar 26 22:33 kube-proxy-csr.json
    [root@hdss7-200 certs]# openssl req -new -key dashboard.od.com.key -out dashboard.od.com.csr -subj "/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=DayaZZ/OU=ops"
    [root@hdss7-200 certs]# openssl x509 -req -in dashboard.od.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.od.com.crt -days 3650
    Signature ok
    subject=/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=DayaZZ/OU=ops
    Getting CA Private Key
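Before copying the certificate anywhere, it may be worth confirming that it chains to the CA and carries the expected subject and validity period. The sketch below uses `openssl verify` and `openssl x509`; the `check_cert` helper and the `OPENSSL` override are hypothetical names, and the file names in the usage comment are the ones produced in the transcript.

```shell
# Assumption: the openssl CLI is on PATH.
OPENSSL="${OPENSSL:-openssl}"

# check_cert CA_FILE CERT_FILE: verify CERT_FILE against CA_FILE, then
# print its subject, issuer and notBefore/notAfter dates.
check_cert() {
  ca="$1"
  crt="$2"
  "$OPENSSL" verify -CAfile "$ca" "$crt" &&
  "$OPENSSL" x509 -in "$crt" -noout -subject -issuer -dates
}

# Usage, from the certs directory:
# check_cert ca.pem dashboard.od.com.crt
```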

Copy the HTTPS certificate and key to host 11 (hdss7-11):

    [root@hdss7-11 nginx]# mkdir -p /etc/nginx/certs
    [root@hdss7-11 certs]# ll
    total 8
    -rw-r--r-- 1 root root 1188 Mar 28 14:42 dashboard.od.com.crt
    -rw------- 1 root root 1675 Mar 28 14:43 dashboard.od.com.key
    [root@hdss7-11 certs]# pwd
    /etc/nginx/certs

    [root@hdss7-11 conf.d]# cat dashboard.od.com.conf
    server {
        listen       80;
        server_name  dashboard.od.com 116.85.5.4;
        rewrite ^(.*)$ https://${server_name}$1 permanent;
    }
    server {
        listen       443 ssl;
        server_name  dashboard.od.com 116.85.5.4;
        ssl_certificate "certs/dashboard.od.com.crt";
        ssl_certificate_key "certs/dashboard.od.com.key";
        ssl_session_cache shared:SSL:1m;
        ssl_session_timeout 10m;
        ssl_ciphers HIGH:!aNULL:!MD5;
        ssl_prefer_server_ciphers on;
        location / {
            proxy_pass http://default_backend_traefik;
            proxy_set_header Host dashboard.od.com;
            proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
        }
    }

nginx -s reload
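A reload with a broken config leaves nginx running the old configuration, so a syntax test first makes mistakes easier to spot. A minimal sketch follows; the `reload_nginx` helper and the `NGINX` override are hypothetical.

```shell
# Assumption: the nginx binary is on PATH.
NGINX="${NGINX:-nginx}"

# reload_nginx: test the configuration and reload only if the test passes.
reload_nginx() {
  "$NGINX" -t && "$NGINX" -s reload
}

# Usage, followed by a quick check of the HTTPS endpoint
# (-k skips CA verification, since the certificate comes from a private CA):
# reload_nginx && curl -kI https://dashboard.od.com/
```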

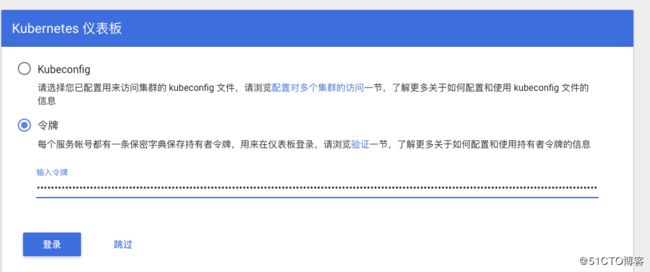

Then retrieve our dashboard token:

    [root@hdss7-22 ~]# kubectl get secret -n kube-system
    NAME                                     TYPE                                  DATA   AGE
    coredns-token-dkd6k                      kubernetes.io/service-account-token   3      6h25m
    default-token-q462s                      kubernetes.io/service-account-token   3      41h
    kubernetes-dashboard-admin-token-npgjj   kubernetes.io/service-account-token   3      84m
    kubernetes-dashboard-key-holder          Opaque                                2      84m
    traefik-ingress-controller-token-w8thz   kubernetes.io/service-account-token   3      5h12m
    [root@hdss7-22 ~]# kubectl describe kubernetes-dashboard-admin-token-npgjj -n kube-system
    error: the server doesn't have a resource type "kubernetes-dashboard-admin-token-npgjj"
    [root@hdss7-22 ~]# kubectl describe secret kubernetes-dashboard-admin-token-npgjj -n kube-system
    Name:         kubernetes-dashboard-admin-token-npgjj
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard-admin
                  kubernetes.io/service-account.uid: b36a01a3-a99f-4cf4-ad53-df8e55e26aec
    Type:  kubernetes.io/service-account-token
    Data
    ====
    ca.crt:     1338 bytes
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1ucGdqaiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImIzNmEwMWEzLWE5OWYtNGNmNC1hZDUzLWRmOGU1NWUyNmFlYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.iQSC15Mjo1MHjhQ56f3muLUHbfGfOWAIFUiwvjB_qer1xAAc_k3GB-G2ESRuKJQgbI3L9WBICAG_hXKTFWpKXwY2BKoma7XKFUf3662PME4p2QIdCyESbxIdVWdfwu7fw_S6mYc2R_uIoVOZjlJ2DlrUhs-KyBBzOIazOuXgOcH08Igpj2xBDiXKEPh8IQOhL8O9dmPWMXQWAboDvEDY23WDgBHLQofmtfx-o9IEb_Cuh9xhelbPNHfiSFVVvlBQBmOynz7OpNYsIJ1T1hcbfg0aXMv0NIIk13HrUSXcCL3PUnrWvtVdOjoed-seEnqmj4B6Eqif2p0arK1SF2S_wQ
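The secret name ends in a random suffix (`-npgjj` here), so it differs between clusters. The sketch below looks the secret up by prefix and prints only the decoded token, which avoids copying it out of the `describe` output by hand. The `dashboard_token` helper and the `KUBECTL` override are hypothetical, and it assumes a cluster version that still auto-creates token secrets for service accounts, as the one in this walkthrough does.

```shell
# Assumption: kubectl is on PATH and base64 supports -d (GNU coreutils).
KUBECTL="${KUBECTL:-kubectl}"

# dashboard_token: print the bearer token of the kubernetes-dashboard-admin
# service account, read from its auto-generated token secret.
dashboard_token() {
  name=$("$KUBECTL" -n kube-system get secret -o name \
    | grep 'kubernetes-dashboard-admin-token' | head -n 1)
  [ -n "$name" ] || return 1
  # .data.token is base64-encoded in the Secret object, so decode it.
  "$KUBECTL" -n kube-system get "$name" -o jsonpath='{.data.token}' | base64 -d
}

# Usage:
# dashboard_token
```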

Copy the token and paste it into the dashboard login page:

The following indicates a successful login: