This article is part of javaskill.cn, which hosts a complete Java knowledge map. You are welcome to visit.

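What is canal

From the project's GitHub page:

Based on parsing the database's incremental log, canal provides subscription to and consumption of incremental data. MySQL is currently the main supported source.

The principle is fairly simple:

canal emulates the MySQL slave interaction protocol, presents itself as a MySQL slave, and sends the dump protocol to the MySQL master.

The MySQL master receives the dump request and starts pushing the binary log to the slave (that is, canal).

canal parses the binary log objects (originally a byte stream).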

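What problem does canal solve

Origin:

In its early days, Alibaba's B2B business was deployed across two data centers, one in Hangzhou and one in the US, which created a need for cross-datacenter synchronization. Early database synchronization mostly captured incremental changes with triggers. Starting in 2010, Alibaba companies gradually moved to parsing database logs to capture incremental changes for synchronization. This gave rise to the incremental subscription and consumption business and opened a new era.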

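What this article covers

canal's basic operations are not repeated here. See the quick start and client API pages on GitHub, which include demos.

This article covers message dispatching with canal's streaming API, and how to keep messages from being lost or processed twice.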

What is the streaming API

From the project's GitHub page:

Streaming API design:

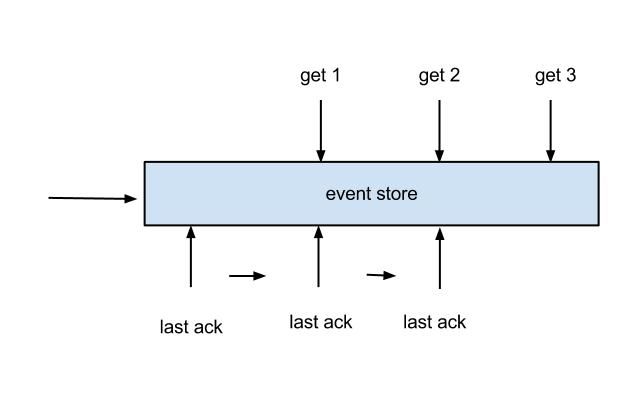

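- Each get creates a mark in meta. Marks increase monotonically, so every mark is unique while the instance is running.

- Each get continues from the cursor recorded by the previous mark. If no mark exists, it continues from the last ack cursor.

- Acks must follow mark order; you cannot skip ahead. An ack removes the current mark and sets the last ack cursor to that mark's position.

- If something goes wrong, the client can issue a rollback to reset. All marks are removed and the get position is cleared, so the next request continues from the last ack cursor.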
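The four rules above can be shown with a toy in-memory model of the mark, ack and rollback bookkeeping. This is a hypothetical sketch: `ToyStreamCursor` and its methods are invented for illustration, list indexes stand in for binlog cursors, and it is not canal's server code. It only mimics the rules as stated, including the `-1` "no data" batch id that the client code below checks for.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical toy model of the streaming API rules; NOT canal's implementation.
final class ToyStreamCursor {
    static final class Batch {
        private final long id;
        private final List<String> events;
        Batch(long id, List<String> events) { this.id = id; this.events = events; }
        long id() { return id; }
        List<String> events() { return events; }
    }

    private final List<String> log;                        // stands in for the binlog event stream
    private final List<long[]> marks = new ArrayList<>();  // {batchId, endCursor}, in get order
    private long nextBatchId = 1;                          // keeps increasing, even across rollback
    private int lastAckCursor = 0;                         // first event not yet acked
    private int getCursor = 0;                             // end of the most recent mark

    ToyStreamCursor(List<String> log) { this.log = log; }

    // Like getWithoutAck: continue after the last mark, or after the last ack if no mark exists.
    Batch get(int batchSize) {
        int start = marks.isEmpty() ? lastAckCursor : getCursor;
        if (start >= log.size()) {
            return new Batch(-1, new ArrayList<>()); // -1: nothing new to deliver
        }
        int end = Math.min(start + batchSize, log.size());
        long id = nextBatchId++;
        marks.add(new long[] {id, end});
        getCursor = end;
        return new Batch(id, new ArrayList<>(log.subList(start, end)));
    }

    // Acks must follow mark order; acking removes the mark and advances the last ack cursor.
    void ack(long batchId) {
        if (marks.isEmpty() || marks.get(0)[0] != batchId) {
            throw new IllegalStateException("out-of-order ack: " + batchId);
        }
        lastAckCursor = (int) marks.remove(0)[1];
    }

    // Drop all marks; the next get starts again from the last ack cursor.
    void rollback() {
        marks.clear();
        getCursor = lastAckCursor;
    }
}
```

For example, suppose the log is `e1, e2, e3`. Calling `get(1)` twice returns batch 1 with `e1` and batch 2 with `e2`. Calling `ack(2)` first is rejected. After `ack(1)` and `rollback()`, the next `get(1)` returns `e2` again under a new batch id 3. This redelivery under a fresh batchId is the duplicate problem discussed below.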

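Points to note

Asynchronous acks improve performance, but they also bring some problems.

After rollback(), all marks are cleared and consumption returns to the last acked position. If gets run ahead of acks, every message that was fetched but not yet acked is delivered again after rollback(), which produces duplicates.

This article proposes a fairly simple solution to this problem.

Approach

After the marks are cleared, the next get returns a batchId that keeps increasing (the counter lives on the server), but the messages in it have already been processed. We don't want them to be dispatched or processed again.

So how do we tell whether a message has already been consumed? Put differently, how do we tell whether this database change has already been parsed?

1. Check at the business level.

2. A better way: check the position of the current message in the binlog.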

```java
String logFileName = entry.getHeader().getLogfileName();
long offset = entry.getHeader().getLogfileOffset();
```

These two lines tell you which binlog file the current message comes from and where it sits in that file. The output looks like this:

logfileName = mysql-bin.000001,offset = 41919
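The code below stores the last offset per file name, which is enough within a single file. If you would rather keep one "last processed position", offsets alone stop ordering correctly once the binlog rotates to a new file. Here is a minimal sketch of a comparable position. `BinlogPosition` is a hypothetical class. It assumes the default `<basename>.<sequence>` file naming seen in the output above (`mysql-bin.000001`).

```java
// Hypothetical helper: a binlog position that orders correctly across file rotation,
// assuming file names end in ".<sequence number>", e.g. mysql-bin.000001, mysql-bin.000002.
final class BinlogPosition implements Comparable<BinlogPosition> {
    private final String logFileName;
    private final long offset;

    BinlogPosition(String logFileName, long offset) {
        this.logFileName = logFileName;
        this.offset = offset;
    }

    // Numeric suffix after the last '.', e.g. 1 for "mysql-bin.000001".
    private long fileSequence() {
        return Long.parseLong(logFileName.substring(logFileName.lastIndexOf('.') + 1));
    }

    // Compare by file sequence first, then by offset within the file.
    @Override
    public int compareTo(BinlogPosition other) {
        int byFile = Long.compare(fileSequence(), other.fileSequence());
        return byFile != 0 ? byFile : Long.compare(offset, other.offset);
    }

    @Override
    public String toString() {
        return "logfileName = " + logFileName + ",offset = " + offset;
    }
}
```

With this ordering, a later file always sorts after an earlier one, even when the new file's offset is small. For example, `mysql-bin.000002` at offset 4 sorts after `mysql-bin.000001` at offset 49344.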

Code

Test code only; do not use it in production.

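```java
private static final AtomicBoolean STOP = new AtomicBoolean(false);              // (assumed) set when the receiver finishes
private static final Queue<Long> BATCH_ID_QUEUE = new ConcurrentLinkedQueue<>(); // (assumed) batchIds awaiting ack, FIFO
private static final JedisPool jedisPool = new JedisPool("localhost", 6379);     // (assumed) local Redis

public static void main(String[] args) {
    new Thread(SimpleCanalClient::receiver).start(); // thread 1: fetch and process
    new Thread(SimpleCanalClient::ack).start();      // thread 2: acknowledge in order
}
```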

At startup, two threads are launched: one receives and one acknowledges.

```java
private static void receiver() {
    // Create the connection to the canal server (destination "example", empty username/password)
    CanalConnector connector = CanalConnectors.newSingleConnector(
            new InetSocketAddress(AddressUtils.getHostIp(), 11111), "example", "", "");
    int batchSize = 1;
    int count = 0;
    try {
        connector.connect();
        connector.subscribe(".*\\..*");
        // Roll back anything fetched but not acked before the last shutdown
        connector.rollback();
        int total = 20;
        while (count < total) {
            count++;
            Message message = connector.getWithoutAck(batchSize); // fetch up to batchSize entries, no ack yet
            long batchId = message.getId();
            int size = message.getEntries().size();
            if (batchId != -1 && size != 0) {
                printEntry(message.getEntries(), batchId);
            }
            try {
                Thread.sleep(1000); // simulate business processing time
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt status and stop receiving
                break;
            }
        }
        STOP.set(true);
        System.out.println("receiver exit");
    } finally {
        connector.disconnect();
    }
}
```

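The receiver connects to the server, then fetches data once per second, one entry at a time, and stops after 20 iterations (about 20 seconds).

The one-second sleep simulates business processing time.

Business processing is done by calling printEntry.

Note the rollback() at startup. It rolls back data that was fetched but not acked before the last shutdown, because we cannot be sure whether that data was processed.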

```java
private static void printEntry(List<Entry> entries, long batchId) {
    for (Entry entry : entries) {
        // Skip transaction begin/end markers; only row changes are handled
        if (entry.getEntryType() == EntryType.TRANSACTIONBEGIN || entry.getEntryType() == EntryType.TRANSACTIONEND) {
            continue;
        }
        String logFileName = entry.getHeader().getLogfileName();
        long offset = entry.getHeader().getLogfileOffset();
        long lastOffset = getTagFromRedis(logFileName);
        if (offset <= lastOffset) {
            // Redelivered (e.g. after rollback): already handled, do not dispatch again
            System.out.println("Already processed ! logfileName = " + logFileName + ",offset = " + offset + ",batchId " + batchId);
        } else {
            System.out.println("processing logfileName = " + logFileName + ",offset = " + offset + ",batchId " + batchId);
            saveTagToRedis(logFileName, offset);
        }
    }
    // Queue the batchId so the ack thread acknowledges batches in fetch order
    BATCH_ID_QUEUE.offer(batchId);
}
```

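printEntry performs only trivial consumption. In production, this is where you would dispatch to the concrete business handlers.

It reads the last processed offset. If the current offset is less than or equal to that offset, the entry is skipped.

After processing, the offset is saved to Redis (saveTagToRedis).

Finally, the batchId is put into a queue so that batches can be acked in order.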
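Without Redis and logging, the check in printEntry boils down to a per-file high-water mark. The sketch below replaces Redis with a `HashMap` only so that it can run on its own. `OffsetDeduplicator` and `tryProcess` are hypothetical names, not part of canal or the original code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory version of the printEntry check; a HashMap stands in for Redis.
final class OffsetDeduplicator {
    private final Map<String, Long> lastOffsetByFile = new HashMap<>();

    // Returns true (and records the offset) if the event is new; false if it was already processed.
    boolean tryProcess(String logFileName, long offset) {
        long lastOffset = lastOffsetByFile.getOrDefault(logFileName, -1L); // -1: file not seen yet
        if (offset <= lastOffset) {
            return false; // e.g. redelivered after rollback()
        }
        // ... dispatch to business handlers here ...
        lastOffsetByFile.put(logFileName, offset); // saved only after processing, as in printEntry
        return true;
    }
}
```

Like printEntry, this records the offset only after processing. If the process dies between the business call and the save, that one event is processed again after a restart. The scheme therefore gives at-least-once rather than exactly-once delivery, and downstream handlers should still tolerate an occasional repeat.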

```java
private static void saveTagToRedis(String logFileName, long offset) {
    try (Jedis jedis = jedisPool.getResource()) {
        // Last processed offset for this binlog file
        jedis.set(logFileName, String.valueOf(offset));
        // Append-only record of every processed offset, used to check for duplicates
        jedis.append("processed-" + logFileName, offset + "\n");
    }
}

private static long getTagFromRedis(String logFileName) {
    try (Jedis jedis = jedisPool.getResource()) {
        String position = jedis.get(logFileName);
        // -1 means nothing from this file has been processed yet
        return StringUtils.isNotBlank(position) ? Long.parseLong(position) : -1L;
    }
}
```

These two methods save and read the last processed position, which for simplicity is kept in Redis.

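```java
private static void ack() {
    // A second connection to the same destination, used only for acks
    CanalConnector connector = CanalConnectors.newSingleConnector(
            new InetSocketAddress(AddressUtils.getHostIp(), 11111), "example", "", "");
    try {
        connector.connect();
        connector.subscribe(".*\\..*");
        while (!STOP.get()) {
            Long batchId = BATCH_ID_QUEUE.poll(); // oldest pending batchId, or null if none
            if (batchId != null) {
                connector.ack(batchId);
                System.out.println("batchId " + batchId + " ack");
            }
            try {
                Thread.sleep(5000); // simulate a slow ack path: at most one ack every 5 seconds
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt status and stop acking
                break;
            }
        }
        System.out.println("ack exit");
    } finally {
        connector.disconnect();
    }
}
```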

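The ack method takes batchIds from the head of the queue, oldest first. Whenever the program stops, batches that were fetched but not acked are released automatically.

To simulate network latency, only one batchId can be acked every 5 seconds.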
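ack() checks STOP before every iteration, so it exits as soon as the receiver finishes and leaves any batchIds still in the queue unacknowledged. That is deliberate here, because the test relies on those batches being redelivered. If a real shutdown should instead acknowledge work that has already been processed, one option is a drain step after the loop. The sketch below is hypothetical. `AckDrainer` is an invented name, and the ack action is passed in as a `LongConsumer`; in the article's code that would be something like `connector::ack`.

```java
import java.util.Queue;
import java.util.function.LongConsumer;

// Hypothetical shutdown helper: acknowledge every batchId still queued, oldest first.
final class AckDrainer {
    static int drain(Queue<Long> batchIds, LongConsumer ack) {
        int acked = 0;
        for (Long batchId = batchIds.poll(); batchId != null; batchId = batchIds.poll()) {
            ack.accept(batchId); // FIFO order keeps acks in mark order, as canal requires
            acked++;
        }
        return acked;
    }
}
```

Draining is only safe for batches whose entries were actually processed. In this design that holds, because printEntry offers a batchId only after it has handled that batch's entries.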

Test

Test method:

With the database running, keep modifying data and watch the log.

First start (all earlier messages already acked). The log is below; the IntelliJ IDEA launch command is omitted (JDK 10.0.1, canal.client 1.0.25, jedis 2.9.0, main class com.github.liyang0211.canal.test.SimpleCanalClient):

processing logfileName = mysql-bin.000001,offset = 43107,batchId 151

processing logfileName = mysql-bin.000001,offset = 43404,batchId 152

processing logfileName = mysql-bin.000001,offset = 43701,batchId 153

processing logfileName = mysql-bin.000001,offset = 43998,batchId 154

batchId 151 ack

processing logfileName = mysql-bin.000001,offset = 44295,batchId 155

processing logfileName = mysql-bin.000001,offset = 44592,batchId 156

processing logfileName = mysql-bin.000001,offset = 44889,batchId 157

processing logfileName = mysql-bin.000001,offset = 45186,batchId 158

processing logfileName = mysql-bin.000001,offset = 45483,batchId 159

batchId 152 ack

processing logfileName = mysql-bin.000001,offset = 45780,batchId 160

processing logfileName = mysql-bin.000001,offset = 46077,batchId 161

processing logfileName = mysql-bin.000001,offset = 46374,batchId 162

processing logfileName = mysql-bin.000001,offset = 46671,batchId 162

processing logfileName = mysql-bin.000001,offset = 46968,batchId 163

processing logfileName = mysql-bin.000001,offset = 47265,batchId 164

batchId 153 ack

processing logfileName = mysql-bin.000001,offset = 47562,batchId 165

processing logfileName = mysql-bin.000001,offset = 47859,batchId 166

processing logfileName = mysql-bin.000001,offset = 48156,batchId 167

processing logfileName = mysql-bin.000001,offset = 48453,batchId 168

processing logfileName = mysql-bin.000001,offset = 48750,batchId 169

processing logfileName = mysql-bin.000001,offset = 49047,batchId 169

batchId 154 ack

receiver exit

ack exit

Process finished with exit code 0

As you can see, processing reached batchId 169. Because acks lag behind, only batchId 154 had been acked when the program exited. Starting the service again (same launch command) produces the following log:

Already processed ! logfileName = mysql-bin.000001,offset = 44295,batchId 170

Already processed ! logfileName = mysql-bin.000001,offset = 44592,batchId 170

Already processed ! logfileName = mysql-bin.000001,offset = 44889,batchId 170

Already processed ! logfileName = mysql-bin.000001,offset = 45186,batchId 170

Already processed ! logfileName = mysql-bin.000001,offset = 45483,batchId 170

Already processed ! logfileName = mysql-bin.000001,offset = 45780,batchId 170

Already processed ! logfileName = mysql-bin.000001,offset = 46077,batchId 171

Already processed ! logfileName = mysql-bin.000001,offset = 46374,batchId 171

Already processed ! logfileName = mysql-bin.000001,offset = 46671,batchId 171

Already processed ! logfileName = mysql-bin.000001,offset = 46968,batchId 171

Already processed ! logfileName = mysql-bin.000001,offset = 47265,batchId 171

Already processed ! logfileName = mysql-bin.000001,offset = 47562,batchId 171

Already processed ! logfileName = mysql-bin.000001,offset = 47859,batchId 172

Already processed ! logfileName = mysql-bin.000001,offset = 48156,batchId 172

Already processed ! logfileName = mysql-bin.000001,offset = 48453,batchId 172

Already processed ! logfileName = mysql-bin.000001,offset = 48750,batchId 172

Already processed ! logfileName = mysql-bin.000001,offset = 49047,batchId 172

processing logfileName = mysql-bin.000001,offset = 49344,batchId 172

processing logfileName = mysql-bin.000001,offset = 49641,batchId 173

processing logfileName = mysql-bin.000001,offset = 49938,batchId 173

batchId 170 ack

batchId 171 ack

batchId 172 ack

batchId 173 ack

receiver exit

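Messages at offsets 44295 through 49047 were fetched again. Each offset was detected as not greater than the last processed offset, so none of them was processed a second time.

The last few entries that were processed are changes made to the database after the service was stopped.

Every batch was eventually acked. Starting the service again would bring us back to the situation in the first log.

The Redis contents are as follows:

Figure 2 shows the record of every processed offset. As it shows, nothing was processed twice.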
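saveTagToRedis appends every processed offset to the `processed-<logfileName>` key, so the same check can be done in code instead of by eye. The helper below is a hypothetical sketch. It takes that key's value, for example as returned by `jedis.get("processed-mysql-bin.000001")`, and reports any offset that appears more than once.

```java
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical verification helper for the "processed-<logfileName>" record.
final class ProcessedLogCheck {
    // Returns offsets that occur more than once, in order of their first repeat.
    static Set<Long> findDuplicates(String processedLog) {
        Set<Long> duplicates = new LinkedHashSet<>();
        if (processedLog == null) {
            return duplicates; // key missing: nothing processed yet
        }
        Set<Long> seen = new HashSet<>();
        for (String line : processedLog.split("\n")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate the trailing newline written by append
            }
            long offset = Long.parseLong(trimmed);
            if (!seen.add(offset)) {
                duplicates.add(offset);
            }
        }
        return duplicates;
    }
}
```

An empty result means every recorded offset was processed exactly once, which is the same conclusion as the Redis screenshot.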

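Summary

The principle is very simple. canal can serve as a compensation mechanism for MQ messages, and it offers a new approach for tasks that used to need scheduled full-table scans. Alibaba's otter is also worth a look: it is built on canal and positioned as a "distributed database synchronization system".

Thanks for reading. If you spot errors or omissions, corrections are welcome.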