Deploy Prometheus:

Prepare the image:

[root@hdss7-200 ~]# docker pull prom/prometheus:v2.14.0
v2.14.0: Pulling from prom/prometheus
8e674ad76dce: Already exists
e77d2419d1c2: Already exists
8674123643f1: Pull complete
21ee3b79b17a: Pull complete
d9073bbe10c3: Pull complete
585b5cbc27c1: Pull complete
0b174c1d55cf: Pull complete
a1b4e43b91a7: Pull complete
31ccb7962a7c: Pull complete
e247e238102a: Pull complete
6798557a5ee4: Pull complete
cbfcb065e0ae: Pull complete
Digest: sha256:907e20b3b0f8b0a76a33c088fe9827e8edc180e874bd2173c27089eade63d8b8
Status: Downloaded newer image for prom/prometheus:v2.14.0
docker.io/prom/prometheus:v2.14.0
[root@hdss7-200 ~]# docker images|grep prom
prom/prometheus          v2.14.0   7317640d555e   4 months ago   130MB
prom/blackbox-exporter   v0.15.1   81b70b6158be   6 months ago   19.7MB
[root@hdss7-200 ~]# docker tag 7317640d555e harbor.od.com/infra/prometheus:v2.14.0
[root@hdss7-200 ~]# docker push harbor.od.com/infra/prometheus:v2.14.0
The push refers to repository [harbor.od.com/infra/prometheus]
fca78fb26e9b: Mounted from public/prometheus
ccf6f2fbceef: Mounted from public/prometheus
eb6f7e00328c: Mounted from public/prometheus
5da914e0fc1b: Mounted from public/prometheus
b202797fdad0: Mounted from public/prometheus
39dc7810e736: Mounted from public/prometheus
8a9fe881edcd: Mounted from public/prometheus
5dd8539686e4: Mounted from public/prometheus
5c8b7d3229bc: Mounted from public/prometheus
062d51f001d9: Mounted from public/prometheus
3163e6173fcc: Mounted from public/prometheus
6194458b07fc: Mounted from public/prometheus
v2.14.0: digest: sha256:3d53ce329b25cc0c1bfc4c03be0496022d81335942e9e0518ded6d50a5e6c638 size: 2824
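The pull, tag and push sequence above is the same for every image mirrored into Harbor (the Grafana image later in this walkthrough follows it too), so it can be wrapped in a small script. This is a sketch only: mirror_image and the DRY_RUN switch are hypothetical names, and it tags by image name instead of by image ID, which points at the same image. By default it only prints the docker commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: mirror an upstream image into the private Harbor registry.
# By default (DRY_RUN unset or 1) the docker commands are only printed;
# run with DRY_RUN=0 to execute them.
set -euo pipefail

# run CMD...: print CMD in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# mirror_image SRC DEST: pull SRC, retag it as DEST, and push DEST to Harbor.
mirror_image() {
  local src="$1" dest="$2"
  run docker pull "$src"
  run docker tag "$src" "$dest"
  run docker push "$dest"
}

mirror_image prom/prometheus:v2.14.0 harbor.od.com/infra/prometheus:v2.14.0
mirror_image grafana/grafana:5.4.2 harbor.od.com/infra/grafana:v5.4.2
```

Once the printed commands look right, running the script with DRY_RUN=0 on hdss7-200 performs them.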

Prepare the resource manifests (on hdss7-200, under /data/k8s-yaml/prometheus):

[root@hdss7-200 prometheus]# cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra
[root@hdss7-200 prometheus]# cat dp.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      # Pinned to an arbitrary node here. In practice, give Prometheus its own dedicated node, because it is very resource-hungry.
      nodeName: hdss7-21.host.com
      containers:
      - name: prometheus
        image: harbor.od.com/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=10m
        - --storage.tsdb.retention=72h
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "1000m"
            memory: "1.5Gi"
          limits:
            cpu: "2000m"
            memory: "3Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/prometheus
[root@hdss7-200 prometheus]# cat svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus
[root@hdss7-200 prometheus]# cat ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090

Prepare the Prometheus configuration files on the NFS share:

[root@hdss7-200 data]# cd /data/nfs-volume/

[root@hdss7-200 nfs-volume]# ls

jenkins_home

[root@hdss7-200 nfs-volume]# mkdir prometheus/{etc,prom-db}

mkdir: cannot create directory 'prometheus/etc': No such file or directory

mkdir: cannot create directory 'prometheus/prom-db': No such file or directory

[root@hdss7-200 nfs-volume]# mkdir -pv prometheus/{etc,prom-db}

mkdir: created directory 'prometheus'

mkdir: created directory 'prometheus/etc'

mkdir: created directory 'prometheus/prom-db'

Copy the certificates into prometheus/etc:

[root@hdss7-200 etc]# cp /opt/certs/ca.pem .

[root@hdss7-200 etc]# cp /opt/certs/client.pem .

[root@hdss7-200 etc]# cp /opt/certs/client-key.pem .
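The steps above create etc/ and copy the certificates, but the prometheus.yml that dp.yaml loads from /data/etc/prometheus.yml is not shown (the NFS directory /data/nfs-volume/prometheus is mounted at /data inside the pod). The sketch below writes a minimal config from inside /data/nfs-volume/prometheus/etc. It is an assumption, not the author's file: the job set is illustrative, the etcd target hdss7-12.host.com:2379 is hypothetical, and the kubernetes-pods job relies on the pod list/watch permissions granted to the prometheus ServiceAccount in rbac.yaml.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal prometheus.yml into the current directory.
# Run it from /data/nfs-volume/prometheus/etc; inside the pod this file is
# /data/etc/prometheus.yml and the copied certs are under /data/etc/.
# The etcd target below is hypothetical: replace it with your real members.
set -euo pipefail

cat > prometheus.yml <<'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s

scrape_configs:
  # Prometheus scraping its own /metrics endpoint.
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']

  # A TLS-protected target scraped with the copied client certificate.
  - job_name: 'etcd'
    scheme: https
    tls_config:
      ca_file: /data/etc/ca.pem
      cert_file: /data/etc/client.pem
      key_file: /data/etc/client-key.pem
    static_configs:
      - targets: ['hdss7-12.host.com:2379']   # hypothetical etcd member

  # Pods discovered through the Kubernetes API, kept only when annotated
  # with prometheus.io/scrape: "true".
  - job_name: 'kubernetes-pods'
    kubernetes_sd_configs:
      - role: pod
    relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
EOF
```

Because kubernetes_sd_configs has no api_server set, Prometheus falls back to its in-cluster ServiceAccount credentials, which is why the RBAC objects are applied first.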

Apply the resource manifests:

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/rbac.yaml
serviceaccount/prometheus unchanged
clusterrole.rbac.authorization.k8s.io/prometheus unchanged
clusterrolebinding.rbac.authorization.k8s.io/prometheus created
[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/dp.yaml
deployment.extensions/prometheus created
[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/svc.yaml
service/prometheus created
[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/ingress.yaml
[root@hdss7-22 ~]# kubectl get pod -n infra |grep prom
prometheus-6767456ffb-w5d9k   1/1   Running   0   62s
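The four kubectl apply calls follow a fixed order (RBAC, Deployment, Service, Ingress), and Grafana is deployed the same way later on. A hedged sketch that scripts this order and then waits for the rollout: deploy_app is a hypothetical name, kubectl must already be configured on the host (as on hdss7-21/hdss7-22), and the final readiness probe against Prometheus's /-/ready endpoint assumes prometheus.od.com resolves to the Traefik ingress. Commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: apply an app's manifests in order, wait for its Deployment, then
# probe Prometheus through the ingress. Prints commands unless DRY_RUN=0.
set -euo pipefail

# run CMD...: print CMD in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

BASE_URL="http://k8s-yaml.od.com"

# deploy_app APP: apply APP's manifests, then wait for deployment/APP in infra.
# rollout status returns non-zero if progressDeadlineSeconds is exceeded.
deploy_app() {
  local app="$1" f
  for f in rbac dp svc ingress; do
    run kubectl apply -f "$BASE_URL/$app/$f.yaml"
  done
  run kubectl -n infra rollout status "deployment/$app"
}

deploy_app prometheus
run curl -fsS http://prometheus.od.com/-/ready
```

The same function covers the Grafana step further down with deploy_app grafana, since its manifests use the same four file names.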

Open the page in a browser (http://prometheus.od.com):

Prepare the Grafana image:

[root@hdss7-200 ~]# docker pull grafana/grafana:5.4.2
5.4.2: Pulling from grafana/grafana
a5a6f2f73cd8: Pull complete
08e6195c0f29: Pull complete
b7bd3a2a524c: Pull complete
d3421658103b: Pull complete
cd7c84229877: Pull complete
49917e11f039: Pull complete
Digest: sha256:b9a31857e86e9cf43552605bd7f3c990c123f8792ab6bea8f499db1a1bdb7d53
Status: Downloaded newer image for grafana/grafana:5.4.2
docker.io/grafana/grafana:5.4.2
[root@hdss7-200 ~]# docker images|grep grafana
grafana/grafana   5.4.2   6f18ddf9e552   15 months ago   243MB
[root@hdss7-200 ~]# docker tag 6f18ddf9e552 harbor.od.com/infra/grafana:v5.4.2
[root@hdss7-200 ~]# docker push harbor.od.com/infra/grafana:v5.4.2
The push refers to repository [harbor.od.com/infra/grafana]
8e6f0f1fe3f4: Pushed
f8bf0b7b071d: Pushed
5dde66caf2d2: Pushed
11f89658f27f: Pushed
ef68f6734aa4: Pushed
v5.4.2: digest: sha256:b9a31857e86e9cf43552605bd7f3c990c123f8792ab6bea8f499db1a1bdb7d53 size: 1576

Prepare the resource manifests:

[root@hdss7-200 ~]# mkdir /data/k8s-yaml/grafana
[root@hdss7-200 ~]# cd /data/k8s-yaml/grafana
[root@hdss7-200 grafana]# cat rbac.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
rules:
- apiGroups:
  - "*"
  resources:
  - namespaces
  - deployments
  - pods
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: grafana
subjects:
- kind: User
  name: k8s-node
[root@hdss7-200 grafana]# cat dp.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: grafana
    name: grafana
  name: grafana
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: grafana
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: grafana
        name: grafana
    spec:
      containers:
      - name: grafana
        image: harbor.od.com/infra/grafana:v5.4.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var/lib/grafana
          name: data
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - nfs:
          server: hdss7-200
          path: /data/nfs-volume/grafana
        name: data
[root@hdss7-200 grafana]# cat svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: infra
spec:
  ports:
  - port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app: grafana
[root@hdss7-200 grafana]# cat ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: infra
spec:
  rules:
  - host: grafana.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000

Create the Grafana data directory on the NFS share:

[root@hdss7-200 ~]# mkdir /data/nfs-volume/grafana

Apply the resource manifests:

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/rbac.yaml
clusterrole.rbac.authorization.k8s.io/grafana created
clusterrolebinding.rbac.authorization.k8s.io/grafana created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/dp.yaml
deployment.extensions/grafana created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/svc.yaml
service/grafana created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/ingress.yaml
ingress.extensions/grafana created
[root@hdss7-21 ~]# kubectl get pod -n infra -o wide
NAME                             READY   STATUS    RESTARTS   AGE    IP            NODE                NOMINATED NODE   READINESS GATES
apollo-portal-57bc86966d-2x4kl   1/1     Running   0          120m   172.7.21.5    hdss7-21.host.com
dubbo-monitor-6676dd74cc-fccl4   1/1     Running   0          120m   172.7.21.14   hdss7-21.host.com
grafana-d6588db94-sgl4j          1/1     Running   0          17s    172.7.22.7    hdss7-22.host.com

Open the Grafana page (http://grafana.od.com). The default username and password are both admin:

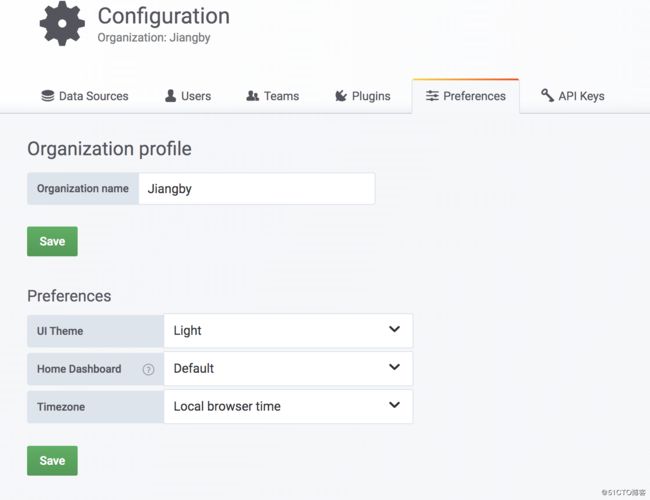

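In the settings menu, open Preferences and change the timezone and the UI theme (background color). For the timezone, choose the browser's local time: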
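The same organization-wide preferences can be set without the UI through Grafana's HTTP API (PUT /api/org/preferences). A sketch under assumptions: Grafana is reachable at http://grafana.od.com with the default admin/admin login, and "light" is a placeholder theme, since the text does not say which background was chosen. It only prints the request unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: set org-wide Grafana preferences via PUT /api/org/preferences.
# Assumptions: Grafana at GRAFANA_URL with the default admin/admin login;
# "light" is a placeholder theme. Prints the request unless DRY_RUN=0.
set -euo pipefail

GRAFANA_URL="${GRAFANA_URL:-http://grafana.od.com}"
# "browser" matches the browser-time choice described above.
PAYLOAD='{"theme": "light", "timezone": "browser"}'

if [ "${DRY_RUN:-1}" = "1" ]; then
  echo "PUT $GRAFANA_URL/api/org/preferences $PAYLOAD"
else
  curl -fsS -u admin:admin -H 'Content-Type: application/json' \
    -X PUT -d "$PAYLOAD" "$GRAFANA_URL/api/org/preferences"
fi
```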

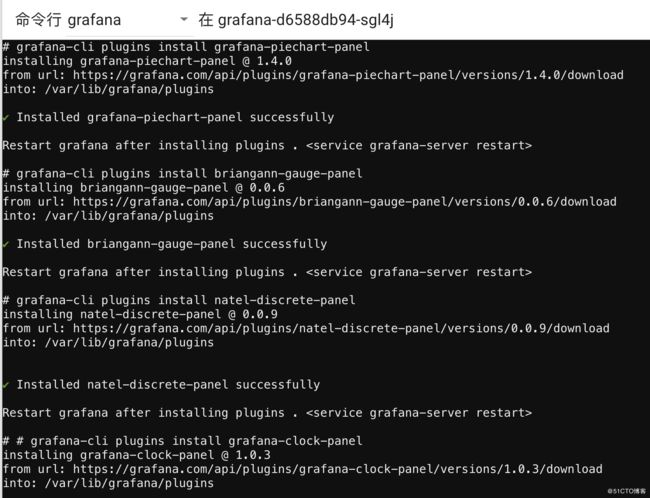

Next, install the Grafana plugins by running the following commands inside the Grafana container:

grafana-cli plugins install grafana-kubernetes-app
grafana-cli plugins install grafana-clock-panel
grafana-cli plugins install grafana-piechart-panel
grafana-cli plugins install briangann-gauge-panel
grafana-cli plugins install natel-discrete-panel

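All the install does is download each plugin's zip from the official site and unpack it into the NFS-mounted data directory. You can just as well download the zips yourself, unpack them there, and then restart the pod: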

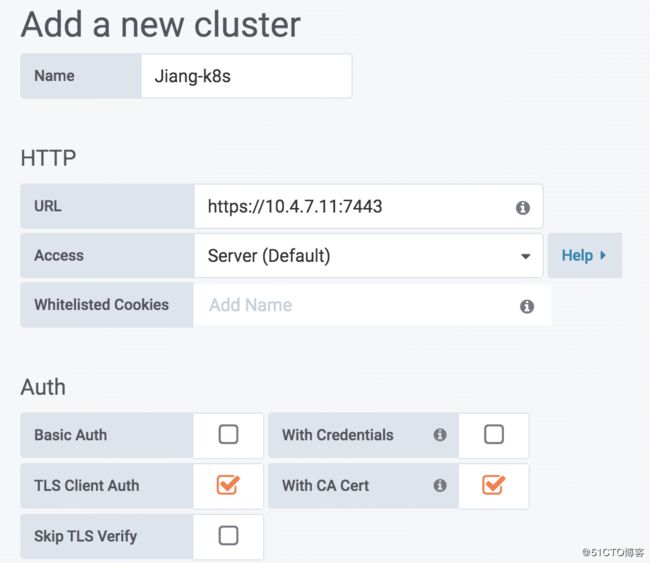
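The installs can also be driven from outside the container, followed by the pod restart mentioned above. A sketch, assuming kubectl access and the app=grafana pod label from dp.yaml; install_plugins is a hypothetical name. grafana-cli writes into /var/lib/grafana/plugins, which sits on the NFS share, so deleting the pod (the Deployment immediately starts a replacement) keeps the plugins and makes Grafana load them. Commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: install the plugins in the running Grafana pod, then restart it.
# Assumes kubectl access and the app=grafana label. Prints unless DRY_RUN=0.
set -euo pipefail

# run CMD...: print CMD in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

PLUGINS=(grafana-kubernetes-app grafana-clock-panel grafana-piechart-panel
         briangann-gauge-panel natel-discrete-panel)

# install_plugins POD: install every plugin in POD, then delete POD so the
# Deployment recreates it with the plugins loaded from the NFS share.
install_plugins() {
  local pod="$1" p
  for p in "${PLUGINS[@]}"; do
    run kubectl -n infra exec "$pod" -- grafana-cli plugins install "$p"
  done
  run kubectl -n infra delete pod "$pod"
}

if [ "${DRY_RUN:-1}" = "1" ]; then
  install_plugins "<grafana-pod>"   # placeholder name in dry-run mode
else
  install_plugins "$(kubectl -n infra get pod -l app=grafana \
    -o jsonpath='{.items[0].metadata.name}')"
fi
```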

Configure the Grafana data source:

For authentication, choose certificate (TLS client) authentication, then paste in the contents of the corresponding certificates:
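The same data source can be created through Grafana's HTTP API (POST /api/datasources). A sketch under assumptions: the data source points at http://prometheus.od.com through Grafana's server-side proxy, Grafana uses the default admin/admin login, and the certificates are read from /data/nfs-volume/prometheus/etc on hdss7-200, where they were copied earlier. The jsonData fields tlsAuth and tlsAuthWithCACert and the secureJsonData fields tlsCACert, tlsClientCert and tlsClientKey follow Grafana's data source provisioning documentation; check them against your Grafana version. Unless DRY_RUN=0, only the endpoint is printed, because the payload contains the private key.

```shell
#!/usr/bin/env bash
# Sketch: create the Prometheus data source with TLS client auth via the
# Grafana HTTP API. URLs, login and CERT_DIR are assumptions.
# Only the target endpoint is printed unless DRY_RUN=0.
set -euo pipefail

GRAFANA_URL="${GRAFANA_URL:-http://grafana.od.com}"
CERT_DIR="${CERT_DIR:-/data/nfs-volume/prometheus/etc}"

# pem_json FILE: print FILE as a JSON string body. PEM files contain no
# quotes or backslashes, so only the line breaks need escaping (as \n).
pem_json() {
  awk '{ printf "%s\\n", $0 }' "$1"
}

# datasource_payload: build the JSON body for POST /api/datasources.
datasource_payload() {
  cat <<EOF
{
  "name": "Prometheus",
  "type": "prometheus",
  "url": "http://prometheus.od.com",
  "access": "proxy",
  "isDefault": true,
  "jsonData": {"tlsAuth": true, "tlsAuthWithCACert": true},
  "secureJsonData": {
    "tlsCACert": "$(pem_json "$CERT_DIR/ca.pem")",
    "tlsClientCert": "$(pem_json "$CERT_DIR/client.pem")",
    "tlsClientKey": "$(pem_json "$CERT_DIR/client-key.pem")"
  }
}
EOF
}

if [ "${DRY_RUN:-1}" = "1" ]; then
  echo "POST $GRAFANA_URL/api/datasources"
else
  datasource_payload | curl -fsS -u admin:admin \
    -H 'Content-Type: application/json' \
    -X POST --data-binary @- "$GRAFANA_URL/api/datasources"
fi
```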

Under Plugins, open Kubernetes and click Enable:

Once the configuration saves successfully, wait a moment and the graphs appear:

Cluster status:

Traefik status: