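0: Train a model to predict the next word from its context. The quality measure is perplexity, which can be read as the number of words the model is effectively choosing among for the next word. The smaller this range, the better the model.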
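To make the "range of choices" reading concrete, here is a minimal NumPy sketch of perplexity as the exponential of the average negative log-probability the model assigns to the true next word. The function name `perplexity` and the sample probabilities are made up for illustration; they are not part of the code in this post.

```python
import numpy as np

def perplexity(target_probs):
    """Perplexity from the probabilities assigned to each true next word."""
    target_probs = np.asarray(target_probs, dtype=np.float64)
    # Average negative log-likelihood per word, then exponentiate.
    return float(np.exp(-np.mean(np.log(target_probs))))

# A model that spreads its probability evenly over 4 words behaves
# like a uniform choice among 4 candidates.
uniform_ppl = perplexity([0.25, 0.25, 0.25, 0.25])

# A model that is more confident about the true word scores lower.
confident_ppl = perplexity([0.9, 0.8, 0.95, 0.85])

print(uniform_ppl, confident_ppl)
```

A perplexity of 1 would mean the model always assigns probability 1 to the correct word.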

1: Download the training, validation, and test data

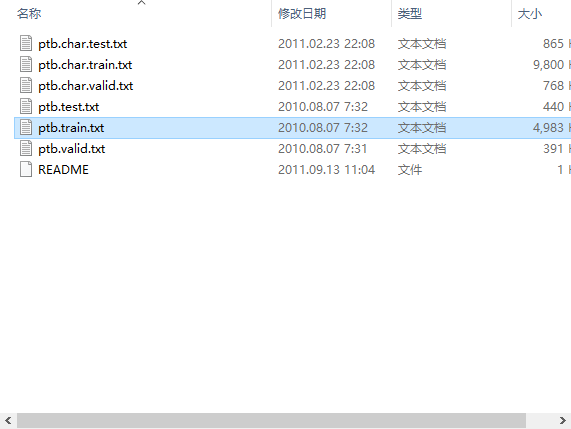

Download the PTB (Penn Treebank) dataset from Tomas Mikolov's website.

2: The files used here are in the data folder extracted from simple-examples.tgz. There are three of them: ptb.test.txt, ptb.train.txt, and ptb.valid.txt.
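These files are plain text with one sentence per line, so the words have to be mapped to integer ids before they can be fed to the network. The sketch below shows one common way to do that: mark sentence ends with `<eos>`, then number words by descending frequency. The helpers `build_vocab` and `text_to_ids` and the sample text are hypothetical, written only for illustration; they are not the API of reader.py.

```python
import collections

def build_vocab(words):
    """Assign ids by descending frequency (ties broken alphabetically)."""
    counter = collections.Counter(words)
    pairs = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
    return {word: idx for idx, (word, _) in enumerate(pairs)}

def text_to_ids(text, word_to_id):
    """Replace line breaks with an <eos> token and map words to ids."""
    words = text.replace("\n", " <eos> ").split()
    return [word_to_id[w] for w in words if w in word_to_id]

# Tiny stand-in for the contents of a PTB text file.
sample_text = "the cat sat\nthe dog sat\n"
sample_words = sample_text.replace("\n", " <eos> ").split()

word_to_id = build_vocab(sample_words)
ids = text_to_ids(sample_text, word_to_id)
print(word_to_id)
print(ids)
```

With the real data the resulting id sequence is one long list per file, which is what the batching step later slices up.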

3: Code

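# -*- coding: utf-8 -*-
import numpy as np
import tensorflow as tf

import reader

DATA_PATH = 'F:/PycharmProjects/tmp/model/data'  # folder containing the PTB data files
HIDDEN_SIZE = 200        # size of the hidden layer
NUM_LAYERS = 2           # number of stacked LSTM layers
VOCAB_SIZE = 10000       # vocabulary size
LEARNING_RATE = 1.0
TRAIN_BATCH_SIZE = 20
TRAIN_NUM_STEP = 35      # truncation length of the training sequences
EVAL_BATCH_SIZE = 1
EVAL_NUM_STEP = 1
NUM_EPOCH = 2            # number of passes over the training data
KEEP_PROB = 0.5          # probability that a node is NOT dropped by dropout
MAX_GARD_NORM = 5        # cap on the global gradient norm, to control exploding gradients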

class PTBModel(object):
    def __init__(self, is_training, batch_size, num_steps):
        self.batch_size = batch_size
        self.num_steps = num_steps

        # Input word ids and target word ids, both [batch_size, num_steps].
        self.input_data = tf.placeholder(tf.int32, [batch_size, num_steps])
        self.targets = tf.placeholder(tf.int32, [batch_size, num_steps])

        # Deep recurrent network with LSTM cells, using dropout when training.
        # Each layer gets its own cell object; [lstm_cell] * NUM_LAYERS would
        # reuse a single cell (and its weights) for every layer.
        def make_cell():
            lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(HIDDEN_SIZE)
            if is_training:
                lstm_cell = tf.nn.rnn_cell.DropoutWrapper(
                    lstm_cell, output_keep_prob=KEEP_PROB
                )
            return lstm_cell

        cell = tf.nn.rnn_cell.MultiRNNCell(
            [make_cell() for _ in range(NUM_LAYERS)])

        # Start from an all-zero state.
        self.initial_state = cell.zero_state(batch_size, tf.float32)

        # Map word ids to vectors: [batch_size, num_steps, HIDDEN_SIZE].
        embedding = tf.get_variable('embedding', [VOCAB_SIZE, HIDDEN_SIZE])
        inputs = tf.nn.embedding_lookup(embedding, self.input_data)
        if is_training:
            inputs = tf.nn.dropout(inputs, KEEP_PROB)

        # Unroll the network over num_steps time steps and collect the outputs.
        outputs = []
        state = self.initial_state
        with tf.variable_scope('RNN'):
            for time_step in range(num_steps):
                if time_step > 0:
                    tf.get_variable_scope().reuse_variables()
                cell_output, state = cell(inputs[:, time_step, :], state)
                outputs.append(cell_output)

        # Flatten to [batch_size * num_steps, HIDDEN_SIZE], then project
        # onto the vocabulary to get one logit per word.
        output = tf.reshape(tf.concat(outputs, 1), [-1, HIDDEN_SIZE])
        weight = tf.get_variable('weight', [HIDDEN_SIZE, VOCAB_SIZE])
        bias = tf.get_variable('bias', [VOCAB_SIZE])
        logits = tf.matmul(output, weight) + bias

        # Cross-entropy loss for every position, all weighted equally.
        loss = tf.contrib.legacy_seq2seq.sequence_loss_by_example(
            [logits],
            [tf.reshape(self.targets, [-1])],
            [tf.ones([batch_size * num_steps], dtype=tf.float32)]
        )
        self.cost = tf.reduce_sum(loss) / batch_size
        self.final_state = state

        # Backpropagation is only defined for the training model.
        if not is_training:
            return

        trainable_variables = tf.trainable_variables()
        # Clip the gradients by their global norm to avoid exploding gradients.
        grads, _ = tf.clip_by_global_norm(
            tf.gradients(self.cost, trainable_variables), MAX_GARD_NORM)
        optimizer = tf.train.GradientDescentOptimizer(LEARNING_RATE)
        self.train_op = optimizer.apply_gradients(zip(grads, trainable_variables))

def run_epoch(session, model, data, train_op, output_log):
    # Run the model once over data and return the perplexity.
    total_costs = 0.0
    iters = 0
    state = session.run(model.initial_state)
    for step, (x, y) in enumerate(
            reader.ptb_iterator(data, model.batch_size, model.num_steps)):
        # The final state of one batch is fed in as the initial state of the next.
        cost, state, _ = session.run(
            [model.cost, model.final_state, train_op],
            {model.input_data: x, model.targets: y,
             model.initial_state: state}
        )
        total_costs += cost
        iters += model.num_steps
        if output_log and step % 100 == 0:
            print('After %d steps, perplexity is %.3f' % (step, np.exp(total_costs / iters)))
    return np.exp(total_costs / iters)

def main(_):
    train_data, valid_data, test_data, _ = reader.ptb_raw_data(DATA_PATH)
    initializer = tf.random_uniform_initializer(-0.05, 0.05)

    # Model used for training.
    with tf.variable_scope('language_model', reuse=tf.AUTO_REUSE, initializer=initializer):
        train_model = PTBModel(True, TRAIN_BATCH_SIZE, TRAIN_NUM_STEP)

    # Model used for evaluation. It shares the variables of the training model
    # and is built with is_training=False, so dropout is off and no training
    # op is created.
    with tf.variable_scope('language_model', reuse=tf.AUTO_REUSE, initializer=initializer):
        eval_model = PTBModel(False, EVAL_BATCH_SIZE, EVAL_NUM_STEP)

    with tf.Session() as sess:
        # tf.initialize_all_variables() is deprecated in favor of this call.
        tf.global_variables_initializer().run()
        for i in range(NUM_EPOCH):
            print('In iteration: %d' % (i+1))
            run_epoch(sess, train_model, train_data, train_model.train_op, True)
            valid_perplexity = run_epoch(sess, eval_model, valid_data, tf.no_op(), False)
            print('Epoch: %d Validation Perplexity: %.3f' % (i+1, valid_perplexity))
        test_perplexity = run_epoch(sess, eval_model, test_data, tf.no_op(), False)
        print('Test: Test Perplexity: %.3f' % test_perplexity)

if __name__ == '__main__':
    tf.app.run()
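The gradient clipping step in `PTBModel` (`tf.clip_by_global_norm` with `MAX_GARD_NORM`) rescales all gradients together whenever their combined norm exceeds the limit. As a rough illustration of that arithmetic, here is a NumPy sketch; the gradient values are invented, and the function is a stand-in written for this example rather than TensorFlow's implementation.

```python
import numpy as np

def clip_by_global_norm(grads, clip_norm):
    """Scale all gradients by clip_norm / max(global_norm, clip_norm)."""
    global_norm = np.sqrt(sum(np.sum(np.square(g)) for g in grads))
    scale = clip_norm / max(global_norm, clip_norm)
    return [g * scale for g in grads], global_norm

# Two made-up gradient tensors whose global norm is sqrt(9 + 16 + 144) = 13.
grads = [np.array([3.0, 4.0]), np.array([12.0])]
clipped, norm = clip_by_global_norm(grads, clip_norm=5.0)
clipped_norm = np.sqrt(sum(np.sum(np.square(g)) for g in clipped))

# Gradients already below the limit are left unchanged.
small, _ = clip_by_global_norm([np.array([0.3, 0.4])], clip_norm=5.0)

print(norm, clipped_norm, small)
```

Because every tensor is multiplied by the same factor, the direction of the overall update is preserved and only its length is capped.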

The imported reader module comes from: reader. Place reader.py in the same directory as the main Python file.
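The code above only relies on `reader.ptb_iterator(data, batch_size, num_steps)` yielding `(x, y)` pairs of shape `[batch_size, num_steps]`. To show what batching of this kind looks like, here is a NumPy sketch that assumes the targets are simply the inputs shifted by one word. The name `batch_iterator` is made up for this example; this is not the code in reader.py.

```python
import numpy as np

def batch_iterator(raw_data, batch_size, num_steps):
    """Yield (inputs, targets) windows; targets are inputs shifted by one."""
    raw_data = np.asarray(raw_data, dtype=np.int32)
    batch_len = len(raw_data) // batch_size
    # Split the id sequence into batch_size parallel rows.
    data = raw_data[:batch_size * batch_len].reshape(batch_size, batch_len)
    # One position is reserved so every input has a following target.
    epoch_size = (batch_len - 1) // num_steps
    for i in range(epoch_size):
        x = data[:, i * num_steps:(i + 1) * num_steps]
        y = data[:, i * num_steps + 1:(i + 1) * num_steps + 1]
        yield x, y

# Toy "corpus" of 20 word ids.
batches = list(batch_iterator(range(20), batch_size=2, num_steps=3))
for x, y in batches:
    print(x.tolist(), y.tolist())
```

Consecutive batches continue each row where the previous batch stopped, which is why `run_epoch` can carry the LSTM state from one batch to the next.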

4: Training results

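The perplexity starts at 9982.331, which is roughly equivalent to picking the next word at random from about 9,982 words. By the end of training, the effective number of candidate words has dropped to about 237 (test perplexity 236.948).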
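The starting value is close to `VOCAB_SIZE` for a reason: a model that assigns every one of the 10,000 words the same probability has a per-word loss of ln(10000), about 9.21, and `run_epoch` reports the exponential of the average per-word loss. The short check below works through that arithmetic using the logged numbers from this post. The variable names are chosen for this example only.

```python
import math

VOCAB_SIZE = 10000

# Per-word cross-entropy of a uniform guess over the whole vocabulary.
uniform_loss = -math.log(1.0 / VOCAB_SIZE)
uniform_perplexity = math.exp(uniform_loss)

# Average per-word losses implied by the logged perplexities.
first_loss = math.log(9982.331)   # first training log line
final_loss = math.log(236.948)    # final test perplexity

print(uniform_loss, uniform_perplexity)
print(first_loss, final_loss)
```

Going from a perplexity near 10,000 to about 237 therefore corresponds to lowering the average per-word loss, not to shrinking the vocabulary itself.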

In iteration: 1

After 0 steps, perplexity is 9982.331

After 100 steps, perplexity is 1373.757

After 200 steps, perplexity is 1014.514

After 300 steps, perplexity is 866.278

After 400 steps, perplexity is 765.193

After 500 steps, perplexity is 693.194

After 600 steps, perplexity is 640.376

After 700 steps, perplexity is 595.449

After 800 steps, perplexity is 555.005

After 900 steps, perplexity is 523.647

After 1000 steps, perplexity is 499.572

After 1100 steps, perplexity is 476.513

After 1200 steps, perplexity is 457.129

After 1300 steps, perplexity is 439.515

Epoch: 1 Validation Perplexity: 293.073

In iteration: 2

After 0 steps, perplexity is 378.070

After 100 steps, perplexity is 265.294

After 200 steps, perplexity is 270.458

After 300 steps, perplexity is 271.947

After 400 steps, perplexity is 268.950

After 500 steps, perplexity is 266.594

After 600 steps, perplexity is 266.106

After 700 steps, perplexity is 263.804

After 800 steps, perplexity is 259.322

After 900 steps, perplexity is 256.499

After 1000 steps, perplexity is 254.953

After 1100 steps, perplexity is 251.484

After 1200 steps, perplexity is 248.905

After 1300 steps, perplexity is 246.169

Epoch: 2 Validation Perplexity: 243.809

Test: Test Perplexity: 236.948