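Repost: LAV Filter Source Code Analysis

1: Overall Structure

LAV Filter is a piece of software for splitting (demuxing) and decoding video. Its splitter wraps libavformat from FFmpeg, and its decoders wrap libavcodec from FFmpeg. It supports a very wide range of audio and video formats.

The source code is available on GitHub and Google Code: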

https://github.com/Nevcairiel/LAVFilters

http://code.google.com/p/lavfilters/

This article analyzes the overall architecture of the LAV Filter source code.

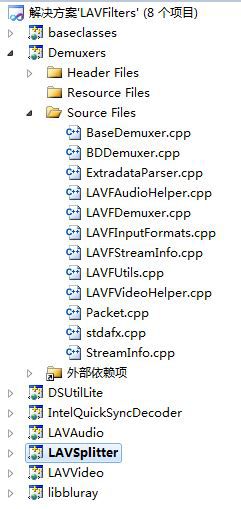

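After fetching the LAV Filter source code with git and opening it in VC 2010, the directory structure looks as shown in the figure:

The solution consists of 8 projects. Here are the ones I currently know something about:

baseclasses: the DirectShow base classes. They also ship with the DirectShow SDK and are provided by Microsoft to simplify DirectShow development.

Demuxers: the demuxing base classes. LAVSplitter calls their methods to do the actual demuxing.

LAVAudio: the audio decoder filter. It wraps libavcodec.

LAVSplitter: the demuxer (splitter) filter. It wraps libavformat.

LAVVideo: the video decoder filter. It wraps libavcodec.

libbluray: Blu-ray support.

The projects shown in brown in the original post are the most important ones and are the focus of this analysis.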

2: LAV Splitter

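The best-known part of LAV Filter is LAV Splitter. It supports Matroska/WebM, MPEG-TS/PS, MP4/MOV, FLV, OGM/OGG, AVI, and other formats, and it ships inside many video players (Baofeng Storm Player and the like).

This section analyzes its source code. Before diving in, let's see what it looks like in use.

Open any video file in GraphEdit and you will see the LAV filters in the graph:

Right-click the filter to open its property pages, as shown:

Settings page:

Supported input formats:

Now let's look at its source code in VC 2010:

Where to start? With the DirectShow registration functions, which live in dllmain.cpp. The meaning of the relevant parts is noted in comments. As the code shows, it is no different from an ordinary DirectShow filter.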

dllmain.cpp

    /*
     *      Copyright (C) 2010-2013 Hendrik Leppkes
     *      http://www.1f0.de
     *
     *  This program is free software; you can redistribute it and/or modify
     *  it under the terms of the GNU General Public License as published by
     *  the Free Software Foundation; either version 2 of the License, or
     *  (at your option) any later version.
     *
     *  This program is distributed in the hope that it will be useful,
     *  but WITHOUT ANY WARRANTY; without even the implied warranty of
     *  MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the
     *  GNU General Public License for more details.
     *
     *  You should have received a copy of the GNU General Public License along
     *  with this program; if not, write to the Free Software Foundation, Inc.,
     *  51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.
     */

    // Based on the SampleParser Template by GDCL
    // --------------------------------------------------------------------------------
    // Copyright (c) GDCL 2004. All Rights Reserved.
    // You are free to re-use this as the basis for your own filter development,
    // provided you retain this copyright notice in the source.
    // http://www.gdcl.co.uk
    // --------------------------------------------------------------------------------

    // Various definitions...
    #include "stdafx.h"

    // Initialize the GUIDs
    #include <InitGuid.h>

    #include <qnetwork.h>
    #include "LAVSplitter.h"
    #include "moreuuids.h"

    #include "registry.h"
    #include "IGraphRebuildDelegate.h"

    // The GUID we use to register the splitter media types
    DEFINE_GUID(MEDIATYPE_LAVSplitter,
      0x9c53931c, 0x7d5a, 0x4a75, 0xb2, 0x6f, 0x4e, 0x51, 0x65, 0x4d, 0xb2, 0xc0);

    // --- COM factory table and registration code --------------
    // Registration info (media types)
    const AMOVIESETUP_MEDIATYPE sudMediaTypes[] = {
      { &MEDIATYPE_Stream, &MEDIASUBTYPE_NULL },
    };

    // Registration info (pins)
    const AMOVIESETUP_PIN sudOutputPins[] =
    {
      {
        L"Output",            // pin name
        FALSE,                // is rendered?
        TRUE,                 // is output?
        FALSE,                // zero instances allowed?
        TRUE,                 // many instances allowed?
        &CLSID_NULL,          // connects to filter (for bridge pins)
        NULL,                 // connects to pin (for bridge pins)
        0,                    // count of registered media types
        NULL                  // list of registered media types
      },
      {
        L"Input",             // pin name
        FALSE,                // is rendered?
        FALSE,                // is output?
        FALSE,                // zero instances allowed?
        FALSE,                // many instances allowed?
        &CLSID_NULL,          // connects to filter (for bridge pins)
        NULL,                 // connects to pin (for bridge pins)
        1,                    // count of registered media types
        &sudMediaTypes[0]     // list of registered media types
      }
    };

    // Registration info (name etc.)
    // CLAVSplitter
    const AMOVIESETUP_FILTER sudFilterReg =
    {
      &__uuidof(CLAVSplitter),        // filter clsid
      L"LAV Splitter",                // filter name
      MERIT_PREFERRED + 4,            // merit
      2,                              // count of registered pins
      sudOutputPins,                  // list of pins to register
      CLSID_LegacyAmFilterCategory
    };

    // Registration info (name etc.)
    // CLAVSplitterSource
    const AMOVIESETUP_FILTER sudFilterRegSource =
    {
      &__uuidof(CLAVSplitterSource),  // filter clsid
      L"LAV Splitter Source",         // filter name
      MERIT_PREFERRED + 4,            // merit
      1,                              // count of registered pins
      sudOutputPins,                  // list of pins to register
      CLSID_LegacyAmFilterCategory
    };

    // --- COM factory table and registration code --------------

    // DirectShow base class COM factory requires this table,
    // declaring all the COM objects in this DLL
    // Note: the name g_Templates is fixed
    CFactoryTemplate g_Templates[] = {
      // one entry for each CoCreate-able object
      {
        sudFilterReg.strName,
        sudFilterReg.clsID,
        CreateInstance<CLAVSplitter>,
        CLAVSplitter::StaticInit,
        &sudFilterReg
      },
      {
        sudFilterRegSource.strName,
        sudFilterRegSource.clsID,
        CreateInstance<CLAVSplitterSource>,
        NULL,
        &sudFilterRegSource
      },
      // This entry is for the property page.
      // Property pages
      {
        L"LAV Splitter Properties",
        &CLSID_LAVSplitterSettingsProp,
        CreateInstance<CLAVSplitterSettingsProp>,
        NULL, NULL
      },
      {
        L"LAV Splitter Input Formats",
        &CLSID_LAVSplitterFormatsProp,
        CreateInstance<CLAVSplitterFormatsProp>,
        NULL, NULL
      }
    };
    int g_cTemplates = sizeof(g_Templates) / sizeof(g_Templates[0]);

    // self-registration entrypoint
    STDAPI DllRegisterServer()
    {
      std::list<LPCWSTR> chkbytes;

      // BluRay
      chkbytes.clear();
      chkbytes.push_back(L"0,4,,494E4458"); // INDX (index.bdmv)
      chkbytes.push_back(L"0,4,,4D4F424A"); // MOBJ (MovieObject.bdmv)
      chkbytes.push_back(L"0,4,,4D504C53"); // MPLS
      RegisterSourceFilter(__uuidof(CLAVSplitterSource),
        MEDIASUBTYPE_LAVBluRay, chkbytes, NULL);

      // base classes will handle registration using the factory template table
      return AMovieDllRegisterServer2(true);
    }

    STDAPI DllUnregisterServer()
    {
      UnRegisterSourceFilter(MEDIASUBTYPE_LAVBluRay);

      // base classes will handle de-registration using the factory template table
      return AMovieDllRegisterServer2(false);
    }

    // if we declare the correct C runtime entrypoint and then forward it to the DShow base
    // classes we will be sure that both the C/C++ runtimes and the base classes are initialized
    // correctly
    extern "C" BOOL WINAPI DllEntryPoint(HINSTANCE, ULONG, LPVOID);
    BOOL WINAPI DllMain(HANDLE hDllHandle, DWORD dwReason, LPVOID lpReserved)
    {
      return DllEntryPoint(reinterpret_cast<HINSTANCE>(hDllHandle), dwReason, lpReserved);
    }

    void CALLBACK OpenConfiguration(HWND hwnd, HINSTANCE hinst, LPSTR lpszCmdLine, int nCmdShow)
    {
      HRESULT hr = S_OK;
      CUnknown *pInstance = CreateInstance<CLAVSplitter>(NULL, &hr);
      IBaseFilter *pFilter = NULL;
      pInstance->NonDelegatingQueryInterface(IID_IBaseFilter, (void **)&pFilter);
      if (pFilter) {
        pFilter->AddRef();
        CBaseDSPropPage::ShowPropPageDialog(pFilter);
      }
      delete pInstance;
    }
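The strings passed to RegisterSourceFilter() above, such as `0,4,,494E4458`, follow DirectShow's check-byte convention of `offset,length,mask,value`, where an empty mask means every bit is compared. The sketch below shows how such a pattern could be tested against the first bytes of a file. It is only an illustration: `hex_to_bytes` and `matches_check_bytes` are hypothetical helpers, not part of LAV Filters or DirectShow, and the sketch handles a single pattern with a non-negative offset only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// Decode a hex string such as "494E4458" into raw bytes.
std::vector<std::uint8_t> hex_to_bytes(const std::string& hex) {
    assert(hex.size() % 2 == 0);  // sketch-level precondition: whole bytes only
    std::vector<std::uint8_t> out;
    for (std::size_t i = 0; i + 1 < hex.size(); i += 2) {
        out.push_back(static_cast<std::uint8_t>(
            std::stoul(hex.substr(i, 2), nullptr, 16)));
    }
    return out;
}

// Hypothetical helper: test one "offset,length,mask,value" pattern against file data.
bool matches_check_bytes(const std::string& pattern,
                         const std::vector<std::uint8_t>& data) {
    // Split the pattern on commas; the mask field may be empty.
    std::vector<std::string> fields;
    std::stringstream ss(pattern);
    std::string field;
    while (std::getline(ss, field, ',')) fields.push_back(field);
    if (fields.size() != 4 || fields[0].empty() || fields[1].empty()) return false;

    const std::size_t offset = std::stoul(fields[0]);
    const std::size_t length = std::stoul(fields[1]);
    const std::vector<std::uint8_t> value = hex_to_bytes(fields[3]);
    // An empty mask means "compare every bit".
    const std::vector<std::uint8_t> mask =
        fields[2].empty() ? std::vector<std::uint8_t>(length, 0xFF)
                          : hex_to_bytes(fields[2]);

    if (value.size() != length || mask.size() != length) return false;
    if (offset + length > data.size()) return false;

    for (std::size_t i = 0; i < length; ++i) {
        if ((data[offset + i] & mask[i]) != value[i]) return false;
    }
    return true;
}
```

With this reading, the three registered patterns say: a file whose first four bytes are `INDX`, `MOBJ`, or `MPLS` should be opened by LAV Splitter Source.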

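Now for the main topic: the definition of the core splitter (demuxer) class, CLAVSplitter, in LAVSplitter.h. At first glance this class is impressive: it inherits from a remarkably long list of base classes. Setting that aside for now, let's see what functions it declares. The main ones are annotated with comments. Note that a second class, CLAVSplitterSource, derives from CLAVSplitter.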

LAVSplitter.h

/* * Copyright (C) 2010-2013 Hendrik Leppkes * http://www.1f0.de * * This program is free software; you can redistribute it and/or modify * it under the terms of the GNU General Public License as published by * the Free Software Foundation; either version 2 of the License, or * (at your option) any later version. * * This program is distributed in the hope that it will be useful, * but WITHOUT ANY WARRANTY; without even the implied warranty of * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the * GNU General Public License for more details. * * You should have received a copy of the GNU General Public License along * with this program; if not, write to the Free Software Foundation, Inc., * 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA. * * Initial design and concept by Gabest and the MPC-HC Team, copyright under GPLv2 * Contributions by Ti-BEN from the XBMC DSPlayer Project, also under GPLv2 */ #pragma once #include <string> #include <list> #include <set> #include <vector> #include <map> #include "PacketQueue.h" #include "BaseDemuxer.h" #include "LAVSplitterSettingsInternal.h" #include "SettingsProp.h" #include "IBufferInfo.h" #include "ISpecifyPropertyPages2.h" #include "LAVSplitterTrayIcon.h" #define LAVF_REGISTRY_KEY L"Software\\LAV\\Splitter" #define LAVF_REGISTRY_KEY_FORMATS LAVF_REGISTRY_KEY L"\\Formats" #define LAVF_LOG_FILE L"LAVSplitter.txt" #define MAX_PTS_SHIFT 50000000i64 class CLAVOutputPin; class CLAVInputPin; #ifdef _MSC_VER #pragma warning(disable: 4355) #endif //核心解复用(分离器) //暴漏的接口在ILAVFSettings中 [uuid("171252A0-8820-4AFE-9DF8-5C92B2D66B04")] class CLAVSplitter : public CBaseFilter , public CCritSec , protected CAMThread , public IFileSourceFilter , public IMediaSeeking , public IAMStreamSelect , public IAMOpenProgress , public ILAVFSettingsInternal , public ISpecifyPropertyPages2 , public IObjectWithSite , public IBufferInfo { public: CLAVSplitter(LPUNKNOWN pUnk, HRESULT* phr); virtual ~CLAVSplitter(); static void CALLBACK 
StaticInit(BOOL bLoading, const CLSID *clsid); // IUnknown // DECLARE_IUNKNOWN; //暴露接口,使外部程序可以QueryInterface,关键! //翻译(“没有代表的方式查询接口”) STDMETHODIMP NonDelegatingQueryInterface(REFIID riid, void** ppv); // CBaseFilter methods //输入是一个,输出就不一定了! int GetPinCount(); CBasePin *GetPin(int n); STDMETHODIMP GetClassID(CLSID* pClsID); STDMETHODIMP Stop(); STDMETHODIMP Pause(); STDMETHODIMP Run(REFERENCE_TIME tStart); STDMETHODIMP JoinFilterGraph(IFilterGraph * pGraph, LPCWSTR pName); // IFileSourceFilter // 源Filter的接口方法 STDMETHODIMP Load(LPCOLESTR pszFileName, const AM_MEDIA_TYPE * pmt); STDMETHODIMP GetCurFile(LPOLESTR *ppszFileName, AM_MEDIA_TYPE *pmt); // IMediaSeeking STDMETHODIMP GetCapabilities(DWORD* pCapabilities); STDMETHODIMP CheckCapabilities(DWORD* pCapabilities); STDMETHODIMP IsFormatSupported(const GUID* pFormat); STDMETHODIMP QueryPreferredFormat(GUID* pFormat); STDMETHODIMP GetTimeFormat(GUID* pFormat); STDMETHODIMP IsUsingTimeFormat(const GUID* pFormat); STDMETHODIMP SetTimeFormat(const GUID* pFormat); STDMETHODIMP GetDuration(LONGLONG* pDuration); STDMETHODIMP GetStopPosition(LONGLONG* pStop); STDMETHODIMP GetCurrentPosition(LONGLONG* pCurrent); STDMETHODIMP ConvertTimeFormat(LONGLONG* pTarget, const GUID* pTargetFormat, LONGLONG Source, const GUID* pSourceFormat); STDMETHODIMP SetPositions(LONGLONG* pCurrent, DWORD dwCurrentFlags, LONGLONG* pStop, DWORD dwStopFlags); STDMETHODIMP GetPositions(LONGLONG* pCurrent, LONGLONG* pStop); STDMETHODIMP GetAvailable(LONGLONG* pEarliest, LONGLONG* pLatest); STDMETHODIMP SetRate(double dRate); STDMETHODIMP GetRate(double* pdRate); STDMETHODIMP GetPreroll(LONGLONG* pllPreroll); // IAMStreamSelect STDMETHODIMP Count(DWORD *pcStreams); STDMETHODIMP Enable(long lIndex, DWORD dwFlags); STDMETHODIMP Info(long lIndex, AM_MEDIA_TYPE **ppmt, DWORD *pdwFlags, LCID *plcid, DWORD *pdwGroup, WCHAR **ppszName, IUnknown **ppObject, IUnknown **ppUnk); // IAMOpenProgress STDMETHODIMP QueryProgress(LONGLONG *pllTotal, LONGLONG 
*pllCurrent); STDMETHODIMP AbortOperation(); // ISpecifyPropertyPages2 STDMETHODIMP GetPages(CAUUID *pPages); STDMETHODIMP CreatePage(const GUID& guid, IPropertyPage** ppPage); // IObjectWithSite STDMETHODIMP SetSite(IUnknown *pUnkSite); STDMETHODIMP GetSite(REFIID riid, void **ppvSite); // IBufferInfo STDMETHODIMP_(int) GetCount(); STDMETHODIMP GetStatus(int i, int& samples, int& size); STDMETHODIMP_(DWORD) GetPriority(); // ILAVFSettings STDMETHODIMP SetRuntimeConfig(BOOL bRuntimeConfig); STDMETHODIMP GetPreferredLanguages(LPWSTR *ppLanguages); STDMETHODIMP SetPreferredLanguages(LPCWSTR pLanguages); STDMETHODIMP GetPreferredSubtitleLanguages(LPWSTR *ppLanguages); STDMETHODIMP SetPreferredSubtitleLanguages(LPCWSTR pLanguages); STDMETHODIMP_(LAVSubtitleMode) GetSubtitleMode(); STDMETHODIMP SetSubtitleMode(LAVSubtitleMode mode); STDMETHODIMP_(BOOL) GetSubtitleMatchingLanguage(); STDMETHODIMP SetSubtitleMatchingLanguage(BOOL dwMode); STDMETHODIMP_(BOOL) GetPGSForcedStream(); STDMETHODIMP SetPGSForcedStream(BOOL bFlag); STDMETHODIMP_(BOOL) GetPGSOnlyForced(); STDMETHODIMP SetPGSOnlyForced(BOOL bForced); STDMETHODIMP_(int) GetVC1TimestampMode(); STDMETHODIMP SetVC1TimestampMode(int iMode); STDMETHODIMP SetSubstreamsEnabled(BOOL bSubStreams); STDMETHODIMP_(BOOL) GetSubstreamsEnabled(); STDMETHODIMP SetVideoParsingEnabled(BOOL bEnabled); STDMETHODIMP_(BOOL) GetVideoParsingEnabled(); STDMETHODIMP SetFixBrokenHDPVR(BOOL bEnabled); STDMETHODIMP_(BOOL) GetFixBrokenHDPVR(); STDMETHODIMP_(HRESULT) SetFormatEnabled(LPCSTR strFormat, BOOL bEnabled); STDMETHODIMP_(BOOL) IsFormatEnabled(LPCSTR strFormat); STDMETHODIMP SetStreamSwitchRemoveAudio(BOOL bEnabled); STDMETHODIMP_(BOOL) GetStreamSwitchRemoveAudio(); STDMETHODIMP GetAdvancedSubtitleConfig(LPWSTR *ppAdvancedConfig); STDMETHODIMP SetAdvancedSubtitleConfig(LPCWSTR pAdvancedConfig); STDMETHODIMP SetUseAudioForHearingVisuallyImpaired(BOOL bEnabled); STDMETHODIMP_(BOOL) GetUseAudioForHearingVisuallyImpaired(); STDMETHODIMP 
SetMaxQueueMemSize(DWORD dwMaxSize); STDMETHODIMP_(DWORD) GetMaxQueueMemSize(); STDMETHODIMP SetTrayIcon(BOOL bEnabled); STDMETHODIMP_(BOOL) GetTrayIcon(); STDMETHODIMP SetPreferHighQualityAudioStreams(BOOL bEnabled); STDMETHODIMP_(BOOL) GetPreferHighQualityAudioStreams(); STDMETHODIMP SetLoadMatroskaExternalSegments(BOOL bEnabled); STDMETHODIMP_(BOOL) GetLoadMatroskaExternalSegments(); STDMETHODIMP GetFormats(LPSTR** formats, UINT* nFormats); STDMETHODIMP SetNetworkStreamAnalysisDuration(DWORD dwDuration); STDMETHODIMP_(DWORD) GetNetworkStreamAnalysisDuration(); // ILAVSplitterSettingsInternal STDMETHODIMP_(LPCSTR) GetInputFormat() { if (m_pDemuxer) return m_pDemuxer->GetContainerFormat(); return NULL; } STDMETHODIMP_(std::set<FormatInfo>&) GetInputFormats(); STDMETHODIMP_(BOOL) IsVC1CorrectionRequired(); STDMETHODIMP_(CMediaType *) GetOutputMediatype(int stream); STDMETHODIMP_(IFilterGraph *) GetFilterGraph() { if (m_pGraph) { m_pGraph->AddRef(); return m_pGraph; } return NULL; } STDMETHODIMP_(DWORD) GetStreamFlags(DWORD dwStream) { if (m_pDemuxer) return m_pDemuxer->GetStreamFlags(dwStream); return 0; } STDMETHODIMP_(int) GetPixelFormat(DWORD dwStream) { if (m_pDemuxer) return m_pDemuxer->GetPixelFormat(dwStream); return AV_PIX_FMT_NONE; } STDMETHODIMP_(int) GetHasBFrames(DWORD dwStream){ if (m_pDemuxer) return m_pDemuxer->GetHasBFrames(dwStream); return -1; } // Settings helper std::list<std::string> GetPreferredAudioLanguageList(); std::list<CSubtitleSelector> GetSubtitleSelectors(); bool IsAnyPinDrying(); void SetFakeASFReader(BOOL bFlag) { m_bFakeASFReader = bFlag; } protected: // CAMThread enum {CMD_EXIT, CMD_SEEK}; DWORD ThreadProc(); HRESULT DemuxSeek(REFERENCE_TIME rtStart); HRESULT DemuxNextPacket(); HRESULT DeliverPacket(Packet *pPacket); void DeliverBeginFlush(); void DeliverEndFlush(); STDMETHODIMP Close(); STDMETHODIMP DeleteOutputs(); //初始化解复用器 STDMETHODIMP InitDemuxer(); friend class CLAVOutputPin; STDMETHODIMP SetPositionsInternal(void *caller, 
LONGLONG* pCurrent, DWORD dwCurrentFlags, LONGLONG* pStop, DWORD dwStopFlags); public: CLAVOutputPin *GetOutputPin(DWORD streamId, BOOL bActiveOnly = FALSE); STDMETHODIMP RenameOutputPin(DWORD TrackNumSrc, DWORD TrackNumDst, std::vector<CMediaType> pmts); STDMETHODIMP UpdateForcedSubtitleMediaType(); STDMETHODIMP CompleteInputConnection(); STDMETHODIMP BreakInputConnection(); protected: //相关的参数设置 STDMETHODIMP LoadDefaults(); STDMETHODIMP ReadSettings(HKEY rootKey); STDMETHODIMP LoadSettings(); STDMETHODIMP SaveSettings(); //创建图标 STDMETHODIMP CreateTrayIcon(); protected: CLAVInputPin *m_pInput; private: CCritSec m_csPins; //用vector存储输出PIN(解复用的时候是不确定的) std::vector<CLAVOutputPin *> m_pPins; //活动的 std::vector<CLAVOutputPin *> m_pActivePins; //不用的 std::vector<CLAVOutputPin *> m_pRetiredPins; std::set<DWORD> m_bDiscontinuitySent; std::wstring m_fileName; std::wstring m_processName; //有很多纯虚函数的基本解复用类 //注意:绝大部分信息都是从这获得的 //这里的信息是由其派生类从FFMPEG中获取到的 CBaseDemuxer *m_pDemuxer; BOOL m_bPlaybackStarted; BOOL m_bFakeASFReader; // Times REFERENCE_TIME m_rtStart, m_rtStop, m_rtCurrent, m_rtNewStart, m_rtNewStop; REFERENCE_TIME m_rtOffset; double m_dRate; BOOL m_bStopValid; // Seeking REFERENCE_TIME m_rtLastStart, m_rtLastStop; std::set<void *> m_LastSeekers; // flushing bool m_fFlushing; CAMEvent m_eEndFlush; std::set<FormatInfo> m_InputFormats; // Settings //设置 struct Settings { BOOL TrayIcon; std::wstring prefAudioLangs; std::wstring prefSubLangs; std::wstring subtitleAdvanced; LAVSubtitleMode subtitleMode; BOOL PGSForcedStream; BOOL PGSOnlyForced; int vc1Mode; BOOL substreams; BOOL MatroskaExternalSegments; BOOL StreamSwitchRemoveAudio; BOOL ImpairedAudio; BOOL PreferHighQualityAudio; DWORD QueueMaxSize; DWORD NetworkAnalysisDuration; std::map<std::string, BOOL> formats; } m_settings; BOOL m_bRuntimeConfig; IUnknown *m_pSite; CBaseTrayIcon *m_pTrayIcon; }; [uuid("B98D13E7-55DB-4385-A33D-09FD1BA26338")] class CLAVSplitterSource : public CLAVSplitter { public: // construct only via 
class factory CLAVSplitterSource(LPUNKNOWN pUnk, HRESULT* phr); virtual ~CLAVSplitterSource(); // IUnknown DECLARE_IUNKNOWN; //暴露接口,使外部程序可以QueryInterface,关键! //翻译(“没有代表的方式查询接口”) STDMETHODIMP NonDelegatingQueryInterface(REFIID riid, void** ppv); };

Let's start with the interface query function, NonDelegatingQueryInterface():

    // Expose interfaces so that external code can QueryInterface for them. Key!
    STDMETHODIMP CLAVSplitter::NonDelegatingQueryInterface(REFIID riid, void** ppv)
    {
      CheckPointer(ppv, E_POINTER);

      *ppv = NULL;

      if (m_pDemuxer && (riid == __uuidof(IKeyFrameInfo) || riid == __uuidof(ITrackInfo) || riid == IID_IAMExtendedSeeking || riid == IID_IAMMediaContent)) {
        return m_pDemuxer->QueryInterface(riid, ppv);
      }
      // An unusual way to write it, but the meaning is the same
      return
        QI(IMediaSeeking)
        QI(IAMStreamSelect)
        QI(ISpecifyPropertyPages)
        QI(ISpecifyPropertyPages2)
        QI2(ILAVFSettings)
        QI2(ILAVFSettingsInternal)
        QI(IObjectWithSite)
        QI(IBufferInfo)
        __super::NonDelegatingQueryInterface(riid, ppv);
    }

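The way NonDelegatingQueryInterface() is written here is certainly unusual, but it does the usual job: it returns a different interface pointer depending on the REFIID passed in. Interfaces such as IKeyFrameInfo and ITrackInfo are forwarded to the demuxer when one exists. I won't go into more detail here.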
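The QI(...) QI(...) chain only compiles if each macro expands to the first half of a conditional expression ending in `:`, so the whole chain becomes one nested `?:` whose last operand is the base-class call. The macro definitions themselves are not quoted in this article, so the following is a minimal portable sketch of that pattern under stated assumptions: strings stand in for GUIDs, and every name in it (`QI_SKETCH`, `id_of`, `get_interface`, the `...Sketch` types) is hypothetical.

```cpp
#include <cassert>
#include <cstring>

// Hypothetical stand-ins for COM interfaces; string ids replace GUIDs for brevity.
struct ISeekSketch   { virtual ~ISeekSketch() = default; };
struct ISelectSketch { virtual ~ISelectSketch() = default; };

template <class T> const char* id_of();
template <> inline const char* id_of<ISeekSketch>()   { return "ISeek"; }
template <> inline const char* id_of<ISelectSketch>() { return "ISelect"; }

const int kOk = 0;
const int kNoInterface = -1;

// Store the interface pointer and report success.
inline int get_interface(void* p, void** ppv) { *ppv = p; return kOk; }

// Each use expands to "condition ? result :", so consecutive uses
// nest into a single conditional expression.
#define QI_SKETCH(i) \
    (std::strcmp(riid, id_of<i>()) == 0) ? get_interface(static_cast<i*>(this), ppv) :

class SplitterSketch : public ISeekSketch, public ISelectSketch {
public:
    int query(const char* riid, void** ppv) {
        assert(riid != nullptr && ppv != nullptr);
        *ppv = nullptr;
        return
            QI_SKETCH(ISeekSketch)
            QI_SKETCH(ISelectSketch)
            kNoInterface;  // the real code falls back to the base class here
    }
};
```

The `static_cast` to the specific interface matters with multiple inheritance: each base subobject can sit at a different address, so the caller must receive the pointer adjusted for the interface it asked for.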

Next, the Load() function:

    // IFileSourceFilter
    // Open
    STDMETHODIMP CLAVSplitter::Load(LPCOLESTR pszFileName, const AM_MEDIA_TYPE * pmt)
    {
      CheckPointer(pszFileName, E_POINTER);

      m_bPlaybackStarted = FALSE;
      m_fileName = std::wstring(pszFileName);

      HRESULT hr = S_OK;
      SAFE_DELETE(m_pDemuxer);
      LPWSTR extension = PathFindExtensionW(pszFileName);

      DbgLog((LOG_TRACE, 10, L"::Load(): Opening file '%s' (extension: %s)", pszFileName, extension));

      // BDMV uses the BD demuxer, everything else LAVF
      if (_wcsicmp(extension, L".bdmv") == 0 || _wcsicmp(extension, L".mpls") == 0) {
        m_pDemuxer = new CBDDemuxer(this, this);
      } else {
        m_pDemuxer = new CLAVFDemuxer(this, this);
      }
      // Open
      if(FAILED(hr = m_pDemuxer->Open(pszFileName))) {
        SAFE_DELETE(m_pDemuxer);
        return hr;
      }
      m_pDemuxer->AddRef();

      return InitDemuxer();
    }

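Note the CLAVSplitter member m_pDemuxer here. It is a pointer to CBaseDemuxer, so CLAVSplitter is really calling methods declared on CBaseDemuxer, and the concrete demuxer behind the pointer decides what actually happens.

The logic in the code is:

1. Find the file extension.

2. If the extension is ".bdmv" or ".mpls", m_pDemuxer points to a CBDDemuxer (per the code comment, the BD demuxer used for Blu-ray BDMV files); in every other case m_pDemuxer points to a CLAVFDemuxer.

3. m_pDemuxer->Open() is then called. If it fails, the demuxer is deleted and the error is returned.

4. Finally, InitDemuxer() is called.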
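The steps above amount to picking a concrete implementation behind a base-class pointer based on the file extension. Here is a minimal portable sketch of that selection. The class and function names are hypothetical stand-ins (the real code uses CBaseDemuxer, CBDDemuxer, CLAVFDemuxer, PathFindExtensionW, and _wcsicmp), and narrow strings replace wide strings to keep it short.

```cpp
#include <cassert>
#include <cctype>
#include <memory>
#include <string>

// Hypothetical base class standing in for CBaseDemuxer.
struct DemuxerSketch {
    virtual ~DemuxerSketch() = default;
    virtual const char* name() const = 0;
};
struct BlurayDemuxerSketch : DemuxerSketch {
    const char* name() const override { return "bd"; }
};
struct LavfDemuxerSketch : DemuxerSketch {
    const char* name() const override { return "lavf"; }
};

// Return the lower-cased extension including the dot, or "" if there is none.
std::string lower_extension(const std::string& path) {
    const std::string::size_type dot = path.find_last_of('.');
    const std::string::size_type sep = path.find_last_of("/\\");
    // A dot inside a directory name does not count as an extension.
    if (dot == std::string::npos || (sep != std::string::npos && dot < sep)) return "";
    std::string ext = path.substr(dot);
    for (char& c : ext) {
        c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
    }
    return ext;
}

// Blu-ray index and playlist files get the BD demuxer, everything else the generic one.
std::unique_ptr<DemuxerSketch> make_demuxer(const std::string& path) {
    assert(!path.empty());  // plays the role of the CheckPointer guard in Load()
    const std::string ext = lower_extension(path);
    if (ext == ".bdmv" || ext == ".mpls") {
        return std::unique_ptr<DemuxerSketch>(new BlurayDemuxerSketch());
    }
    return std::unique_ptr<DemuxerSketch>(new LavfDemuxerSketch());
}
```

Because the caller only ever holds the base-class pointer, the rest of the splitter does not need to know which demuxer was chosen.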

At this point we should look inside m_pDemuxer->Open(). Let's first consider the case where m_pDemuxer points to a CLAVFDemuxer.

    // Demuxer Functions
    // Open (just a wrapper)
    STDMETHODIMP CLAVFDemuxer::Open(LPCOLESTR pszFileName)
    {
      return OpenInputStream(NULL, pszFileName, NULL, TRUE);
    }

It is just a thin wrapper, so let's keep digging.

    // The actual open, using FFmpeg
    STDMETHODIMP CLAVFDemuxer::OpenInputStream(AVIOContext *byteContext, LPCOLESTR pszFileName, const char *format, BOOL bForce)
    {
      CAutoLock lock(m_pLock);
      HRESULT hr = S_OK;

      int ret; // return code from avformat functions

      // Convert the filename from wchar to char for avformat
      char fileName[4100] = {0};
      if (pszFileName) {
        ret = WideCharToMultiByte(CP_UTF8, 0, pszFileName, -1, fileName, 4096, NULL, NULL);
      }

      if (_strnicmp("mms:", fileName, 4) == 0) {
        memmove(fileName+1, fileName, strlen(fileName));
        memcpy(fileName, "mmsh", 4);
      }

      AVIOInterruptCB cb = {avio_interrupt_cb, this};

    trynoformat:
      // Create the avformat_context
      // FFmpeg function
      m_avFormat = avformat_alloc_context();
      m_avFormat->pb = byteContext;
      m_avFormat->interrupt_callback = cb;

      if (m_avFormat->pb)
        m_avFormat->flags |= AVFMT_FLAG_CUSTOM_IO;

      LPWSTR extension = pszFileName ? PathFindExtensionW(pszFileName) : NULL;

      AVInputFormat *inputFormat = NULL;
      // If a format was specified
      if (format) {
        // Look it up
        inputFormat = av_find_input_format(format);
      } else if (pszFileName) {
        LPWSTR extension = PathFindExtensionW(pszFileName);
        for (int i = 0; i < countof(wszImageExtensions); i++) {
          if (_wcsicmp(extension, wszImageExtensions[i]) == 0) {
            if (byteContext) {
              inputFormat = av_find_input_format("image2pipe");
            } else {
              inputFormat = av_find_input_format("image2");
            }
            break;
          }
        }
        for (int i = 0; i < countof(wszBlockedExtensions); i++) {
          if (_wcsicmp(extension, wszBlockedExtensions[i]) == 0) {
            goto done;
          }
        }
      }

      // Disable loading of external mkv segments, if required
      if (!m_pSettings->GetLoadMatroskaExternalSegments())
        m_avFormat->flags |= AVFMT_FLAG_NOEXTERNAL;

      m_timeOpening = time(NULL);
      // The actual open
      ret = avformat_open_input(&m_avFormat, fileName, inputFormat, NULL);
      // An error occurred
      if (ret < 0) {
        DbgLog((LOG_ERROR, 0, TEXT("::OpenInputStream(): avformat_open_input failed (%d)"), ret));
        if (format) {
          DbgLog((LOG_ERROR, 0, TEXT(" -> trying again without specific format")));
          format = NULL;
          // The actual close
          avformat_close_input(&m_avFormat);
          goto trynoformat;
        }
        goto done;
      }
      DbgLog((LOG_TRACE, 10, TEXT("::OpenInputStream(): avformat_open_input opened file of type '%S' (took %I64d seconds)"), m_avFormat->iformat->name, time(NULL) - m_timeOpening));
      m_timeOpening = 0;
      // Initialize AVFormat
      CHECK_HR(hr = InitAVFormat(pszFileName, bForce));

      return S_OK;
    done:
      CleanupAVFormat();
      return E_FAIL;
    }
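One small detail in OpenInputStream() is the scheme rewrite near the top: a URL starting with "mms:" (compared case-insensitively) has its scheme replaced with "mmsh" by shifting the buffer one byte to the right and overwriting the first four characters. The same transformation can be sketched with std::string. The function names here are hypothetical and exist only for illustration.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// Case-insensitive prefix test, similar in spirit to _strnicmp(prefix, s, n) == 0.
bool starts_with_nocase(const std::string& s, const std::string& prefix) {
    if (s.size() < prefix.size()) return false;
    for (std::string::size_type i = 0; i < prefix.size(); ++i) {
        const int a = std::tolower(static_cast<unsigned char>(s[i]));
        const int b = std::tolower(static_cast<unsigned char>(prefix[i]));
        if (a != b) return false;
    }
    return true;
}

// Replace a leading "mms" scheme with "mmsh"; leave every other URL untouched.
std::string rewrite_mms_scheme(const std::string& url) {
    if (!starts_with_nocase(url, "mms:")) return url;
    // Keep the ':' and everything after it, and swap in the new scheme name.
    return "mmsh" + url.substr(3);
}
```

Unlike the fixed-size buffer in the original, the string version cannot overflow, at the cost of an allocation.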

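Seeing this function feels like the clouds parting: it is full of FFmpeg API calls. The most important one is avformat_open_input(), which actually opens the file. If the open fails and a specific format had been requested, avformat_close_input() is called and the open is retried without a format; otherwise the function cleans up via CleanupAVFormat() and returns E_FAIL.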
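The "retry without a format" behaviour is implemented in the original with a goto back to the trynoformat label. As a rough illustration of the same control flow without goto, here is a sketch that takes the opener as a callback. `OpenFn` and `open_with_fallback` are hypothetical names; in the real code the opener is avformat_open_input() and a NULL format means FFmpeg probes the input itself.

```cpp
#include <cassert>
#include <functional>
#include <string>

// Hypothetical opener: returns true on success.
// An empty format string means "let the library probe the input".
using OpenFn = std::function<bool(const std::string& file, const std::string& format)>;

// Try the requested format first; if that fails, retry once with auto-detection.
// Returns the number of open attempts made, or 0 if every attempt failed.
int open_with_fallback(const OpenFn& open, const std::string& file, std::string format) {
    assert(open);  // a callable must be supplied
    int attempts = 0;
    for (;;) {
        ++attempts;
        if (open(file, format)) return attempts;
        if (format.empty()) return 0;  // nothing left to relax, give up
        format.clear();                // mirrors "format = NULL; goto trynoformat;"
    }
}
```

The loop runs at most twice, because the second pass always has an empty format and therefore either succeeds or returns 0.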

Finally, InitAVFormat() is called:

//初始化AVFormat STDMETHODIMP CLAVFDemuxer::InitAVFormat(LPCOLESTR pszFileName, BOOL bForce) { HRESULT hr = S_OK; const char *format = NULL; //获取InputFormat信息(,短名称,长名称) lavf_get_iformat_infos(m_avFormat->iformat, &format, NULL); if (!bForce && (!format || !m_pSettings->IsFormatEnabled(format))) { DbgLog((LOG_TRACE, 20, L"::InitAVFormat() - format of type '%S' disabled, failing", format ? format : m_avFormat->iformat->name)); return E_FAIL; } m_pszInputFormat = format ? format : m_avFormat->iformat->name; m_bVC1SeenTimestamp = FALSE; LPWSTR extension = pszFileName ? PathFindExtensionW(pszFileName) : NULL; m_bMatroska = (_strnicmp(m_pszInputFormat, "matroska", 8) == 0); m_bOgg = (_strnicmp(m_pszInputFormat, "ogg", 3) == 0); m_bAVI = (_strnicmp(m_pszInputFormat, "avi", 3) == 0); m_bMPEGTS = (_strnicmp(m_pszInputFormat, "mpegts", 6) == 0); m_bMPEGPS = (_stricmp(m_pszInputFormat, "mpeg") == 0); m_bRM = (_stricmp(m_pszInputFormat, "rm") == 0); m_bPMP = (_stricmp(m_pszInputFormat, "pmp") == 0); m_bMP4 = (_stricmp(m_pszInputFormat, "mp4") == 0); m_bTSDiscont = m_avFormat->iformat->flags & AVFMT_TS_DISCONT; WCHAR szProt[24] = L"file"; if (pszFileName) { DWORD dwNumChars = 24; hr = UrlGetPart(pszFileName, szProt, &dwNumChars, URL_PART_SCHEME, 0); if (SUCCEEDED(hr) && dwNumChars && (_wcsicmp(szProt, L"file") != 0)) { m_avFormat->flags |= AVFMT_FLAG_NETWORK; DbgLog((LOG_TRACE, 10, TEXT("::InitAVFormat(): detected network protocol: %s"), szProt)); } } // TODO: make both durations below configurable // decrease analyze duration for network streams if (m_avFormat->flags & AVFMT_FLAG_NETWORK || (m_avFormat->flags & AVFMT_FLAG_CUSTOM_IO && !m_avFormat->pb->seekable)) { // require at least 0.2 seconds m_avFormat->max_analyze_duration = max(m_pSettings->GetNetworkStreamAnalysisDuration() * 1000, 200000); } else { // And increase it for mpeg-ts/ps files if (m_bMPEGTS || m_bMPEGPS) m_avFormat->max_analyze_duration = 10000000; } av_opt_set_int(m_avFormat, "correct_ts_overflow", !m_pBluRay, 
0); if (m_bMatroska) m_avFormat->flags |= AVFMT_FLAG_KEEP_SIDE_DATA; m_timeOpening = time(NULL); //获取媒体流信息 int ret = avformat_find_stream_info(m_avFormat, NULL); if (ret < 0) { DbgLog((LOG_ERROR, 0, TEXT("::InitAVFormat(): av_find_stream_info failed (%d)"), ret)); goto done; } DbgLog((LOG_TRACE, 10, TEXT("::InitAVFormat(): avformat_find_stream_info finished, took %I64d seconds"), time(NULL) - m_timeOpening)); m_timeOpening = 0; // Check if this is a m2ts in a BD structure, and if it is, read some extra stream properties out of the CLPI files if (m_pBluRay) { m_pBluRay->ProcessClipLanguages(); } else if (pszFileName && m_bMPEGTS) { CheckBDM2TSCPLI(pszFileName); } SAFE_CO_FREE(m_stOrigParser); m_stOrigParser = (enum AVStreamParseType *)CoTaskMemAlloc(m_avFormat->nb_streams * sizeof(enum AVStreamParseType)); if (!m_stOrigParser) return E_OUTOFMEMORY; for(unsigned int idx = 0; idx < m_avFormat->nb_streams; ++idx) { AVStream *st = m_avFormat->streams[idx]; // Disable full stream parsing for these formats if (st->need_parsing == AVSTREAM_PARSE_FULL) { if (st->codec->codec_id == AV_CODEC_ID_DVB_SUBTITLE) { st->need_parsing = AVSTREAM_PARSE_NONE; } } if (m_bOgg && st->codec->codec_id == AV_CODEC_ID_H264) { st->need_parsing = AVSTREAM_PARSE_FULL; } // Create the parsers with the appropriate flags init_parser(m_avFormat, st); UpdateParserFlags(st); #ifdef DEBUG AVProgram *streamProg = av_find_program_from_stream(m_avFormat, NULL, idx); DbgLog((LOG_TRACE, 30, L"Stream %d (pid %d) - program: %d, codec: %S; parsing: %S;", idx, st->id, streamProg ? 
streamProg->pmt_pid : -1, avcodec_get_name(st->codec->codec_id), lavf_get_parsing_string(st->need_parsing))); #endif m_stOrigParser[idx] = st->need_parsing; if ((st->codec->codec_id == AV_CODEC_ID_DTS && st->codec->codec_tag == 0xA2) || (st->codec->codec_id == AV_CODEC_ID_EAC3 && st->codec->codec_tag == 0xA1)) st->disposition |= LAVF_DISPOSITION_SECONDARY_AUDIO; UpdateSubStreams(); if (st->codec->codec_type == AVMEDIA_TYPE_ATTACHMENT && (st->codec->codec_id == AV_CODEC_ID_TTF || st->codec->codec_id == AV_CODEC_ID_OTF)) { if (!m_pFontInstaller) { m_pFontInstaller = new CFontInstaller(); } m_pFontInstaller->InstallFont(st->codec->extradata, st->codec->extradata_size); } } CHECK_HR(hr = CreateStreams()); return S_OK; done: //关闭输入 CleanupAVFormat(); return E_FAIL; }

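This function first records which container format was detected (Matroska, Ogg, AVI, MPEG-TS, and so on), adjusts the analysis duration for network streams, and then retrieves the stream information via avformat_find_stream_info() and related calls. I won't go into more detail here.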
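Two details of InitAVFormat() are easy to miss. First, some format checks use a prefix comparison (_strnicmp) while others use an exact comparison (_stricmp). FFmpeg names its Matroska demuxer "matroska,webm", so a prefix match is needed there, whereas "mpeg" must match exactly so that "mpegts" is not mistaken for MPEG-PS. Second, the analysis duration for network streams is clamped to at least 200000, which the code comment describes as 0.2 seconds; multiplying the setting by 1000 suggests the setting is in milliseconds, but that unit is my inference. The sketch below reproduces both with portable helpers whose names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstring>

// Portable stand-in for _strnicmp(a, b, n) == 0.
bool equal_prefix_nocase(const char* a, const char* b, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        const int ca = std::tolower(static_cast<unsigned char>(a[i]));
        const int cb = std::tolower(static_cast<unsigned char>(b[i]));
        if (ca != cb) return false;
        if (ca == 0) return true;  // both strings ended at the same position
    }
    return true;
}

// Portable stand-in for _stricmp(a, b) == 0.
bool equal_nocase(const char* a, const char* b) {
    const std::size_t la = std::strlen(a);
    return la == std::strlen(b) && equal_prefix_nocase(a, b, la);
}

struct FormatFlagsSketch {
    bool matroska;
    bool mpegts;
    bool mpegps;
};

// Classify a demuxer name the way the m_bMatroska / m_bMPEGTS / m_bMPEGPS flags are set.
FormatFlagsSketch classify_format(const char* name) {
    assert(name != nullptr);
    FormatFlagsSketch f;
    f.matroska = equal_prefix_nocase(name, "matroska", 8);  // prefix match
    f.mpegts   = equal_prefix_nocase(name, "mpegts", 6);    // prefix match
    f.mpegps   = equal_nocase(name, "mpeg");                // exact match
    return f;
}

// Analysis window in microseconds for network sources: never below 0.2 s.
// Assumes the configured value is in milliseconds.
unsigned long network_analyze_duration_us(unsigned long setting_ms) {
    return std::max(setting_ms * 1000UL, 200000UL);
}
```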

3: LAV Video (1)

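LAV Video is a very widely used DirectShow filter. It wraps libavcodec from FFmpeg and can decode a very wide range of video formats. This section analyzes its source code in detail.

The structure of the LAV Video project is shown in the figure below.

Let's go straight to the most important class in LAV Video, CLAVVideo, which is defined in LAVVideo.h.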

LAVVideo.h

/* 雷霄骅 * 中国传媒大学/数字电视技术 * [email protected] * */ /* * Copyright (C) 2010-2013 Hendrik Leppkes * http://www.1f0.de * * This program is free software; you can redistribute it and/or modify * it under the terms of the GNU General Public License as published by * the Free Software Foundation; either version 2 of the License, or * (at your option) any later version. * * This program is distributed in the hope that it will be useful, * but WITHOUT ANY WARRANTY; without even the implied warranty of * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the * GNU General Public License for more details. * * You should have received a copy of the GNU General Public License along * with this program; if not, write to the Free Software Foundation, Inc., * 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA. */ #pragma once #include "decoders/ILAVDecoder.h" #include "DecodeThread.h" #include "ILAVPinInfo.h" #include "LAVPixFmtConverter.h" #include "LAVVideoSettings.h" #include "H264RandomAccess.h" #include "FloatingAverage.h" #include "ISpecifyPropertyPages2.h" #include "SynchronizedQueue.h" #include "subtitles/LAVSubtitleConsumer.h" #include "subtitles/LAVVideoSubtitleInputPin.h" #include "BaseTrayIcon.h" #define LAVC_VIDEO_REGISTRY_KEY L"Software\\LAV\\Video" #define LAVC_VIDEO_REGISTRY_KEY_FORMATS L"Software\\LAV\\Video\\Formats" #define LAVC_VIDEO_REGISTRY_KEY_OUTPUT L"Software\\LAV\\Video\\Output" #define LAVC_VIDEO_REGISTRY_KEY_HWACCEL L"Software\\LAV\\Video\\HWAccel" #define LAVC_VIDEO_LOG_FILE L"LAVVideo.txt" #define DEBUG_FRAME_TIMINGS 0 #define DEBUG_PIXELCONV_TIMINGS 0 #define LAV_MT_FILTER_QUEUE_SIZE 4 typedef struct { REFERENCE_TIME rtStart; REFERENCE_TIME rtStop; } TimingCache; //解码核心类 //Transform Filter [uuid("EE30215D-164F-4A92-A4EB-9D4C13390F9F")] class CLAVVideo : public CTransformFilter, public ISpecifyPropertyPages2, public ILAVVideoSettings, public ILAVVideoStatus, public ILAVVideoCallback { public: CLAVVideo(LPUNKNOWN pUnk, HRESULT* phr); 
~CLAVVideo(); static void CALLBACK StaticInit(BOOL bLoading, const CLSID *clsid); // IUnknown // 查找接口必须实现 DECLARE_IUNKNOWN; STDMETHODIMP NonDelegatingQueryInterface(REFIID riid, void** ppv); // ISpecifyPropertyPages2 // 属性页 // 获取或者创建 STDMETHODIMP GetPages(CAUUID *pPages); STDMETHODIMP CreatePage(const GUID& guid, IPropertyPage** ppPage); // ILAVVideoSettings STDMETHODIMP SetRuntimeConfig(BOOL bRuntimeConfig); STDMETHODIMP SetFormatConfiguration(LAVVideoCodec vCodec, BOOL bEnabled); STDMETHODIMP_(BOOL) GetFormatConfiguration(LAVVideoCodec vCodec); STDMETHODIMP SetNumThreads(DWORD dwNum); STDMETHODIMP_(DWORD) GetNumThreads(); STDMETHODIMP SetStreamAR(DWORD bStreamAR); STDMETHODIMP_(DWORD) GetStreamAR(); STDMETHODIMP SetPixelFormat(LAVOutPixFmts pixFmt, BOOL bEnabled); STDMETHODIMP_(BOOL) GetPixelFormat(LAVOutPixFmts pixFmt); STDMETHODIMP SetRGBOutputRange(DWORD dwRange); STDMETHODIMP_(DWORD) GetRGBOutputRange(); STDMETHODIMP SetDeintFieldOrder(LAVDeintFieldOrder fieldOrder); STDMETHODIMP_(LAVDeintFieldOrder) GetDeintFieldOrder(); STDMETHODIMP SetDeintForce(BOOL bForce); STDMETHODIMP_(BOOL) GetDeintForce(); STDMETHODIMP SetDeintAggressive(BOOL bAggressive); STDMETHODIMP_(BOOL) GetDeintAggressive(); STDMETHODIMP_(DWORD) CheckHWAccelSupport(LAVHWAccel hwAccel); STDMETHODIMP SetHWAccel(LAVHWAccel hwAccel); STDMETHODIMP_(LAVHWAccel) GetHWAccel(); STDMETHODIMP SetHWAccelCodec(LAVVideoHWCodec hwAccelCodec, BOOL bEnabled); STDMETHODIMP_(BOOL) GetHWAccelCodec(LAVVideoHWCodec hwAccelCodec); STDMETHODIMP SetHWAccelDeintMode(LAVHWDeintModes deintMode); STDMETHODIMP_(LAVHWDeintModes) GetHWAccelDeintMode(); STDMETHODIMP SetHWAccelDeintOutput(LAVDeintOutput deintOutput); STDMETHODIMP_(LAVDeintOutput) GetHWAccelDeintOutput(); STDMETHODIMP SetHWAccelDeintHQ(BOOL bHQ); STDMETHODIMP_(BOOL) GetHWAccelDeintHQ(); STDMETHODIMP SetSWDeintMode(LAVSWDeintModes deintMode); STDMETHODIMP_(LAVSWDeintModes) GetSWDeintMode(); STDMETHODIMP SetSWDeintOutput(LAVDeintOutput deintOutput); 
STDMETHODIMP_(LAVDeintOutput) GetSWDeintOutput(); STDMETHODIMP SetDeintTreatAsProgressive(BOOL bEnabled); STDMETHODIMP_(BOOL) GetDeintTreatAsProgressive(); STDMETHODIMP SetDitherMode(LAVDitherMode ditherMode); STDMETHODIMP_(LAVDitherMode) GetDitherMode(); STDMETHODIMP SetUseMSWMV9Decoder(BOOL bEnabled); STDMETHODIMP_(BOOL) GetUseMSWMV9Decoder(); STDMETHODIMP SetDVDVideoSupport(BOOL bEnabled); STDMETHODIMP_(BOOL) GetDVDVideoSupport(); STDMETHODIMP SetHWAccelResolutionFlags(DWORD dwResFlags); STDMETHODIMP_(DWORD) GetHWAccelResolutionFlags(); STDMETHODIMP SetTrayIcon(BOOL bEnabled); STDMETHODIMP_(BOOL) GetTrayIcon(); STDMETHODIMP SetDeinterlacingMode(LAVDeintMode deintMode); STDMETHODIMP_(LAVDeintMode) GetDeinterlacingMode(); // ILAVVideoStatus STDMETHODIMP_(const WCHAR *) GetActiveDecoderName() { return m_Decoder.GetDecoderName(); } // CTransformFilter // 核心的 HRESULT CheckInputType(const CMediaType* mtIn); HRESULT CheckTransform(const CMediaType* mtIn, const CMediaType* mtOut); HRESULT DecideBufferSize(IMemAllocator * pAllocator, ALLOCATOR_PROPERTIES *pprop); HRESULT GetMediaType(int iPosition, CMediaType *pMediaType); HRESULT SetMediaType(PIN_DIRECTION dir, const CMediaType *pmt); HRESULT EndOfStream(); HRESULT BeginFlush(); HRESULT EndFlush(); HRESULT NewSegment(REFERENCE_TIME tStart, REFERENCE_TIME tStop, double dRate); //处理的核心 //核心一般才有IMediaSample HRESULT Receive(IMediaSample *pIn); HRESULT CheckConnect(PIN_DIRECTION dir, IPin *pPin); HRESULT BreakConnect(PIN_DIRECTION dir); HRESULT CompleteConnect(PIN_DIRECTION dir, IPin *pReceivePin); int GetPinCount(); CBasePin* GetPin(int n); STDMETHODIMP JoinFilterGraph(IFilterGraph * pGraph, LPCWSTR pName); // ILAVVideoCallback STDMETHODIMP AllocateFrame(LAVFrame **ppFrame); STDMETHODIMP ReleaseFrame(LAVFrame **ppFrame); STDMETHODIMP Deliver(LAVFrame *pFrame); STDMETHODIMP_(LPWSTR) GetFileExtension(); STDMETHODIMP_(BOOL) FilterInGraph(PIN_DIRECTION dir, const GUID &clsid) { if (dir == PINDIR_INPUT) return 
FilterInGraphSafe(m_pInput, clsid); else return FilterInGraphSafe(m_pOutput, clsid); } STDMETHODIMP_(DWORD) GetDecodeFlags() { return m_dwDecodeFlags; } STDMETHODIMP_(CMediaType&) GetInputMediaType() { return m_pInput->CurrentMediaType(); } STDMETHODIMP GetLAVPinInfo(LAVPinInfo &info) { if (m_LAVPinInfoValid) { info = m_LAVPinInfo; return S_OK; } return E_FAIL; } STDMETHODIMP_(CBasePin*) GetOutputPin() { return m_pOutput; } STDMETHODIMP_(CMediaType&) GetOutputMediaType() { return m_pOutput->CurrentMediaType(); } STDMETHODIMP DVDStripPacket(BYTE*& p, long& len) { static_cast<CDeCSSTransformInputPin*>(m_pInput)->StripPacket(p, len); return S_OK; } STDMETHODIMP_(LAVFrame*) GetFlushFrame(); STDMETHODIMP ReleaseAllDXVAResources() { ReleaseLastSequenceFrame(); return S_OK; } public: // Pin Configuration const static AMOVIESETUP_MEDIATYPE sudPinTypesIn[]; const static int sudPinTypesInCount; const static AMOVIESETUP_MEDIATYPE sudPinTypesOut[]; const static int sudPinTypesOutCount; private: HRESULT LoadDefaults(); HRESULT ReadSettings(HKEY rootKey); HRESULT LoadSettings(); HRESULT SaveSettings(); HRESULT CreateTrayIcon(); HRESULT CreateDecoder(const CMediaType *pmt); HRESULT GetDeliveryBuffer(IMediaSample** ppOut, int width, int height, AVRational ar, DXVA2_ExtendedFormat dxvaExtFormat, REFERENCE_TIME avgFrameDuration); HRESULT ReconnectOutput(int width, int height, AVRational ar, DXVA2_ExtendedFormat dxvaExtFlags, REFERENCE_TIME avgFrameDuration, BOOL bDXVA = FALSE); HRESULT SetFrameFlags(IMediaSample* pMS, LAVFrame *pFrame); HRESULT NegotiatePixelFormat(CMediaType &mt, int width, int height); BOOL IsInterlaced(); HRESULT Filter(LAVFrame *pFrame); HRESULT DeliverToRenderer(LAVFrame *pFrame); HRESULT PerformFlush(); HRESULT ReleaseLastSequenceFrame(); HRESULT GetD3DBuffer(LAVFrame *pFrame); HRESULT RedrawStillImage(); HRESULT SetInDVDMenu(bool menu) { m_bInDVDMenu = menu; return S_OK; } enum {CNTRL_EXIT, CNTRL_REDRAW}; HRESULT ControlCmd(DWORD cmd) { return 
m_ControlThread->CallWorker(cmd); } private: friend class CVideoOutputPin; friend class CDecodeThread; friend class CLAVControlThread; friend class CLAVSubtitleProvider; friend class CLAVSubtitleConsumer; //解码线程 CDecodeThread m_Decoder; CAMThread *m_ControlThread; REFERENCE_TIME m_rtPrevStart; REFERENCE_TIME m_rtPrevStop; BOOL m_bForceInputAR; BOOL m_bSendMediaType; BOOL m_bFlushing; HRESULT m_hrDeliver; CLAVPixFmtConverter m_PixFmtConverter; std::wstring m_strExtension; DWORD m_bDXVAExtFormatSupport; DWORD m_bMadVR; DWORD m_bOverlayMixer; DWORD m_dwDecodeFlags; BOOL m_bInDVDMenu; AVFilterGraph *m_pFilterGraph; AVFilterContext *m_pFilterBufferSrc; AVFilterContext *m_pFilterBufferSink; LAVPixelFormat m_filterPixFmt; int m_filterWidth; int m_filterHeight; LAVFrame m_FilterPrevFrame; BOOL m_LAVPinInfoValid; LAVPinInfo m_LAVPinInfo; CLAVVideoSubtitleInputPin *m_pSubtitleInput; CLAVSubtitleConsumer *m_SubtitleConsumer; LAVFrame *m_pLastSequenceFrame; AM_SimpleRateChange m_DVDRate; BOOL m_bRuntimeConfig; struct VideoSettings { BOOL TrayIcon; DWORD StreamAR; DWORD NumThreads; BOOL bFormats[Codec_VideoNB]; BOOL bMSWMV9DMO; BOOL bPixFmts[LAVOutPixFmt_NB]; DWORD RGBRange; DWORD HWAccel; BOOL bHWFormats[HWCodec_NB]; DWORD HWAccelResFlags; DWORD HWDeintMode; DWORD HWDeintOutput; BOOL HWDeintHQ; DWORD DeintFieldOrder; LAVDeintMode DeintMode; DWORD SWDeintMode; DWORD SWDeintOutput; DWORD DitherMode; BOOL bDVDVideo; } m_settings; CBaseTrayIcon *m_pTrayIcon; #ifdef DEBUG FloatingAverage<double> m_pixFmtTimingAvg; #endif };

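As the definition shows, the class inherits from CTransformFilter and offers a very rich set of functions. They cannot all be analyzed one by one here, so only the key functions are examined.

The class contains a member of the decode-thread class, CDecodeThread m_Decoder;, which encapsulates the decoding functionality.

The class also contains the function Receive(IMediaSample *pIn);, which is where decoding is triggered. Here pIn is the incoming compressed video data before it is decoded.

Now let's look at the Receive() function: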

```cpp
// The core of the processing.
// The core functions are usually the ones that take an IMediaSample.
HRESULT CLAVVideo::Receive(IMediaSample *pIn)
{
  CAutoLock cAutoLock(&m_csReceive);
  HRESULT hr = S_OK;

  AM_SAMPLE2_PROPERTIES const *pProps = m_pInput->SampleProps();
  if(pProps->dwStreamId != AM_STREAM_MEDIA) {
    return m_pOutput->Deliver(pIn);
  }

  AM_MEDIA_TYPE *pmt = NULL;
  // Get the media type, etc.
  if (SUCCEEDED(pIn->GetMediaType(&pmt)) && pmt) {
    CMediaType mt = *pmt;
    DeleteMediaType(pmt);
    if (mt != m_pInput->CurrentMediaType() || !(m_dwDecodeFlags & LAV_VIDEO_DEC_FLAG_DVD)) {
      DbgLog((LOG_TRACE, 10, L"::Receive(): Input sample contained media type, dynamic format change..."));
      m_Decoder.EndOfStream();
      hr = m_pInput->SetMediaType(&mt);
      if (FAILED(hr)) {
        DbgLog((LOG_ERROR, 10, L"::Receive(): Setting new media type failed..."));
        return hr;
      }
    }
  }

  m_hrDeliver = S_OK;

  // Skip over empty packets
  if (pIn->GetActualDataLength() == 0) {
    return S_OK;
  }

  // Decode
  hr = m_Decoder.Decode(pIn);
  if (FAILED(hr))
    return hr;

  if (FAILED(m_hrDeliver))
    return m_hrDeliver;

  return S_OK;
}
```

From the code we can see that the call that actually triggers decoding is hr = m_Decoder.Decode(pIn);.

Next, let's look at the Decode() method of the CDecodeThread class:

```cpp
// Decode function of the decoding thread
STDMETHODIMP CDecodeThread::Decode(IMediaSample *pSample)
{
  CAutoLock decoderLock(this);

  if (!CAMThread::ThreadExists())
    return E_UNEXPECTED;

  // Wait until the queue is empty
  while(HasSample())
    Sleep(1);

  // Re-init the decoder, if requested
  // Doing this inside the worker thread alone causes problems
  // when switching from non-sync to sync, so ensure we're in sync.
  if (m_bDecoderNeedsReInit) {
    CAMThread::CallWorker(CMD_REINIT);
    while (!m_evEOSDone.Check()) {
      m_evSample.Wait();
      ProcessOutput();
    }
  }

  m_evDeliver.Reset();
  m_evSample.Reset();
  m_evDecodeDone.Reset();

  pSample->AddRef();

  // Send data to worker thread, and wake it (if it was waiting)
  PutSample(pSample);

  // If we don't have thread safe buffers, we need to synchronize
  // with the worker thread and deliver them when they are available
  // and then let it know that we did so
  if (m_bSyncToProcess) {
    while (!m_evDecodeDone.Check()) {
      m_evSample.Wait();
      ProcessOutput();
    }
  }

  ProcessOutput();

  return S_OK;
}
```

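At first glance this method looks rather abstract: it does not appear to call any decoding function directly. If LAVVideo wraps FFmpeg's libavcodec, it should eventually end up calling avcodec_decode_video2(). So let's first look at the definition of the CDecodeThread class.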
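Before reading the class definition, it may help to picture what Decode() does as a handoff: the caller waits until the slot for the next sample is free, stores the sample, and wakes the worker thread. The following is only a minimal portable sketch of that idea. SampleMailbox and run_handoff are hypothetical names, integers stand in for media samples, and a condition variable replaces the Sleep(1) polling and CAMEvent objects of the real code.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <thread>
#include <vector>

// Hypothetical single-slot mailbox between a caller and a decode worker.
// None of these names come from LAV Filters.
class SampleMailbox {
public:
    // Blocks until the slot is free, then stores the sample and wakes the worker.
    void put(int sample) {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return !slot_.has_value(); });
        slot_ = sample;
        cv_.notify_all();
    }

    // Marks the end of input; the worker drains the slot and then stops.
    void close() {
        std::lock_guard<std::mutex> lock(m_);
        closed_ = true;
        cv_.notify_all();
    }

    // Blocks until a sample is available; returns nullopt once closed and empty.
    std::optional<int> take() {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return slot_.has_value() || closed_; });
        if (!slot_.has_value()) return std::nullopt;
        int out = *slot_;
        slot_.reset();
        cv_.notify_all();  // lets a caller blocked in put() continue
        return out;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    std::optional<int> slot_;
    bool closed_ = false;
};

// Feeds samples through the mailbox and returns what the worker "decoded".
std::vector<int> run_handoff(const std::vector<int>& samples) {
    SampleMailbox box;
    std::vector<int> decoded;
    std::thread worker([&] {
        while (auto s = box.take()) decoded.push_back(*s * 2);  // stand-in for decoding
    });
    for (int s : samples) box.put(s);
    box.close();
    worker.join();
    return decoded;
}
```

Calling run_handoff({1, 2, 3}) pushes three samples through the slot one at a time, which mirrors how Decode() refuses to queue a new sample while the previous one is still pending.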

DecodeThread.h

```cpp
/* Lei Xiaohua (雷霄骅)
 * Communication University of China / Digital TV Technology
 * [email protected]
 *
 */
/*
 *      Copyright (C) 2010-2013 Hendrik Leppkes
 *      http://www.1f0.de
 *
 *  This program is free software; you can redistribute it and/or modify
 *  it under the terms of the GNU General Public License as published by
 *  the Free Software Foundation; either version 2 of the License, or
 *  (at your option) any later version.
 *
 *  This program is distributed in the hope that it will be useful,
 *  but WITHOUT ANY WARRANTY; without even the implied warranty of
 *  MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the
 *  GNU General Public License for more details.
 *
 *  You should have received a copy of the GNU General Public License along
 *  with this program; if not, write to the Free Software Foundation, Inc.,
 *  51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.
 */

#pragma once

#include "decoders/ILAVDecoder.h"
#include "SynchronizedQueue.h"

class CLAVVideo;

class CDecodeThread : public ILAVVideoCallback, protected CAMThread, protected CCritSec
{
public:
  CDecodeThread(CLAVVideo *pLAVVideo);
  ~CDecodeThread();

  // Parts of ILAVDecoder
  STDMETHODIMP_(const WCHAR*) GetDecoderName() { return m_pDecoder ? m_pDecoder->GetDecoderName() : NULL; }
  STDMETHODIMP_(long) GetBufferCount() { return m_pDecoder ? m_pDecoder->GetBufferCount() : 4; }
  STDMETHODIMP_(BOOL) IsInterlaced() { return m_pDecoder ? m_pDecoder->IsInterlaced() : TRUE; }
  STDMETHODIMP GetPixelFormat(LAVPixelFormat *pPix, int *pBpp) { ASSERT(m_pDecoder); return m_pDecoder->GetPixelFormat(pPix, pBpp); }
  STDMETHODIMP_(REFERENCE_TIME) GetFrameDuration() { ASSERT(m_pDecoder); return m_pDecoder->GetFrameDuration(); }
  STDMETHODIMP HasThreadSafeBuffers() { return m_pDecoder ? m_pDecoder->HasThreadSafeBuffers() : S_FALSE; }

  STDMETHODIMP CreateDecoder(const CMediaType *pmt, AVCodecID codec);
  STDMETHODIMP Close();
  // Decode function of the decoding thread
  STDMETHODIMP Decode(IMediaSample *pSample);
  STDMETHODIMP Flush();
  STDMETHODIMP EndOfStream();

  STDMETHODIMP InitAllocator(IMemAllocator **ppAlloc);
  STDMETHODIMP PostConnect(IPin *pPin);

  STDMETHODIMP_(BOOL) IsHWDecoderActive() { return m_bHWDecoder; }

  // ILAVVideoCallback
  STDMETHODIMP AllocateFrame(LAVFrame **ppFrame);
  STDMETHODIMP ReleaseFrame(LAVFrame **ppFrame);
  STDMETHODIMP Deliver(LAVFrame *pFrame);
  STDMETHODIMP_(LPWSTR) GetFileExtension();
  STDMETHODIMP_(BOOL) FilterInGraph(PIN_DIRECTION dir, const GUID &clsid);
  STDMETHODIMP_(DWORD) GetDecodeFlags();
  STDMETHODIMP_(CMediaType&) GetInputMediaType();
  STDMETHODIMP GetLAVPinInfo(LAVPinInfo &info);
  STDMETHODIMP_(CBasePin*) GetOutputPin();
  STDMETHODIMP_(CMediaType&) GetOutputMediaType();
  STDMETHODIMP DVDStripPacket(BYTE*& p, long& len);
  STDMETHODIMP_(LAVFrame*) GetFlushFrame();
  STDMETHODIMP ReleaseAllDXVAResources();

protected:
  // Contains the various operations on the thread; important
  DWORD ThreadProc();

private:
  STDMETHODIMP CreateDecoderInternal(const CMediaType *pmt, AVCodecID codec);
  STDMETHODIMP PostConnectInternal(IPin *pPin);

  STDMETHODIMP DecodeInternal(IMediaSample *pSample);
  STDMETHODIMP ClearQueues();
  STDMETHODIMP ProcessOutput();

  bool HasSample();
  void PutSample(IMediaSample *pSample);
  IMediaSample* GetSample();
  void ReleaseSample();

  bool CheckForEndOfSequence(IMediaSample *pSample);

private:
  // The commands that can be sent to the thread
  enum {CMD_CREATE_DECODER, CMD_CLOSE_DECODER, CMD_FLUSH, CMD_EOS, CMD_EXIT, CMD_INIT_ALLOCATOR, CMD_POST_CONNECT, CMD_REINIT};
  // Note that DecodeThread sits in the middle:
  // it connects the filter's core class CLAVVideo with the decoder interface ILAVDecoder
  CLAVVideo *m_pLAVVideo;
  ILAVDecoder *m_pDecoder;
  AVCodecID m_Codec;

  BOOL m_bHWDecoder;
  BOOL m_bHWDecoderFailed;

  BOOL m_bSyncToProcess;
  BOOL m_bDecoderNeedsReInit;
  CAMEvent m_evInput;
  CAMEvent m_evDeliver;
  CAMEvent m_evSample;
  CAMEvent m_evDecodeDone;
  CAMEvent m_evEOSDone;

  CCritSec m_ThreadCritSec;
  struct {
    const CMediaType *pmt;
    AVCodecID codec;
    IMemAllocator **allocator;
    IPin *pin;
  } m_ThreadCallContext;
  CSynchronizedQueue<LAVFrame *> m_Output;

  CCritSec m_SampleCritSec;
  IMediaSample *m_NextSample;
  IMediaSample *m_TempSample[2];
  IMediaSample *m_FailedSample;

  std::wstring m_processName;
};
```

Judging by its name, this class manages the decoding thread. Here we focus on two pointer members of the class:

CLAVVideo *m_pLAVVideo;

ILAVDecoder *m_pDecoder;

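The first pointer refers to CLAVVideo, the core class of this project. The second is the decoder interface, through which the methods of the concrete decoder can be called. (Note: the source code shows that the decoder is not limited to libavcodec. It can also be wmv9 and others; in other words, other kinds of decoders can be plugged in. For now, though, LAVVideo seems to support fewer kinds of decoders than ffdshow.)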
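The value of holding the decoder behind an interface pointer can be shown with a small sketch: the owner only sees the abstract type, and the concrete decoder is chosen when it is created. Everything here is hypothetical and chosen for illustration (IDecoder, DecoderKind, make_decoder, and the two decoder classes); it does not reproduce how LAV Video actually selects its decoders.

```cpp
#include <memory>
#include <string>

// Hypothetical decoder interface; a stand-in for the role ILAVDecoder plays.
struct IDecoder {
    virtual ~IDecoder() = default;
    virtual std::string name() const = 0;
};

// Two illustrative implementations; the names are assumptions.
struct AvcodecLikeDecoder : IDecoder {
    std::string name() const override { return "avcodec-like"; }
};

struct Wmv9LikeDecoder : IDecoder {
    std::string name() const override { return "wmv9-like"; }
};

// Hypothetical selector for the kind of decoder to create.
enum class DecoderKind { Avcodec, Wmv9 };

// Factory: callers receive only the interface, never the concrete type.
std::unique_ptr<IDecoder> make_decoder(DecoderKind kind) {
    switch (kind) {
    case DecoderKind::Avcodec: return std::make_unique<AvcodecLikeDecoder>();
    case DecoderKind::Wmv9:    return std::make_unique<Wmv9LikeDecoder>();
    }
    return nullptr;  // unreachable for valid enum values
}
```

Adding another decoder in this sketch means adding one class and one case in the factory, while code that holds the IDecoder pointer stays unchanged.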

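The class also has another function:

ThreadProc()

This function contains the various operations on the thread, including calls to the methods of the ILAVDecoder interface: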

```cpp
// Contains the various operations on the thread
DWORD CDecodeThread::ThreadProc()
{
  HRESULT hr;
  DWORD cmd;

  BOOL bEOS = FALSE;
  BOOL bReinit = FALSE;

  SetThreadName(-1, "LAVVideo Decode Thread");

  HANDLE hWaitEvents[2] = { GetRequestHandle(), m_evInput };
  // Loop forever
  while(1) {
    if (!bEOS && !bReinit) {
      // Wait for either an input sample, or an request
      WaitForMultipleObjects(2, hWaitEvents, FALSE, INFINITE);
    }
    // Dispatch on the requested command
    if (CheckRequest(&cmd)) {
      switch (cmd) {
      // Create the decoder
      case CMD_CREATE_DECODER:
        {
          CAutoLock lock(&m_ThreadCritSec);
          // Create
          hr = CreateDecoderInternal(m_ThreadCallContext.pmt, m_ThreadCallContext.codec);
          Reply(hr);

          m_ThreadCallContext.pmt = NULL;
        }
        break;
      case CMD_CLOSE_DECODER:
        {
          // Close
          ClearQueues();
          SAFE_DELETE(m_pDecoder);
          Reply(S_OK);
        }
        break;
      case CMD_FLUSH:
        {
          // Flush
          ClearQueues();
          m_pDecoder->Flush();
          Reply(S_OK);
        }
        break;
      case CMD_EOS:
        {
          bEOS = TRUE;
          m_evEOSDone.Reset();
          Reply(S_OK);
        }
        break;
      case CMD_EXIT:
        {
          // Exit
          Reply(S_OK);
          return 0;
        }
        break;
      case CMD_INIT_ALLOCATOR:
        {
          CAutoLock lock(&m_ThreadCritSec);
          hr = m_pDecoder->InitAllocator(m_ThreadCallContext.allocator);
          Reply(hr);

          m_ThreadCallContext.allocator = NULL;
        }
        break;
      case CMD_POST_CONNECT:
        {
          CAutoLock lock(&m_ThreadCritSec);
          hr = PostConnectInternal(m_ThreadCallContext.pin);
          Reply(hr);

          m_ThreadCallContext.pin = NULL;
        }
        break;
      case CMD_REINIT:
        {
          // Re-initialize
          CMediaType &mt = m_pLAVVideo->GetInputMediaType();
          CreateDecoderInternal(&mt, m_Codec);
          m_TempSample[1] = m_NextSample;
          m_NextSample = m_FailedSample;
          m_FailedSample = NULL;
          bReinit = TRUE;
          m_evEOSDone.Reset();
          Reply(S_OK);
          m_bDecoderNeedsReInit = FALSE;
        }
        break;
      default:
        ASSERT(0);
      }
    }

    if (m_bDecoderNeedsReInit) {
      m_evInput.Reset();
      continue;
    }

    if (bReinit && !m_NextSample) {
      if (m_TempSample[0]) {
        m_NextSample = m_TempSample[0];
        m_TempSample[0] = NULL;
      } else if (m_TempSample[1]) {
        m_NextSample = m_TempSample[1];
        m_TempSample[1] = NULL;
      } else {
        bReinit = FALSE;
        m_evEOSDone.Set();
        m_evSample.Set();
        continue;
      }
    }
    // Fetch one sample
    IMediaSample *pSample = GetSample();
    if (!pSample) {
      // Process the EOS now that the sample queue is empty
      if (bEOS) {
        bEOS = FALSE;
        m_pDecoder->EndOfStream();
        m_evEOSDone.Set();
        m_evSample.Set();
      }
      continue;
    }
    // Decode
    DecodeInternal(pSample);

    // Release the sample
    SafeRelease(&pSample);

    // Indicates we're done decoding this sample
    m_evDecodeDone.Set();

    // Set the Sample Event to unblock any waiting threads
    m_evSample.Set();
  }

  return 0;
}
```
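The control flow of ThreadProc() is easier to follow when the threading is stripped away. The sketch below models one loop iteration as a plain function: a pending command is handled first, then one sample is decoded, and a deferred end-of-stream is processed only once the sample queue is empty. Cmd, WorkerState, step, and run are hypothetical names, the log strings stand in for real decoder calls, and the re-init path is left out.

```cpp
#include <deque>
#include <string>
#include <vector>

// Hypothetical subset of the worker commands.
enum class Cmd { Flush, EndOfStream, Exit };

// Hypothetical state of the worker; plain queues replace events and handles.
struct WorkerState {
    std::deque<Cmd> commands;
    std::deque<int> samples;
    bool eos_pending = false;
    std::vector<std::string> log;
};

// Runs one loop iteration; returns false when the worker should exit.
bool step(WorkerState& st) {
    if (!st.commands.empty()) {
        Cmd cmd = st.commands.front();
        st.commands.pop_front();
        switch (cmd) {
        case Cmd::Flush:        // drop queued samples
            st.samples.clear();
            st.log.push_back("flush");
            break;
        case Cmd::EndOfStream:  // only remembered here, handled once the queue is empty
            st.eos_pending = true;
            break;
        case Cmd::Exit:
            st.log.push_back("exit");
            return false;
        }
    }
    if (st.samples.empty()) {
        if (st.eos_pending) {   // queue is empty: now drain the decoder
            st.eos_pending = false;
            st.log.push_back("drain");
        }
        return true;
    }
    int sample = st.samples.front();
    st.samples.pop_front();
    st.log.push_back("decode " + std::to_string(sample));
    return true;
}

// Drives step() until the worker exits or has nothing left to do.
std::vector<std::string> run(WorkerState st) {
    while (step(st)) {
        if (st.commands.empty() && st.samples.empty() && !st.eos_pending) break;
    }
    return st.log;
}
```

In the real function the loop blocks in WaitForMultipleObjects() instead of returning when idle; the early break in run() exists only so that the sketch terminates.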

That is all for this part; the ILAVDecoder interface will be covered in the next one.

4: LAV Video (2)

This part continues from the previous one, which noted that LAVVideo manages the decoding thread mainly through the CDecodeThread class. Its key management function is ThreadProc(), which contains the various operations on the decoding thread; its full listing appears in the previous part.

In that function, DecodeInternal(pSample) is the call that actually performs the decoding. Here is its source code:

```cpp
STDMETHODIMP CDecodeThread::DecodeInternal(IMediaSample *pSample)
{
  HRESULT hr = S_OK;

  if (!m_pDecoder)
    return E_UNEXPECTED;
  // Decode through the interface
  hr = m_pDecoder->Decode(pSample);

  // If a hardware decoder indicates a hard failure, we switch back to software
  // This is used to indicate incompatible media
  if (FAILED(hr) && m_bHWDecoder) {
    DbgLog((LOG_TRACE, 10, L"::Receive(): Hardware decoder indicates failure, switching back to software"));
    m_bHWDecoderFailed = TRUE;

    // Store the failed sample for re-try in a moment
    m_FailedSample = pSample;
    m_FailedSample->AddRef();

    // Schedule a re-init when the main thread goes there the next time
    m_bDecoderNeedsReInit = TRUE;

    // Make room in the sample buffer, to ensure the main thread can get in
    m_TempSample[0] = GetSample();
  }

  return S_OK;
}
```

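The function is fairly short. As the source shows, it calls the Decode() method of m_pDecoder. m_pDecoder is a pointer of type ILAVDecoder, and ILAVDecoder is an interface that contains no actual implementations, as shown below. Note that, according to the comments in the code, every decoder must implement the functions this interface specifies.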

/** * Decoder interface * * Every decoder needs to implement this to interface with the LAV Video core */ //接口 interface ILAVDecoder { /** * Virtual destructor */ virtual ~ILAVDecoder(void) {}; /** * Initialize interfaces with the LAV Video core * This function should also be used to create all interfaces with external DLLs * * @param pSettings reference to the settings interface * @param pCallback reference to the callback interface * @return S_OK on success, error code if this decoder is lacking an external support dll */ STDMETHOD(InitInterfaces)(ILAVVideoSettings *pSettings, ILAVVideoCallback *pCallback) PURE; /** * Check if the decoder is functional */ STDMETHOD(Check)() PURE; /** * Initialize the codec to decode a stream specified by codec and pmt. * * @param codec Codec Id * @param pmt DirectShow Media Type * @return S_OK on success, an error code otherwise */ STDMETHOD(InitDecoder)(AVCodecID codec, const CMediaType *pmt) PURE; /** * Decode a frame. * * @param pSample Media Sample to decode * @return S_OK if decoding was successfull, S_FALSE if no frame could be extracted, an error code if the decoder is not compatible with the bitstream * * Note: When returning an actual error code, the filter will switch to the fallback software decoder! This should only be used for catastrophic failures, * like trying to decode a unsupported format on a hardware decoder. */ STDMETHOD(Decode)(IMediaSample *pSample) PURE; /** * Flush the decoder after a seek. * The decoder should discard any remaining data. * * @return unused */ STDMETHOD(Flush)() PURE; /** * End of Stream * The decoder is asked to output any buffered frames for immediate delivery * * @return unused */ STDMETHOD(EndOfStream)() PURE; /** * Query the decoder for the current pixel format * Mostly used by the media type creation logic before playback starts * * @return the pixel format used in the decoding process */ STDMETHOD(GetPixelFormat)(LAVPixelFormat *pPix, int *pBpp) PURE; /** * Get the frame duration. 
* * This function is not mandatory, and if you cannot provide any specific duration, return 0. */ STDMETHOD_(REFERENCE_TIME, GetFrameDuration)() PURE; /** * Query whether the format can potentially be interlaced. * This function should return false if the format can 100% not be interlaced, and true if it can be interlaced (but also progressive). */ STDMETHOD_(BOOL, IsInterlaced)() PURE; /** * Allows the decoder to handle an allocator. * Used by DXVA2 decoding */ STDMETHOD(InitAllocator)(IMemAllocator **ppAlloc) PURE; /** * Function called after connection is established, with the pin as argument */ STDMETHOD(PostConnect)(IPin *pPin) PURE; /** * Get the number of sample buffers optimal for this decoder */ STDMETHOD_(long, GetBufferCount)() PURE; /** * Get the name of the decoder */ STDMETHOD_(const WCHAR*, GetDecoderName)() PURE; /** * Get whether the decoder outputs thread-safe buffers */ STDMETHOD(HasThreadSafeBuffers)() PURE; /** * Get whether the decoder should sync to the main thread */ STDMETHOD(SyncToProcessThread)() PURE; };
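The comment on Decode() in this interface, together with DecodeInternal(), describes a fallback rule: a hard error from a hardware decoder makes the filter switch to the software decoder and retry the failed sample. A minimal sketch of that rule follows. All names are hypothetical, integers stand in for samples, and the switch happens immediately, whereas the real code stores the failed sample and schedules a re-init for later.

```cpp
#include <memory>
#include <string>
#include <vector>

// Hypothetical decoder interface; decode() returns false on a hard failure.
struct IDecoder {
    virtual ~IDecoder() = default;
    virtual std::string name() const = 0;
    virtual bool decode(int sample, std::vector<int>& out) = 0;
};

// Pretend hardware decoder that cannot handle odd-numbered samples.
struct HardwareDecoder : IDecoder {
    std::string name() const override { return "hardware"; }
    bool decode(int sample, std::vector<int>& out) override {
        if (sample % 2 != 0) return false;  // "incompatible media"
        out.push_back(sample * 10);
        return true;
    }
};

// Pretend software decoder that accepts everything.
struct SoftwareDecoder : IDecoder {
    std::string name() const override { return "software"; }
    bool decode(int sample, std::vector<int>& out) override {
        out.push_back(sample);
        return true;
    }
};

// Starts on the hardware decoder and falls back to software once.
class FallbackHost {
public:
    FallbackHost() : decoder_(std::make_unique<HardwareDecoder>()), hardware_(true) {}

    std::string active_name() const { return decoder_->name(); }

    void decode(int sample, std::vector<int>& out) {
        if (decoder_->decode(sample, out)) return;
        if (!hardware_) return;  // a software failure just drops the sample
        hardware_ = false;
        decoder_ = std::make_unique<SoftwareDecoder>();
        decoder_->decode(sample, out);  // retry the sample that failed
    }

private:
    std::unique_ptr<IDecoder> decoder_;
    bool hardware_;
};
```

Feeding the samples 2, 4, 5, 6 through a FallbackHost decodes the first two in "hardware", switches on 5, and handles 5 and 6 in "software".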

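Now let's look at the class that wraps the libavcodec library. Its definition is in avcodec.h, under the decoders folder.

The class is named CDecAvcodec. It inherits from CDecBase, which in turn inherits from ILAVDecoder.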
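One detail in the listings is that ILAVDecoder::Decode() takes an IMediaSample, while CDecAvcodec::Decode() takes a raw buffer plus timestamps. A plausible reading, stated here as an assumption since CDecBase is not shown in this article, is that the base class unpacks the sample and forwards it to the raw-buffer overload. The sketch below shows that adapter pattern with hypothetical types (Sample, IDecoder, DecoderBase, ByteCounter).

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a media sample: payload plus start/stop times.
struct Sample {
    std::vector<std::uint8_t> data;
    std::int64_t start;
    std::int64_t stop;
};

// Hypothetical public interface, taking the whole sample object.
struct IDecoder {
    virtual ~IDecoder() = default;
    virtual int decode(const Sample& sample) = 0;
};

// Base class that unpacks the sample once, so that concrete decoders
// only have to deal with a raw buffer and timestamps.
class DecoderBase : public IDecoder {
public:
    int decode(const Sample& sample) final {
        return decode_raw(sample.data.data(), sample.data.size(),
                          sample.start, sample.stop);
    }

protected:
    virtual int decode_raw(const std::uint8_t* buffer, std::size_t length,
                           std::int64_t start, std::int64_t stop) = 0;
};

// Toy decoder: reports the payload size and remembers the sample duration.
class ByteCounter : public DecoderBase {
public:
    std::int64_t last_duration = 0;

protected:
    int decode_raw(const std::uint8_t*, std::size_t length,
                   std::int64_t start, std::int64_t stop) override {
        last_duration = stop - start;
        return static_cast<int>(length);
    }
};
```

With this split, the unpacking code lives in one place and each new decoder implements only the raw-buffer function.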

```cpp
/* Lei Xiaohua (雷霄骅)
 * Communication University of China / Digital TV Technology
 * [email protected]
 *
 */
/*
 *      Copyright (C) 2010-2013 Hendrik Leppkes
 *      http://www.1f0.de
 *
 *  This program is free software; you can redistribute it and/or modify
 *  it under the terms of the GNU General Public License as published by
 *  the Free Software Foundation; either version 2 of the License, or
 *  (at your option) any later version.
 *
 *  This program is distributed in the hope that it will be useful,
 *  but WITHOUT ANY WARRANTY; without even the implied warranty of
 *  MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the
 *  GNU General Public License for more details.
 *
 *  You should have received a copy of the GNU General Public License along
 *  with this program; if not, write to the Free Software Foundation, Inc.,
 *  51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.
 */

#pragma once

#include "DecBase.h"
#include "H264RandomAccess.h"

#include <map>

#define AVCODEC_MAX_THREADS 16

typedef struct {
  REFERENCE_TIME rtStart;
  REFERENCE_TIME rtStop;
} TimingCache;

// Decoder (AVCODEC); there are also WMV9, CUVID, and others
class CDecAvcodec : public CDecBase
{
public:
  CDecAvcodec(void);
  virtual ~CDecAvcodec(void);

  // ILAVDecoder
  STDMETHODIMP InitDecoder(AVCodecID codec, const CMediaType *pmt);
  // Decode
  STDMETHODIMP Decode(const BYTE *buffer, int buflen, REFERENCE_TIME rtStart, REFERENCE_TIME rtStop, BOOL bSyncPoint, BOOL bDiscontinuity);
  STDMETHODIMP Flush();
  STDMETHODIMP EndOfStream();
  STDMETHODIMP GetPixelFormat(LAVPixelFormat *pPix, int *pBpp);
  STDMETHODIMP_(REFERENCE_TIME) GetFrameDuration();
  STDMETHODIMP_(BOOL) IsInterlaced();
  STDMETHODIMP_(const WCHAR*) GetDecoderName() { return L"avcodec"; }
  STDMETHODIMP HasThreadSafeBuffers() { return S_OK; }
  STDMETHODIMP SyncToProcessThread() { return m_pAVCtx && m_pAVCtx->thread_count > 1 ? S_OK : S_FALSE; }

  // CDecBase
  STDMETHODIMP Init();

protected:
  virtual HRESULT AdditionaDecoderInit() { return S_FALSE; }
  virtual HRESULT PostDecode() { return S_FALSE; }
  virtual HRESULT HandleDXVA2Frame(LAVFrame *pFrame) { return S_FALSE; }
  // Destroy the decoder and free its resources
  STDMETHODIMP DestroyDecoder();

private:
  STDMETHODIMP ConvertPixFmt(AVFrame *pFrame, LAVFrame *pOutFrame);

protected:
  AVCodecContext *m_pAVCtx;
  AVFrame *m_pFrame;
  AVCodecID m_nCodecId;
  BOOL m_bDXVA;

private:
  AVCodec *m_pAVCodec;
  AVCodecParserContext *m_pParser;

  BYTE *m_pFFBuffer;
  BYTE *m_pFFBuffer2;
  int m_nFFBufferSize;
  int m_nFFBufferSize2;

  SwsContext *m_pSwsContext;

  CH264RandomAccess m_h264RandomAccess;

  BOOL m_bNoBufferConsumption;
  BOOL m_bHasPalette;

  // Timing settings
  BOOL m_bFFReordering;
  BOOL m_bCalculateStopTime;
  BOOL m_bRVDropBFrameTimings;
  BOOL m_bInputPadded;

  BOOL m_bBFrameDelay;
  TimingCache m_tcBFrameDelay[2];
  int m_nBFramePos;

  TimingCache m_tcThreadBuffer[AVCODEC_MAX_THREADS];
  int m_CurrentThread;

  REFERENCE_TIME m_rtStartCache;
  BOOL m_bResumeAtKeyFrame;
  BOOL m_bWaitingForKeyFrame;
  int m_iInterlaced;
};
```

The definition of CDecAvcodec shows that it contains functions for many purposes. Let's start with the initialization function Init():

```cpp
// ILAVDecoder
STDMETHODIMP CDecAvcodec::Init()
{
#ifdef DEBUG
  DbgSetModuleLevel (LOG_CUSTOM1, DWORD_MAX); // FFMPEG messages use custom1
  av_log_set_callback(lavf_log_callback);
#else
  av_log_set_callback(NULL);
#endif
  // Register the codecs
  avcodec_register_all();
  return S_OK;
}
```

As shown, it calls the FFmpeg API function avcodec_register_all() to register the codecs.

Next, let's look at its decoding function Decode():

//解码 STDMETHODIMP CDecAvcodec::Decode(const BYTE *buffer, int buflen, REFERENCE_TIME rtStartIn, REFERENCE_TIME rtStopIn, BOOL bSyncPoint, BOOL bDiscontinuity) { int got_picture = 0; int used_bytes = 0; BOOL bParserFrame = FALSE; BOOL bFlush = (buffer == NULL); BOOL bEndOfSequence = FALSE; //初始化Packet AVPacket avpkt; av_init_packet(&avpkt); if (m_pAVCtx->active_thread_type & FF_THREAD_FRAME) { if (!m_bFFReordering) { m_tcThreadBuffer[m_CurrentThread].rtStart = rtStartIn; m_tcThreadBuffer[m_CurrentThread].rtStop = rtStopIn; } m_CurrentThread = (m_CurrentThread + 1) % m_pAVCtx->thread_count; } else if (m_bBFrameDelay) { m_tcBFrameDelay[m_nBFramePos].rtStart = rtStartIn; m_tcBFrameDelay[m_nBFramePos].rtStop = rtStopIn; m_nBFramePos = !m_nBFramePos; } uint8_t *pDataBuffer = NULL; if (!bFlush && buflen > 0) { if (!m_bInputPadded && (!(m_pAVCtx->active_thread_type & FF_THREAD_FRAME) || m_pParser)) { // Copy bitstream into temporary buffer to ensure overread protection // Verify buffer size if (buflen > m_nFFBufferSize) { m_nFFBufferSize = buflen; m_pFFBuffer = (BYTE *)av_realloc_f(m_pFFBuffer, m_nFFBufferSize + FF_INPUT_BUFFER_PADDING_SIZE, 1); if (!m_pFFBuffer) { m_nFFBufferSize = 0; return E_OUTOFMEMORY; } } memcpy(m_pFFBuffer, buffer, buflen); memset(m_pFFBuffer+buflen, 0, FF_INPUT_BUFFER_PADDING_SIZE); pDataBuffer = m_pFFBuffer; } else { pDataBuffer = (uint8_t *)buffer; } if (m_nCodecId == AV_CODEC_ID_H264) { BOOL bRecovered = m_h264RandomAccess.searchRecoveryPoint(pDataBuffer, buflen); if (!bRecovered) { return S_OK; } } else if (m_nCodecId == AV_CODEC_ID_VP8 && m_bWaitingForKeyFrame) { if (!(pDataBuffer[0] & 1)) { DbgLog((LOG_TRACE, 10, L"::Decode(): Found VP8 key-frame, resuming decoding")); m_bWaitingForKeyFrame = FALSE; } else { return S_OK; } } } while (buflen > 0 || bFlush) { REFERENCE_TIME rtStart = rtStartIn, rtStop = rtStopIn; if (!bFlush) { //设置AVPacket中的数据 avpkt.data = pDataBuffer; avpkt.size = buflen; avpkt.pts = rtStartIn; if (rtStartIn != AV_NOPTS_VALUE 
&& rtStopIn != AV_NOPTS_VALUE) avpkt.duration = (int)(rtStopIn - rtStartIn); else avpkt.duration = 0; avpkt.flags = AV_PKT_FLAG_KEY; if (m_bHasPalette) { m_bHasPalette = FALSE; uint32_t *pal = (uint32_t *)av_packet_new_side_data(&avpkt, AV_PKT_DATA_PALETTE, AVPALETTE_SIZE); int pal_size = FFMIN((1 << m_pAVCtx->bits_per_coded_sample) << 2, m_pAVCtx->extradata_size); uint8_t *pal_src = m_pAVCtx->extradata + m_pAVCtx->extradata_size - pal_size; for (int i = 0; i < pal_size/4; i++) pal[i] = 0xFF<<24 | AV_RL32(pal_src+4*i); } } else { avpkt.data = NULL; avpkt.size = 0; } // Parse the data if a parser is present // This is mandatory for MPEG-1/2 // 不一定需要 if (m_pParser) { BYTE *pOut = NULL; int pOut_size = 0; used_bytes = av_parser_parse2(m_pParser, m_pAVCtx, &pOut, &pOut_size, avpkt.data, avpkt.size, AV_NOPTS_VALUE, AV_NOPTS_VALUE, 0); if (used_bytes == 0 && pOut_size == 0 && !bFlush) { DbgLog((LOG_TRACE, 50, L"::Decode() - could not process buffer, starving?")); break; } // Update start time cache // If more data was read then output, update the cache (incomplete frame) // If output is bigger, a frame was completed, update the actual rtStart with the cached value, and then overwrite the cache if (used_bytes > pOut_size) { if (rtStartIn != AV_NOPTS_VALUE) m_rtStartCache = rtStartIn; } else if (used_bytes == pOut_size || ((used_bytes + 9) == pOut_size)) { // Why +9 above? // Well, apparently there are some broken MKV muxers that like to mux the MPEG-2 PICTURE_START_CODE block (which is 9 bytes) in the package with the previous frame // This would cause the frame timestamps to be delayed by one frame exactly, and cause timestamp reordering to go wrong. // So instead of failing on those samples, lets just assume that 9 bytes are that case exactly. 
m_rtStartCache = rtStartIn = AV_NOPTS_VALUE; } else if (pOut_size > used_bytes) { rtStart = m_rtStartCache; m_rtStartCache = rtStartIn; // The value was used once, don't use it for multiple frames, that ends up in weird timings rtStartIn = AV_NOPTS_VALUE; } bParserFrame = (pOut_size > 0); if (pOut_size > 0 || bFlush) { if (pOut && pOut_size > 0) { if (pOut_size > m_nFFBufferSize2) { m_nFFBufferSize2 = pOut_size; m_pFFBuffer2 = (BYTE *)av_realloc_f(m_pFFBuffer2, m_nFFBufferSize2 + FF_INPUT_BUFFER_PADDING_SIZE, 1); if (!m_pFFBuffer2) { m_nFFBufferSize2 = 0; return E_OUTOFMEMORY; } } memcpy(m_pFFBuffer2, pOut, pOut_size); memset(m_pFFBuffer2+pOut_size, 0, FF_INPUT_BUFFER_PADDING_SIZE); avpkt.data = m_pFFBuffer2; avpkt.size = pOut_size; avpkt.pts = rtStart; avpkt.duration = 0; const uint8_t *eosmarker = CheckForEndOfSequence(m_nCodecId, avpkt.data, avpkt.size, &m_MpegParserState); if (eosmarker) { bEndOfSequence = TRUE; } } else { avpkt.data = NULL; avpkt.size = 0; } //真正的解码 int ret2 = avcodec_decode_video2 (m_pAVCtx, m_pFrame, &got_picture, &avpkt); if (ret2 < 0) { DbgLog((LOG_TRACE, 50, L"::Decode() - decoding failed despite successfull parsing")); got_picture = 0; } } else { got_picture = 0; } } else { used_bytes = avcodec_decode_video2 (m_pAVCtx, m_pFrame, &got_picture, &avpkt); } if (FAILED(PostDecode())) { av_frame_unref(m_pFrame); return E_FAIL; } // Decoding of this frame failed ... oh well! if (used_bytes < 0) { av_frame_unref(m_pFrame); return S_OK; } // When Frame Threading, we won't know how much data has been consumed, so it by default eats everything. // In addition, if no data got consumed, and no picture was extracted, the frame probably isn't all that useufl. // The MJPEB decoder is somewhat buggy and doesn't let us know how much data was consumed really... 
if ((!m_pParser && (m_pAVCtx->active_thread_type & FF_THREAD_FRAME || (!got_picture && used_bytes == 0))) || m_bNoBufferConsumption || bFlush) { buflen = 0; } else { buflen -= used_bytes; pDataBuffer += used_bytes; } // Judge frame usability // This determines if a frame is artifact free and can be delivered // For H264 this does some wicked magic hidden away in the H264RandomAccess class // MPEG-2 and VC-1 just wait for a keyframe.. if (m_nCodecId == AV_CODEC_ID_H264 && (bParserFrame || !m_pParser || got_picture)) { m_h264RandomAccess.judgeFrameUsability(m_pFrame, &got_picture); } else if (m_bResumeAtKeyFrame) { if (m_bWaitingForKeyFrame && got_picture) { if (m_pFrame->key_frame) { DbgLog((LOG_TRACE, 50, L"::Decode() - Found Key-Frame, resuming decoding at %I64d", m_pFrame->pkt_pts)); m_bWaitingForKeyFrame = FALSE; } else { got_picture = 0; } } } // Handle B-frame delay for frame threading codecs if ((m_pAVCtx->active_thread_type & FF_THREAD_FRAME) && m_bBFrameDelay) { m_tcBFrameDelay[m_nBFramePos] = m_tcThreadBuffer[m_CurrentThread]; m_nBFramePos = !m_nBFramePos; } if (!got_picture || !m_pFrame->data[0]) { if (!avpkt.size) bFlush = FALSE; // End flushing, no more frames av_frame_unref(m_pFrame); continue; } /////////////////////////////////////////////////////////////////////////////////////////////// // Determine the proper timestamps for the frame, based on different possible flags. 
/////////////////////////////////////////////////////////////////////////////////////////////// if (m_bFFReordering) { rtStart = m_pFrame->pkt_pts; if (m_pFrame->pkt_duration) rtStop = m_pFrame->pkt_pts + m_pFrame->pkt_duration; else rtStop = AV_NOPTS_VALUE; } else if (m_bBFrameDelay && m_pAVCtx->has_b_frames) { rtStart = m_tcBFrameDelay[m_nBFramePos].rtStart; rtStop = m_tcBFrameDelay[m_nBFramePos].rtStop; } else if (m_pAVCtx->active_thread_type & FF_THREAD_FRAME) { unsigned index = m_CurrentThread; rtStart = m_tcThreadBuffer[index].rtStart; rtStop = m_tcThreadBuffer[index].rtStop; } if (m_bRVDropBFrameTimings && m_pFrame->pict_type == AV_PICTURE_TYPE_B) { rtStart = AV_NOPTS_VALUE; } if (m_bCalculateStopTime) rtStop = AV_NOPTS_VALUE; /////////////////////////////////////////////////////////////////////////////////////////////// // All required values collected, deliver the frame /////////////////////////////////////////////////////////////////////////////////////////////// LAVFrame *pOutFrame = NULL; AllocateFrame(&pOutFrame); AVRational display_aspect_ratio; int64_t num = (int64_t)m_pFrame->sample_aspect_ratio.num * m_pFrame->width; int64_t den = (int64_t)m_pFrame->sample_aspect_ratio.den * m_pFrame->height; av_reduce(&display_aspect_ratio.num, &display_aspect_ratio.den, num, den, 1 << 30); pOutFrame->width = m_pFrame->width; pOutFrame->height = m_pFrame->height; pOutFrame->aspect_ratio = display_aspect_ratio; pOutFrame->repeat = m_pFrame->repeat_pict; pOutFrame->key_frame = m_pFrame->key_frame; pOutFrame->frame_type = av_get_picture_type_char(m_pFrame->pict_type); pOutFrame->ext_format = GetDXVA2ExtendedFlags(m_pAVCtx, m_pFrame); if (m_pFrame->interlaced_frame || (!m_pAVCtx->progressive_sequence && (m_nCodecId == AV_CODEC_ID_H264 || m_nCodecId == AV_CODEC_ID_MPEG2VIDEO))) m_iInterlaced = 1; else if (m_pAVCtx->progressive_sequence) m_iInterlaced = 0; pOutFrame->interlaced = (m_pFrame->interlaced_frame || (m_iInterlaced == 1 && m_pSettings->GetDeinterlacingMode() 
== DeintMode_Aggressive) || m_pSettings->GetDeinterlacingMode() == DeintMode_Force) && !(m_pSettings->GetDeinterlacingMode() == DeintMode_Disable); LAVDeintFieldOrder fo = m_pSettings->GetDeintFieldOrder(); pOutFrame->tff = (fo == DeintFieldOrder_Auto) ? m_pFrame->top_field_first : (fo == DeintFieldOrder_TopFieldFirst); pOutFrame->rtStart = rtStart; pOutFrame->rtStop = rtStop; PixelFormatMapping map = getPixFmtMapping((AVPixelFormat)m_pFrame->format); pOutFrame->format = map.lavpixfmt; pOutFrame->bpp = map.bpp; if (m_nCodecId == AV_CODEC_ID_MPEG2VIDEO || m_nCodecId == AV_CODEC_ID_MPEG1VIDEO) pOutFrame->avgFrameDuration = GetFrameDuration(); if (map.conversion) { ConvertPixFmt(m_pFrame, pOutFrame); } else { for (int i = 0; i < 4; i++) { pOutFrame->data[i] = m_pFrame->data[i]; pOutFrame->stride[i] = m_pFrame->linesize[i]; } pOutFrame->priv_data = av_frame_alloc(); av_frame_ref((AVFrame *)pOutFrame->priv_data, m_pFrame); pOutFrame->destruct = lav_avframe_free; } if (bEndOfSequence) pOutFrame->flags |= LAV_FRAME_FLAG_END_OF_SEQUENCE; if (pOutFrame->format == LAVPixFmt_DXVA2) { pOutFrame->data[0] = m_pFrame->data[4]; HandleDXVA2Frame(pOutFrame); } else { Deliver(pOutFrame); } if (bEndOfSequence) { bEndOfSequence = FALSE; if (pOutFrame->format == LAVPixFmt_DXVA2) { HandleDXVA2Frame(m_pCallback->GetFlushFrame()); } else { Deliver(m_pCallback->GetFlushFrame()); } } if (bFlush) { m_CurrentThread = (m_CurrentThread + 1) % m_pAVCtx->thread_count; } av_frame_unref(m_pFrame); } return S_OK; }

At last, this function shows many of FFmpeg's APIs, structures, and variables, such as the video decoding function avcodec_decode_video2().
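One self-contained step in Decode() is the display aspect ratio: the sample (pixel) aspect ratio is multiplied by width over height and the fraction is then reduced. The sketch below reproduces that arithmetic with std::gcd. Rational and display_aspect_ratio are hypothetical names, and unlike av_reduce() this version does not clamp the result to a maximum value.

```cpp
#include <cstdint>
#include <numeric>

// Hypothetical rational type; FFmpeg's own type is AVRational.
struct Rational {
    std::int64_t num;
    std::int64_t den;
};

// Display aspect ratio = sample aspect ratio * (width / height), reduced.
Rational display_aspect_ratio(Rational sar, int width, int height) {
    std::int64_t num = sar.num * width;
    std::int64_t den = sar.den * height;
    std::int64_t g = std::gcd(num, den);
    if (g == 0) return {0, 1};  // both terms are zero: no usable ratio
    return {num / g, den / g};
}
```

For example, a 720x576 frame with a 16:15 sample aspect ratio gives 11520/8640, which reduces to 4:3.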

The decoder initialization function, InitDecoder():

    // Create the decoder
    STDMETHODIMP CDecAvcodec::InitDecoder(AVCodecID codec, const CMediaType *pmt)
    {
      // If a decoder already exists, destroy it first
      DestroyDecoder();
      DbgLog((LOG_TRACE, 10, L"Initializing ffmpeg for codec %S", avcodec_get_name(codec)));

      BITMAPINFOHEADER *pBMI = NULL;
      videoFormatTypeHandler((const BYTE *)pmt->Format(), pmt->FormatType(), &pBMI);

      // Find the decoder
      m_pAVCodec = avcodec_find_decoder(codec);
      CheckPointer(m_pAVCodec, VFW_E_UNSUPPORTED_VIDEO);

      // Allocate the codec context
      m_pAVCtx = avcodec_alloc_context3(m_pAVCodec);
      CheckPointer(m_pAVCtx, E_POINTER);

      if(codec == AV_CODEC_ID_MPEG1VIDEO || codec == AV_CODEC_ID_MPEG2VIDEO
        || pmt->subtype == FOURCCMap(MKTAG('H','2','6','4'))
        || pmt->subtype == FOURCCMap(MKTAG('h','2','6','4'))) {
        m_pParser = av_parser_init(codec);
      }

      DWORD dwDecFlags = m_pCallback->GetDecodeFlags();

      LONG biRealWidth = pBMI->biWidth, biRealHeight = pBMI->biHeight;
      if (pmt->formattype == FORMAT_VideoInfo || pmt->formattype == FORMAT_MPEGVideo) {
        VIDEOINFOHEADER *vih = (VIDEOINFOHEADER *)pmt->Format();
        if (vih->rcTarget.right != 0 && vih->rcTarget.bottom != 0) {
          biRealWidth  = vih->rcTarget.right;
          biRealHeight = vih->rcTarget.bottom;
        }
      } else if (pmt->formattype == FORMAT_VideoInfo2 || pmt->formattype == FORMAT_MPEG2Video) {
        VIDEOINFOHEADER2 *vih2 = (VIDEOINFOHEADER2 *)pmt->Format();
        if (vih2->rcTarget.right != 0 && vih2->rcTarget.bottom != 0) {
          biRealWidth  = vih2->rcTarget.right;
          biRealHeight = vih2->rcTarget.bottom;
        }
      }

      // Fill in the codec context fields
      m_pAVCtx->codec_id              = codec;
      m_pAVCtx->codec_tag             = pBMI->biCompression;
      m_pAVCtx->coded_width           = pBMI->biWidth;
      m_pAVCtx->coded_height          = abs(pBMI->biHeight);
      m_pAVCtx->bits_per_coded_sample = pBMI->biBitCount;
      m_pAVCtx->error_concealment     = FF_EC_GUESS_MVS | FF_EC_DEBLOCK;
      m_pAVCtx->err_recognition       = AV_EF_CAREFUL;
      m_pAVCtx->workaround_bugs       = FF_BUG_AUTODETECT;
      m_pAVCtx->refcounted_frames     = 1;

      if (codec == AV_CODEC_ID_H264)
        m_pAVCtx->flags2 |= CODEC_FLAG2_SHOW_ALL;

      // Setup threading
      int thread_type = getThreadFlags(codec);
      if (thread_type) {
        // Thread Count. 0 = auto detect
        int thread_count = m_pSettings->GetNumThreads();
        if (thread_count == 0) {
          thread_count = av_cpu_count() * 3 / 2;
        }
        m_pAVCtx->thread_count = max(1, min(thread_count, AVCODEC_MAX_THREADS));
        m_pAVCtx->thread_type = thread_type;
      } else {
        m_pAVCtx->thread_count = 1;
      }

      if (dwDecFlags & LAV_VIDEO_DEC_FLAG_NO_MT) {
        m_pAVCtx->thread_count = 1;
      }

      // Allocate the AVFrame
      m_pFrame = av_frame_alloc();
      CheckPointer(m_pFrame, E_POINTER);

      m_h264RandomAccess.SetAVCNALSize(0);

      // Process Extradata
      BYTE *extra = NULL;
      size_t extralen = 0;
      getExtraData(*pmt, NULL, &extralen);

      BOOL bH264avc = FALSE;
      if (extralen > 0) {
        DbgLog((LOG_TRACE, 10, L"-> Processing extradata of %d bytes", extralen));
        // Reconstruct AVC1 extradata format
        if (pmt->formattype == FORMAT_MPEG2Video
          && (m_pAVCtx->codec_tag == MAKEFOURCC('a','v','c','1')
           || m_pAVCtx->codec_tag == MAKEFOURCC('A','V','C','1')
           || m_pAVCtx->codec_tag == MAKEFOURCC('C','C','V','1'))) {
          MPEG2VIDEOINFO *mp2vi = (MPEG2VIDEOINFO *)pmt->Format();
          extralen += 7;
          extra = (uint8_t *)av_mallocz(extralen + FF_INPUT_BUFFER_PADDING_SIZE);
          extra[0] = 1;
          extra[1] = (BYTE)mp2vi->dwProfile;
          extra[2] = 0;
          extra[3] = (BYTE)mp2vi->dwLevel;
          extra[4] = (BYTE)(mp2vi->dwFlags ? mp2vi->dwFlags : 4) - 1;

          // Actually copy the metadata into our new buffer
          size_t actual_len;
          getExtraData(*pmt, extra+6, &actual_len);

          // Count the number of SPS/PPS in them and set the length
          // We'll put them all into one block and add a second block with 0 elements afterwards
          // The parsing logic does not care what type they are, it just expects 2 blocks.
          BYTE *p = extra+6, *end = extra+6+actual_len;
          BOOL bSPS = FALSE, bPPS = FALSE;
          int count = 0;
          while (p+1 < end) {
            unsigned len = (((unsigned)p[0] << 8) | p[1]) + 2;
            if (p + len > end) {
              break;
            }
            if ((p[2] & 0x1F) == 7)
              bSPS = TRUE;
            if ((p[2] & 0x1F) == 8)
              bPPS = TRUE;
            count++;
            p += len;
          }
          extra[5] = count;
          extra[extralen-1] = 0;

          bH264avc = TRUE;
          m_h264RandomAccess.SetAVCNALSize(mp2vi->dwFlags);
        } else if (pmt->subtype == MEDIASUBTYPE_LAV_RAWVIDEO) {
          if (extralen < sizeof(m_pAVCtx->pix_fmt)) {
            DbgLog((LOG_TRACE, 10, L"-> LAV RAW Video extradata is missing.."));
          } else {
            extra = (uint8_t *)av_mallocz(extralen + FF_INPUT_BUFFER_PADDING_SIZE);
            getExtraData(*pmt, extra, NULL);
            m_pAVCtx->pix_fmt = *(AVPixelFormat *)extra;
            extralen -= sizeof(AVPixelFormat);
            memmove(extra, extra+sizeof(AVPixelFormat), extralen);
          }
        } else {
          // Just copy extradata for other formats
          extra = (uint8_t *)av_mallocz(extralen + FF_INPUT_BUFFER_PADDING_SIZE);
          getExtraData(*pmt, extra, NULL);
        }
        // Hack to discard invalid MP4 metadata with AnnexB style video
        if (codec == AV_CODEC_ID_H264 && !bH264avc && extra[0] == 1) {
          av_freep(&extra);
          extralen = 0;
        }
        m_pAVCtx->extradata = extra;
        m_pAVCtx->extradata_size = (int)extralen;
      } else {
        if (codec == AV_CODEC_ID_VP6 || codec == AV_CODEC_ID_VP6A || codec == AV_CODEC_ID_VP6F) {
          int cropH = pBMI->biWidth - biRealWidth;
          int cropV = pBMI->biHeight - biRealHeight;
          if (cropH >= 0 && cropH <= 0x0f && cropV >= 0 && cropV <= 0x0f) {
            m_pAVCtx->extradata = (uint8_t *)av_mallocz(1 + FF_INPUT_BUFFER_PADDING_SIZE);
            m_pAVCtx->extradata_size = 1;
            m_pAVCtx->extradata[0] = (cropH << 4) | cropV;
          }
        }
      }

      m_h264RandomAccess.flush(m_pAVCtx->thread_count);
      m_CurrentThread = 0;
      m_rtStartCache = AV_NOPTS_VALUE;

      LAVPinInfo lavPinInfo = {0};
      BOOL bLAVInfoValid = SUCCEEDED(m_pCallback->GetLAVPinInfo(lavPinInfo));

      m_bInputPadded = dwDecFlags & LAV_VIDEO_DEC_FLAG_LAVSPLITTER;

      // Setup codec-specific timing logic
      BOOL bVC1IsPTS = (codec == AV_CODEC_ID_VC1 && !(dwDecFlags & LAV_VIDEO_DEC_FLAG_VC1_DTS));

      // Use ffmpegs logic to reorder timestamps
      // This is required for H264 content (except AVI), and generally all codecs that use frame threading
      // VC-1 is also a special case. Its required for splitters that deliver PTS timestamps (see bVC1IsPTS above)
      m_bFFReordering        = ( codec == AV_CODEC_ID_H264 && !(dwDecFlags & LAV_VIDEO_DEC_FLAG_H264_AVI))
                               || codec == AV_CODEC_ID_VP8
                               || codec == AV_CODEC_ID_VP3
                               || codec == AV_CODEC_ID_THEORA
                               || codec == AV_CODEC_ID_HUFFYUV
                               || codec == AV_CODEC_ID_FFVHUFF
                               || codec == AV_CODEC_ID_MPEG2VIDEO
                               || codec == AV_CODEC_ID_MPEG1VIDEO
                               || codec == AV_CODEC_ID_DIRAC
                               || codec == AV_CODEC_ID_UTVIDEO
                               || codec == AV_CODEC_ID_DNXHD
                               || codec == AV_CODEC_ID_JPEG2000
                               || (codec == AV_CODEC_ID_MPEG4 && pmt->formattype == FORMAT_MPEG2Video)
                               || bVC1IsPTS;

      // Stop time is unreliable, drop it and calculate it
      m_bCalculateStopTime   = (codec == AV_CODEC_ID_H264
                               || codec == AV_CODEC_ID_DIRAC
                               || (codec == AV_CODEC_ID_MPEG4 && pmt->formattype == FORMAT_MPEG2Video)
                               || bVC1IsPTS);

      // Real Video content has some odd timestamps
      // LAV Splitter does them allright with RV30/RV40, everything else screws them up
      m_bRVDropBFrameTimings = (codec == AV_CODEC_ID_RV10
                               || codec == AV_CODEC_ID_RV20
                               || ((codec == AV_CODEC_ID_RV30 || codec == AV_CODEC_ID_RV40)
                                   && (!(dwDecFlags & LAV_VIDEO_DEC_FLAG_LAVSPLITTER)
                                       || (bLAVInfoValid && (lavPinInfo.flags & LAV_STREAM_FLAG_RV34_MKV)))));

      // Enable B-Frame delay handling
      m_bBFrameDelay = !m_bFFReordering && !m_bRVDropBFrameTimings;

      m_bWaitingForKeyFrame = TRUE;
      m_bResumeAtKeyFrame    = codec == AV_CODEC_ID_MPEG2VIDEO
                               || codec == AV_CODEC_ID_VC1
                               || codec == AV_CODEC_ID_RV30
                               || codec == AV_CODEC_ID_RV40
                               || codec == AV_CODEC_ID_VP3
                               || codec == AV_CODEC_ID_THEORA
                               || codec == AV_CODEC_ID_MPEG4;

      m_bNoBufferConsumption = codec == AV_CODEC_ID_MJPEGB
                               || codec == AV_CODEC_ID_LOCO
                               || codec == AV_CODEC_ID_JPEG2000;

      m_bHasPalette = m_pAVCtx->bits_per_coded_sample <= 8
                      && m_pAVCtx->extradata_size
                      && !(dwDecFlags & LAV_VIDEO_DEC_FLAG_LAVSPLITTER)
                      && (codec == AV_CODEC_ID_MSVIDEO1
                       || codec == AV_CODEC_ID_MSRLE
                       || codec == AV_CODEC_ID_CINEPAK
                       || codec == AV_CODEC_ID_8BPS
                       || codec == AV_CODEC_ID_QPEG
                       || codec == AV_CODEC_ID_QTRLE
                       || codec == AV_CODEC_ID_TSCC);

      if (FAILED(AdditionaDecoderInit())) {
        return E_FAIL;
      }

      if (bLAVInfoValid) {
        // Setting has_b_frames to a proper value will ensure smoother decoding of H264
        if (lavPinInfo.has_b_frames >= 0) {
          DbgLog((LOG_TRACE, 10, L"-> Setting has_b_frames to %d", lavPinInfo.has_b_frames));
          m_pAVCtx->has_b_frames = lavPinInfo.has_b_frames;
        }
      }

      // Open the decoder
      int ret = avcodec_open2(m_pAVCtx, m_pAVCodec, NULL);
      if (ret >= 0) {
        DbgLog((LOG_TRACE, 10, L"-> ffmpeg codec opened successfully (ret: %d)", ret));
        m_nCodecId = codec;
      } else {
        DbgLog((LOG_TRACE, 10, L"-> ffmpeg codec failed to open (ret: %d)", ret));
        DestroyDecoder();
        return VFW_E_UNSUPPORTED_VIDEO;
      }

      m_iInterlaced = 0;
      for (int i = 0; i < countof(ff_interlace_capable); i++) {
        if (codec == ff_interlace_capable[i]) {
          m_iInterlaced = -1;
          break;
        }
      }

      // Detect chroma and interlaced
      if (m_pAVCtx->extradata && m_pAVCtx->extradata_size) {
        if (codec == AV_CODEC_ID_MPEG2VIDEO) {
          CMPEG2HeaderParser mpeg2Parser(extra, extralen);
          if (mpeg2Parser.hdr.valid) {
            if (mpeg2Parser.hdr.chroma < 2) {
              m_pAVCtx->pix_fmt = AV_PIX_FMT_YUV420P;
            } else if (mpeg2Parser.hdr.chroma == 2) {
              m_pAVCtx->pix_fmt = AV_PIX_FMT_YUV422P;
            }
            m_iInterlaced = mpeg2Parser.hdr.interlaced;
          }
        } else if (codec == AV_CODEC_ID_H264) {
          CH264SequenceParser h264parser;
          if (bH264avc)
            h264parser.ParseNALs(extra+6, extralen-6, 2);
          else
            h264parser.ParseNALs(extra, extralen, 0);
          if (h264parser.sps.valid)
            m_iInterlaced = h264parser.sps.interlaced;
        } else if (codec == AV_CODEC_ID_VC1) {
          CVC1HeaderParser vc1parser(extra, extralen);
          if (vc1parser.hdr.valid)
            m_iInterlaced = (vc1parser.hdr.interlaced ? -1 : 0);
        }
      }

      if (codec == AV_CODEC_ID_DNXHD)
        m_pAVCtx->pix_fmt = AV_PIX_FMT_YUV422P10;
      else if (codec == AV_CODEC_ID_FRAPS)
        m_pAVCtx->pix_fmt = AV_PIX_FMT_BGR24;

      if (bLAVInfoValid && codec != AV_CODEC_ID_FRAPS && m_pAVCtx->pix_fmt != AV_PIX_FMT_DXVA2_VLD)
        m_pAVCtx->pix_fmt = lavPinInfo.pix_fmt;

      DbgLog((LOG_TRACE, 10, L"AVCodec init successfull. interlaced: %d", m_iInterlaced));

      return S_OK;
    }
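The AVC1 branch of InitDecoder() is probably the least obvious part of the listing, so here is a minimal standalone sketch of it. It assumes the sequence header arrives as a run of NAL units, each prefixed by a 2-byte big-endian length, which is what the counting loop above expects. The names NalScan, scanLengthPrefixedNals and buildAvcExtradata are hypothetical and do not exist in LAV Filters. The sketch also adds a guard against empty NAL units that the original loop does not have.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Result of walking a run of 2-byte-length-prefixed NAL units (hypothetical type).
struct NalScan {
    int  count  = 0;      // complete NAL units found
    bool hasSPS = false;  // saw NAL type 7
    bool hasPPS = false;  // saw NAL type 8
};

// Mirrors the counting loop in InitDecoder(): stop at the first truncated entry.
NalScan scanLengthPrefixedNals(const std::uint8_t* data, std::size_t size) {
    NalScan r;
    std::size_t pos = 0;
    while (pos + 1 < size) {
        std::size_t len = ((std::size_t(data[pos]) << 8) | data[pos + 1]) + 2;
        if (pos + len > size) break;            // truncated entry
        if (len > 2) {                          // guard added in this sketch only
            unsigned type = data[pos + 2] & 0x1F;
            if (type == 7) r.hasSPS = true;
            if (type == 8) r.hasPPS = true;
        }
        ++r.count;
        pos += len;
    }
    return r;
}

// Builds the 6-byte header, the NAL data, and one trailing zero byte,
// matching the "extralen += 7" layout in the listing.
std::vector<std::uint8_t> buildAvcExtradata(const std::vector<std::uint8_t>& nals,
                                            std::uint8_t profile,
                                            std::uint8_t level,
                                            unsigned nalLengthSize) {
    std::vector<std::uint8_t> out(6 + nals.size() + 1, 0);
    out[0] = 1;                                              // version
    out[1] = profile;
    out[2] = 0;
    out[3] = level;
    out[4] = std::uint8_t((nalLengthSize ? nalLengthSize : 4) - 1);
    std::copy(nals.begin(), nals.end(), out.begin() + 6);
    out[5] = std::uint8_t(scanLengthPrefixedNals(nals.data(), nals.size()).count);
    // out.back() stays 0: the second block with zero entries
    return out;
}
```

Given one SPS and one PPS of two bytes each, buildAvcExtradata() returns 15 bytes whose sixth byte is 2. All units go into the first block regardless of type, which matches the comment in the listing that the parsing logic only expects two blocks.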

Decoder teardown function: DestroyDecoder()

    // Destroy the decoder and free everything it owns
    STDMETHODIMP CDecAvcodec::DestroyDecoder()
    {
      DbgLog((LOG_TRACE, 10, L"Shutting down ffmpeg..."));
      m_pAVCodec = NULL;

      if (m_pParser) {
        av_parser_close(m_pParser);
        m_pParser = NULL;
      }

      if (m_pAVCtx) {
        avcodec_close(m_pAVCtx);
        av_freep(&m_pAVCtx->extradata);
        av_freep(&m_pAVCtx);
      }
      av_frame_free(&m_pFrame);

      av_freep(&m_pFFBuffer);
      m_nFFBufferSize = 0;

      av_freep(&m_pFFBuffer2);
      m_nFFBufferSize2 = 0;

      if (m_pSwsContext) {
        sws_freeContext(m_pSwsContext);
        m_pSwsContext = NULL;
      }

      m_nCodecId = AV_CODEC_ID_NONE;

      return S_OK;
    }
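DestroyDecoder() can be called at any time, including at the start of InitDecoder() and on a decoder that was never opened, because every pointer is reset to NULL once it has been released. The sketch below shows that pattern in isolation, under simplifying assumptions: Decoder is a hypothetical class that owns a single buffer, and freep() is a stand-in written for this example that imitates the free-and-null behaviour of av_freep(). No FFmpeg calls are made.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Stand-in for av_freep(): free *p and reset it to nullptr (written for this sketch).
template <typename T>
void freep(T** p) {
    std::free(*p);   // free(nullptr) is a no-op
    *p = nullptr;
}

// Hypothetical decoder that owns one buffer.
class Decoder {
public:
    Decoder() = default;
    Decoder(const Decoder&) = delete;             // avoid double frees via copies
    Decoder& operator=(const Decoder&) = delete;
    ~Decoder() { Destroy(); }

    bool Init(std::size_t bufferSize) {
        Destroy();                                // drop old state first, as InitDecoder() does
        m_buffer = static_cast<unsigned char*>(std::calloc(bufferSize, 1));
        if (!m_buffer) return false;
        m_size = bufferSize;
        return true;
    }

    void Destroy() {
        freep(&m_buffer);                         // safe to repeat: pointer is null afterwards
        m_size = 0;
    }

    bool IsOpen() const { return m_buffer != nullptr; }
    std::size_t Size() const { return m_size; }

private:
    unsigned char* m_buffer = nullptr;
    std::size_t    m_size   = 0;
};
```

Calling Init() twice in a row does not leak the first buffer, and calling Destroy() twice is harmless. That is the property InitDecoder() relies on when it calls DestroyDecoder() both on entry and after a failed avcodec_open2().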