VMware Lab setup - A virtualized lab for testing HA and DRS

VMware vSphere offers some extremely powerful virtualization technology for businesses and enterprises. If you are new to the technology, or to virtualization in general, and want to set up a lab or test environment to try it out, the task can be quite daunting. You could install one of VMware’s hypervisors on a single server and run a few Virtual Machines (VMs) from local storage to get started, but that way you don’t get to see the really cool features vSphere has to offer. High Availability for your Virtual Machines, Distributed Resource Scheduling to manage resources, and the energy efficiency gains that become apparent once you run many VMs on a handful of hosts are just a few of the features a fully configured vSphere vCenter environment can offer.

To set up a vSphere cluster, the bare essentials you will need are:

- at least two host servers (hypervisors),

- shared storage,

- a Windows domain and

- a vCenter server to link everything together.

This would normally be quite a bit of physical hardware to source for a test lab or demo; however, it is possible to create all of this as a “nested” virtualized setup (running Virtual Machines inside other Virtual Machines), all on top of a normal Windows host PC.

In this article, we’ll go through setting up a VMware lab environment, all hosted and run off one PC. As this is a lab environment, you should note that this will in no way be tuned for performance. To even begin tuning this for performance, you would need dedicated hypervisors, shared storage (Fibre Channel or iSCSI SAN) and networking equipment – as you would use in a production environment.

Once we have our lab environment set up, you’ll be able to create VMs, use vMotion to migrate them across your Host servers with zero downtime, as well as test VMware HA (High Availability) and DRS (Distributed Resource Scheduler) – two of the great features I mentioned above as being some of the product’s strongest selling points.

Prerequisites

This is a list of what we need (and what we will be creating in terms of VMs) to satisfy our requirements for a fully functional lab environment.

- The Physical Host PC:

- At least a dual core processor with either AMD-V or Intel VT virtualization technology support.

- 8 GB RAM minimum – preferably 10 to 12 GB.

- VMware Workstation 7.x.

- A 64-bit operating system that supports VMware Workstation (Windows 7, for example).

- Enough hard disk space to host all of our VMs (I would estimate this at around 100 GB).

- The VMs we will create and run in VMware Workstation on the Physical Host PC:

- ESXi VM #1 (Hypervisor)

- ESXi VM #2 (Hypervisor)

- Windows Server 2003 or 2008 Domain Controller

- Windows Server 2003 x64, 2008 x64, or 2008 R2 x64 vCenter server

- Shared Storage VM (I use the excellent, open-source FreeNAS)

As you can see, we do need a fairly beefy host PC, as it will be running everything in our lab! The main requirements are that the CPU supports the necessary virtualization features and that the machine has a lot of RAM. For VMware Workstation, you could use a 30-day trial version, but a license is not very expensive and is very useful to have for other projects. A free alternative may be VMware Server 2.0, although I have not tested this myself.
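Adding up the VM specifications given later in this guide shows where the RAM and disk requirements come from. The sketch below is plain Python arithmetic, not a VMware tool. It uses the Windows Server 2008 figures for the DC, and the FreeNAS sizes are assumptions because this guide does not list them.

```python
"""Rough resource budget for the nested vSphere lab (a sketch, not a sizing tool)."""

# Assumed values: this guide does not give FreeNAS VM sizing.
FREENAS_RAM_MB = 512   # assumption
FREENAS_DISK_GB = 8    # assumption

LAB_VMS = {
    # name: (RAM in MB, provisioned disk in GB), from the specs in this guide
    "dc (Windows Server 2008)": (1024, 12),
    "esxi01": (2560, 40),
    "esxi02": (2560, 40),
    "vcenter": (2048, 40),
    "freenas": (FREENAS_RAM_MB, FREENAS_DISK_GB),
}


def budget(vms):
    """Return (total guest RAM in MB, total provisioned disk in GB)."""
    ram = sum(ram_mb for ram_mb, _ in vms.values())
    disk = sum(disk_gb for _, disk_gb in vms.values())
    return ram, disk


ram_mb, disk_gb = budget(LAB_VMS)
print(f"Guest RAM: {ram_mb} MB ({ram_mb / 1024:.1f} GB)")
print(f"Provisioned disk: {disk_gb} GB")
```

With these numbers the guests alone need 8,704 MB (8.5 GB) of RAM before the host OS and Workstation take their share, which is consistent with the 10 to 12 GB recommendation. The 140 GB figure is the provisioned maximum; thin-provisioned disks only grow as data is written to them.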

The first two VMs we create will have VMware ESXi 4.1 installed on them. You can register for and download a 60-day trial of ESXi from VMware’s website. The next machine we need is our Windows Server 2003 or 2008 Domain Controller, with the Active Directory and DNS Server roles installed on it. Then we need a VM to run VMware vCenter Server. This is the server that provides unified management for all the host servers and VMs in our environment. It also allows us to view performance metrics for all managed objects, and to automate our environment with features like DRS and HA. Lastly, we need shared storage that both of our ESXi hosts can see. In this lab environment we can use something simple like FreeNAS or OpenFiler, two open-source solutions that allow us to create and share NFS or iSCSI storage. In this article we will opt for FreeNAS and use it to provide NFS storage to our two ESXi hosts.

For networking, I will be using VMware Workstation’s option to “Bridge” each VM’s network adapter to the physical network (i.e. the network your physical host PC is using). Therefore all vSphere host, management and storage networking will be on the same physical network. Note that this is definitely not recommended in a production environment, for both performance and reliability reasons, but as this is just a lab setup, it won’t be a problem for us.

Installation

Start off by installing VMware Workstation on your host PC. Once this is running, use the New Virtual Machine wizard to create the following VMs.

- Windows Server Domain Controller. I used an existing Domain Controller VM I already had. If you don’t already have one for your lab environment, create a new Windows Server 2003 or 2008 VM, put it on the same network as your host PC (all your VMs will be on this network) and install the Domain Controller role using dcpromo. You will also need something to manage DNS, so make sure you also install the DNS Server role. Specifications for the VM can be as follows:

Windows Server 2003 version:

- 1 vCPU

- 192 MB RAM

- 8 GB Hard drive

- Guest operating system – Windows Server 2003 (choose 32 or 64 bit depending on your OS)

Windows Server 2008 version:

- 1 vCPU

- 1 GB RAM

- 12 GB Hard drive

- Guest operating system – Windows Server 2008 (choose 32 or 64 bit depending on your OS)

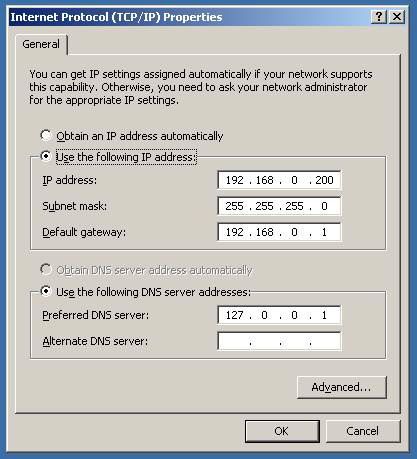

To keep this lab nice and simple, your domain controller should be on the same network as all of your other VMs, so assign it a static IP address on your local physical network. My lab network shares the local physical network at home with my other PCs and equipment (I simply bridge all VMs to the physical network in VMware Workstation). I therefore set up my DC with an IP address of 192.168.0.200 and a subnet mask of 255.255.255.0, and pointed the default gateway at my router. DNS is handled by the same server through its DNS role, so once that role is installed, the DC’s DNS server is set to localhost (127.0.0.1).

Figure 1: IP settings for the Domain Controller

When running dcpromo, you can choose most of the default options to set up a simple lab domain controller. Here is a guide I did a while back on creating a set of Domain Controllers for use as lab DCs. Note that you only really need one DC here as we are not going for high availability, so follow the steps through to create a primary DC. For my lab I used the following basic settings:

- Server / host name of VM – your choice

- Domain in a New Forest

- Full DNS name for new domain: noobs.local

- Domain NetBIOS name: NOOBS

- Install and configure the DNS server on this computer, and set this computer to use the DNS server as its preferred DNS server.

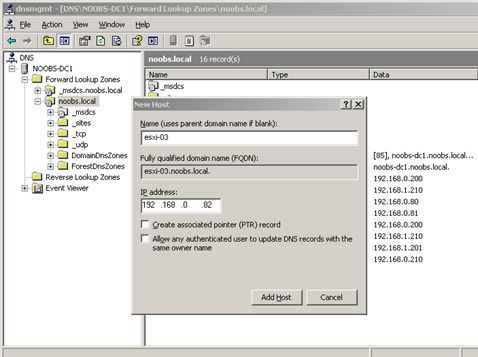

After setting up your DC, open up DNS Management and get your two A (Host name) records created for your future ESXi hosts. Use these FQDN hostnames when you configure your ESXi host DNS names later on using the ESXi console networking configuration.

Figure 2: Creating A records for our two ESXi hosts on the DNS Server.

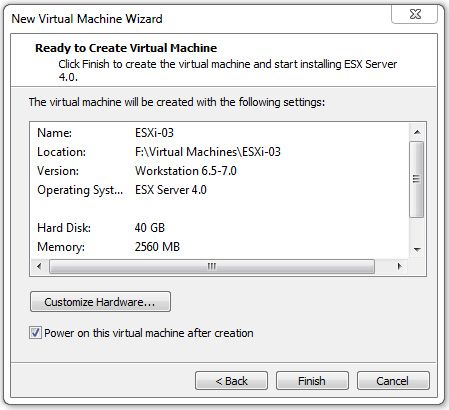

- 2 x ESXi 4.1 Hosts. These hosts will be our “workhorses”: they will be managed by vCenter Server and will be the hypervisors that run our nested Virtual Machines. HA and DRS will look after these two hosts, restarting Virtual Machines on the surviving host if one were to fail and distributing resources between the two based on their individual workloads. Note that the minimum amount of RAM required for ESXi is 2 GB, but the HA agent that gets installed on each host is likely to fail to initialize if we don’t have at least 2.5 GB RAM per host. In a production environment, these hosts would be physical rack-mounted servers or blade servers. Create two of these VMs using the new custom VM wizard and give each ESXi host the following specifications:

- 1 vCPU

- 2560 MB RAM (More if you can, as each VM that the host runs requires RAM from the ESXi’s pool of RAM)

- 40 GB Hard drive (Thin provisioned)

- Networking – use bridged networking

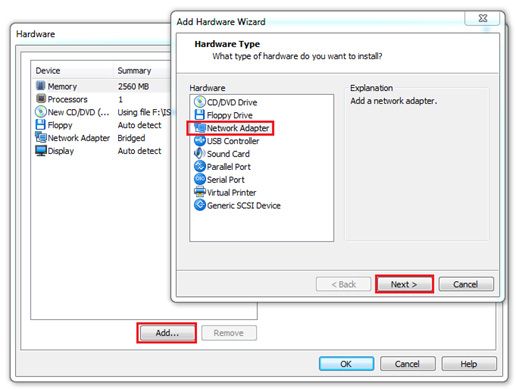

- 1 x extra vNIC (Network adapter).

- Guest operating system – VMware ESX

Don’t forget to add the additional NIC using Bridged mode – this is so that we can simulate management network redundancy (and therefore not get an annoying warning in vCenter about not having this in place). It won’t be true redundancy, but will at least keep vCenter happy. Before completing the wizard, choose the “Customize Hardware” button to add this extra NIC. Here is a screenshot of what your VM settings should look like, followed by a screenshot showing the second NIC being added.

Figure 3: Settings summary for your ESXi VMs

Figure 4: Adding an extra NIC to each ESXi host VM.

For the installation ISO, download the 60-day trial of ESXi from VMware’s website in ISO format. You’ll need to register a new account with VMware if you don’t already have one. You may also receive a free product key for ESXi; don’t use it when setting up our ESXi hosts, as we want to keep the full functionality that the trial gives us by leaving the hosts in 60-day trial mode. Specify the installation ISO in the CD/DVD Drive properties of the ESXi host VM, or when the new VM wizard asks for an installation disc.

Power up the host VM and follow the prompts of the ESXi installer. Whilst there are some best practises you can follow and specific steps you can take when installing hosts in a production environment, for our lab environment we can simply step through the installation wizard, leaving all options at their defaults. After the installation and a reboot, you should be greeted by the familiar “yellow” ESXi console screen. The default password for the user “root” is blank (i.e. empty). Press F2 and log in to the configuration page with these credentials.

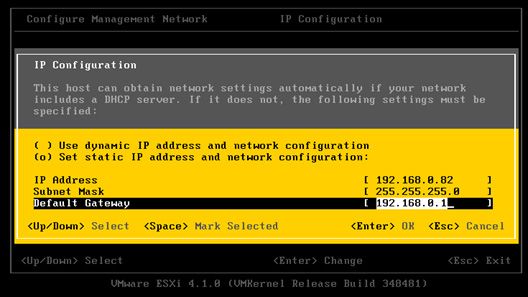

First things first, let’s change the root password from the default blank to something else (Configure Password). After this, navigate to the “Configure Management Network” option, and then choose “IP Configuration”. Your host should be bridged onto your physical network and has probably picked up an IP address from your local DHCP server. Change this to a static IP address on your local network, and specify your default gateway.

Figure 5: Setting a static IP address for ESXi on your network.

Now, go to “DNS Configuration” and ensure your DNS server(s) are specified. Change your hostname to the same name that you created A records for on your Windows Server DNS (ensure the IP you chose for your host is the same as specified for your A record too). Press Enter, then ESC and when prompted, choose to restart the management network to apply changes.

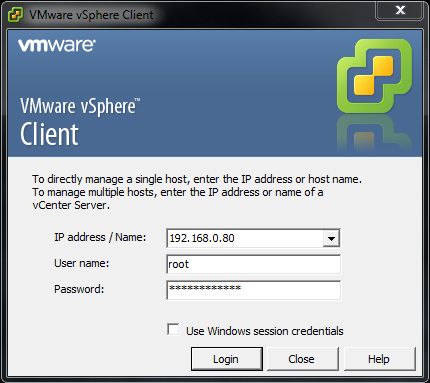

Follow the same procedure above for your second host, choosing a different static IP and hostname on the same network this time, but keeping all the other options the same. Remember the FQDN host name records for each ESXi host we created in DNS Management on our DNS server earlier? Well, we’ll now test both of these A records by pinging the hostnames of our ESXi servers from your Windows Server Domain Controller to ensure they both resolve to their respective IP addresses. Remember that we are keeping all of our VMs on the same local subnet. In my lab, I used IP addresses of 192.168.0.x with a subnet mask of 255.255.255.0 for all my VMs. Therefore my ESXi FQDN host names in DNS resolved to 192.168.0.80 and 192.168.0.81.
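If you have Python available on a machine that uses the DC for DNS, the same check can be scripted. This is a minimal sketch using only the standard library. The ESXi hostnames are hypothetical placeholders (the guide does not give its own), while the IP addresses and the 255.255.255.0 mask are the ones used above.

```python
"""Check that each lab hostname resolves to the expected IP on the lab subnet."""
import ipaddress
import socket

LAB_SUBNET = ipaddress.ip_network("192.168.0.0/24")  # mask 255.255.255.0

EXPECTED = {
    "esxi01.noobs.local": "192.168.0.80",  # hypothetical hostname
    "esxi02.noobs.local": "192.168.0.81",  # hypothetical hostname
}


def check_records(expected, resolve=socket.gethostbyname):
    """Return a list of problems; an empty list means every record checks out."""
    problems = []
    for name, want in expected.items():
        if ipaddress.ip_address(want) not in LAB_SUBNET:
            problems.append(f"{name}: {want} is outside {LAB_SUBNET}")
        try:
            got = resolve(name)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            problems.append(f"{name}: does not resolve ({exc})")
            continue
        if got != want:
            problems.append(f"{name}: resolves to {got}, expected {want}")
    return problems
```

Run `print(check_records(EXPECTED))`; an empty list means every name resolved to the address you expected. The `resolve` parameter is there only so the check can be exercised without a live DNS server.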

- FreeNAS VM (shared storage). Now we need to set up the shared storage that both ESXi hosts must have access to in order to use clustering capabilities such as HA and DRS.

You can follow this guide to download a preconfigured FreeNAS VM for VMware Workstation and get everything you need for your NFS shares set up. It also has a section near the end that demonstrates adding the shared storage to each ESXi host. Once you have downloaded the FreeNAS VM as explained in that guide, just open the “.vmx” file that comes with the download in VMware Workstation to get going.

- vCenter VM. This VM requires a 64-bit Windows Server 2003 or 2008 / R2 guest operating system, so set up a VM with one of these and add it to your Windows domain. Once you are ready to begin, register for and download the vSphere vCenter Server software from VMware. If it is in ISO format, attach it to your VM by connecting it in the VM’s settings in Workstation. Start the installer and, again, follow through with the default options, as this is just a lab setup. Make sure you select “Create a standalone server instance” for the vCenter installation. When you reach the Database section of the setup, choose the default of a SQL Server Express instance. This is fine for smaller deployments of ESX/ESXi hosts and vCenter; larger production environments would usually use SQL Server Standard or Enterprise, or an Oracle database, on a dedicated database server. Complete the setup and restart the VM afterwards. Specifications for the VM can be as follows:

- 1 vCPU

- 2 GB RAM

- 40 GB Hard drive (Thin provisioned)

- Guest operating system – choose the appropriate Windows OS here.

- Individual ESXi host configurations. Now we need to configure each ESXi host using the vSphere client from our host PC. The two hosts should match each other identically in terms of setup so that HA and DRS work well between them. In a production environment you would typically use a feature called “Host Profiles” to capture a baseline configuration and then easily provision further host servers from that profile (Host Profiles require a vSphere Enterprise Plus license). However, we only have two hosts here, so we’ll configure them manually.

Open a web browser on your local PC and browse to the IP address of one of your ESXi hosts using the https:// prefix. You’ll get to a banner page, which should offer you a download of the vSphere client for Windows. Download and install this on your local host PC. Run the vSphere client and log in to your first ESXi host using the root credentials you configured earlier, accepting the security certificate warning you get when you click Login.

Figure 6: Specify the IP address of the ESXi host you are connecting to and login.

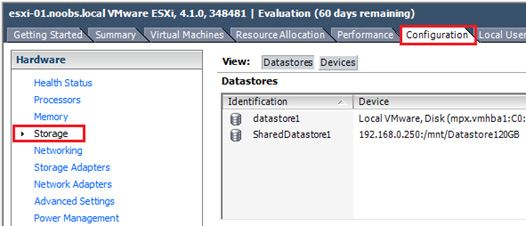

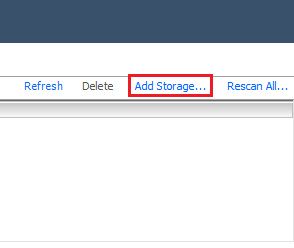

Once the management GUI appears, we’ll be able to start configuring the ESXi host, by adding our shared storage first. Navigate to the Storage Configuration area for your host and click the “Add Storage...” link near the top right of the GUI.

Figure 7: Adding storage to our first ESXi host

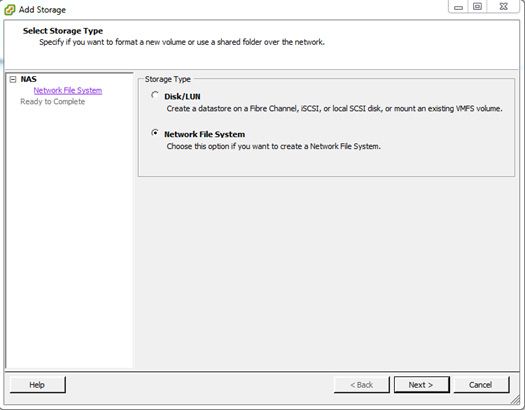

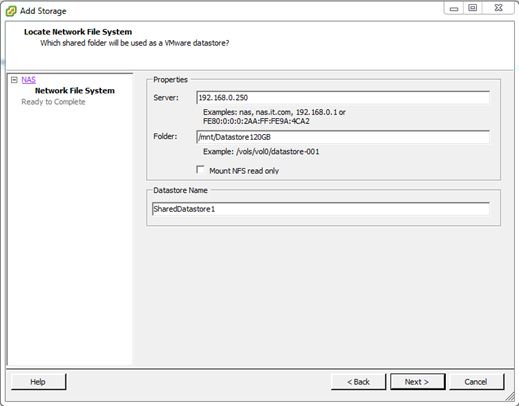

As we have configured an NFS share for our storage, we’ll choose the option for “Network File System”. On the next page, we’ll enter the details about our NFS share we want to connect to.

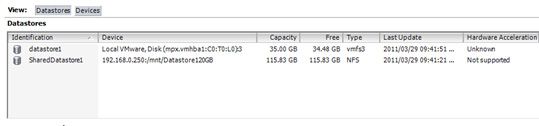

Click Next, review the summary page to ensure you are happy, then finish the wizard to complete adding your Shared Storage. Remember to keep the Datastore Names the same across all your ESXi hosts for consistency. Under Datastores in the vSphere client, you should now be able to see your shared storage and the host server will now be able to use this to access and run VMs from.
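A mismatch is easy to introduce on one host (a change of case is enough). Below is a small sketch for comparing the names. The per-host listing is hypothetical input that you would copy from each host’s Datastores view, and treating `datastore1` as the local, per-host store follows Figure 8.

```python
"""Spot shared-datastore names that are not identical on every ESXi host (a sketch)."""


def inconsistent_datastores(host_datastores, local_names=("datastore1",)):
    """Map each non-local datastore name to the sorted list of hosts that lack it."""
    all_hosts = sorted(host_datastores)
    names = set().union(*host_datastores.values()) - set(local_names)
    missing = {}
    for name in sorted(names):
        lacking = [h for h in all_hosts if name not in host_datastores[h]]
        if lacking:
            missing[name] = lacking
    return missing


hosts = {  # hypothetical listing
    "esxi01": {"datastore1", "SharedDatastore1"},
    "esxi02": {"datastore1", "shareddatastore1"},  # same share, different case
}
print(inconsistent_datastores(hosts))
```

Here the result is `{'SharedDatastore1': ['esxi02'], 'shareddatastore1': ['esxi01']}`, flagging that the two hosts use different spellings; an empty dictionary means the names match everywhere.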

Figure 8: datastore1 refers to the ESXi's local storage. SharedDatastore1 is our shared storage which we'll be using.

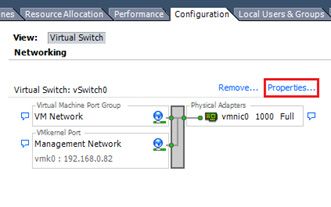

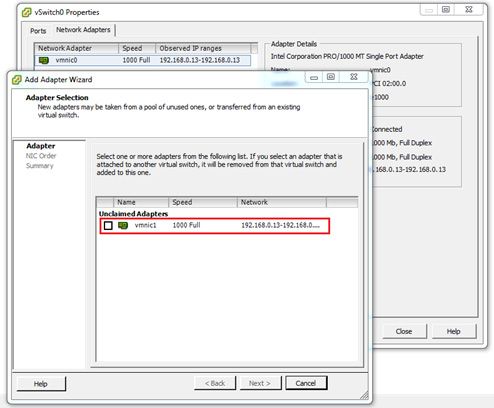

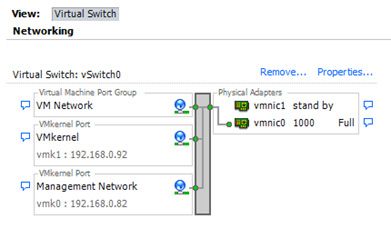

Next, we’ll do the network configuration. Start by clicking “Networking” under the Configuration area in the vSphere client. Click on “Properties” for “vSwitch0” and we’ll get our second NIC added and configured for “standby mode”. A vSwitch in VMware is a “virtual switch” – try to think of it as a “logical” switch as it is not much different to the real thing!

Figure 9: Open the vSwitch0 Properties to start configuring your host's networking.

Click the “Network Adapters” tab then select the “Add” button. A page will appear which should list your unclaimed virtual network adapter with the name “vmnic1”. (vmnic0 is already being used as our active NIC). Tick this adapter (vmnic1), and then click Next.

Figure 10: Claim vmnic1 to start using it for vSwitch0.

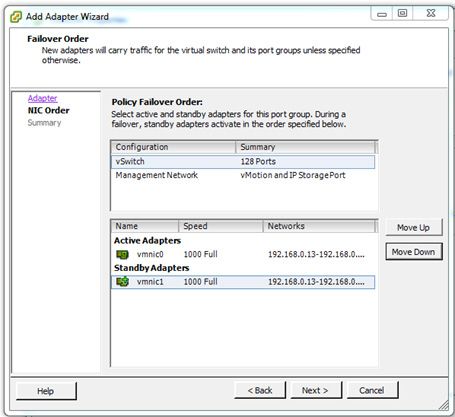

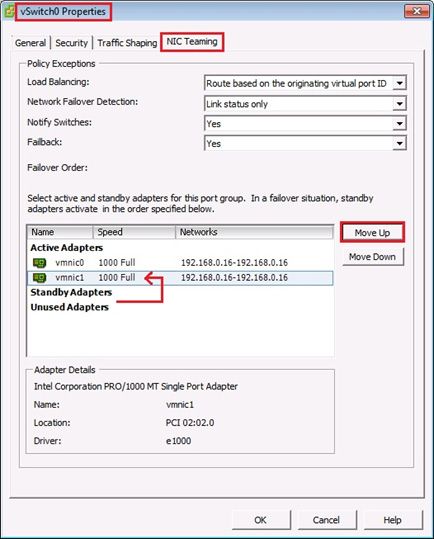

On the next page, we’ll move vmnic1 down to a Standby adapter. Highlight it, then click the “Move down” button and finish the wizard.

Figure 11: Assign vmnic1 as a Standby adapter.

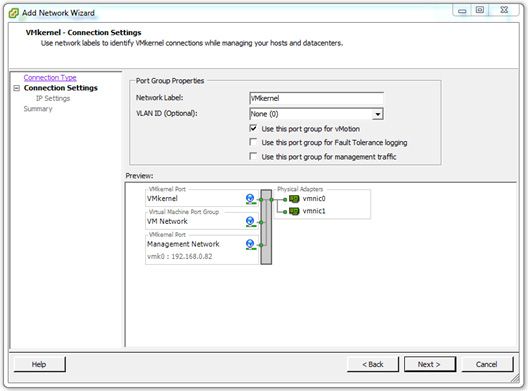

Now click on the “Ports” tab under “vSwitch0 Properties” and click “Add...”. We are now going to add a VMkernel port group that will carry our vMotion traffic, which DRS relies on to move running VMs between hosts. VMkernel port groups are used to connect to NFS / iSCSI storage, or for vMotion traffic between hosts when moving Virtual Machines around. Configure the page as per the screenshot below: give your VMkernel port an unused static IP address and subnet mask on the same network being used for all your other VMs, and keep your usual default gateway as the VMkernel default gateway. In my lab I am using my ADSL router as the default gateway for all my network traffic, so I used 192.168.0.1. Remember to tick the “Use this port group for vMotion” tickbox, as we’ll want to be able to use vMotion in our lab. Finish the wizard, which takes you back to the “vSwitch0 Properties” window.
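Each host’s VMkernel port needs its own unused address on the lab subnet. Below is a sketch for picking the next free address from the ones you already know about. It only consults the list you pass in and does not probe the network, so also keep clear of your router’s DHCP range, for example by starting the search above it. The start address in the example is an assumption.

```python
import ipaddress


def next_free_ip(subnet, in_use, start=None):
    """Return the first usable host address in `subnet`, at or after `start`,
    that is not listed in `in_use`."""
    net = ipaddress.ip_network(subnet)
    used = {ipaddress.ip_address(a) for a in in_use}
    first = ipaddress.ip_address(start) if start else None
    for addr in net.hosts():  # skips the network and broadcast addresses
        if first is not None and addr < first:
            continue
        if addr not in used:
            return str(addr)
    raise ValueError(f"no free address in {net}")


# Addresses used so far in this guide: router, two ESXi hosts, DC.
known = ["192.168.0.1", "192.168.0.80", "192.168.0.81", "192.168.0.200"]
print(next_free_ip("192.168.0.0/24", known, start="192.168.0.80"))
```

This prints 192.168.0.82; add that address to `known` before picking the second host’s VMkernel address, which would then come out as 192.168.0.83.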

Figure 12: Adding a VMkernel port group.

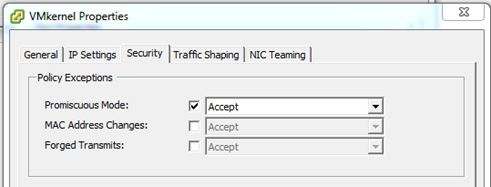

At the vSwitch0 Properties window, highlight your new VMkernel port group in the list and then click “Edit”. We’ll now configure a security policy on this port group to “Accept” for “Promiscuous mode”. Click the “Security” tab and configure as per the screenshot below. This will allow us to use vMotion in our “nested” ESXi host configuration.

Finally, click OK and then Close to complete our networking configuration for the ESXi host. Here is a summary of our vSwitch0 Virtual Switch configuration:

Another way of configuring your network adapters for vSwitch0, as Duncan Epping recommends, is to set both adapters as “Active” on vSwitch0 (provided your physical switch allows it). In our case it does, because we are “bridging” the network adapters to our physical network via the physical host PC’s network connection (i.e. VMware Workstation is essentially our physical switch). This configuration lets both NICs carry the types of traffic we have defined on our vSwitch, instead of one handling active traffic while the other sits in standby mode. It still maintains network redundancy. You can test this by running a VM on one of the hosts with both adapters in “Active” mode, setting the guest operating system in the VM to ping a location inside or outside your network, and then removing one of the active NICs from vSwitch0; you shouldn’t see any dropped packets.

To set one of your Standby NICs back to an Active NIC, configure your vSwitch0 as per this screenshot, using the “NIC Teaming” tab:

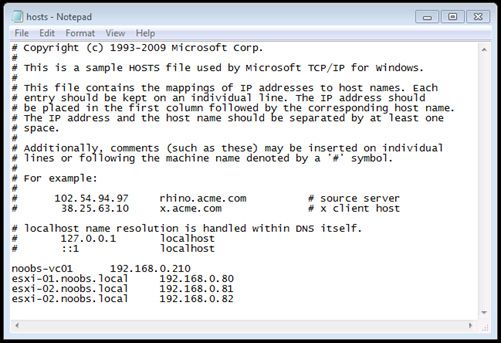

Setting up vCenter and our Cluster

Our final stage of configuration will now take place in vCenter, using the vSphere Client on our local host PC. Before we begin, though, let’s set up our PC’s hosts file with entries for our ESXi hosts and vCenter server. These entries let the vSphere client running on your physical host correctly identify the actual ESXi host servers. For example, when you view the consoles of your VMs in vCenter, your PC needs to know which host to open the VM console on, so it must be able to match each ESXi host server’s hostname with its correct IP address. Open the hosts file located at C:\Windows\System32\drivers\etc\hosts in Notepad (run Notepad as Administrator, as the file is protected) and edit it to point the FQDNs of each ESXi host to their correct IP addresses. Below is an example of the hosts file configuration on my Windows 7 PC, which is running the entire lab. Note that I added a simple entry for my vCenter server too (noobs-vc01) so that I can connect to this name using the vSphere client instead of the IP of my vCenter server.
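Hosts file entries use a simple layout: an IP address, whitespace, then one or more names. Below is a small sketch that prints lines in that layout. The ESXi FQDNs and the vCenter IP are hypothetical placeholders, while noobs-vc01 and the 192.168.0.80/.81 addresses come from this guide.

```python
"""Generate hosts-file lines for the lab (placeholders marked hypothetical)."""

LAB_HOSTS = [
    # (IP address, FQDN, optional short alias)
    ("192.168.0.80", "esxi01.noobs.local", "esxi01"),  # hypothetical names
    ("192.168.0.81", "esxi02.noobs.local", "esxi02"),  # hypothetical names
    ("192.168.0.90", "noobs-vc01.noobs.local", "noobs-vc01"),  # hypothetical IP
]


def hosts_lines(entries):
    """Format (ip, fqdn, alias) tuples as 'ip<TAB>fqdn [alias]' lines."""
    lines = []
    for ip, fqdn, alias in entries:
        names = f"{fqdn} {alias}" if alias else fqdn
        lines.append(f"{ip}\t{names}")
    return lines


print("\n".join(hosts_lines(LAB_HOSTS)))
```

Paste the printed lines at the end of the hosts file; the short alias lets you type just `noobs-vc01` in the vSphere client.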

Ensure that all your lab VMs are powered up, in this order: DC, FreeNAS, the two ESXi hosts, and vCenter. Use your vSphere Client to connect to your vCenter server by IP or hostname. You can log in with your domain administrator account, although best practise is of course to create normal domain users in Active Directory for vCenter administration. Accept the message stating you have 60 days left of your trial, and you should land on a welcome / summary area of the GUI once the login is complete.
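The power-on order follows from which VMs depend on which. The ESXi hosts need DNS and the NFS datastore, and vCenter needs the domain and the hosts it manages. Below is a sketch that derives the order with Python’s standard `graphlib` module (Python 3.9 or later). The dependency map is my reading of this guide, not something vSphere enforces.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# VM -> the VMs it depends on (an interpretation of this guide's setup).
DEPENDS_ON = {
    "DC": set(),                            # Active Directory and DNS
    "FreeNAS": set(),                       # NFS shared storage
    "ESXi-1": {"DC", "FreeNAS"},            # DNS names and the NFS datastore
    "ESXi-2": {"DC", "FreeNAS"},
    "vCenter": {"DC", "ESXi-1", "ESXi-2"},  # domain login, then the hosts
}

order = list(TopologicalSorter(DEPENDS_ON).static_order())
print(" -> ".join(order))
```

Any order the sorter returns places each VM after everything it depends on; the DC, FreeNAS, ESXi hosts, vCenter sequence above is one such order.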

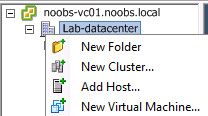

Before we can add any objects to the vCenter Server inventory, we need to create a datacenter object. This is, for all intents and purposes, a container object, and can often be thought of as the “root” of your vCenter environment. The items visible within the datacenter object depend on which Inventory view you have selected in the vSphere client. For example, “Hosts and Clusters” will show your Cluster objects, ESX/ESXi hosts and VMs under the datacenter object. Click on “Create a datacenter” under Basic Tasks to define a datacenter object and call it anything you like. I named mine “Lab-datacenter”.

Next up, we’ll want to create a cluster object for our ESXi hosts to be a part of. A cluster is a group of hosts (ESX or ESXi host servers) that are used for collective resource management. We’ll use this cluster to setup DRS and HA for our hosts as these features can only be enabled on clusters. Right-click on your datacenter object and choose the option for “New Cluster”.

Figure 13: Define a New Cluster under your Datacenter object.

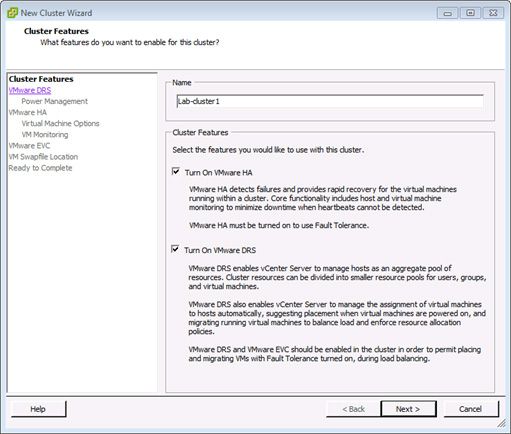

Now we can give our cluster a name. I chose “Lab-cluster1”. Tick the boxes to turn on VMware HA and DRS for this cluster, and then click Next.

Figure 14: Enable HA & DRS for your cluster.

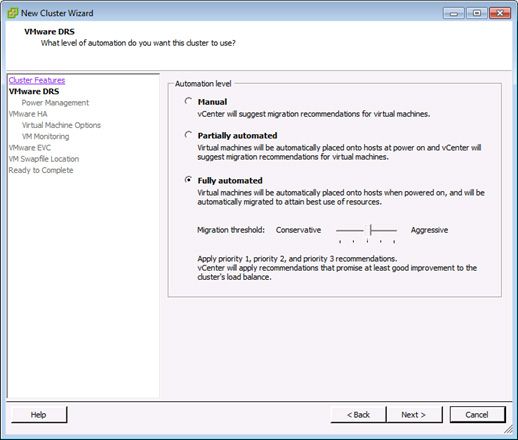

You can now choose your DRS Automation level. The cluster settings are quite easy to return to and change at a later stage if you would like to experiment with them (kind of what the lab is all about really!). So choose an automation level you would like for your VMs. The default is Fully Automated, which means DRS will make all the decisions for you when it comes to managing your host resources and deciding which VM runs on which host server.
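The three DRS automation levels differ in two decisions: where a VM is placed when it powers on, and whether load-balancing migrations happen automatically. The sketch below is only a reference model of that behaviour, not a vSphere API. In Fully Automated mode, how aggressively DRS migrates VMs also depends on the migration threshold setting.

```python
from enum import Enum


class DrsLevel(Enum):
    MANUAL = "Manual"
    PARTIALLY_AUTOMATED = "Partially Automated"
    FULLY_AUTOMATED = "Fully Automated"


def drs_behaviour(level):
    """Return (initial placement, load balancing) behaviour for a DRS level."""
    placement_auto = level is not DrsLevel.MANUAL
    migration_auto = level is DrsLevel.FULLY_AUTOMATED
    return (
        "automatic" if placement_auto else "recommendation only",
        "automatic vMotion" if migration_auto else "recommendation only",
    )


for level in DrsLevel:
    placement, balancing = drs_behaviour(level)
    print(f"{level.value:20} placement: {placement:20} balancing: {balancing}")
```

Manual and Partially Automated are useful in the lab for watching DRS recommendations before accepting them; Fully Automated lets you see migrations happen on their own.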

Figure 15: DRS Automation level settings.

The next option page in the New Cluster wizard is for DPM (Distributed Power Management). We won’t be covering this feature in our lab, so just leave it off, as it is by default.

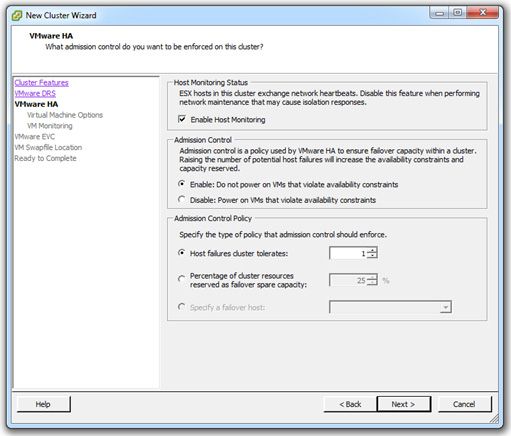

We’ll now set up VMware HA for our cluster on the next page. Host monitoring watches for host failures (hardware, network, etc.), and when a failure occurs it allows HA to restart all of the failed ESX or ESXi host’s VMs on another host server. Leave this enabled. (In a production environment, you should disable this option when performing network maintenance, as connectivity issues could trigger a host isolation response that could result in VMs being restarted for no reason at all!) Leave the Admission Control settings at their defaults.

The next page allows you to set some default cluster settings; leave these at their defaults too. On the following page you will see the options for VM Monitoring; leave this disabled.

Next up is EVC (Enhanced vMotion Compatibility). This is a very useful feature when you have a variety of physical hosts with slightly different CPU architectures: it keeps vMotion compatible across all hosts in your cluster by establishing a “baseline” CPU feature set. In our case, the virtualized ESXi hosts all run on one physical host machine, so their virtual CPUs are all of the same type and we can keep this feature disabled. Keep the recommended option of storing the VM swapfile with the Virtual Machine on the next options page, and then finish the wizard.
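With only two hosts, it is worth sanity-checking whether one host could actually carry every VM after the other fails. The sketch below is a deliberately simplified, memory-only N-1 check. It is not how HA admission control calculates capacity (that uses slot sizes derived from reservations), and it ignores CPU, ESXi’s own memory use and per-VM overhead. The host sizes come from this guide; the VM memory figures are hypothetical.

```python
def survives_one_host_failure(host_capacity_mb, vm_demand_mb):
    """Simplified N-1 check: can the remaining hosts hold every VM's memory
    if the largest host fails? Ignores CPU, hypervisor use and overhead."""
    if len(host_capacity_mb) < 2:
        return False  # nowhere to restart the VMs
    remaining = sum(host_capacity_mb) - max(host_capacity_mb)
    return sum(vm_demand_mb) <= remaining


hosts = [2560, 2560]  # the two nested ESXi hosts in this guide
print(survives_one_host_failure(hosts, [512, 512, 768]))     # 1792 MB of VMs
print(survives_one_host_failure(hosts, [1024, 1024, 1024]))  # 3072 MB of VMs
```

The first call prints `True` and the second `False`: three 1 GB VMs fit across two hosts but not on one, so after a failover some of them could not run well even if HA restarted them.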

Figure 16: VMware HA options to configure for the cluster.

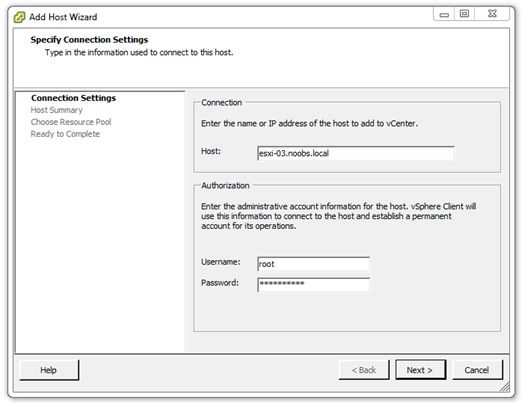

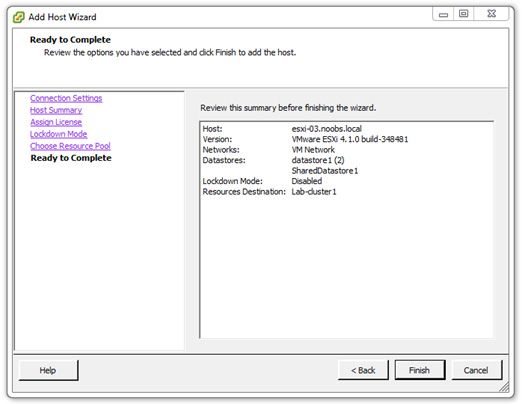

Remember those two ESXi host servers we configured earlier? Our cluster is now ready to have them added to it. Right-click the new cluster and choose the option to “Add Host”. Enter the details of your first ESXi host, including the root username and password you configured for the host earlier. You’ll get a Security Alert message about the certificate being untrusted; just click Yes to accept it, and it won’t bug you again. Run through the Add Host Wizard, leaving all the default options selected and opting for the Evaluation mode license. Your summary page should look similar to the screenshot below. Finish off the wizard, and vCenter will add your host to the cluster and configure the vCenter and HA agents on the host for you. Repeat this process for your second host server as well.

Figure 17: Add your ESXi hosts to the cluster by hostname (the FQDN). This is also a good test that your DNS is setup correctly.

Figure 18: vCenter configuring various agents on the host once added to the cluster.

Closing off and Summary

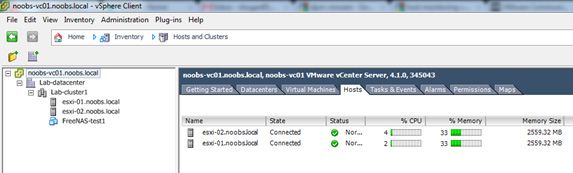

You should now have everything you need for your vSphere lab. You have two ESXi hosts in a cluster, with High Availability and DRS, linked to shared storage. Everything should be ready to run a few VMs now and to test vMotion / HA / DRS. Here is how my lab looks in the vSphere client after completing the setup.

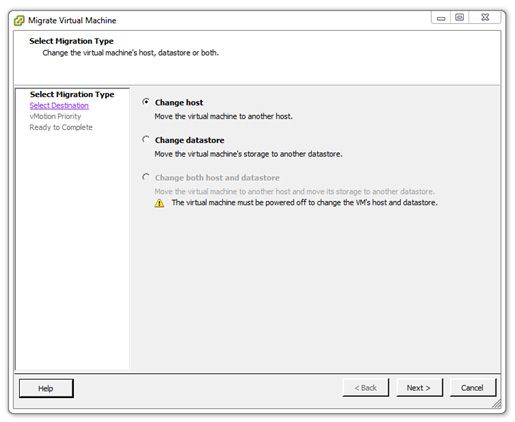

So here is the fun part: get a few VMs up and running on your cluster. Right-click on one of your hosts, select “New Virtual Machine” and use the wizard to create a few VMs with different operating systems. I created another FreeNAS VM from the ISO I had already downloaded, just to play around with. Start it up on your first ESXi host, open a console while it is running (right-click the VM -> Open Console), and then try migrating it between ESXi hosts. You can do this by right-clicking the VM, selecting “Migrate” and completing the migration wizard, choosing your second host as the target to migrate to.

Upon completing the wizard, your VM will live migrate (using vMotion) to your second host, staying powered up the whole time and continuing to run whatever services and applications are active on it. As a fun test, set the guest operating system in your test VM to ping a device on your outside network, for example a router, a switch or another PC on your network. While it is pinging this device, migrate it between hosts and see if you get any dropped packets. The worst I have seen is a slightly higher latency on one of the ICMP responses (and bear in mind this is on a low-performance lab setup!)
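If the guest is a Unix-like OS such as the FreeNAS test VM, you can save the ping output during the migration (for example with `ping -c 60 192.168.0.1 > ping.txt`) and count the gaps afterwards. Below is a sketch that parses the common `icmp_seq=N ... time=X ms` reply format. Sequence numbers start at 0 on FreeBSD-based systems and at 1 on Linux, hence the `first_seq` parameter. Windows ping output has no sequence numbers, so it is not handled. The same check also works for the NIC redundancy test described earlier.

```python
import re

# Matches replies such as "64 bytes from 192.168.0.1: icmp_seq=3 ttl=64 time=0.412 ms"
REPLY = re.compile(r"icmp_seq=(\d+).*?time=([\d.]+) ms")


def summarise_ping(output, sent, first_seq=1):
    """Return (lost sequence numbers, highest latency in ms) for `sent` pings."""
    seen, latencies = set(), []
    for line in output.splitlines():
        match = REPLY.search(line)
        if match:
            seen.add(int(match.group(1)))
            latencies.append(float(match.group(2)))
    expected = set(range(first_seq, first_seq + sent))
    return sorted(expected - seen), max(latencies, default=0.0)
```

For the FreeNAS example, `summarise_ping(open("ping.txt").read(), sent=60, first_seq=0)` returns the missing sequence numbers (ideally an empty list) and the worst reply time seen during the migration.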

You now have everything you need to test out some of the great features of vSphere and vCenter Server, all hosted from one physical PC / server! Create some more VMs, run some services / torture tests in the guest operating systems and watch how DRS handles your hosts and available resources. Do some reading and try out some of the other features that vSphere offers. You have 60 days to run your vCenter trial so make good use of it! If you ever need to try it out again after your trial expires, you’ll need a new vCenter server and trial license – just follow this guide again – keeping everything in Virtual Machines makes setup and provisioning a breeze and you can keep your entire vSphere lab on just one PC, laptop or server.