Enterprise Log Analysis: ELK Cluster Setup Guide

ELK Cluster Setup

Environment

| Hostname | Notes |
|---|---|
| web | Runs the Apache web service |
| node1 | ELK node 1; Kibana is installed on this node |
| node2 | ELK node 2 |

Set the hostname on every host (node1 is shown as the example)

[root@localhost ~]# hostnamectl set-hostname node1

Add hostname resolution entries on all hosts

[root@localhost ~]# vim /etc/hosts

192.168.218.6 node1

192.168.218.7 node2

192.168.218.142 web
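
Editing /etc/hosts by hand on three machines is easy to get wrong or to repeat. The sketch below shows one way to append the entries only when they are missing. The function name `add_host_entry` is hypothetical, and the addresses are simply the ones used in this guide.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears as a host name
# on a non-comment line, so running it twice leaves a single entry.
add_host_entry() {
    file=$1 ip=$2 name=$3
    if awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on each host):
#   add_host_entry /etc/hosts 192.168.218.6 node1
#   add_host_entry /etc/hosts 192.168.218.7 node2
#   add_host_entry /etc/hosts 192.168.218.142 web
```

Afterwards, `ping -c 1 node2` from node1 is a quick way to confirm that the name resolves.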

Stop the firewall and install Java on all hosts

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

yum install -y java-1.8.0

Deploying elasticsearch

Install elasticsearch on both node hosts

[root@node1 opt]# yum -y install elasticsearch-5.5.0.rpm

[root@node2 opt]# yum -y install elasticsearch-5.5.0.rpm

Edit the elasticsearch configuration file

# Back up the original first

[root@node1 opt]# cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

[root@node1 opt]# vim /etc/elasticsearch/elasticsearch.yml

# Cluster name

cluster.name: web-elk-cluster

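# Node name; change this to node2 on node2

node.name: node1

# Path where data is stored

path.data: /data/elk_data

# Path where logs are stored

path.logs: /var/log/elasticsearch/

# Do not lock memory at startup

bootstrap.memory_lock: false

# IP address to serve on; 0.0.0.0 means all local addresses

network.host: 0.0.0.0

# Listening port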

http.port: 9200
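
The settings listed above do not tell a node where to look for its peer. In Elasticsearch 5.x the setting normally used for this is `discovery.zen.ping.unicast.hosts`. The guide does not say whether the original environment set it, so treat the sketch below as an assumption to try if each node reports `number_of_nodes: 1`. The helper name `add_unicast_hosts` is hypothetical.

```shell
#!/bin/sh
# add_unicast_hosts FILE HOST [HOST...]
# Appends a discovery.zen.ping.unicast.hosts line listing the given hosts,
# unless the file already contains that setting.
add_unicast_hosts() {
    file=$1
    shift
    grep -q '^discovery\.zen\.ping\.unicast\.hosts:' "$file" 2>/dev/null && return 0
    list=
    for h in "$@"; do
        # Build a quoted, comma-separated YAML list: "node1", "node2"
        list="${list:+$list, }\"$h\""
    done
    printf 'discovery.zen.ping.unicast.hosts: [%s]\n' "$list" >> "$file"
}

# Example (run on both nodes, then restart elasticsearch):
#   add_unicast_hosts /etc/elasticsearch/elasticsearch.yml node1 node2
```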

To avoid mistakes, copy the configuration file from node1 directly to node2

[root@node1 opt]# scp /etc/elasticsearch/elasticsearch.yml root@node2:/etc/elasticsearch/elasticsearch.yml

Remember to change the node name in /etc/elasticsearch/elasticsearch.yml on node2 to node2
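
Because the copied file still says `node.name: node1`, forgetting this edit leaves two nodes with the same name. A minimal sketch of making the change non-interactively follows. The function name `set_node_name` is hypothetical, and it assumes the file already contains an uncommented `node.name:` line.

```shell
#!/bin/sh
# set_node_name FILE NAME
# Rewrites the existing "node.name:" line in FILE to use NAME.
# Other lines are left untouched.
set_node_name() {
    file=$1 name=$2
    tmp=$(mktemp) || return 1
    # Write to a temporary file first, then copy the content back so the
    # original file keeps its owner and permissions.
    sed "s/^node\.name:.*/node.name: $name/" "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return $rc
}

# Example (run on node2):
#   set_node_name /etc/elasticsearch/elasticsearch.yml node2
#   grep '^node.name' /etc/elasticsearch/elasticsearch.yml
```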

Create the data directory on node1 and node2 and give the elasticsearch user ownership

[root@node1 opt]# mkdir -p /data/elk_data

[root@node1 opt]# chown elasticsearch:elasticsearch /data/elk_data/

[root@node2 opt]# mkdir -p /data/elk_data

[root@node2 opt]# chown elasticsearch:elasticsearch /data/elk_data/

Start the service

[root@node1 opt]# systemctl daemon-reload

[root@node1 opt]# systemctl enable elasticsearch.service

[root@node1 opt]# systemctl start elasticsearch.service

[root@node2 opt]# systemctl daemon-reload

[root@node2 opt]# systemctl enable elasticsearch.service

[root@node2 opt]# systemctl start elasticsearch.service

Check that the port is listening; if there is no output, wait a moment and check again

netstat -antp |grep 9200
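
Elasticsearch can take a while to open port 9200 after `systemctl start` returns. Instead of re-running the check by hand, a small polling loop can wait for it. This is only a sketch: `wait_until` and `port_listening` are hypothetical helper names, and the timeout values are arbitrary.

```shell
#!/bin/sh
# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Runs COMMAND repeatedly until it succeeds. Gives up and returns 1 once
# roughly TIMEOUT_SECONDS have been spent sleeping between attempts.
wait_until() {
    timeout=$1 interval=$2
    shift 2
    waited=0
    until "$@"; do
        [ "$waited" -ge "$timeout" ] && return 1
        sleep "$interval"
        waited=$((waited + interval))
    done
    return 0
}

# port_listening PORT
# Succeeds when netstat reports a TCP listener on PORT.
port_listening() {
    netstat -ant 2>/dev/null | grep LISTEN | grep -q "[:.]$1 "
}

# Example:
#   wait_until 60 2 port_listening 9200 && echo "elasticsearch is listening"
```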

Once the service is up, check the cluster health with a browser or with curl

[root@node1 opt]# curl node1:9200/_cluster/health?pretty

{

"cluster_name" : "web-elk-cluster",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 2,

"number_of_data_nodes" : 2,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

[root@node1 opt]# curl node2:9200/_cluster/health?pretty

{

"cluster_name" : "web-elk-cluster",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 2,

"number_of_data_nodes" : 2,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
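
In this output, `status` and `number_of_nodes` are the two fields worth checking: green means all primary and replica shards are allocated, yellow means some replicas are not, and red means at least one primary shard is unallocated. The sketch below pulls those two fields out of the response with sed so that a script can test them. The function names are hypothetical.

```shell
#!/bin/sh
# health_status: reads a _cluster/health response on stdin and prints
# the value of "status" (green, yellow or red).
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# health_nodes: reads the same response and prints "number_of_nodes".
health_nodes() {
    sed -n 's/.*"number_of_nodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example:
#   curl -s 'node1:9200/_cluster/health?pretty' | health_status
#   curl -s 'node1:9200/_cluster/health?pretty' | health_nodes
```

For this two-node setup, the expected values are green and 2.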

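Deploying elasticsearch-head

The elasticsearch-head plugin gives a more intuitive view of the cluster's state and makes it easier to manage

Deploying Node.js

Build and install Node.js on both ELK nodes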

[root@node1 opt]# tar -xf node-v8.2.1.tar.gz

[root@node1 opt]# cd node-v8.2.1/

[root@node1 node-v8.2.1]# ./configure

[root@node1 node-v8.2.1]# make -j4

[root@node1 node-v8.2.1]# make install

[root@node2 opt]# tar -xf node-v8.2.1.tar.gz

[root@node2 opt]# cd node-v8.2.1/

[root@node2 node-v8.2.1]# ./configure

[root@node2 node-v8.2.1]# make -j4

[root@node2 node-v8.2.1]# make install

Install phantomjs

[root@node1 opt]# tar -xf phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 opt]# mv phantomjs-2.1.1-linux-x86_64 /usr/local/src/

[root@node1 opt]# cp /usr/local/src/phantomjs-2.1.1-linux-x86_64/bin/phantomjs /usr/local/bin/

[root@node2 opt]# tar -xf phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node2 opt]# mv phantomjs-2.1.1-linux-x86_64 /usr/local/src/

[root@node2 opt]# cp /usr/local/src/phantomjs-2.1.1-linux-x86_64/bin/phantomjs /usr/local/bin/

Install elasticsearch-head

[root@node1 opt]# tar -xf elasticsearch-head.tar.gz

[root@node1 opt]# mv elasticsearch-head /usr/local/src/

[root@node1 opt]# cd /usr/local/src/elasticsearch-head/

[root@node1 elasticsearch-head]# npm install

[root@node2 opt]# tar -xf elasticsearch-head.tar.gz

[root@node2 opt]# mv elasticsearch-head /usr/local/src/

[root@node2 opt]# cd /usr/local/src/elasticsearch-head/

[root@node2 elasticsearch-head]# npm install

Edit the elasticsearch configuration file, restart the service, then start elasticsearch-head

[root@node1 elasticsearch-head]# vim /etc/elasticsearch/elasticsearch.yml

# Add the following

# Enable cross-origin (CORS) access

http.cors.enabled: true

# Origins allowed for cross-origin access

http.cors.allow-origin: "*"

[root@node1 elasticsearch-head]# systemctl restart elasticsearch

[root@node2 elasticsearch-head]# vim /etc/elasticsearch/elasticsearch.yml

http.cors.enabled: true

http.cors.allow-origin: "*"

[root@node2 elasticsearch-head]# systemctl restart elasticsearch

[root@node1 elasticsearch-head]# npm run start &

[root@node2 elasticsearch-head]# npm run start &

# Check that elasticsearch-head is running

netstat -ntap |grep 9100

Back on the node hosts, create an index

[root@node1 elasticsearch-head]# curl -XPUT 'localhost:9200/index-demo/test/1?pretty' -H 'Content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

{

"_index" : "index-demo",

"_type" : "test",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"created" : true

}

[root@node2 elasticsearch-head]# curl -XPUT 'localhost:9200/index-demo/test/1?pretty' -H 'Content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

{

"_index" : "index-demo",

"_type" : "test",

"_id" : "1",

"_version" : 2,

"result" : "updated",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"created" : false

}

Open http://node-ip:9100/ in a browser

Installing logstash

On the web server, install the logstash-5.5.1.rpm package

[root@web opt]# yum -y install logstash-5.5.1.rpm

[root@web opt]# systemctl start logstash

[root@web opt]# systemctl enable logstash

[root@web opt]# systemctl status logstash

[root@web opt]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

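Connecting to elasticsearch

Test with the logstash command

Options:

-f specifies a configuration file

-e is followed by a string that is used as the logstash configuration (if the string is empty, "", stdin is used as input and stdout as output by default)

-t tests whether the configuration file is valid, then exits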
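
The `-t` option is useful before restarting the service, because a syntax error in any file under conf.d stops the pipeline from starting. The sketch below runs `-t` against each .conf file in a directory and reports the result. `check_configs` and the `LOGSTASH_BIN` variable are hypothetical names, and the default assumes `logstash` is on the PATH.

```shell
#!/bin/sh
# Path or name of the logstash executable; override to point elsewhere.
LOGSTASH_BIN=${LOGSTASH_BIN:-logstash}

# check_configs DIR
# Runs "logstash -t -f FILE" for every *.conf file in DIR and prints
# OK or FAIL per file. Returns non-zero if any file fails the test.
check_configs() {
    dir=$1 failed=0
    for conf in "$dir"/*.conf; do
        [ -e "$conf" ] || continue   # no matching files in DIR
        if "$LOGSTASH_BIN" -t -f "$conf"; then
            echo "OK   $conf"
        else
            echo "FAIL $conf"
            failed=1
        fi
    done
    return "$failed"
}

# Example:
#   check_configs /etc/logstash/conf.d && systemctl restart logstash
```

Each invocation starts a JVM, so expect a noticeable delay per file.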

[root@web opt]# logstash -e 'input { stdin{} } output { stdout{} }'

...some output omitted...

# The following message indicates that Logstash started successfully

21:45:06.584 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

# Waiting for input

www.sina.com

2020-04-05T13:47:34.138Z web www.sina.com

www.aliyun.com

2020-04-05T13:47:49.727Z web www.aliyun.com

# Press CTRL + C to exit

^C21:47:52.599 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

21:47:52.629 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

Use the rubydebug codec to show detailed output

[root@web opt]# logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug } }'

...some output omitted...

21:49:09.775 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

# Waiting for input

www.sina.com

{

"@timestamp" => 2020-04-05T13:50:11.001Z,

"@version" => "1",

"host" => "web",

"message" => "www.sina.com"

}

# Test passed; press CTRL + C to exit

^C21:50:18.422 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

21:50:18.429 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

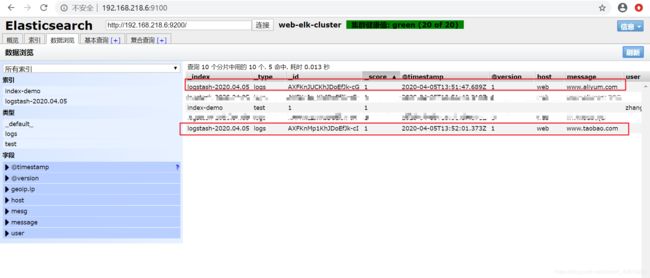

Use logstash to write data into elasticsearch

# Use node1's IP address

[root@web opt]# logstash -e 'input { stdin{} } output { elasticsearch { hosts=>["192.168.218.6:9200"] } }'

...some output omitted...

21:51:29.580 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.aliyum.com

www.taobao.com

# Press CTRL + C to exit

^C21:52:05.812 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

21:52:05.823 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

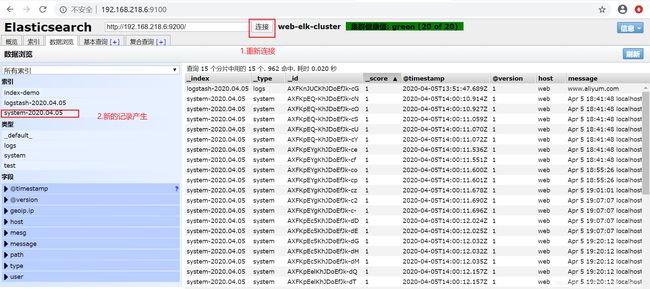

Configure log collection on the web host

[root@web opt]# chmod o+r /var/log/messages

[root@web opt]# vim /etc/logstash/conf.d/system.conf

# Write the following

input {

file{

path => "/var/log/messages"

type => "system"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["192.168.218.6:9200"] # replace with your node's IP

index => "system-%{+YYYY.MM.dd}"

}

}

[root@web opt]# systemctl restart logstash
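
The `index => "system-%{+YYYY.MM.dd}"` setting makes Logstash write to one index per day, named from each event's `@timestamp`, which is in UTC. With the file input and no date filter, that timestamp is the time the line was read, not the time written in the log line. The sketch below shows the resulting name for a given date. `daily_index` is a hypothetical helper for illustration only.

```shell
#!/bin/sh
# daily_index PREFIX DATE
# Prints the index name that the pattern PREFIX-%{+YYYY.MM.dd} yields
# for DATE, where DATE is given as YYYY-MM-DD (UTC).
daily_index() {
    printf '%s-%s\n' "$1" "$(printf '%s' "$2" | sed 's/-/./g')"
}

# Example:
#   daily_index system "$(date -u +%Y-%m-%d)"
#   curl -s 'node1:9200/_cat/indices/system-*?v'
```

The second example command lists the `system-*` indices that actually exist on the cluster, which is a quick check that events are arriving.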

Open node1 in a browser

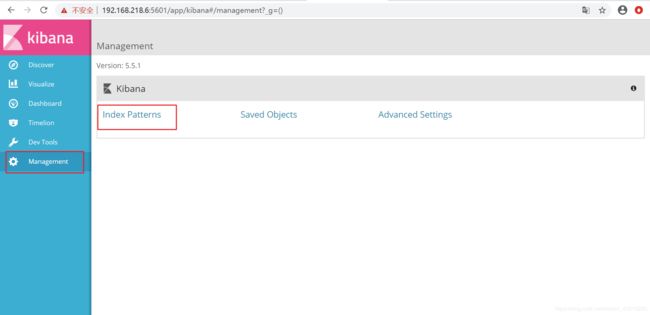

Installing kibana

Install it on node1

[root@node1 opt]# yum -y install kibana-5.5.1-x86_64.rpm

[root@node1 opt]# cd /etc/kibana/

[root@node1 kibana]# cp kibana.yml kibana.yml.bak

[root@node1 kibana]# vim kibana.yml

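# Uncomment or edit the following

# Listening port

server.port: 5601

# Listening address

server.host: "0.0.0.0"

# Elasticsearch endpoint to connect to

elasticsearch.url: "http://node1:9200"

# Index that Kibana uses to store its own data (.kibana)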

kibana.index: ".kibana"

[root@node1 kibana]# systemctl start kibana

[root@node1 kibana]# systemctl enable kibana

Collecting the Apache logs

[root@web opt]# cd /etc/logstash/conf.d/

[root@web conf.d]# ls

system.conf

[root@web conf.d]# vim apache_log.conf

# Replace the IP address below with node1's IP address

input {

file{

path => "/etc/httpd/logs/access_log"

type => "access"

start_position => "beginning"

}

file{

path => "/etc/httpd/logs/error_log"

type => "error"

start_position => "beginning"

}

}

output {

if [type] == "access" {

elasticsearch {

hosts => ["192.168.218.6:9200"]

index => "apache_access-%{+YYYY.MM.dd}"

}

}

if [type] == "error" {

elasticsearch {

hosts => ["192.168.218.6:9200"]

index => "apache_error-%{+YYYY.MM.dd}"

}

}

}

# Run logstash with this configuration file to test it

[root@web conf.d]# logstash -f apache_log.conf

...output omitted...

22:23:23.347 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9601}

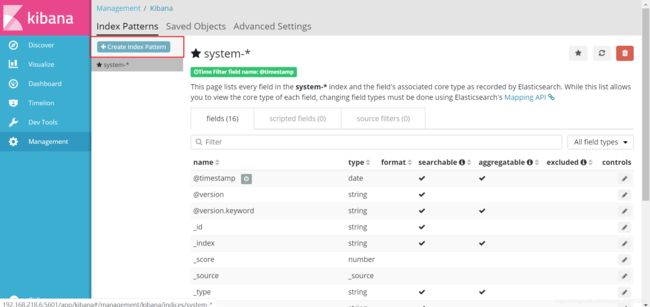

Open http://node1-ip:9100 to see the new indices

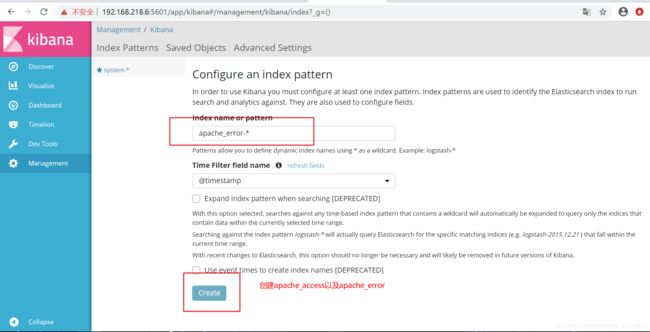

Open kibana and create index patterns for the httpd access and error indices