JevonWei

blog: http://119.23.52.191/

Hands-on: an Elasticsearch cluster with a Filebeat server and a Logstash server

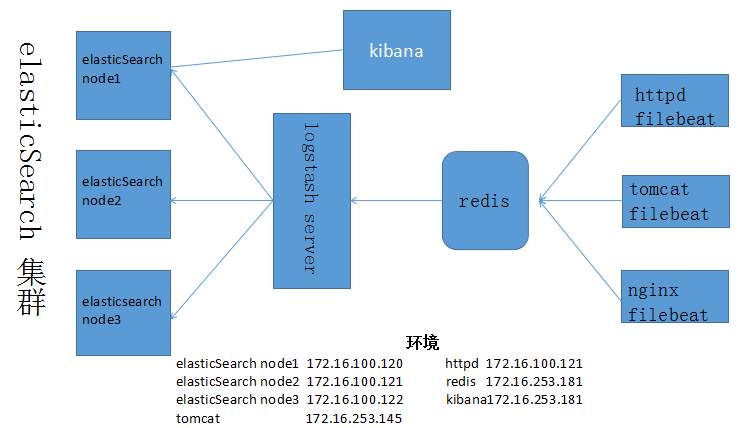

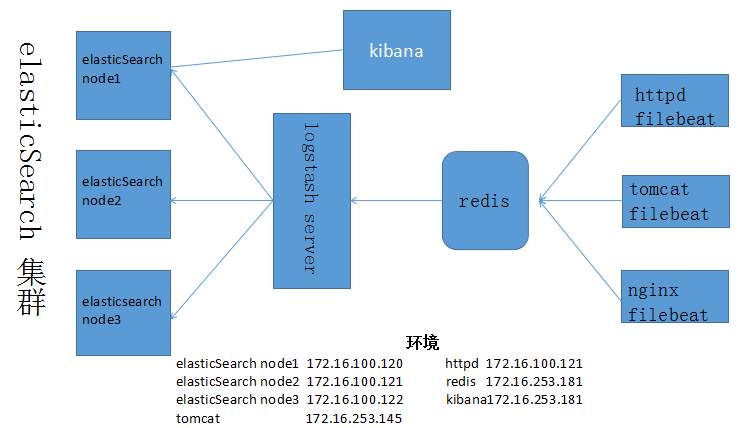

Environment

Elasticsearch cluster nodes: 172.16.100.120:9200, 172.16.100.121:9200, 172.16.100.122:9200

Logstash server: 172.16.100.121

Filebeat server: 172.16.100.121

httpd service (produces the log data): 172.16.100.121

Redis server: 172.16.253.181

Kibana server: 172.16.253.181

Tomcat server: 172.16.253.145

Network topology

The Elasticsearch cluster is set up as shown above; the build steps are omitted here.

filebeat server

Download the Filebeat package

https://www.elastic.co/downloads/beats/filebeat

[root@node2 ~]# ls filebeat-5.5.1-x86_64.rpm

filebeat-5.5.1-x86_64.rpm

Install Filebeat

[root@node2 ~]# yum -y install filebeat-5.5.1-x86_64.rpm

[root@node2 ~]# rpm -ql filebeat

Edit filebeat.yml

[root@node2 ~]# vim /etc/filebeat/filebeat.yml

filebeat.prospectors:

- input_type: log

  paths:

    - /var/log/httpd/access_log*    # read every file whose name starts with access_log

output.logstash:    # send the data to Logstash

  # The Logstash hosts

  hosts: ["172.16.100.121:5044"]    # the Logstash server
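The paths entry is a glob, so access_log* also picks up rotated files such as access_log-YYYYMMDD, but not error_log. A small Python illustration using fnmatch; Filebeat itself uses Go's glob matching, which treats a plain * inside a file name the same way, and the file names below are hypothetical:

```python
from fnmatch import fnmatch

# File-name part of the prospector's paths: entry
PATTERN = "access_log*"

def harvested(filenames, pattern=PATTERN):
    """Return the file names the glob would select (illustration only)."""
    return [name for name in filenames if fnmatch(name, pattern)]

# Hypothetical contents of /var/log/httpd after a log rotation
files = ["access_log", "access_log-20171008", "error_log", "ssl_access_log"]
print(harvested(files))  # ['access_log', 'access_log-20171008']
```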

Start the service

[root@node2 ~]# systemctl start filebeat

logstash server

Install the Java environment

[root@node2 ~]# yum -y install java-1.8.0-openjdk-devel

Download Logstash

https://www.elastic.co/downloads/logstash

Install Logstash

[root@node2 ~]# ll logstash-5.5.1.rpm

-rw-r--r--. 1 root root 94158545 Aug 21 17:06 logstash-5.5.1.rpm

[root@node2 ~]# rpm -ivh logstash-5.5.1.rpm

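Edit the Logstash configuration files

[root@node2 ~]# vim /etc/logstash/logstash.yml

path.data: /var/lib/logstash    # where Logstash stores its data

path.config: /etc/logstash/conf.d    # directory the pipeline configuration files are read from

path.logs: /var/log/logstash    # where Logstash writes its own logs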

[root@node2 ~]# vim /etc/logstash/jvm.options

Set the initial (-Xms) and maximum (-Xmx) JVM heap size:

-Xms256m

-Xmx1g
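The JVM refuses to start when the initial heap (-Xms) is larger than the maximum heap (-Xmx). A small Python sketch that converts the two options to bytes and checks that rule; heap_bytes and check_heap are hypothetical helpers that only handle the common k, m and g suffixes:

```python
import re

# Multipliers for the k/m/g size suffixes (case-insensitive)
UNITS = {"": 1, "k": 1024, "m": 1024**2, "g": 1024**3}

def heap_bytes(option):
    """Convert an option such as '-Xms256m' or '-Xmx1g' to a byte count."""
    match = re.fullmatch(r"-Xm[sx](\d+)([kKmMgG]?)", option.strip())
    if not match:
        raise ValueError(f"not a heap size option: {option!r}")
    number, unit = match.groups()
    return int(number) * UNITS[unit.lower()]

def check_heap(options):
    """Return (initial, maximum) in bytes; fail if -Xms exceeds -Xmx."""
    sizes = {opt.strip()[:4]: heap_bytes(opt) for opt in options}
    initial, maximum = sizes["-Xms"], sizes["-Xmx"]
    if initial > maximum:
        raise ValueError("-Xms must not be larger than -Xmx")
    return initial, maximum

print(check_heap(["-Xms256m", "-Xmx1g"]))  # (268435456, 1073741824)
```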

Edit /etc/logstash/conf.d/test.conf

[root@node2 ~]# vim /etc/logstash/conf.d/test.conf

input {

beats {

port => 5044

}

}

filter {

grok {

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

remove_field => "message"    # drop the raw message field once grok has parsed it successfully

}

}

output {

elasticsearch {

hosts => ["http://172.16.100.120:9200","http://172.16.100.121:9200","http://172.16.100.122:9200"]

index => "logstash-%{+YYYY.MM.dd}"

action => "index"

}

}
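grok's COMBINEDAPACHELOG is built from named sub-patterns (IPORHOST, HTTPDATE, QS and so on). The regex below is only a simplified Python approximation of it, not the real pattern, meant to show which fields the filter adds to each event. Like grok's QS, it keeps the surrounding quotes in referrer and agent. Note that remove_field only applies when the match succeeds; on failure grok keeps message and adds the _grokparsefailure tag.

```python
import re

# Simplified stand-in for grok's COMBINEDAPACHELOG; field names follow grok's
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)

def parse_combined(line):
    """Return the named fields as a dict, or None when the line does not match."""
    match = COMBINED.match(line)
    return match.groupdict() if match else None

# One of the access log lines used later in this article
sample = ('72.16.100.120 - - [11/Oct/2017:22:32:21 -0400] '
          '"GET / HTTP/1.1" 200 10 "-" "curl/7.29.0"')
fields = parse_combined(sample)
print(fields["clientip"], fields["verb"], fields["response"], fields["agent"])
# 72.16.100.120 GET 200 "curl/7.29.0"
```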

Test the syntax of /etc/logstash/conf.d/test.conf

[root@node2 ~]# /usr/share/logstash/bin/logstash -t -f /etc/logstash/conf.d/test.conf

Run Logstash with /etc/logstash/conf.d/test.conf

[root@node2 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

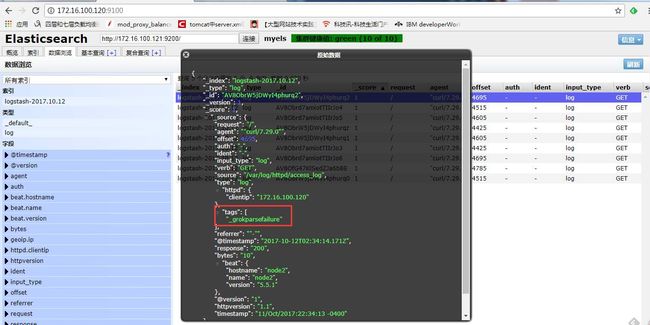

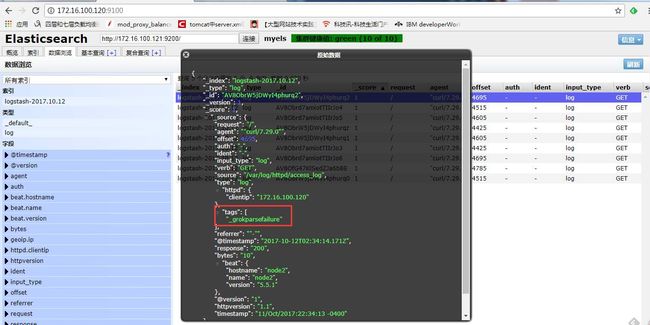
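The index option in test.conf builds a daily index name from the event's @timestamp. As far as I know, Logstash renders %{+YYYY.MM.dd} in UTC, and without a date filter @timestamp is the time the event was read, not the time written inside the log line. A minimal sketch of that naming rule, assuming UTC formatting (daily_index is a hypothetical helper):

```python
from datetime import datetime, timedelta, timezone

def daily_index(timestamp, prefix="logstash"):
    """Mimic index => "prefix-%{+YYYY.MM.dd}": format the timestamp in UTC."""
    return f"{prefix}-{timestamp.astimezone(timezone.utc):%Y.%m.%d}"

# An event stamped late in the evening at UTC-4 already belongs to the next UTC day
received = datetime(2017, 10, 11, 22, 32, 21, tzinfo=timezone(timedelta(hours=-4)))
print(daily_index(received))                     # logstash-2017.10.12
print(daily_index(received, "logstash-tomcat"))  # logstash-tomcat-2017.10.12
```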

From a client, check whether the index has been created

[root@node5 ~]# curl -XGET '172.16.100.120:9200/_cat/indices'

green open logstash-2017.10.12 baieaGWpSN-BA28dAlqxhA 5 1 27 0 186.7kb 93.3kb
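Without ?v, the _cat/indices output has no header row; the columns are health, status, index, uuid, pri, rep, docs.count, docs.deleted, store.size and pri.store.size. In the line above, the index has 5 primary shards with 1 replica each and 27 documents, and store.size (186.7kb) is about twice pri.store.size (93.3kb) because of the replica. A small Python sketch that turns such output into dicts (parse_cat_indices is a hypothetical helper; Elasticsearch 5.x can also return JSON with ?format=json, which avoids positional parsing):

```python
# Default column order of GET _cat/indices (the header is only printed with ?v)
COLUMNS = ["health", "status", "index", "uuid", "pri", "rep",
           "docs.count", "docs.deleted", "store.size", "pri.store.size"]

def parse_cat_indices(text):
    """Turn plain-text _cat/indices output into a list of dicts.

    Rows with a different number of columns (e.g. closed indices) are skipped.
    """
    rows = []
    for line in text.splitlines():
        values = line.split()
        if len(values) == len(COLUMNS):
            rows.append(dict(zip(COLUMNS, values)))
    return rows

output = "green open logstash-2017.10.12 baieaGWpSN-BA28dAlqxhA 5 1 27 0 186.7kb 93.3kb"
for row in parse_cat_indices(output):
    print(row["index"], row["health"], row["docs.count"])
# logstash-2017.10.12 green 27
```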

Reading the collected data from Redis with the redis input plugin

Redis

[root@node4 ~]# yum -y install redis

[root@node4 ~]# vim /etc/redis.conf

Listen on all addresses and set the password to danran:

bind 0.0.0.0

requirepass danran

[root@node4 ~]# systemctl restart redis

Connect to Redis to check that it works

[root@node4 ~]# redis-cli -h 172.16.253.181 -a danran

172.16.253.181:6379>

Configure Logstash on the Logstash server

[root@node2 ~]# vim /etc/logstash/conf.d/redis-input.conf

input {

redis {

host => "172.16.253.181"

port => "6379"

password => "danran"

db => "0"

data_type => "list"    # read from a Redis list

key => "filebeat"    # list key; must match the key set in filebeat.yml

}

}

filter {

grok {

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

remove_field => "message"

}

mutate {

rename => {"clientip" => "[httpd][clientip]" }

}

}

output {

elasticsearch {

hosts => ["http://172.16.100.120:9200","http://172.16.100.121:9200","http://172.16.100.122:9200"]

index => "logstash-%{+YYYY.MM.dd}"

action => "index"

}

}
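With data_type => "list", the redis input pops events from the head of the list (the plugin documentation describes this as BLPOP), while Filebeat's Redis output, as I understand its default list datatype, appends events under the same key with RPUSH. The list therefore works as a FIFO buffer, and each event is handed to exactly one consumer. The in-memory model below illustrates that ordering; ListQueue is a hypothetical class, not a Redis client:

```python
from collections import deque

class ListQueue:
    """In-memory model of one Redis list key (not a Redis client)."""

    def __init__(self):
        self.items = deque()

    def rpush(self, value):
        # Producer side: Filebeat appends each event to the tail of the list
        self.items.append(value)
        return len(self.items)

    def lpop(self):
        # Consumer side: Logstash takes events from the head
        # (a real BLPOP would block instead of returning None when empty)
        return self.items.popleft() if self.items else None

queue = {"filebeat": ListQueue()}  # key shared by filebeat.yml and redis-input.conf
for line in ["event 1", "event 2", "event 3"]:
    queue["filebeat"].rpush(line)
print([queue["filebeat"].lpop() for _ in range(4)])
# ['event 1', 'event 2', 'event 3', None]
```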

Restart Logstash

[root@node2 ~]# systemctl restart logstash

Configure Filebeat to send its data to Redis

[root@node2 ~]# vim /etc/filebeat/filebeat.yml

filebeat.prospectors:

- input_type: log

  paths:

    - /var/log/httpd/access_log*    # read every file whose name starts with access_log

#----------------------- Redis output ---------------------------

output.redis:

  hosts: ["172.16.253.181"]    # Redis server

  port: 6379

  password: "danran"    # Redis password

  key: "filebeat"    # list key; must match the key in the input section of redis-input.conf

  db: 0    # write to database 0

  timeout: 5
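Events only reach Logstash if filebeat.yml and redis-input.conf use the same Redis key. A rough Python check, assuming both configuration snippets are available as text. The helper names are hypothetical, and the regexes only handle simple one-line key settings like the ones in this article; they are not real YAML or Logstash config parsers.

```python
import re

def logstash_redis_key(conf_text):
    """Extract the value of key => "..." from a Logstash redis input."""
    match = re.search(r'\bkey\s*=>\s*"([^"]+)"', conf_text)
    return match.group(1) if match else None

def filebeat_redis_key(yaml_text):
    """Extract key: ... from filebeat.yml (simple regex, not a YAML parser)."""
    match = re.search(r'^[ \t]*key:[ \t]*"?([^"\s#]+)"?', yaml_text, re.MULTILINE)
    return match.group(1) if match else None

logstash_conf = 'input { redis { data_type => "list" key => "filebeat" } }'
filebeat_yml = 'output.redis:\n  hosts: ["172.16.253.181"]\n  key: "filebeat"\n  db: 0\n'
same = logstash_redis_key(logstash_conf) == filebeat_redis_key(filebeat_yml)
print("keys match" if same else "keys differ")  # keys match
```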

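Restart Filebeat

[root@node2 ~]# systemctl restart filebeat

Access the httpd service from a client

[root@node1 ~]# curl 172.16.100.121

test page

Log in to Redis and check whether the data has been collected

[root@node4 ~]# redis-cli -h 172.16.253.181 -a danran

Check whether the Elasticsearch cluster has received the data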

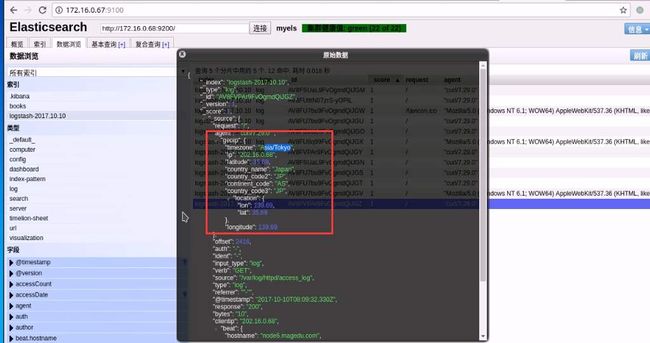

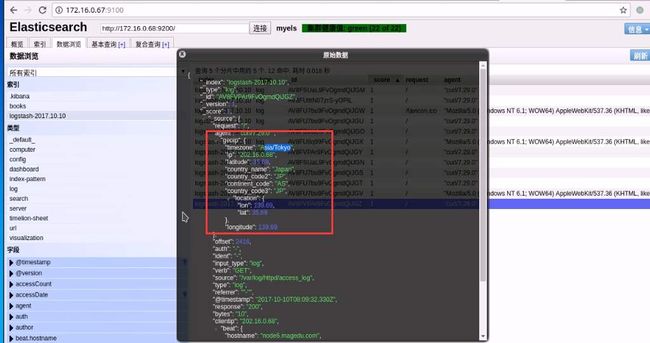

Enabling the geoip plugin

Download and unpack the GeoLite2 City database

[root@node2 ~]# ll GeoLite2-City.tar.gz

-rw-r--r--. 1 root root 25511308 Aug 21 05:06 GeoLite2-City.tar.gz

[root@node2 ~]# tar xf GeoLite2-City.tar.gz

[root@node2 ~]# cd GeoLite2-City_20170704/

[root@node2 GeoLite2-City_20170704]# mv GeoLite2-City.mmdb /etc/logstash/

Configure Logstash

[root@node2 ~]# vim /etc/logstash/conf.d/geoip.conf

input {

redis {

host => "172.16.253.181"

port => "6379"

password => "danran"

db => "0"

data_type => "list"    # read from a Redis list

key => "filebeat"    # list key; must match the key set in filebeat.yml

}

}

filter {

grok {

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

remove_field => "message"

}

geoip {

source => "clientip"    # field holding the client IP address to look up

target => "geoip"

database => "/etc/logstash/GeoLite2-City.mmdb"    # GeoIP database file

}

}

output {

elasticsearch {

hosts => ["http://172.16.100.120:9200","http://172.16.100.121:9200","http://172.16.100.122:9200"]

index => "logstash-%{+YYYY.MM.dd}"

action => "index"

}

}

Test the syntax of geoip.conf

[root@node2 ~]# /usr/share/logstash/bin/logstash -t -f /etc/logstash/conf.d/geoip.conf

Start Logstash with this configuration file

[root@node2 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/geoip.conf

Access httpd from a client

[root@node1 ~]# curl 172.16.100.121

test page

Check the data in elasticsearch-head, where the region can be looked up from the IP address

Simulate two access log entries from external addresses

[root@node2 ~]# echo '72.16.100.120 - - [11/Oct/2017:22:32:21 -0400] "GET / HTTP/1.1" 200 10 "-" "curl/7.29.0"' >> /var/log/httpd/access_log

[root@node2 ~]# echo '22.16.100.120 - - [11/Oct/2017:22:32:21 -0400] "GET / HTTP/1.1" 200 10 "-" "curl/7.29.0"' >> /var/log/httpd/access_log
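The test client 172.16.100.x sits in the RFC 1918 private range 172.16.0.0/12, which the GeoLite2 database has no location for, so the geoip filter cannot attach a region to those events (failed lookups get the _geoip_lookup_failure tag by default). That is why the two entries above use public addresses. A quick check with Python's ipaddress module; geoip_candidate is a hypothetical helper, and the filter itself simply queries the database:

```python
import ipaddress

def geoip_candidate(ip):
    """True if the address is not in a private range, i.e. worth a GeoIP lookup."""
    return not ipaddress.ip_address(ip).is_private

for ip in ["172.16.100.120", "72.16.100.120", "22.16.100.120"]:
    print(ip, geoip_candidate(ip))
# 172.16.100.120 False
# 72.16.100.120 True
# 22.16.100.120 True
```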

Check elasticsearch-head again; the region can now be looked up from the IP address

Enabling Kibana

Download and install Kibana

[root@node4 ~]# ls kibana-5.5.1-x86_64.rpm

kibana-5.5.1-x86_64.rpm

[root@node4 ~]# rpm -ivh kibana-5.5.1-x86_64.rpm

Configure kibana.yml

[root@node4 ~]# vim /etc/kibana/kibana.yml

server.port: 5601    # listening port

server.host: "0.0.0.0"    # listening address

elasticsearch.url: "http://172.16.100.120:9200"    # URL of one node in the Elasticsearch cluster

Start the service

[root@node4 ~]# systemctl start kibana

[root@node4 ~]# ss -ntl    # port 5601 should now be listening
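ss -ntl only lists sockets listening on the local machine. To confirm that Kibana is reachable from another host (which also exercises server.host: "0.0.0.0" and any firewall in between), a TCP connect test is enough. A minimal Python sketch; port_open is a hypothetical helper:

```python
import socket

def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable or timed out
        return False

# Example, run from a host that can reach the Kibana server:
#   port_open("172.16.253.181", 5601)  should return True once Kibana is listening
```

The same helper works for the Tomcat check later on, with port 8080 on 172.16.253.145.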

Configure the Logstash data collection file

[root@node2 ~]# vim /etc/logstash/conf.d/geoip.conf

input {

redis {

host => "172.16.253.181"

port => "6379"

password => "danran"

db => "0"

data_type => "list"    # read from a Redis list

key => "filebeat"    # list key; must match the key set in filebeat.yml

}

}

filter {

grok {

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

remove_field => "message"

}

geoip {

source => "clientip"    # field holding the client IP address to look up

target => "geoip"

database => "/etc/logstash/GeoLite2-City.mmdb"    # GeoIP database file

}

}

output {

elasticsearch {

hosts => ["http://172.16.100.120:9200","http://172.16.100.121:9200","http://172.16.100.122:9200"]

index => "logstash-%{+YYYY.MM.dd}"

action => "index"

}

}

Test the syntax of geoip.conf

[root@node2 ~]# /usr/share/logstash/bin/logstash -t -f /etc/logstash/conf.d/geoip.conf

Start Logstash with this configuration file

[root@node2 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/geoip.conf

Check the data in elasticsearch-head

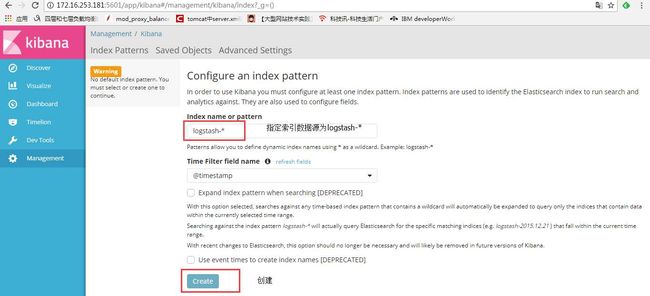

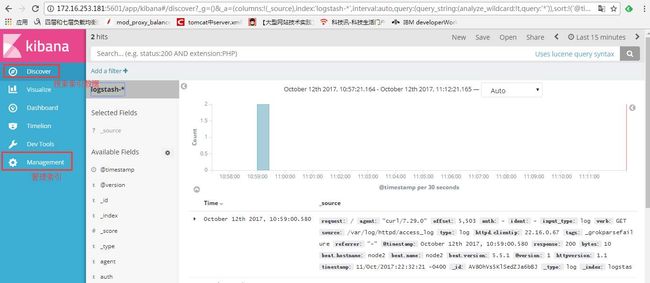

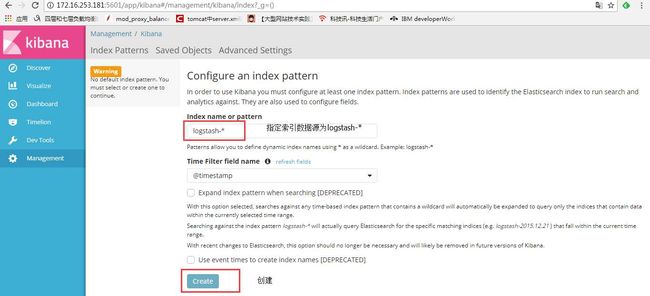

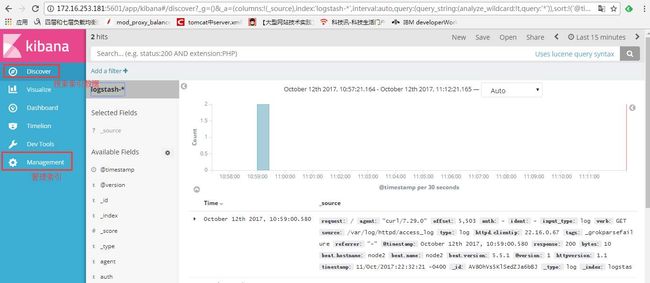

Open Kibana in a web browser

Browse to http://172.16.253.181:5601

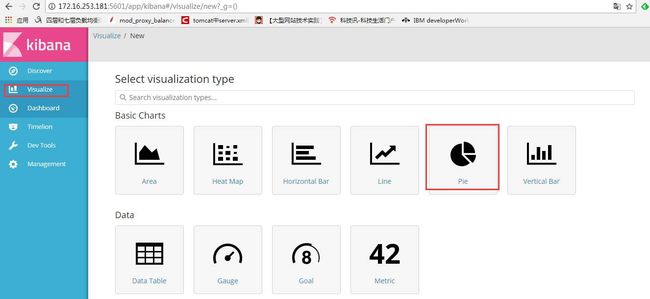

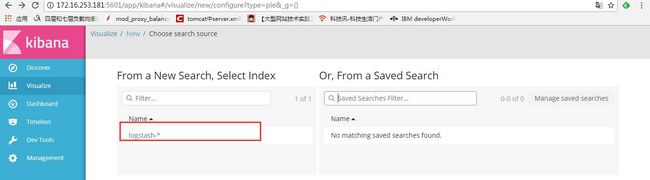

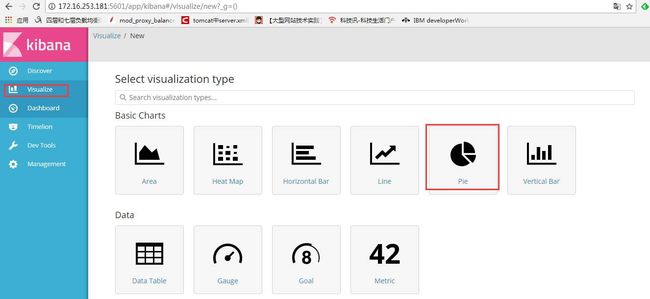

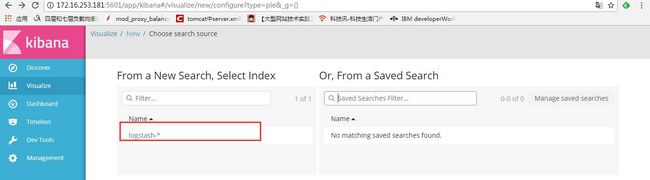

Charts showing the access statistics

Collecting and monitoring Tomcat node logs

Install Tomcat

[root@node5 ~]# yum -y install tomcat tomcat-webapps tomcat-admin-webapps tomcat-docs-webapp

[root@node5 ~]# systemctl start tomcat

[root@node5 ~]# ss -ntl    # port 8080 is now listening

Check the log file path

[root@node5 ~]# ls /var/log/tomcat/localhost_access_log.2017-10-12.txt

/var/log/tomcat/localhost_access_log.2017-10-12.txt

Installing Filebeat on the Tomcat node

Download the Filebeat package

https://www.elastic.co/downloads/beats/filebeat

[root@node5 ~]# ls filebeat-5.5.1-x86_64.rpm

filebeat-5.5.1-x86_64.rpm

Install Filebeat

[root@node5 ~]# yum -y install filebeat-5.5.1-x86_64.rpm

[root@node5 ~]# rpm -ql filebeat

Configure filebeat.yml

[root@node5 ~]# vim /etc/filebeat/filebeat.yml

filebeat.prospectors:

- input_type: log

  paths:

    - /var/log/tomcat/*.txt    # log files to collect

#--------------------------- Redis output ---------------------

output.redis:

  hosts: ["172.16.253.181"]

  port: 6379

  password: "danran"

  key: "fb-tomcat"

  db: 0

  timeout: 5

Start the Filebeat service

[root@node5 ~]# systemctl start filebeat

Configure the data collection file on the Logstash server

[root@node2 ~]# vim /etc/logstash/conf.d/tomcat.conf

input {

redis {

host => "172.16.253.181"

port => "6379"

password => "danran"

db => "0"

data_type => "list"    # read from a Redis list

key => "fb-tomcat"    # list key; must match the key set in the Tomcat node's filebeat.yml

}

}

filter {

grok {

match => {

"message" => "%{COMMONAPACHELOG}"

}

remove_field => "message"

}

geoip {

source => "clientip"    # field holding the client IP address to look up

target => "geoip"

database => "/etc/logstash/GeoLite2-City.mmdb"    # GeoIP database file

}

}

output {

elasticsearch {

hosts => ["http://172.16.100.120:9200","http://172.16.100.121:9200","http://172.16.100.122:9200"]

index => "logstash-tomcat-%{+YYYY.MM.dd}"

action => "index"

}

}
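COMMONAPACHELOG is used here instead of COMBINEDAPACHELOG because, assuming the stock AccessLogValve pattern %h %l %u %t "%r" %s %b in Tomcat's server.xml, the Tomcat access log is in common log format: it has no referrer or user-agent fields, so the combined pattern would not match. A simplified Python illustration; the regexes only approximate the grok patterns, and the log line is hypothetical:

```python
import re

# Simplified stand-in for grok's COMMONAPACHELOG (no referrer/agent at the end)
COMMON = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-)'
)
# Combined format = common format + quoted referrer and user agent
COMBINED = re.compile(COMMON.pattern + r' (?P<referrer>"[^"]*") (?P<agent>"[^"]*")')

# Hypothetical Tomcat access log line in the %h %l %u %t "%r" %s %b format
line = '172.16.253.1 - - [12/Oct/2017:10:15:02 +0800] "GET /manager/html HTTP/1.1" 401 2473'

print(bool(COMMON.match(line)), bool(COMBINED.match(line)))  # True False
print(COMMON.match(line).group("response"))                  # 401
```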

Test the syntax of tomcat.conf

[root@node2 ~]# /usr/share/logstash/bin/logstash -t -f /etc/logstash/conf.d/tomcat.conf

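Restart Logstash. When run as a service, Logstash concatenates every file under /etc/logstash/conf.d (path.config) into a single pipeline, so events from every input pass through every filter and output unless conditionals separate them.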

[root@node2 ~]# systemctl restart logstash

In elasticsearch-head, check whether a logstash-tomcat-* index has been created

Configure Kibana to visualize the index data

Browse to http://172.16.253.181:5601